🧠 Grigori Perelman, the Poincaré Conjecture, and What Academic Integrity Demands

In 2002 and 2003, Russian mathematician Grigori Perelman posted a proof of the Poincaré Conjecture, a century-old problem and one of the Clay Millennium Prize challenges.

His work was brilliant, concise, and transformative.

And yet—he rejected both the Fields Medal (2006) and the $1 million Millennium Prize (2010).

While often portrayed as an eccentric or loner, Perelman's decision was grounded not in personal oddity but in a principled rejection of how credit and recognition were being handled in the mathematics community.

📍 What Actually Happened

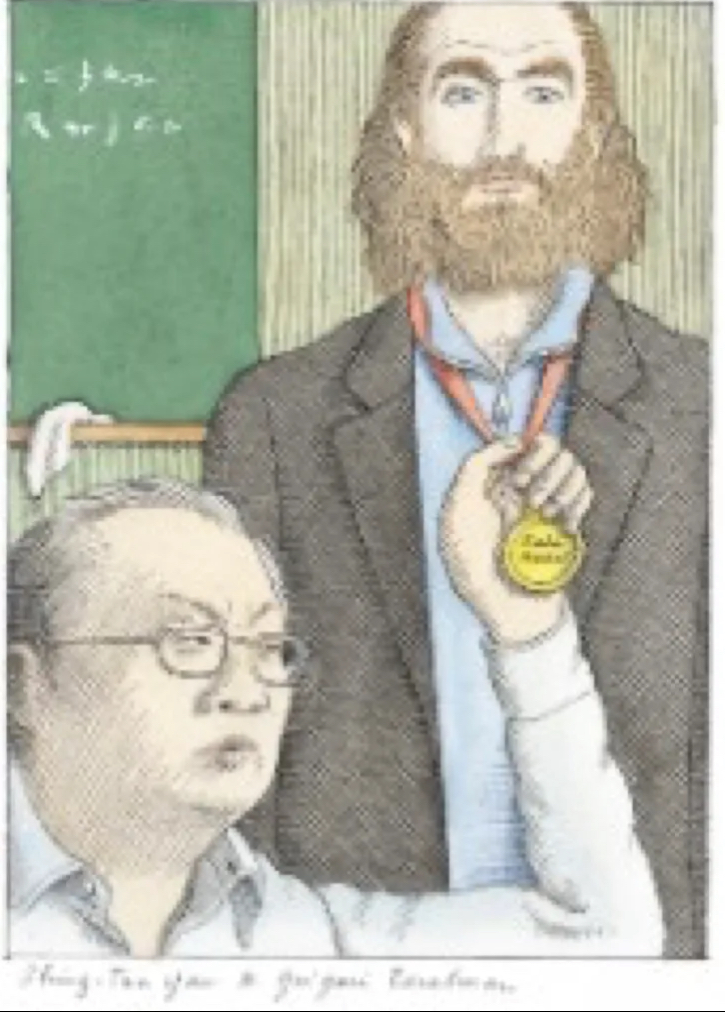

After Perelman released his proofs on arXiv, a formal verification process began. Notably, the Chinese 🇨🇳 mathematicians Huai-Dong Cao and Xi-Ping Zhu, both closely affiliated with Shing-Tung Yau, were among those whose work became central to that effort.

Cao and Zhu published a paper in 2006 presenting a complete proof.

However, many in the community noted that their work closely followed both Perelman's original ideas and the independent verification notes by Bruce Kleiner and John Lott, who had been systematically clarifying Perelman's dense arguments and sharing them publicly.

Following criticism, Cao and Zhu issued an erratum acknowledging that an argument in their paper closely matched one in Kleiner and Lott's earlier notes, and apologizing for the missing attribution.

This sequence of events raised concerns about academic judgment, particularly regarding attribution and timing.

Moreover, some observers noted that the group tasked with formally verifying Perelman's work included only those with close institutional or national ties—raising concerns about objectivity in how credit was being assigned.

📰 The Cultural Moment

This controversy was captured in the 2006 New Yorker article, “Manifold Destiny,” which portrayed the tensions over recognition using vivid metaphor—most memorably, an illustration of one mathematician reaching for a medal around Perelman's neck.

Though the article drew backlash from those portrayed, its factual claims were not retracted. The broader conversation it triggered—about fairness, transparency, and gatekeeping in elite research—remains deeply relevant.

🔎 Lessons That Still Matter

Perelman's withdrawal was not an act of vanity—it was a principled stand against what he viewed as a flawed system of academic reward.

Even without overt misconduct, structural biases in how panels are composed and how contributions are acknowledged can distort the truth.

Integrity in scholarship requires more than technical brilliance: it demands humility, fairness, and open acknowledgment of others’ work.

**Further Reading:**

* Perelman's papers on arXiv (2002–2003)

* Kleiner & Lott’s verification: [arXiv:math/0605667](arxiv.org/abs/math/06056…)

* *The New Yorker* article: ["Manifold Destiny"](newyorker.com/magazine/2006/…)

* [Wikipedia summary](en.wikipedia.org/wiki/Manifold_…)

This is not just a story about one mathematician—it’s a case study in how we assign credit, verify contributions, and maintain trust in academic institutions.

Let’s keep striving for a research culture where fairness is as important as brilliance.

#math
