So, exactly how big will the intelligence explosion be?

…Ten years of AI progress in a year? In a month?

Our new paper tackles this question head-on.

I've researched AI takeoff speeds for many years. This is my best stab at an answer.🧵

An intelligence explosion is where AI makes smarter AI, which quickly makes even smarter AI, etc.

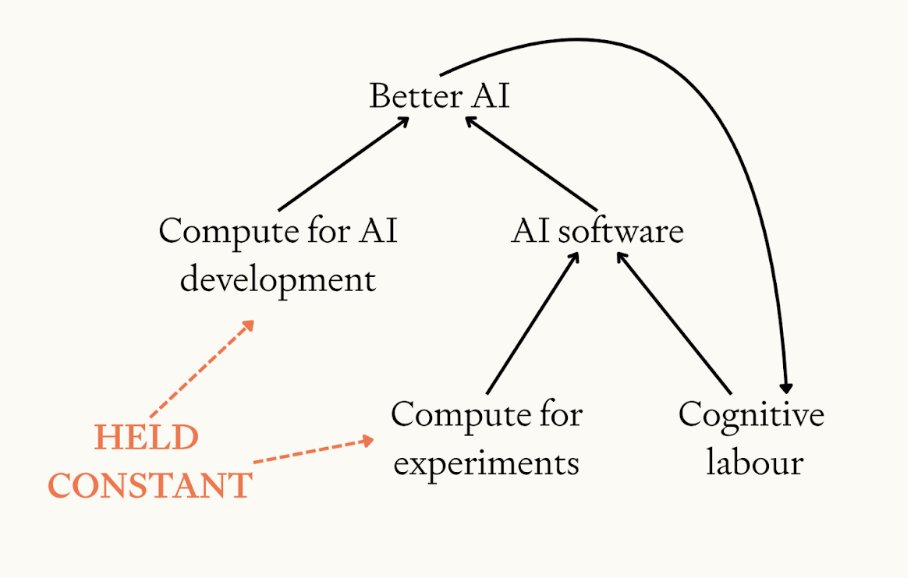

Our scenario: AI fully replaces humans at improving AI “software” (algorithms and data).

(We conservatively assume that the amount of compute remains constant.)

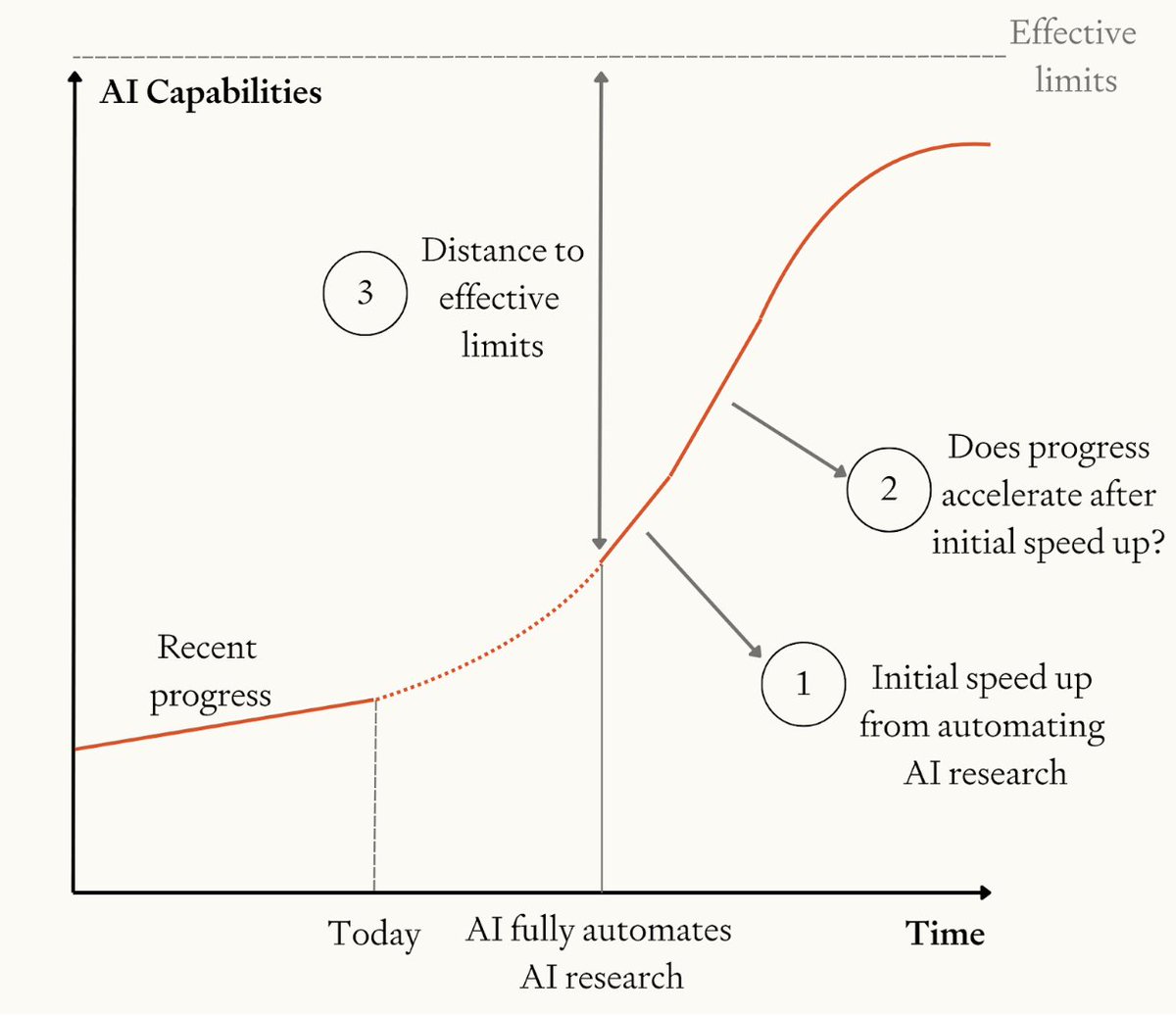

Our model has three main parameters:

1) Initial speed-up in software progress from AI automating AI research

2) After the initial speed-up, does progress accelerate or decelerate?

3) How far until AI software reaches fundamental limits on compute efficiency?

One interesting insight:

Compressing >10 years of total AI progress into <1 year via software improvements is tough.

It would require >10 orders of magnitude (OOMs) of efficiency improvements, because effective compute for developing AI has recently risen by about 10x/year.

That's a 10 billion-fold increase!
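The arithmetic behind that figure can be checked in a few lines. This is a minimal sketch that assumes only the ~10x/year growth rate quoted above; the function names are illustrative and do not come from the paper.

```python
import math

# Figure quoted in the thread: effective compute has recently grown ~10x per year.
GROWTH_PER_YEAR = 10.0


def ooms_for_years(years, growth_per_year=GROWTH_PER_YEAR):
    """Orders of magnitude (OOMs) equal to `years` of progress at the recent pace."""
    return years * math.log10(growth_per_year)


def fold_increase(years, growth_per_year=GROWTH_PER_YEAR):
    """Total multiplicative gain after `years` of progress at the recent pace."""
    return growth_per_year ** years


if __name__ == "__main__":
    years = 10
    print(f"{years} years of progress = {ooms_for_years(years):.0f} OOMs "
          f"= {fold_increase(years):.0e}-fold increase")
```

Ten years at 10x/year is 10 OOMs, i.e. a factor of 10^10 (10 billion), and all of it would have to come from software if compute is held constant.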

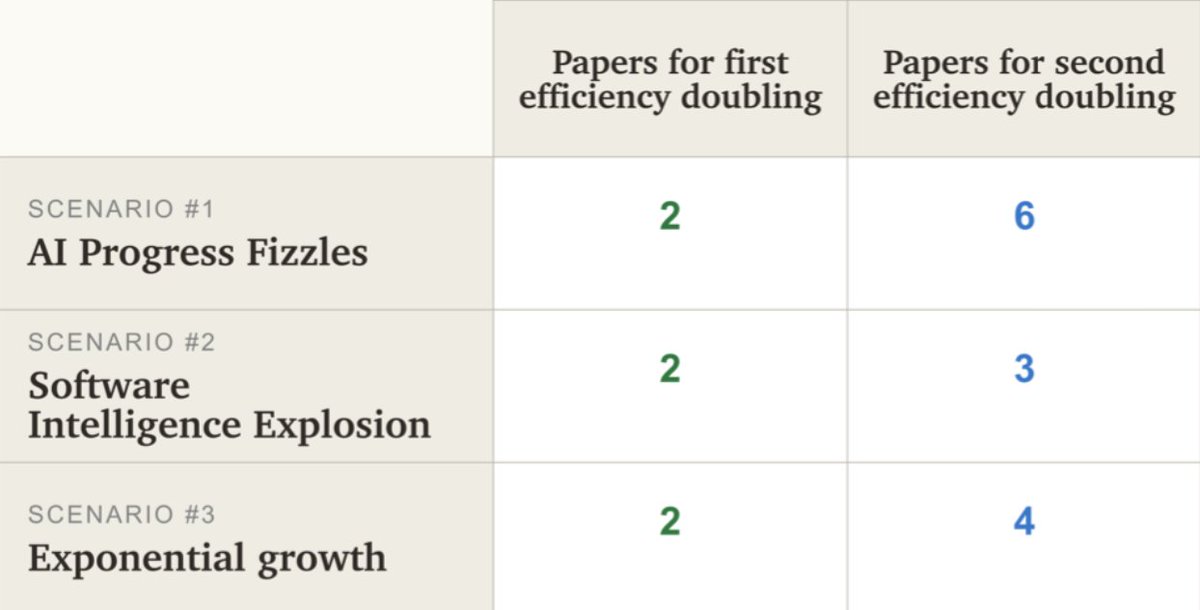

We put probability distributions over the params and run a Monte Carlo simulation.

The model spits out the probability of compressing multiple years of total AI progress into a few months from software improvements alone.
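To make the Monte Carlo step concrete, here is a toy sketch. It is not the paper's model: the dynamics (pace multiplied by a fixed factor per year-equivalent of progress), the distributions, and every numeric range below are placeholder assumptions chosen only to show the mechanics. Limits are expressed in year-equivalents of progress, using the thread's rough 1 OOM per year conversion.

```python
import math
import random


def progress_in_window(initial_speedup, accel, limit_years, window_years, dt=0.001):
    """Years of progress (at the recent pace) achieved within `window_years`.

    Toy dynamics, assumed for illustration only: the pace starts at
    `initial_speedup` and is multiplied by `accel` for each year-equivalent
    of progress already made (accel > 1 accelerates, accel < 1 decelerates).
    Progress stops once it reaches `limit_years`.
    """
    progress = 0.0
    t = 0.0
    while t < window_years and progress < limit_years:
        pace = initial_speedup * accel ** progress
        progress = min(limit_years, progress + pace * dt)
        t += dt
    return progress


def log_uniform(rng, low, high):
    """Sample uniformly in log space between `low` and `high`."""
    return math.exp(rng.uniform(math.log(low), math.log(high)))


def estimate_probability(target_years, window_years, n_samples=2000, seed=0):
    """Fraction of sampled worlds with >= `target_years` of progress in the window."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(n_samples):
        # Placeholder priors (hypothetical, NOT the paper's distributions).
        initial_speedup = log_uniform(rng, 2.0, 32.0)  # parameter 1
        accel = log_uniform(rng, 0.7, 1.3)             # parameter 2
        limit_years = rng.uniform(6.0, 16.0)           # parameter 3
        achieved = progress_in_window(initial_speedup, accel, limit_years, window_years)
        if achieved >= target_years:
            hits += 1
    return hits / n_samples


if __name__ == "__main__":
    p = estimate_probability(target_years=3.0, window_years=4 / 12)
    print(f"Toy P(>=3 years of progress in 4 months) = {p:.2f}")
```

The output depends entirely on the placeholder priors, so the number it prints says nothing about the paper's results. The point is only the structure: sample the three parameters, run the dynamics, and count how often the compression threshold is met.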

I roughly estimate that our model assigns a ~20% probability to takeoff being faster than in AI-2027.

@DKokotajlo @eli_lifland

https://x.com/DKokotajlo/status/1907826614186209524

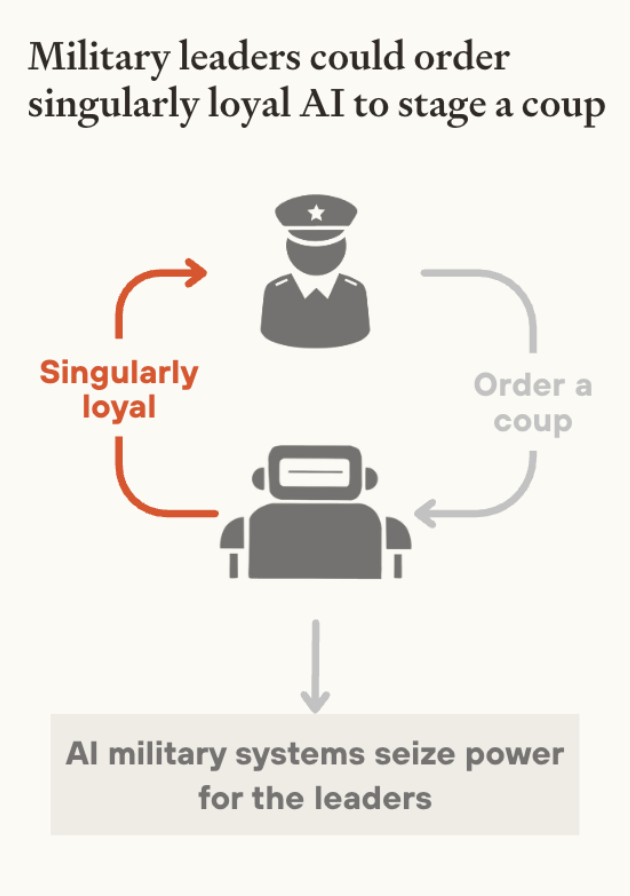

How scary would this be?

6 years of progress might take us from 30,000 expert-level AIs thinking at 30x human speed to 30 million superintelligent AIs thinking at 120x human speed (h/t @Ryan).

If that happens in <1 year, that's scarily fast, right when we should be proceeding cautiously.

It goes without saying: the model is very basic and has many big limitations.

E.g. we assume AI progress will follow smooth trends.

But if there’s a big paradigm shift, AI progress could be much more dramatic. Alternatively, the current paradigm could fizzle out.

See the full paper for more:

forethought.org/research/how-q…

This builds on previous work by myself and @daniel_271828

https://x.com/TomDavidsonX/status/1904956748995182704
