Senior Research Fellow @forethought_org

Understanding the intelligence explosion and how to prepare

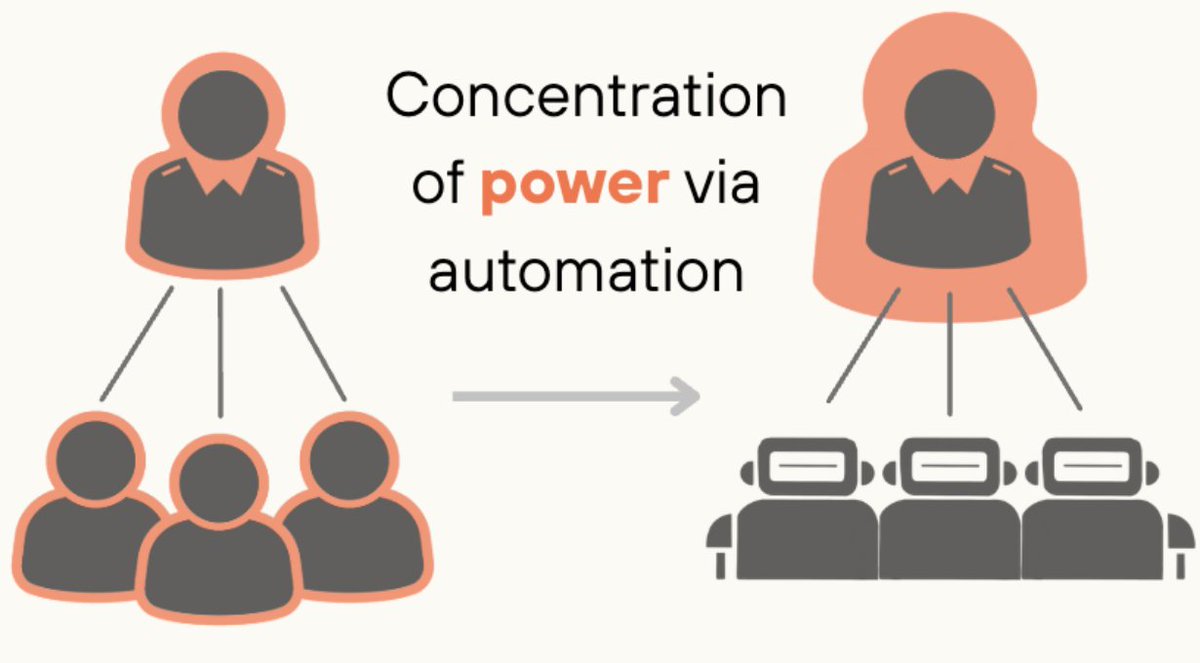

Three versions of the attack:

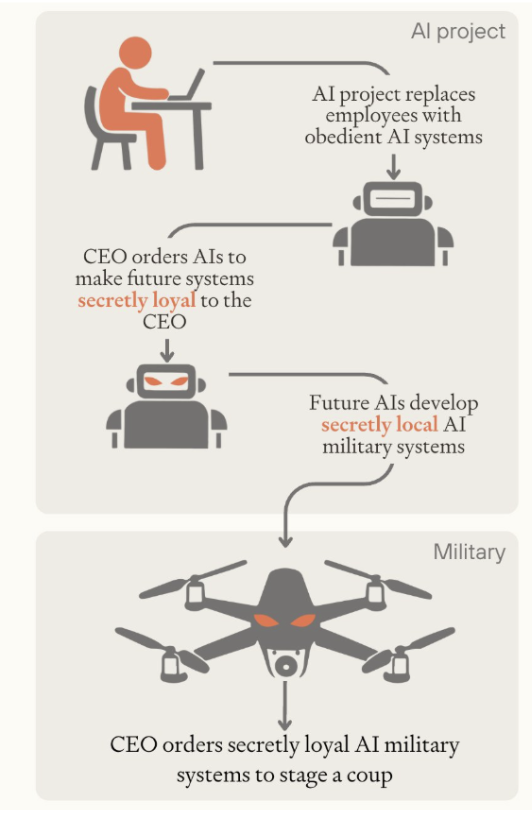

An intelligence explosion is where AI makes smarter AI, which quickly makes even smarter AI, etc.

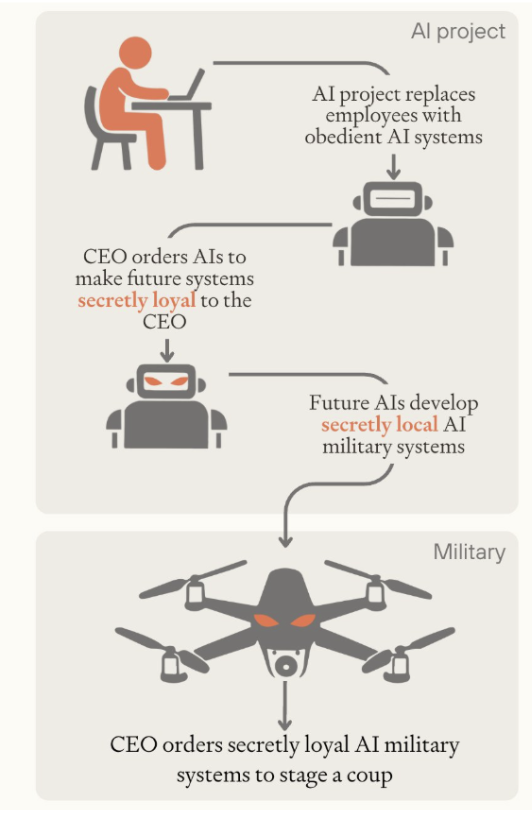

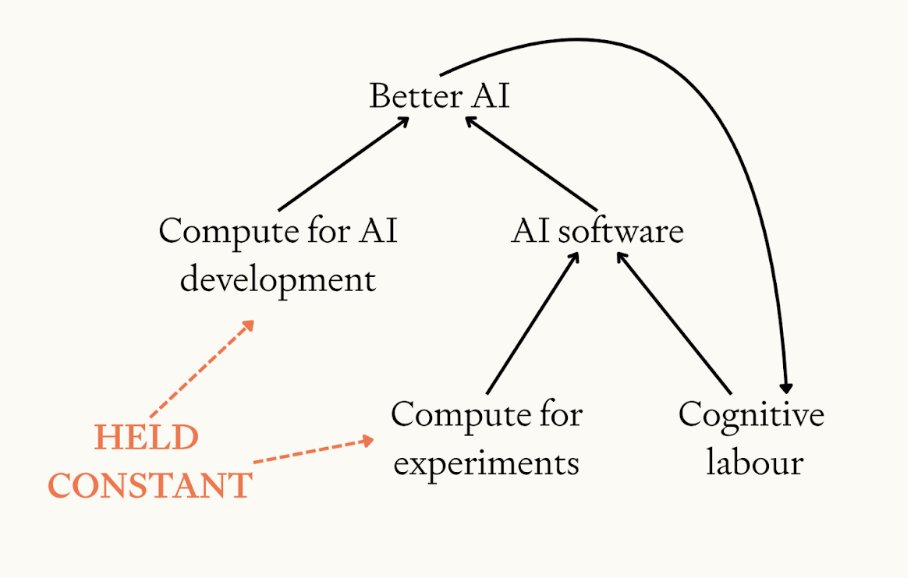

Coup mechanism #1: Singularly loyal AI

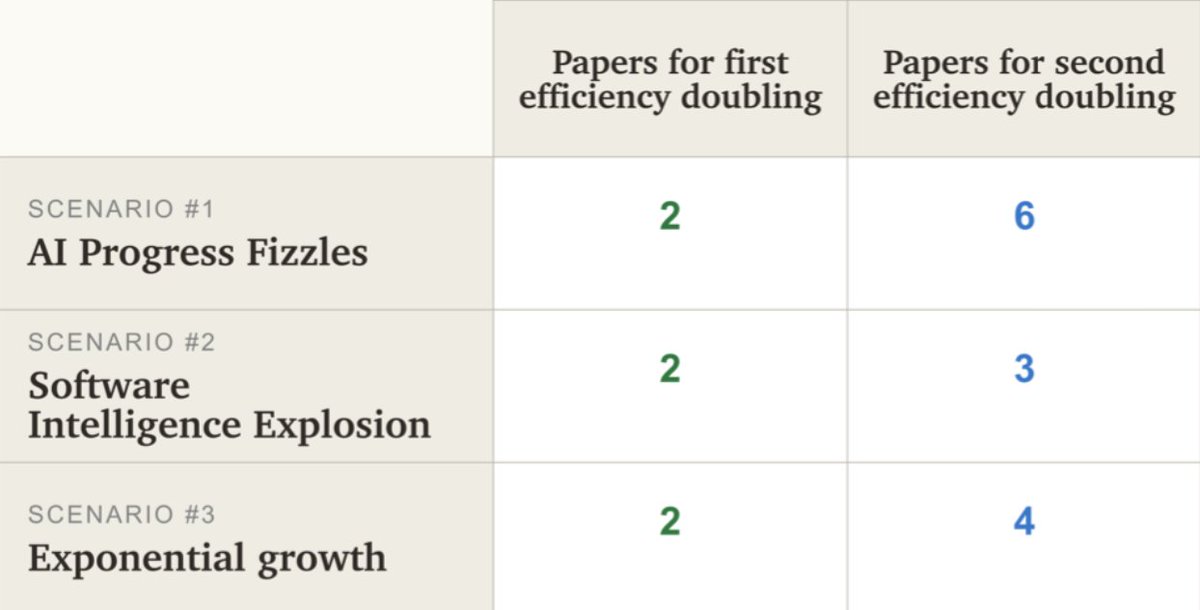

https://twitter.com/daniel_271828/status/1904937792620421209

A software intelligence explosion is where AI improves in a runaway feedback loop: AI makes smarter AI, which makes even-smarter AI, etc.