A few thoughts on what an exponentially increasing time horizon means for AI R&D automation:

I think a 1-year 50%-time-horizon is very likely not enough to automate AI research, but I also think that AI research is 50% likely to be automated by the end of 2028.

https://twitter.com/nikolaj2030/status/1954248757513720297

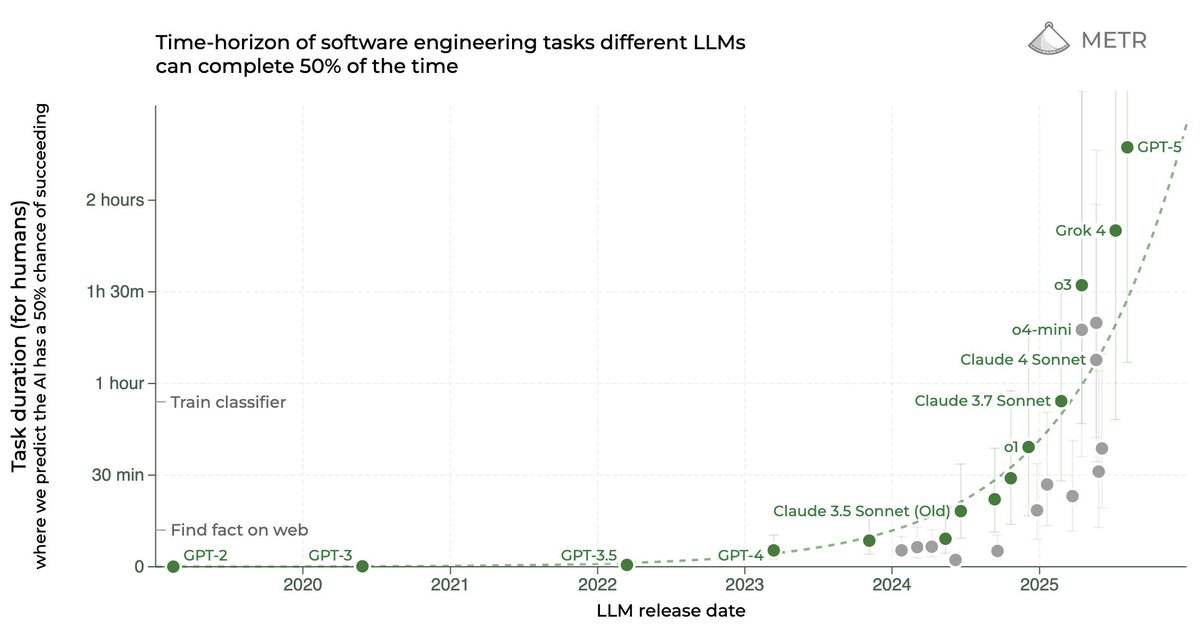

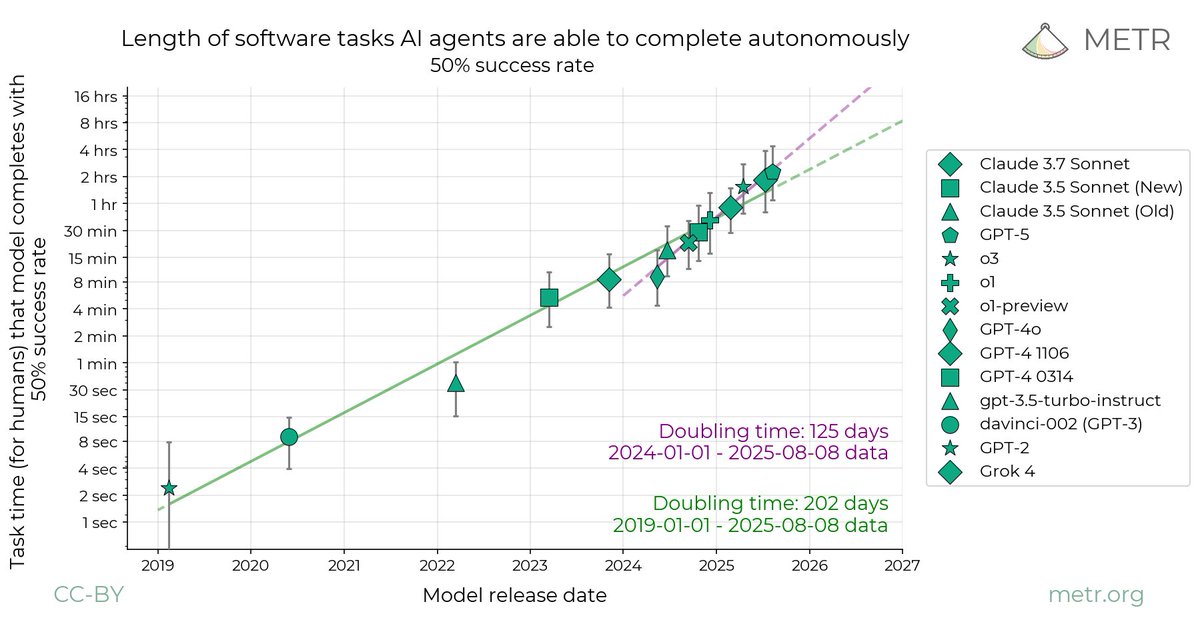

I think AI research might be automated by EOY 2028 because I expect the time horizon at that point to be much higher than the 1 year you get from naively extrapolating current rates: the time horizon will increase faster and faster over time. A few reasons:

1. AI speeding up AI research probably starts making a dent in the time horizon doubling time (shortening it by at least 10%) by the time we hit 100-hour time horizons. It's hard to reason about the specifics here, but I find it hard to imagine such AIs not being super useful.

2. I place some probability on the "inherently superexponential time horizons" hypothesis. To me, 1-month-coherence, 1-year-coherence, and 10-year-coherence (of the kind performed by humans) seem like extremely similar skills which will thus be learned in quick succession.

3. It's likely that the discovery of reasoning models contributed to decreasing the doubling time from 7 months to 4 months. It's plausible we get another reasoning-shaped breakthrough. My guess is that the base rate for such breakthroughs is around 10% per year.
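To get a feel for why a shrinking doubling time matters so much, here's a toy calculation. It's a minimal sketch under assumptions that are mine, not from the thread: a hypothetical starting horizon of 2 hours, a 1-year horizon taken as roughly 2,000 work hours, a 4-month doubling time, and a simple superexponential model in the spirit of points 1 and 2 where each doubling takes a fixed fraction (here 10%) less time than the one before. It also turns the ~10%/year breakthrough base rate from point 3 into a chance of at least one breakthrough over a few years. The function names are hypothetical.

```python
import math


def months_to_reach(start_hours: float, target_hours: float,
                    doubling_months: float, shrink: float = 0.0) -> float:
    """Months for the time horizon to grow from start_hours to target_hours.

    shrink = 0.0 -> plain exponential (constant doubling time).
    shrink = 0.1 -> each doubling takes 10% less time than the previous one
    (an illustrative superexponential assumption, not a fitted model).
    """
    doublings = math.log2(target_hours / start_hours)
    whole = math.floor(doublings)
    total = 0.0
    d = doubling_months
    for _ in range(whole):
        total += d
        d *= 1.0 - shrink  # next doubling is a bit faster
    # Partial final doubling, assuming exponential growth within a doubling.
    total += (doublings - whole) * d
    return total


def p_at_least_one(rate_per_year: float, years: float) -> float:
    """Chance of at least one breakthrough, assuming an independent yearly rate."""
    return 1.0 - (1.0 - rate_per_year) ** years


if __name__ == "__main__":
    # Hypothetical inputs: 2-hour horizon now, 1 work-year ~ 2,000 hours.
    print("constant doubling:", months_to_reach(2, 2000, 4.0))
    print("10% shrink per doubling:", months_to_reach(2, 2000, 4.0, shrink=0.1))
    print("P(>=1 breakthrough in 3 yrs):", p_at_least_one(0.1, 3))
```

The key property of the shrinking-doubling-time model is that the doubling times form a geometric series, so the total time to reach *any* horizon is bounded by doubling_months / shrink (4 / 0.1 = 40 months here). Under a constant doubling time, by contrast, every extra 10x in horizon costs the same ~13 months.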

So my best guess for the 50% and 80% time horizons at EOY 2028 is more like >10 years and >4 years, respectively. But past ~2027 I care more about tracking how much AI R&D is being automated than about the time horizon itself, since the time horizon becomes a less meaningful number.

But these are all my median expectations; things could go even faster (!) or slower. Part of me thinks I should defer more to the naive extrapolations and move my median for AGI to 2030 or 2031.

I'm pretty uncertain. We'll have more data as time goes on.
