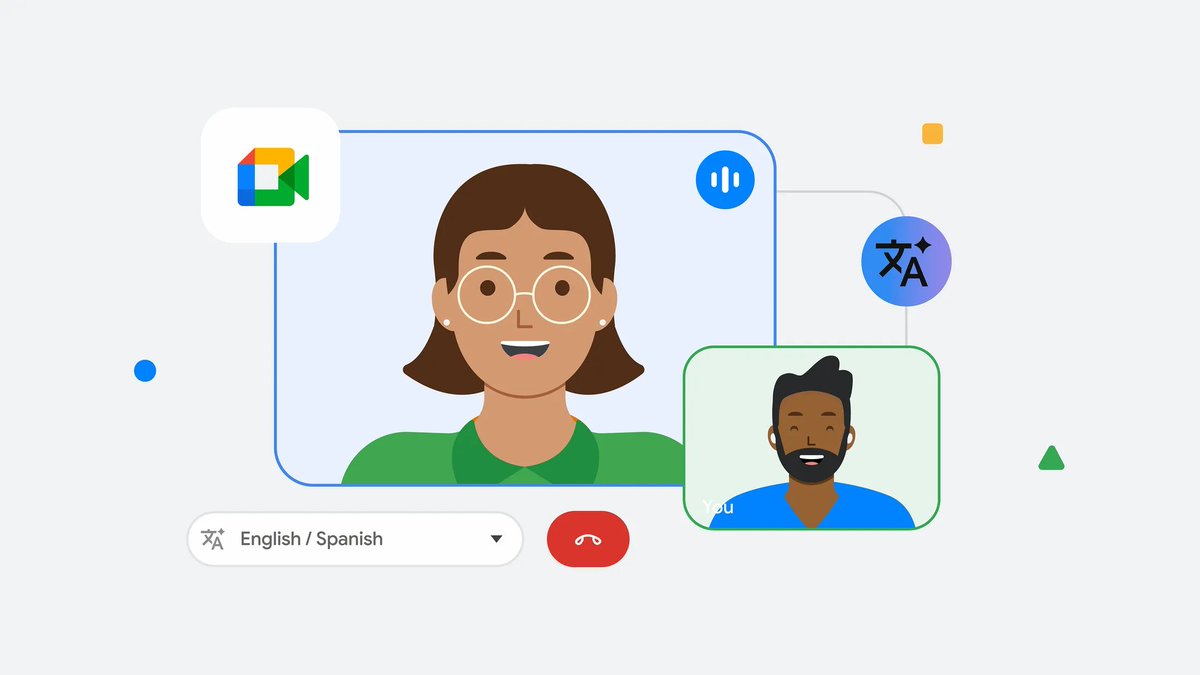

Google just solved the language barrier problem that's plagued video calls forever.

Their new Meet translation tech went from "maybe in 5 years" to shipping in 24 months.

Here's how they cracked it and why it changes everything.

The old translation process was a joke. Your voice → transcribed to text → translated → converted back to robotic speech.

10-20 seconds of dead air while everyone stared at their screens. By the time the translation played, the conversation had moved on. Natural flow? Dead.

Google's breakthrough was eliminating that chain entirely.

They built models that do "one-shot" translation. You speak in English, and 2-3 seconds later your actual voice comes out speaking fluent Italian. Not some generic robot voice. YOUR voice, with your tone and inflection.

The team discovered 2-3 seconds was the sweet spot through brutal testing. Faster than that? People couldn't process what they heard.

Slower? Conversations felt stilted and weird. They had to nail that human rhythm where translation feels like natural conversation flow.

Here's where it gets interesting. Languages like Spanish, Italian, and Portuguese were easy wins because of structural similarities. German? Nightmare fuel.

Different grammar, sentence structure, idioms that make zero sense when translated literally.

They're still working on capturing sarcasm and irony.

The real validation wasn't in the tech specs. It came from user stories that hit different. Immigrants who moved to the US with parents who never learned English.

Grandparents meeting grandkids for the first time in actual conversation, not broken gestures and Google Translate screenshots.

This is live right now in Italian, Portuguese, German, and French on Google Meet. More languages rolling out soon. We just watched the moment when "lost in translation" became a relic of the past.

The language barrier just got its first real crack.

I share AI updates here, but I build the tools at getoutbox.ai, the fastest way to create your own AI voice agent without code.

Join our Skool community to learn, share, and get early access to AI voice strategies →

skool.com/outbox-ai/about

I hope you've found this thread helpful.

Follow me @connordavis_ai for more.

Like/Repost the quote below if you can:

https://twitter.com/1955077938879299584/status/1966817110556053956