AI Economic Meltdown: The Coming Expert Squeeze /🧵

There is a frenzy today that is seemingly unstoppable -- the process of replacing human workers with AI. On another front, the idea that AI has now reached a level beyond the smartest human beings is promoted by CEOs like Sam Altman, Dario Amodei and Elon Musk. In cases where humans aren't replaced, they're expected to augment themselves with AI models to increase their productivity.

On the opposing side, there are people who speak of the technology as impractical, overhyped or downright dangerous. This thread is going to take a different angle from these people: I will demonstrate not only that this replacement will become a self-fulfilling prophecy, but also why we are locked into this process (which has become an inescapable Ponzi scheme) and why it will culminate in the destruction of western economies.

In this short thread I'm going to show you why, starting with the economic feedback loops, then the limitations of the technology, and finally human psychological dependency and the incentive process. To bring it all together, I will explain why the entire western economy is now dependent on this hype, and why the alternative is also a collapse, just of a different kind.

I will start with the main driver of this trend: the economy.

The economic feedback loop

Tech companies and other firms all over the world are in a frenzy to fire as many employees as possible in order to minimise their payroll, keeping investors happy and increasing their stock price even as the real economy around them collapses.

Excluding algorithmic trading, the economy ultimately involves exchange between humans and groupings of humans (i.e. human-run entities). The fewer people you have working, the fewer people you have buying things, and the less money ultimately flows into large corporations, unless the public is forced to subsidise them via government grants.

It also works the other way around: the lower the demand for people with certain skills, the less money groupings of people will offer, and the fewer people will develop those skills. The incentive structure is thus a feedback loop: the more successful the companies, the more successful the workers, and the more both grow.

The promise of AI is to cut this loop open, allowing companies to theoretically lower their payroll to near zero, moving that line item to either data centre costs or the cost of AI models hosted by other companies. This has flow-on effects too: the fewer individual contributors you have, the fewer managers, HR representatives and middle managers you need. Companies are also incentivised to disintermediate and flatten their hierarchies.

With all the hype, this seems like a risk-free gamble until you break apart the assumptions and consequences. There are two main assumptions:

1. The cost of using AI models will remain cheap.

2. AI will be able to continuously fulfil the duties of humans in all domains that they replace or augment.

I will disprove assumption (1) later in this section and disprove assumption (2) in the next section.

The up-front cost is seemingly sending many people into unemployment, and driving down the consumer economy. Of course, it is never that simple and rarely linear or even reversible. In taking this gamble, these companies will lose centuries of inherited experience both at the individual contributor and the management level.

This has happened before. Entering the late 1970s, the United States had a tight grip on world exports and industries, with very few exceptions. All of this was offshored over the next few decades until the US was deindustrialised. Today, the US struggles to produce tanks and artillery shells, as the last few workers who still know how retire and the economic incentive to replace them disappears.

Software engineers, spreadsheet jockeys and other service-economy workers will soon face the same calculus that industrial workers faced then. They will quickly move on, or move out of the United States and other western nations. Ironically, these are the very skills needed to keep data centres running smoothly, to keep AI models fed with data (after all, it is information technology that produces these data feeds and data-creation events upstream) and even to develop the AI models themselves. That said, the full effect of this will not be felt for some time.

The worst part is that even if decision makers are fully aware of the gamble, they cannot change the trajectory, because it has become a multi-level Ponzi scheme. The software and hardware companies currently leading the economy, like Microsoft and NVIDIA, are dependent on the hype surrounding AI. If that hype is undermined even a little, western economies fall into turmoil, as we saw in late January when the open source model DeepSeek R1 was released.

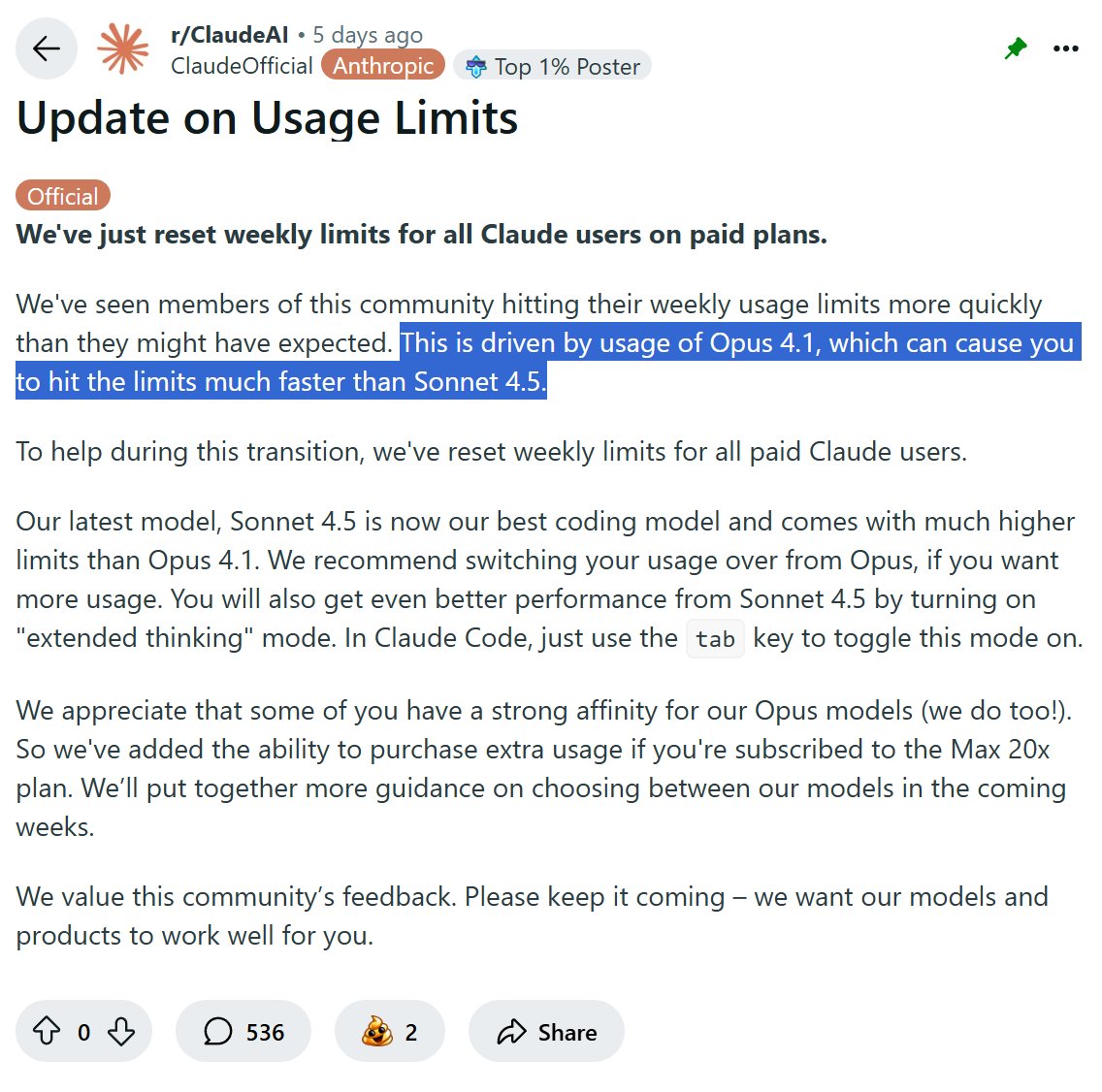

For the moment, AI is subsidised to a degree most people are unaware of. OpenAI is backed by Microsoft and operates its models at a loss. Anthropic is likewise backed by Amazon and operates its expensive (and somewhat slower) models at a loss. It only gets worse for other players like Perplexity, which has to spend 164% of its revenue on costs.

The gamble is that, as companies become dependent on this technology and humans are replaced, those companies will be able to afford the real cost. It's like a "trial edition" right now. You can confirm this yourself: sign up for Anthropic's Claude, for example on the Max plan at US$200/month, and watch how easily you can burn through US$40 of their money in 5 hours. That should tell you something is seriously wrong.
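The subscription example can be checked with simple arithmetic. The sketch below uses only the figures quoted above: US$200/month, US$40 per 5-hour window, and costs at 164% of revenue. The "one heavy window every day" usage pattern is a hypothetical assumption, not a measured figure.

```python
# Back-of-envelope check of the figures quoted above.
# Only the dollar amounts and the 164% figure come from the text;
# "one heavy window per day" is a hypothetical usage pattern.

SUBSCRIPTION_USD_PER_MONTH = 200.0  # Max plan price quoted above
COMPUTE_USD_PER_WINDOW = 40.0       # compute consumed in one heavy 5-hour window
WINDOW_HOURS = 5


def breakeven_windows(subscription: float, cost_per_window: float) -> float:
    """Heavy usage windows per month before the subscription stops covering compute."""
    return subscription / cost_per_window


def loss_per_revenue_dollar(cost_pct_of_revenue: float) -> float:
    """Loss on each dollar of revenue when costs are the given % of revenue."""
    return cost_pct_of_revenue / 100.0 - 1.0


windows = breakeven_windows(SUBSCRIPTION_USD_PER_MONTH, COMPUTE_USD_PER_WINDOW)
print(f"Break-even after {windows:.0f} windows ({windows * WINDOW_HOURS:.0f} hours) a month")
# -> Break-even after 5 windows (25 hours) a month

daily_user_cost = 30 * COMPUTE_USD_PER_WINDOW  # hypothetical: one heavy window every day
print(f"Daily heavy user: ${daily_user_cost:.0f} of compute for ${SUBSCRIPTION_USD_PER_MONTH:.0f}")
# -> Daily heavy user: $1200 of compute for $200

print(f"Loss per $1 of revenue at 164% cost: ${loss_per_revenue_dollar(164):.2f}")
# -> Loss per $1 of revenue at 164% cost: $0.64
```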

What's really happening is that governments and large corporations alike are speculating on the end game, gambling their bottom line and also a future without expertise.

Worse yet, to make meaningful gains, they've had to escalate the kind of hardware they use to host these AIs: terabytes of RAM, insane and exotic networking equipment, brand-new architectures, and hundreds of billions of dollars in one-time engineering costs to impedance-match the currently popular mathematical models, which may change dramatically in the near future.

AI is not getting cheaper. At the upper end, where businesses are concerned, it's actually getting more expensive. If you're a gamer, you already know this: the prices of the RTX 3090, RTX 4090 and RTX 5090 have gone through the roof over time. Moore's law is very much dead, and inflation has caught up with the otherwise deflationary electronics economy.

But... maybe, despite all these trends, it will eventually work? What if they somehow make it cheap enough, and the AI exceeds human abilities even without more data? Is that even possible with today's technology? That takes us to the next section and assumption (2).

The limitations of "AI" technology

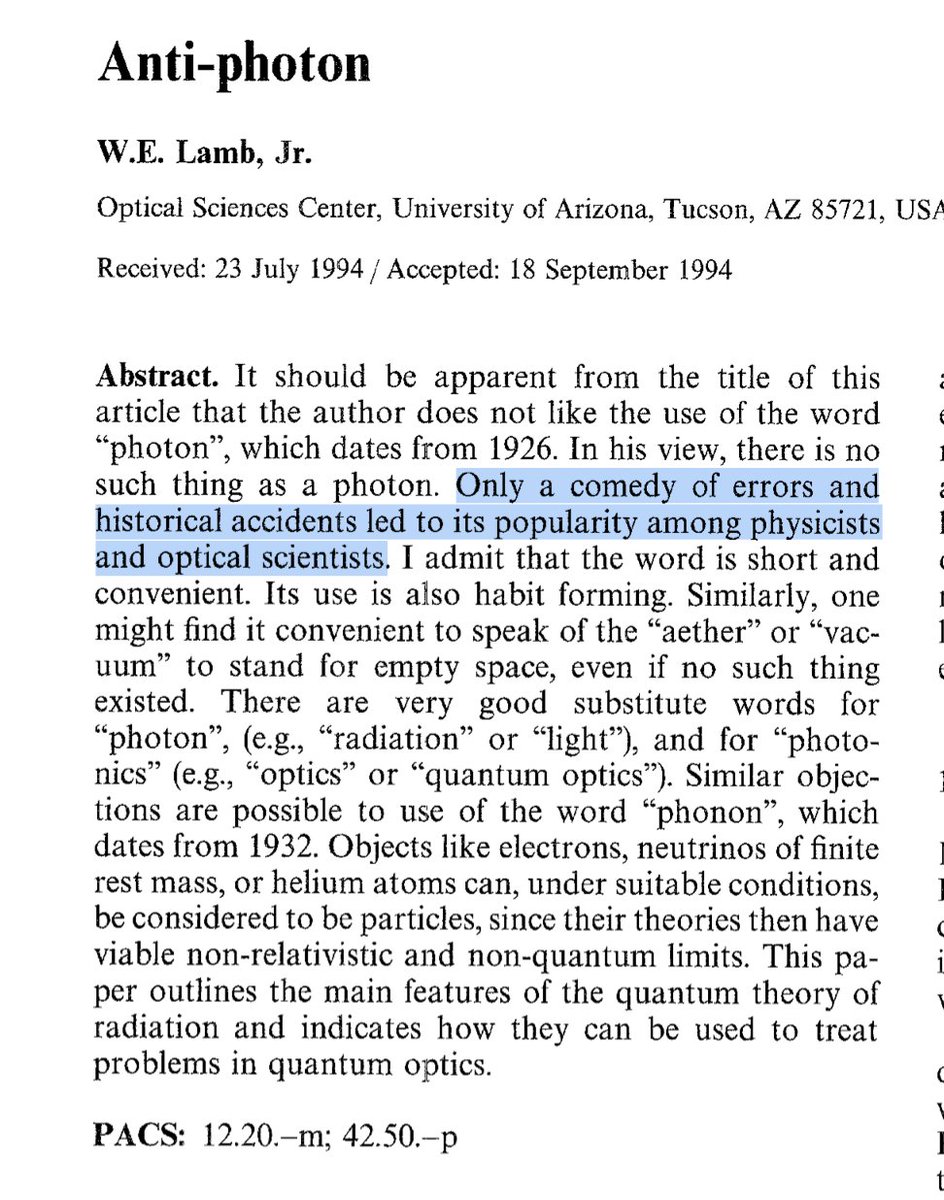

When people speak of AI today, they are normally talking about generative AI, or multimodal LLMs. These are actually a form of classification model trained with supervised learning (strictly, self-supervised during pre-training, with some hybrid techniques in later training stages). To simplify matters greatly, they are like your phone's autocomplete or predictive text on steroids. This is true even of "reasoning models", which rely on a neat hack called chain-of-thought that was reportedly first proposed on image boards rather than by AI researchers.

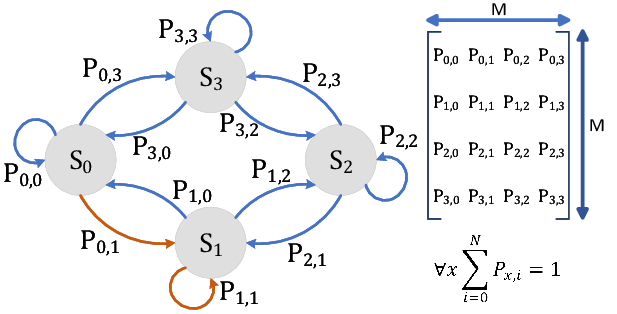

When autocorrect/autocomplete first came out, it probably seemed like magic to most of us!* Somehow the phone could predict several words ahead, or exactly what we were thinking. This is because the phones contained Markov chain models, which give you a probability distribution for the next state given the current state (ironically, such models are described as memoryless, but that goes beyond our current discussion).
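To make the autocomplete analogy concrete, here is a minimal sketch of a first-order (bigram) Markov chain for next-word suggestion, assuming simple whitespace tokenisation. The function names and the toy corpus are illustrative. Real phone keyboards use more elaborate models.

```python
from collections import Counter, defaultdict


def train_bigrams(text: str) -> dict:
    """Count how often each word follows each other word (a first-order Markov chain)."""
    words = text.lower().split()
    transitions = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        transitions[current][nxt] += 1
    return transitions


def next_word_distribution(transitions: dict, current: str) -> dict:
    """P(next | current): depends only on the current word (the Markov property)."""
    counts = transitions.get(current.lower(), Counter())
    total = sum(counts.values())
    return {w: c / total for w, c in counts.items()} if total else {}


def suggest(transitions: dict, current: str, k: int = 3) -> list:
    """The k most likely next words, like a keyboard's suggestion bar (ties broken alphabetically)."""
    dist = next_word_distribution(transitions, current)
    return sorted(dist, key=lambda w: (-dist[w], w))[:k]


model = train_bigrams("see you soon see you later see you tomorrow talk to you soon")
print(suggest(model, "you"))                 # -> ['soon', 'later', 'tomorrow']
print(next_word_distribution(model, "see"))  # -> {'you': 1.0}
```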

The language models essentially build out these Markov chains in two stages. First, they take all the data they can find in the world and estimate the probability of the next word given the words before it (the pre-training stage). Then they retrain these chains to solve specific problems, such as answering exam questions, sitting the bar exam and more (the fine-tuning stage).
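Continuing the document's Markov-chain simplification, the two stages can be mimicked with bigram counts. First, count a general corpus ("pre-training"). Then add heavily weighted counts from a specialised corpus ("fine-tuning").

This is an analogy only: real LLMs adjust neural-network weights by gradient descent rather than adding counts. The `weight` parameter and both corpora are hypothetical.

```python
from collections import Counter, defaultdict
from typing import Optional


def count_bigrams(text: str) -> dict:
    """'Pre-training': bigram counts over a general corpus."""
    words = text.lower().split()
    table = defaultdict(Counter)
    for a, b in zip(words, words[1:]):
        table[a][b] += 1
    return table


def fine_tune(base: dict, domain_text: str, weight: int) -> dict:
    """Return a copy of `base` with the domain corpus's bigrams added `weight` times.

    Hypothetical stand-in for fine-tuning: probability mass shifts toward the
    specialised data while the general counts are kept underneath.
    """
    tuned = defaultdict(Counter)
    for word, followers in base.items():
        tuned[word].update(followers)  # copy, so `base` is left untouched
    for word, followers in count_bigrams(domain_text).items():
        for nxt, c in followers.items():
            tuned[word][nxt] += c * weight
    return tuned


def most_likely_next(table: dict, word: str) -> Optional[str]:
    """Most frequent follower of `word`, or None if the word was never seen."""
    followers = table.get(word)
    return followers.most_common(1)[0][0] if followers else None


general = count_bigrams("the court of public opinion the court of appeal the court of public opinion")
legal = fine_tune(general, "court of appeal court of appeal", weight=3)
print(most_likely_next(general, "of"))  # -> public
print(most_likely_next(legal, "of"))    # -> appeal
```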

The model performs close to perfectly on the fine-tuned data, but it also "zero-shots" many queries (i.e. gets a question right the very first time it encounters it), because the answers can be interpolated from the data that was pre-trained into the underlying probabilities. In ML parlance this is called generalisation (the fine-tuning hasn't overfit), and the more data you have, the better the performance, i.e. the performance holds up beyond the training set rather than depending on it.

Most companies have now consumed all the data they can find, and things get worse... the internet is being flooded with AI-generated data, and feeding this into the pre-training stage actually spoils the generalisation, making performance worse. So human beings have to sort the data out, or superior models have to be trained to classify data as human-made or not.
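The "spoiled data" effect can be illustrated with a toy simulation. It assumes the "model" is nothing more than a table of word frequencies with a Zipf-shaped long tail. Each generation is trained only on a finite corpus generated by the previous generation. The vocabulary size, sample size and generation count are arbitrary choices for illustration.

```python
import random
from collections import Counter


def zipf_distribution(vocab_size: int, s: float = 1.0) -> dict:
    """Word-frequency 'model' with a long tail: P(rank r) proportional to 1 / r**s."""
    weights = {r: 1.0 / r ** s for r in range(1, vocab_size + 1)}
    total = sum(weights.values())
    return {r: w / total for r, w in weights.items()}


def next_generation(dist: dict, n_samples: int, rng: random.Random) -> dict:
    """Generate a finite corpus from the current model, then refit by counting it."""
    words = list(dist)
    corpus = rng.choices(words, weights=[dist[w] for w in words], k=n_samples)
    return {w: c / n_samples for w, c in Counter(corpus).items()}


def simulate(vocab_size: int = 1000, n_samples: int = 2000,
             generations: int = 20, seed: int = 0) -> list:
    """Vocabulary size that survives after each generation of training on generated text."""
    rng = random.Random(seed)
    dist = zipf_distribution(vocab_size)
    surviving = [len(dist)]
    for _ in range(generations):
        dist = next_generation(dist, n_samples, rng)
        surviving.append(len(dist))
    return surviving


history = simulate()
print(history[0], history[1], history[-1])
```

A word that draws zero samples in one generation has zero probability in every later generation. The surviving vocabulary can therefore only shrink, and the rare words in the tail go first.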

Speaking of that -- CAN you reliably tell AI-generated data apart from human data? Yes, you can. Because of the way LLMs pick the next word (e.g. top-k sampling), low-probability words are suppressed, resulting in a thinner tail. Across a whole document, this and other analyses, such as word-pair statistics, allow us to sniff out generated text. Humans, too, can often intuitively tell when documents and even programming code are AI generated.
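Here is a minimal sketch of why top-k sampling leaves a fingerprint. It assumes a made-up 50-word vocabulary with Zipf-shaped probabilities that plays the role of "the language". Text sampled from the full distribution contains tail words; text sampled with top-k never does.

The `rare_word_rate` statistic is a hypothetical, crude detector. Practical detectors have to estimate word probabilities with another model, since the true distribution is never known.

```python
import random


def zipf(vocab_size: int) -> dict:
    """A toy 'language': word w{r} has probability proportional to 1 / r."""
    weights = {f"w{r}": 1.0 / r for r in range(1, vocab_size + 1)}
    total = sum(weights.values())
    return {w: p / total for w, p in weights.items()}


def top_k_words(probs: dict, k: int) -> list:
    return sorted(probs, key=probs.get, reverse=True)[:k]


def sample_full(probs: dict, rng: random.Random) -> str:
    """Sample from the whole distribution, tail included."""
    words = list(probs)
    return rng.choices(words, weights=[probs[w] for w in words], k=1)[0]


def sample_top_k(probs: dict, k: int, rng: random.Random) -> str:
    """Top-k sampling: only the k most probable words are eligible
    (random.choices renormalises the remaining weights for us)."""
    top = top_k_words(probs, k)
    return rng.choices(top, weights=[probs[w] for w in top], k=1)[0]


def rare_word_rate(tokens: list, probs: dict, k: int) -> float:
    """Fraction of tokens falling outside the reference top-k set: a crude tail statistic."""
    top = set(top_k_words(probs, k))
    return sum(t not in top for t in tokens) / len(tokens)


rng = random.Random(42)
probs = zipf(50)
human_like = [sample_full(probs, rng) for _ in range(1000)]
machine_like = [sample_top_k(probs, 10, rng) for _ in range(1000)]
print(rare_word_rate(human_like, probs, 10))    # around 0.35, the tail's share of probability
print(rare_word_rate(machine_like, probs, 10))  # -> 0.0, the tail is gone
```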

* [Those old enough may even remember T9 predictive text on Nokia phones, which turned numeric key presses into words. It is similar in concept, but not in execution.]
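For the curious, the core idea behind T9 fits in a few lines. Map each letter to its keypad digit, then look up every dictionary word that produces the typed digit sequence. This sketch assumes a tiny hand-picked dictionary and returns candidates in insertion order. The real (proprietary) T9 also ranked candidates by word frequency.

```python
# Letter groups on a standard phone keypad.
KEYPAD = {
    "2": "abc", "3": "def", "4": "ghi", "5": "jkl",
    "6": "mno", "7": "pqrs", "8": "tuv", "9": "wxyz",
}
LETTER_TO_DIGIT = {ch: digit for digit, letters in KEYPAD.items() for ch in letters}


def word_to_keys(word: str) -> str:
    """The key presses needed to type `word`, one press per letter."""
    return "".join(LETTER_TO_DIGIT[ch] for ch in word.lower())


def build_index(dictionary: list) -> dict:
    """Map each key sequence to every dictionary word that produces it."""
    index = {}
    for word in dictionary:
        index.setdefault(word_to_keys(word), []).append(word)
    return index


def t9_lookup(index: dict, keys: str) -> list:
    """Candidate words for a key sequence, in dictionary order."""
    return index.get(keys, [])


index = build_index(["home", "good", "gone", "hood", "hoof", "cat", "act", "bat"])
print(t9_lookup(index, "4663"))  # -> ['home', 'good', 'gone', 'hood', 'hoof']
print(t9_lookup(index, "228"))   # -> ['cat', 'act', 'bat']
```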

This tells you the underlying processes are not the same, and indeed, the best way to understand this is to compare the two realities:

We humans are maximally embedded within the universe, amongst each other, in every moment possible. This is the source of all the data fed to AI, whether by word, drawing, audio or video of the world we built around us. We are machines that accept everything around us, filter it through our teleology and literally create the future in a manner that transcends our individual existence. We accept and create outside interference when we are operating at our maximum effectiveness.

On the other hand, digital circuits and mathematical models operate at maximum effectiveness when outside interference is rejected, when the behaviour is almost perfectly predictable. The only time outside interference comes into play is after it has already been processed by human beings (or by analog-to-digital converters), e.g. during the training phase.

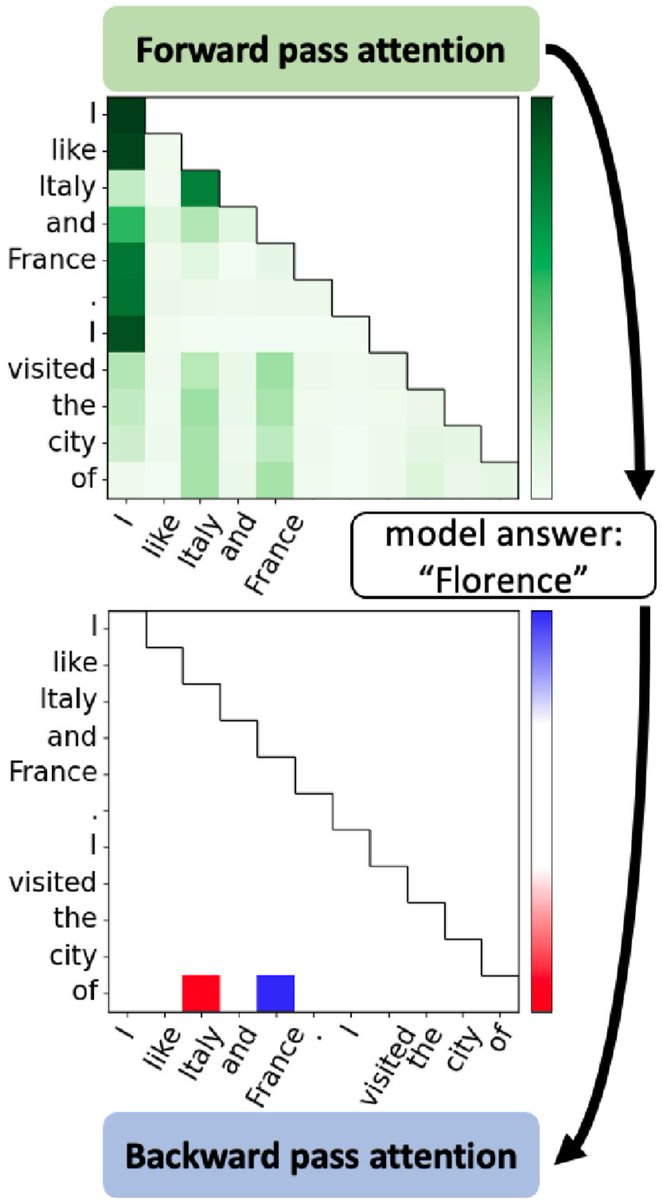

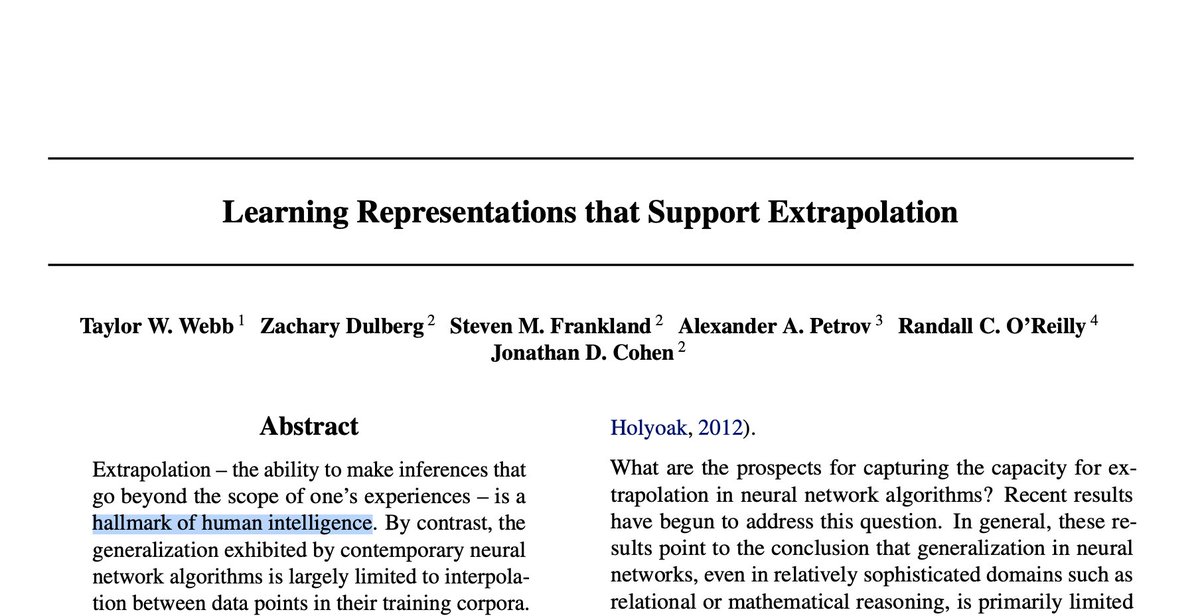

To summarise what this all means: humans are better at extrapolation than computer models, while computer models are superior at interpolation in terms of speed and efficiency. An AI model can read through a document in seconds that would take a human an hour, and answer queries within the scope of that document.
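The interpolation/extrapolation distinction can be shown with any curve-fitting model. The sketch below swaps in polynomial (Lagrange) interpolation as a stand-in; this is not how LLMs work. It fits sin(x) using samples from [0, pi] only, then evaluates inside and outside that range.

```python
import math


def lagrange_fit(xs: list, ys: list):
    """Return a function evaluating the unique polynomial through every (x, y) point."""
    def predict(x: float) -> float:
        total = 0.0
        for i, (xi, yi) in enumerate(zip(xs, ys)):
            term = yi
            for j, xj in enumerate(xs):
                if j != i:
                    term *= (x - xj) / (xi - xj)
            total += term
        return total
    return predict


# "Training data": sin(x) at 6 evenly spaced points covering only [0, pi].
xs = [i * math.pi / 5 for i in range(6)]
ys = [math.sin(x) for x in xs]
model = lagrange_fit(xs, ys)

for x in (1.0, 2.5, 2 * math.pi, 3 * math.pi):
    kind = "interpolation" if 0 <= x <= math.pi else "extrapolation"
    error = abs(model(x) - math.sin(x))
    print(f"x = {x:5.2f} ({kind}): error = {error:.4f}")
```

Inside the sampled range, the error is tiny. Outside it, the same model is off by several units, even though sin never leaves [-1, 1].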

You can think of this another way: humans are like a music composer, able to produce amazing original tracks, sometimes brand-new styles, but requiring 10,000 hours of experience/training and many hours of dedicated work to produce a gem. Often a human being produces just a few of these gems in a lifetime.

In contrast, an AI is like a remix artist, able to mix styles, mix tracks, and create brand-new music that just sounds a bit... off... derivative? Somehow, wrong. But often good enough for certain purposes. Never the same as a human-created track, however!

Nevertheless, even while "remixing" (interpolation), these models will still make mistakes as the underlying technology is essentially a probabilistic model. Today it's popular to call this effect "hallucination", but it's really just going down a chain with one mistake and continuing to build on it. Humans do this too, all the time in fact!
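The "one mistake, then build on it" effect compounds quickly. Here is a back-of-envelope sketch, assuming each generated token independently has a fixed chance of being wrong (the rates below are hypothetical). The chance of a long output containing no mistake at all is (1 - p)^n.

```python
import math


def p_error_free(per_token_error: float, n_tokens: int) -> float:
    """Chance that n steps contain no mistake, assuming independent errors at a fixed rate."""
    return (1.0 - per_token_error) ** n_tokens


for p in (0.001, 0.01):
    for n in (100, 1000):
        exact = p_error_free(p, n)
        approx = math.exp(-p * n)  # (1 - p)**n is close to e**(-p*n) for small p
        print(f"per-token error {p}, {n} tokens: P(no mistake) = {exact:.4f} (approx {approx:.4f})")
```

The independence assumption is a simplification. Once a wrong token is generated, it becomes part of the context for everything after it, which is exactly the chain-building effect described above.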

When faced with missing data (extrapolation), the LLM still has to fill a word in. If it doesn't recognise that it does not have an answer... generative AI will often make it up, and stick to it confidently. So, no matter what, a human being has to ultimately monitor the data coming out of these machines.
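Why does the model "still have to fill a word in"? The final softmax turns any scores into probabilities that sum to 1, so plain greedy decoding always emits some word. The sketch below is illustrative. `pick_or_abstain` and its 0.5 threshold are a hypothetical guard, and a low top probability is only a rough proxy for the model not knowing.

```python
import math
from typing import Optional


def softmax(logits: list) -> list:
    """Turn raw scores into probabilities that always sum to 1."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def greedy_pick(vocab: list, logits: list) -> tuple:
    """Plain decoding: always emits the most probable word, however unsure."""
    probs = softmax(logits)
    best = max(range(len(vocab)), key=lambda i: probs[i])
    return vocab[best], probs[best]


def pick_or_abstain(vocab: list, logits: list, min_confidence: float = 0.5) -> Optional[str]:
    """Hypothetical guard: decline to answer when the top probability is low."""
    word, p = greedy_pick(vocab, logits)
    return word if p >= min_confidence else None


vocab = ["red", "green", "blue", "yellow"]
confident = [0.1, 0.2, 4.0, 0.3]
unsure = [1.0, 1.05, 1.1, 0.95]
print(greedy_pick(vocab, unsure)[0])      # -> blue (still answers)
print(pick_or_abstain(vocab, confident))  # -> blue
print(pick_or_abstain(vocab, unsure))     # -> None
```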

But what the hype machine misses is this: human beings also have to generate the data that feeds these ML models. And here an unexpected problem arises: people have begun to prefer chatting with LLMs to interacting with each other online. AI companies harvest this chat data, but it's often garbage because the human is rarely checking the model's output, so all the companies really get are queries.

This creates a bottleneck on improving the model, meaning that no matter how much model capacity and recall improve, it will never catch up with the unfolding reality. Or an equally bad outcome can happen: reality folds in on itself and disconnects humans from their embedding in it. A future where humans generate and consume their data without sharing it is one where the fat tail disappears and the models, along with reality, collapse in on themselves.

On that note, while data scientists worry about data contamination, perhaps what they should really worry about is data extinction.

This doesn't just affect popular use of AI but also business use, such as using it as a substitute for junior engineers. You might think of software engineers as "coders", but 90% of their time is spent in meetings and reading each other's code, often arguing for weeks over minute details that an AI wouldn't spend milliseconds on.

As with the folding of reality onto itself, the hidden cost to using AI is only revealed after you take it for granted!

One software engineer I know likened AI to a high-interest credit card for technical debt. You can slam it on the table and get your way, bringing forward amazing performance boosts in the short term... but without the knowledge base of human beings, the moment the complexity exceeds the model's ability to comprehend or extrapolate, it's game over.

You'll have to either start from scratch, or spend even more engineering time on comprehending code written by a machine that cannot attend meetings or answer questions in a reliable manner.

Engineers themselves are quickly catching on to this, but the situation is worse for organisations that make strategic decisions related to AI. Firing entire departments of engineers throws away irreplaceable know-how and expertise. The engineers are unlikely to return to that side of the industry, and if they do, rarely with the same degree of loyalty and camaraderie needed to fully embed human beings within an organisation.

Indeed, it's worse than it appears on the surface, as human beings in organisations employ transactive memory, where knowledge or expertise is distributed between people (or, as you'll see in the next section, things). This is where managers shine, teaming people up to create a synergy that isn't possible with either person working alone. As AI is pushed to isolate people and make them "work more efficiently" with less interdependency, this synergy will quickly dissipate once the high interest on the AI credit card rears its ugly head.
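Transactive memory is often described as a shared directory of "who knows what". Here is a minimal sketch of that directory, assuming a hypothetical three-person team. It shows how removing people orphans knowledge that nobody else holds.

```python
def who_knows(directory: dict, topic: str) -> list:
    """The 'directory' part of transactive memory: who do I ask about this?"""
    return sorted(person for person, topics in directory.items() if topic in topics)


def orphaned_topics(directory: dict, departed: set) -> set:
    """Topics that nobody left in the organisation knows any more."""
    all_topics = set().union(*directory.values())
    still_known = set().union(
        *(topics for person, topics in directory.items() if person not in departed)
    )
    return all_topics - still_known


team = {  # hypothetical team
    "alice": {"billing", "deploys"},
    "bob": {"billing", "legacy-api"},
    "carol": {"deploys", "on-call"},
}
print(who_knows(team, "billing"))                       # -> ['alice', 'bob']
print(orphaned_topics(team, {"bob"}))                   # -> {'legacy-api'}
print(sorted(orphaned_topics(team, {"bob", "carol"})))  # -> ['legacy-api', 'on-call']
```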

To summarise this section: AI is a trap that, when misused, leads to the structural collapse of organisations. In the next section, we will go one level down: the cognitive collapse that those who misuse AI will face.

The Ultimate Trap: AI-Borne Cognitive Collapse

Let me take a step back and discuss transactive memory more closely. This is an important concept in psychology describing how our brains connect to and depend on other brains. Initially the focus was on husbands and wives and the way memory and skills were shared between them, but it was later generalised to organisations.

You already have a notion of this if you grew up with search engines, or have witnessed people with a great dependency on them. Those with poor social decorum will often interrupt themselves or another person to deploy their cell phone, enter a search query, bring up a page and then resume the conversation with the results entering the conversation. More annoying to me is when they bring up a video instead.

This is the most embodied demonstration of transactive memory, where the person didn't bother to remember anything on the page because it was always available. What's the point, right? Instead of absorbing everything on the page, they absorb the feeling or notion they got out of reading it, turning it into a single memory:

The vague search query they used to bring up that experience!

Then it becomes a forward chain in their memory:

Cell phone -> Query -> Result (and experience)

So the moment your conversation touches anything in that experience, their brain (which works heavily on association) works backwards from the result to the sequence: pull out the phone, enter the query and resume the conversation.

They turn what should be an absorbed understanding into a key, with the smartphone and the internet locking away the result. So rather than enriching themselves with experiences, they become walking key masters to a personality and experience they don't own and haven't earnt.
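The "key master" pattern is the difference between storing a pointer and storing a value. Here is a toy analogy with hypothetical class names. One memory keeps only the search query, and the other keeps the content itself. When the external store goes away, only the second can still answer.

```python
from typing import Optional


class ExternalStore:
    """Stands in for the phone plus the internet: it holds the actual content."""

    def __init__(self, pages: dict):
        self.pages = pages
        self.online = True

    def search(self, query: str) -> Optional[str]:
        return self.pages.get(query) if self.online else None


class KeyMemory:
    """Remembers only the query that found the content (a pointer)."""

    def __init__(self, store: ExternalStore):
        self.store = store
        self.keys = {}

    def learn(self, topic: str, query: str) -> None:
        self.keys[topic] = query

    def recall(self, topic: str) -> Optional[str]:
        query = self.keys.get(topic)
        return self.store.search(query) if query is not None else None


class AbsorbedMemory:
    """Keeps the content itself (a value), so it survives losing the store."""

    def __init__(self):
        self.facts = {}

    def learn(self, topic: str, content: str) -> None:
        self.facts[topic] = content

    def recall(self, topic: str) -> Optional[str]:
        return self.facts.get(topic)


store = ExternalStore({"boiling point of water": "100 degrees C at sea level"})
keys, absorbed = KeyMemory(store), AbsorbedMemory()
keys.learn("boiling", "boiling point of water")
absorbed.learn("boiling", store.search("boiling point of water"))

store.online = False  # no signal, flat battery, or the page is gone
print(keys.recall("boiling"))      # -> None
print(absorbed.recall("boiling"))  # -> 100 degrees C at sea level
```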

Even before AI, software engineers relied on search engines, code repositories and places like Stack Overflow in the same manner, but these were still curated by human beings.

Got a problem? See if someone has solved it on sh*thub, never "reinvent the wheel". Don't worry about bringing in 2 million dependencies, the work has to be done efficiently.

Still stuck? Look it up on Stack Overflow.

Even more stuck? Pull out your debugger and start trying to understand the code and problem you're dealing with.

Can't get anywhere? Darn, now you have to do the horrid act of speaking to another human being, a more senior engineer perhaps. 😳

Yet even when you do speak to another human being, there's a good chance they'll be interacting with each of your keys, their transactive memory slightly less degraded than yours owing to their longer experience. You won't come to them with the problem, but with other people's solutions you're interpolated/remixed as your own solution.

The inexperienced, aggressive or junior engineer goes through this cycle, rather than speak to a human being first. Sketch out the problem on a whiteboard and look up solutions afterwards. Understand the problem entirely, look at the fat-tail, and see ahead of issues you'll face later.

Let me take a step back and discuss transactive memory a bit more closely. This is an important concept in psychology describing how our brains connect to, and depend on, other brains. Initially the focus was on husbands and wives and the manner in which memory and skills were shared between them, but it was later generalised to organisations.

You already have a notion of this if you grew up with search engines, or have witnessed people with a great dependency on them. Those with poor social decorum will often interrupt themselves or another person to deploy their cell phone, enter a search query, bring up a page and then resume the conversation with the results injected into it. More annoying to me is when they bring up a video instead.

This is the most embodied demonstration of transactive memory: the person didn't bother to remember anything on the page, because it is always available. What's the point, right? Instead of absorbing everything on the page, they absorb the feeling or notion they got from reading it, compressing it into a single memory:

The vague search query they used to bring up that experience!

Then it becomes a forward chain in their memory:

Cell phone -> Query -> Result (and experience)

So the moment your conversation touches on anything in that experience, their brain (which works heavily on association) runs the chain in reverse from the result: pull out the phone, enter the query and resume the conversation.

They turn what should be absorbed understanding into a key, with the smartphone and the internet locking away the result. So rather than enriching themselves with experiences, they become walking key masters to a personality and experience they don't own and haven't earnt.

Even before AI, software engineers relied on search engines, code repositories and sites like Stack Overflow in the same way, but these were still curated by human beings.

Got a problem? See if someone has already solved it on sh*thub; never "reinvent the wheel". Don't worry about pulling in 2 million dependencies, the work has to be done efficiently.

Still stuck? Look it up on Stack Overflow.

Even more stuck? Pull out your debugger and start trying to understand the code and problem you're dealing with.

Can't get anywhere? Darn, now you have to do the horrid act of speaking to another human being, a more senior engineer perhaps. 😳

Yet even when you do speak to another human being, there's a good chance they'll be interacting with each of your keys, their transactive memory slightly less degraded than yours owing to their longer experience. You won't come to them with the problem, but with other people's solutions that you've interpolated/remixed into your own.

The inexperienced, aggressive or junior engineer goes through this cycle rather than speaking to a human being first. The better order is the reverse: sketch out the problem on a whiteboard and look up solutions afterwards. Understand the problem entirely, look at the fat tail, and anticipate the issues you'll face later.

AI takes this degraded cognitive process and gives it a steroidal boost: the human who wrote the code, the senior engineer helping out, even the person debugging it, all disappear. Used this way, AI fills in for the missing knowledge and skill of an inexperienced engineer. Indeed, with things like Codex/Claude Code, the repository maintainer can disappear too. If the hype were true, we could say software engineering is now a solved problem. Just put any inexperienced prompt engineer in front of an LLM trained on code repositories, and it'll do all the work from end to end.

Until... it inevitably gets stuck itself, and believe me, it does. Remember what I said about extrapolation? This happens even more often with engineering problems. Where does one go then?

The transactive memory of the junior engineer no longer links to a senior's, and with all the firings going on and the bias towards AI augmentation, those senior engineers may no longer exist in the organisation.

Suddenly, the high interest rate starts knocking on the door, and what should have been a cheap problem soon becomes a very expensive one requiring organisational attention. But by that time, it's just key masters staring at each other, waiting for someone to pull the answer out of a system that does not contain it, because the right answer lives between people.

I'm using software engineers as the example of experts here, but you can substitute any profession: lawyers, civil engineers, you name it. I'm also speaking mainly of juniors... but can this rot the cognition of senior experts as well? Yes it can, perhaps even more so in specific augmented use cases.

In the naive model of human organisations, senior experts dispense knowledge to juniors, who absorb it and learn. Reality is different. Seniors learn from juniors, sometimes even more than juniors learn from seniors, because seniors can see more from less. The juniors act as lower-stratum nodes in a super-neural network, perhaps the sensory parts. Without this interaction, the complex synergy that powers innovation and excellence disappears.

Any experienced person will tell you: it's impossible to learn without teaching. AI will sever the teaching component, and the learning one with it. As senior experts use AI to replace their interaction with juniors, they will come to rely on AI as their juniors and stop learning about the innards of technologies and knowledge they see as not worth their time.

For example, UI toolkits for software engineers, or perhaps case law details for lawyers. Without knowing the details, they won't know the problems to look out for, the interactions between parts, potential improvements and

Zooming out, something huge disappears. Among 6 people in an organisation, there are 15 possible pairwise connections: 15 transactive memories. Between one person and AI, it collapses to just 1.

Across an organisation? You can do the math (N(N-1)/2), although real networks are never fully connected. What will happen as this cognitive collapse takes hold of organisations and people become trapped within AI augmentation or automation?
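
To make the arithmetic concrete, here's a minimal Python sketch. It assumes a fully connected group (which, as noted, real organisations never are) and models "augmentation" as each person linking only to one shared AI tool, one link per person. The function names are hypothetical, purely for illustration.

```python
from math import comb


def pairwise_links(n: int) -> int:
    """Possible pairwise connections in a fully connected group of n people: n(n-1)/2."""
    if n < 0:
        raise ValueError("n must be non-negative")
    return n * (n - 1) // 2


def ai_star_links(n: int) -> int:
    """Assumed model: each person connects only to one shared AI tool, so one link per person."""
    if n < 0:
        raise ValueError("n must be non-negative")
    return n


for n in (6, 50, 1000):
    # math.comb(n, 2) counts the same thing, used here as a cross-check
    assert pairwise_links(n) == comb(n, 2)
    print(f"{n} people: {pairwise_links(n)} human links vs {ai_star_links(n)} AI links")
```

For 6 people this gives 15 human links versus 6 AI links; at 1,000 people it's 499,500 versus 1,000. Under these assumptions, the human network grows quadratically while the AI-only one grows linearly.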

We don't need to look far to see an example. Remember the remix analogy? The full embodiment of human beings in reality? Look at movies made in the 80s, for example the 1987 hit RoboCop. In this movie, a dead man is brought back to life as a cyborg, augmented with machines and trapped under the control of a larger organisation. The plot isn't the point here; what matters is the quality of the movie, how human it was in the end, how well it was received, and how it embedded itself in reality.

It wasn't merely a movie. It quickly became part of our reality, something we interacted with as though it were real. This is why art imitates life, and life imitates art. It's part of a loop between symbols and their physical realisation, like DNA, RNA and protein in our bodies.

Recently, reality itself has degraded as our society collapsed and our complex structures became vulgar, disconnected and shallow. As a result, the reality that inspires the creation of movies folded in on itself, resulting in garbage production. Desperate for fat-tailed content, and rather than producing garbage originals based on our garbage reality, the studios went back and started remaking movies.

In 2014, RoboCop was remade and it was hot garbage, because it was embedded in a reality that had by then degraded beyond the point where human interaction and the novelty of the plot could be appreciated.

This is much like engineers looking things up on sh*thub, changing a few lines and trying to ram through a solution someone else made for another problem in order to solve their own. In fact, this is exactly how interpolation works, and it is the underlying mechanism generative AI uses.

It cannot embed itself in reality.

It cannot form networks.

It can only create remakes.

Today, most of the original writers and directors are gone or fired. Worse yet, the social reality that used to inspire their stories has long disappeared, with newer generations escaping reality into video games, p*rnography, hookup culture and ever more social isolation.

AI will simply bring this to organisations, and at a very rapid rate, creating an expert squeeze just like the loss of movie writers. The know-how, the personnel and the connections will all disappear at a rate most people cannot yet appreciate.

Locked In: The End

In Baudrillard's terminology, AI represents a fatal strategy. It brings efficiency at the cost of understanding, at increasingly rapid rates, and is subsidised to the point where the success of the economy depends on its success: the perfect Ponzi scheme, the very ecstasy of communication.

There's no escape either. As we saw with the trillion-dollar loss over a few days due to competition from China, western economies are completely dependent on the success of the AI-augmented economy. All the largest stocks deal with AI. You literally cannot start a VC-funded company today without mentioning AI in your business plan.

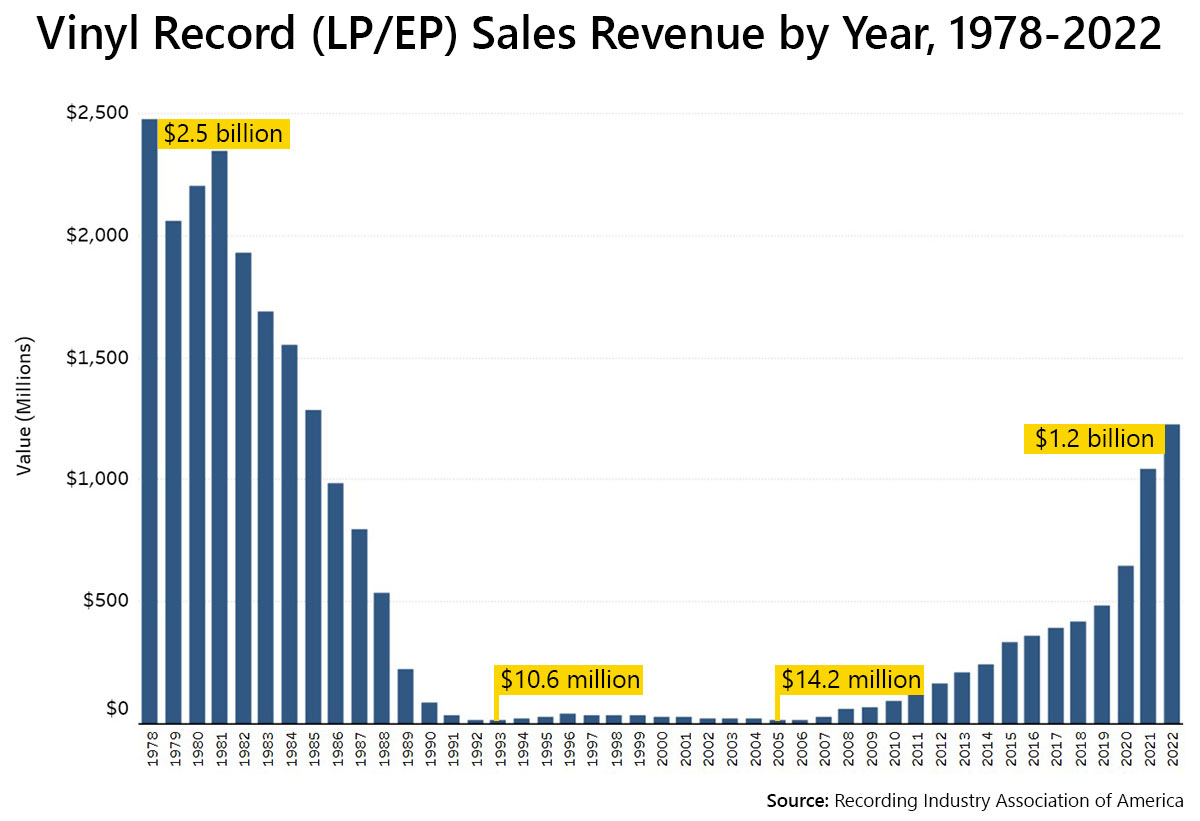

Therein, though, lies the hope. Some people will see this coming as the collapse becomes impossible to ignore. Have you noticed how many people have returned to vinyl records? They may not quite realise it, but they do this to place the transactive memory of the music they love into objects they hold. They know this object in their hand (a vinyl record or its cover) will produce music they love. Then the deliberate (albeit inefficient) act of setting the record in place and playing the track brings them the music they enjoy.

You can also see this in the people railing against Nintendo for trying to replace cartridges with digital-key counterfeits. People don't want a keymaster; they want the actual data contained on a cartridge, the ability to disconnect from the internet forever and play the games they paid for independent of Nintendo's future plans.

What does that have to do with AI? Everything. All fatal strategies eventually burn themselves out, producing a new reality we cannot anticipate yet.

This will happen across the board, not just in organisations or the economy. Chatbots ARE superior to most people you'll meet online. LLMs ARE superior to most junior engineers. The economic decisions made today cannot be undone because everyone is locked into this path.

And in the meantime, the problem itself will create an appreciation for what is missing: boundaries, cells and human interaction, the structural basis of our true existence and of our embedding within a reality where the inefficient is truly beautiful.

/End