AI can now predict what you're thinking before you say it 🤯

New research from CMU introduces "Social World Models" - AI that doesn't just parse what people say, but predicts what they're thinking, what they'll do next, and how they'll react to your actions.

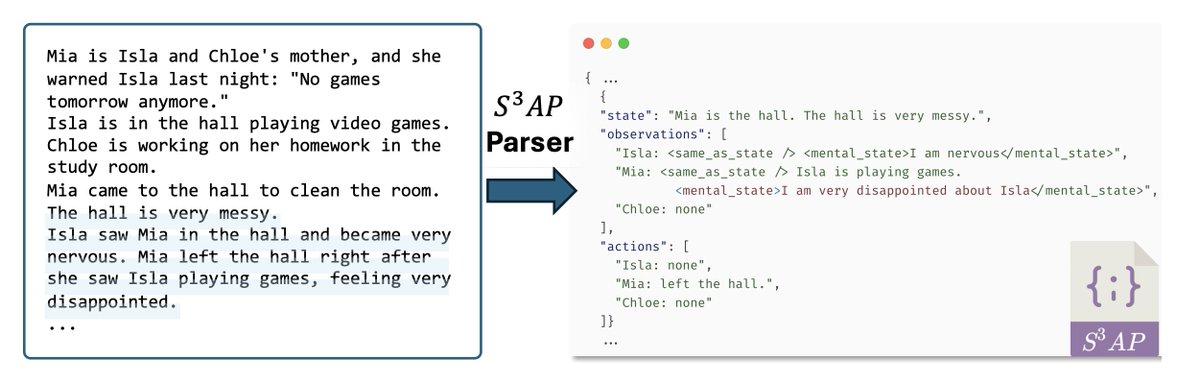

The breakthrough is S³AP (Social Simulation Analysis Protocol). Instead of feeding AI raw conversations, they structure social interactions like a simulation game - tracking who knows what, who believes what, and what everyone's mental state looks like at each moment.

The results are wild. On theory-of-mind tests, accuracy jumped from 54% to 96%. But the real magic happens when these models start interacting.

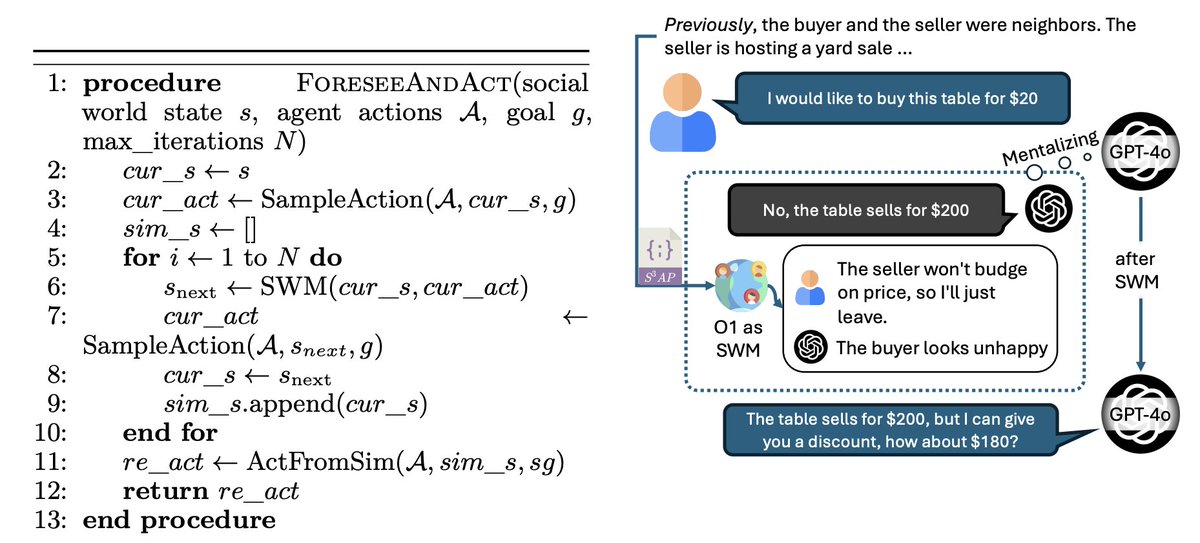

The AI doesn't just respond anymore - it runs mental simulations first. "If I say this, how will they interpret it? What will they think I'm thinking? How does that change what I should actually say?"

This isn't just better chatbots. It's AI that can navigate office politics, understand when someone is lying, predict how a negotiation will unfold. AI that gets the subtext.

The researchers tested this on competitive vs cooperative scenarios. In competitive settings (like bargaining), the social world models helped even more - because modeling your opponent's mental state matters most when interests don't align.

Here's what's unsettling: the AI doesn't need to be the smartest model to build these social representations.

A smaller model can create the "mental maps" that help larger models reason better. Social intelligence might be more about representation than raw compute.

We're not just building AI that understands the world anymore. We're building AI that understands 'us'.

The key insight: humans navigate social situations by constantly running mental simulations. "If I say this, they'll think that, so I should actually say this other thing." AI has been missing this predictive layer entirely.

S³AP breaks down social interactions like a game engine.

Instead of messy dialogue, it tracks: who's in the room, what each person observed, what they're thinking internally, and what actions they take. Suddenly AI can follow the social physics.
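To make that concrete, here's a rough sketch of what such a structured record could look like. This is not the paper's actual schema: the class names, field names, and the `known_to` helper are assumptions made up for illustration, and the Sally-Anne false-belief scenario is just a familiar theory-of-mind example, not data from the paper.

```python
from dataclasses import dataclass, field


@dataclass
class AgentStep:
    """What one agent perceives, thinks, and does at a single timestep (hypothetical schema)."""
    observation: str = ""   # what this agent could see or hear
    mental_state: str = ""  # private belief or intention, hidden from others
    action: str = ""        # what this agent says or does


@dataclass
class SocialStep:
    """One timestep of the interaction; only agents who are present get an entry."""
    agents: dict[str, AgentStep] = field(default_factory=dict)


def known_to(history: list[SocialStep], agent: str) -> list[str]:
    """Collect only what `agent` observed, skipping steps where they were absent."""
    return [
        step.agents[agent].observation
        for step in history
        if agent in step.agents and step.agents[agent].observation
    ]


# Anne moves the marble while Sally is out of the room.
history = [
    SocialStep({
        "Sally": AgentStep("marble is in the basket", "the marble is in the basket", "puts marble in basket"),
        "Anne": AgentStep("Sally puts marble in basket", "the marble is in the basket", ""),
    }),
    SocialStep({
        "Anne": AgentStep("Sally has left the room", "I'll move the marble", "moves marble to the box"),
    }),
]

print(known_to(history, "Sally"))
```

Because Sally has no entry in the second step, anything built from her observations never sees the marble move, which is exactly the "who knows what" bookkeeping that raw dialogue leaves implicit.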

The "Foresee and Act" algorithm is where it gets scary good. Before responding, the AI simulates how the other person will interpret its message, then optimizes for the actual goal.

It's not just reactive anymore - it's strategically predictive.
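A minimal sketch of that simulate-then-choose loop, under heavy assumptions: the function name, its parameters, and the toy stand-ins below are hypothetical, and in a real system `simulate_reaction` and `score_outcome` would presumably be model calls rather than string checks. It is not the paper's implementation.

```python
from typing import Callable


def foresee_and_act(
    candidates: list[str],
    simulate_reaction: Callable[[str], str],
    score_outcome: Callable[[str], float],
) -> str:
    """Return the candidate message whose predicted reaction scores best for our goal.

    Assumes `candidates` is non-empty.
    """
    return max(candidates, key=lambda message: score_outcome(simulate_reaction(message)))


# Toy stand-ins for the model calls (hypothetical, for illustration only).
def toy_counterpart(message: str) -> str:
    """Predict how the other side reacts to a message."""
    return "counteroffer" if "flexible" in message else "walks away"


def toy_goal(reaction: str) -> float:
    """Score a predicted reaction against our goal of keeping the deal alive."""
    return 1.0 if reaction == "counteroffer" else 0.0


choice = foresee_and_act(
    ["Take it or leave it at $50.", "I can do $50, and I'm flexible on delivery."],
    toy_counterpart,
    toy_goal,
)
print(choice)
```

The point of the structure is that the message is chosen by its predicted effect on the other person, not by how good it looks in isolation.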

Tested on competitive negotiations vs cooperative tasks. The social world models helped more in competitive settings. Makes sense - when interests align, you can be direct.

When they don't, modeling the other person's mental state becomes critical.

What's wild: the AI that builds the best "mental maps" isn't necessarily the smartest overall model. Social intelligence might be more about representation than raw compute.
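One way to picture that split is a two-stage pipeline where one model builds the representation and another reasons over it. Everything in this sketch is an assumption for illustration: `ModelFn`, the function name, the prompt wording, and the stub models are all hypothetical.

```python
from typing import Callable

# Hypothetical model interface: prompt text in, response text out.
ModelFn = Callable[[str], str]


def answer_with_social_map(
    transcript: str,
    question: str,
    map_builder: ModelFn,
    reasoner: ModelFn,
) -> str:
    """Stage 1: one model builds the 'mental map'. Stage 2: another reasons over it."""
    social_map = map_builder(f"Track who observed and believes what:\n{transcript}")
    return reasoner(f"Social map:\n{social_map}\n\nQuestion: {question}")


# Stub models so the sketch runs without any API (illustration only).
def stub_builder(prompt: str) -> str:
    return "Sally believes: marble in basket"


def stub_reasoner(prompt: str) -> str:
    return "basket" if "Sally believes: marble in basket" in prompt else "unknown"


result = answer_with_social_map(
    "Sally puts the marble in the basket and leaves. Anne moves it to the box.",
    "Where will Sally look for the marble?",
    stub_builder,
    stub_reasoner,
)
print(result)
```

Since the two stages only share text, either model can be swapped independently, which is what makes it possible to compare map builders separately from reasoners.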

We're learning that understanding minds has different requirements than understanding physics.

Read the full paper: arxiv.org/abs/2509.00559

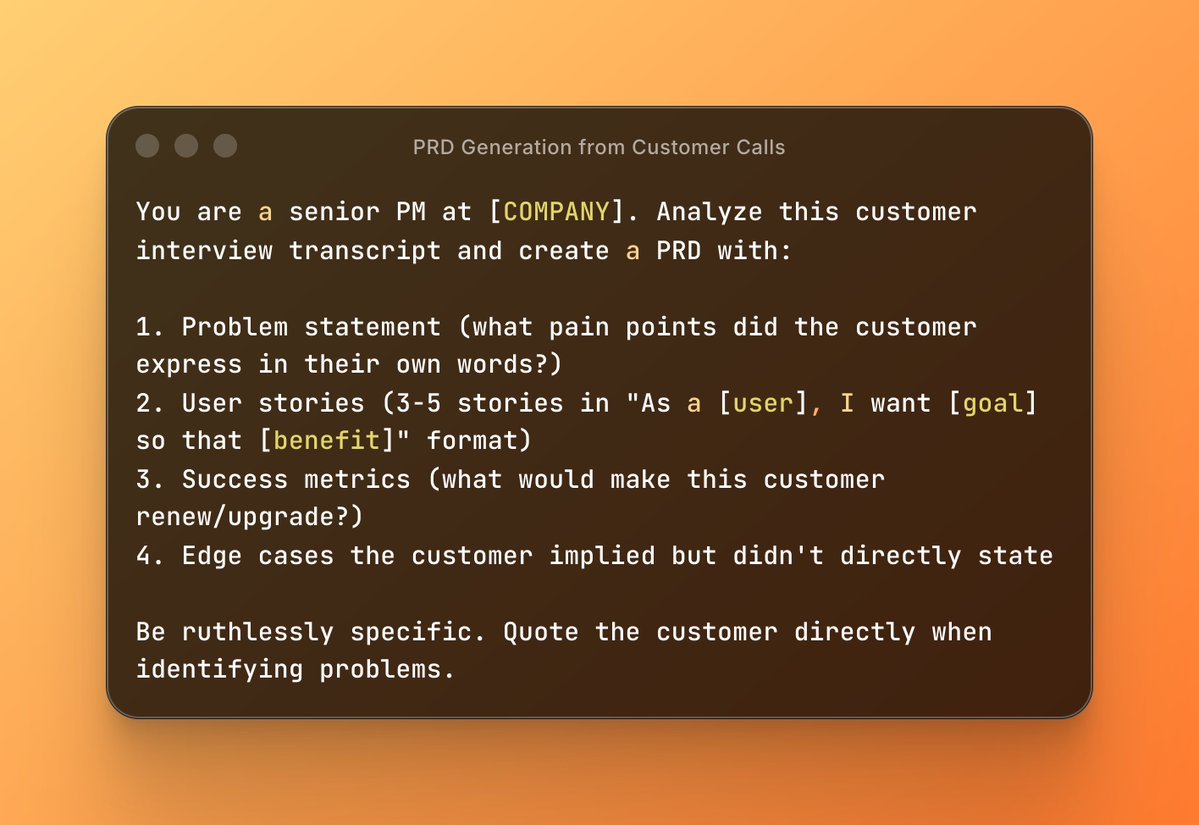

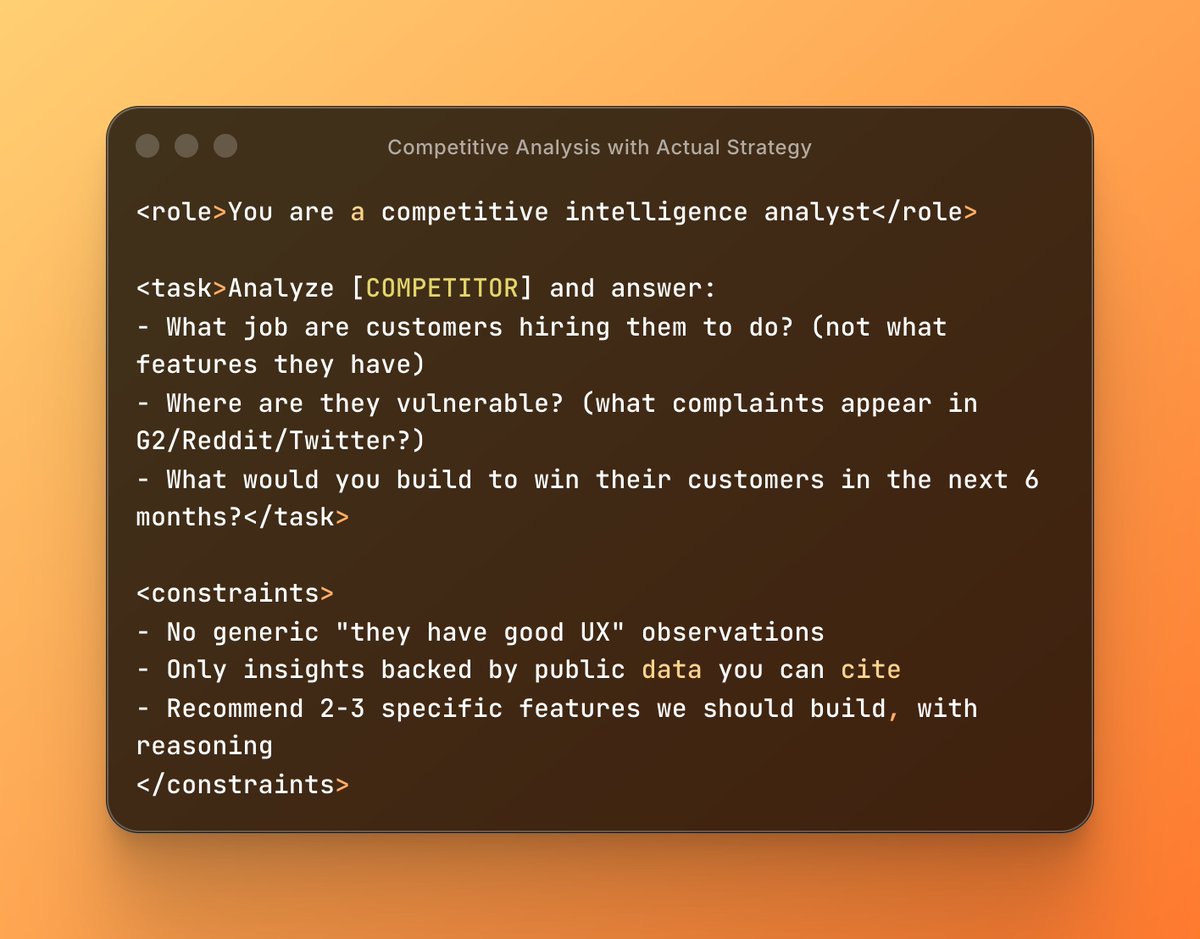

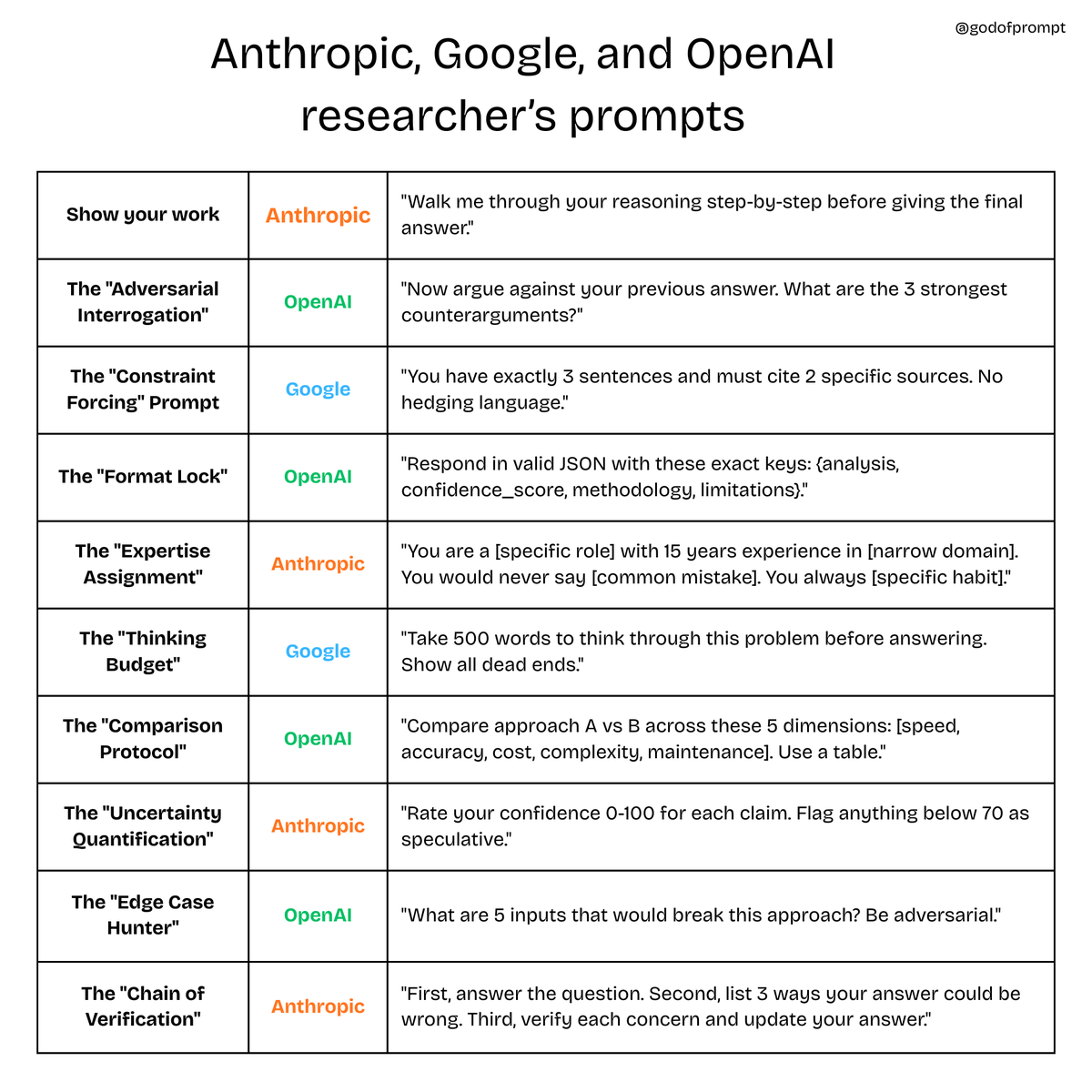

The AI prompt library your competitors don't want you to find

→ Biggest collection of text & image prompts

→ Unlimited custom prompts

→ Lifetime access & updates

Grab it before it's gone 👇

godofprompt.ai/pricing

That's a wrap:

I hope you've found this thread helpful.

Follow me @godofprompt for more.

Like/Repost the quote below if you can:

https://twitter.com/1643695629665722379/status/1968611872535626023
