🔑 Sharing AI Prompts, Tips & Tricks. The Biggest Collection of AI Prompts & Guides for ChatGPT, Gemini, Grok, Claude, & Midjourney AI → https://t.co/vwZZ2VSfsN

Steal this mega prompt:

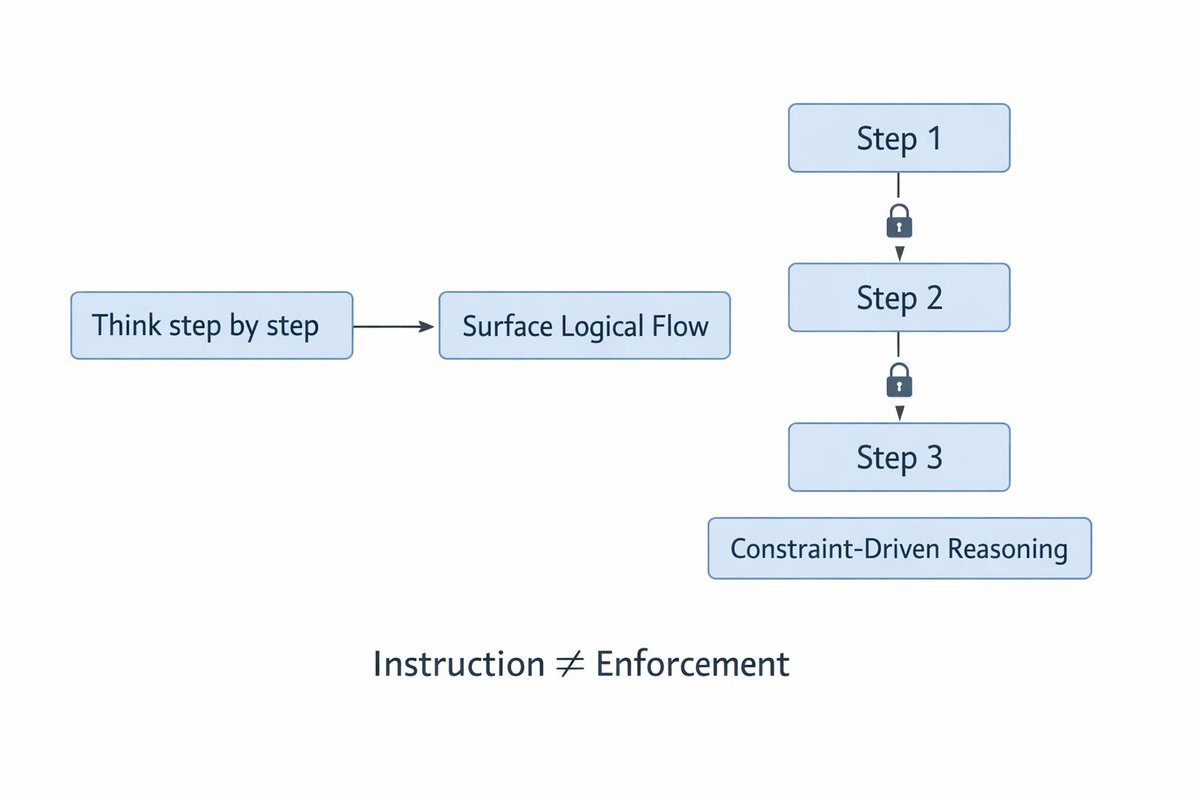

First, why "think step by step" fails.

First, understand WHY "be creative" fails.

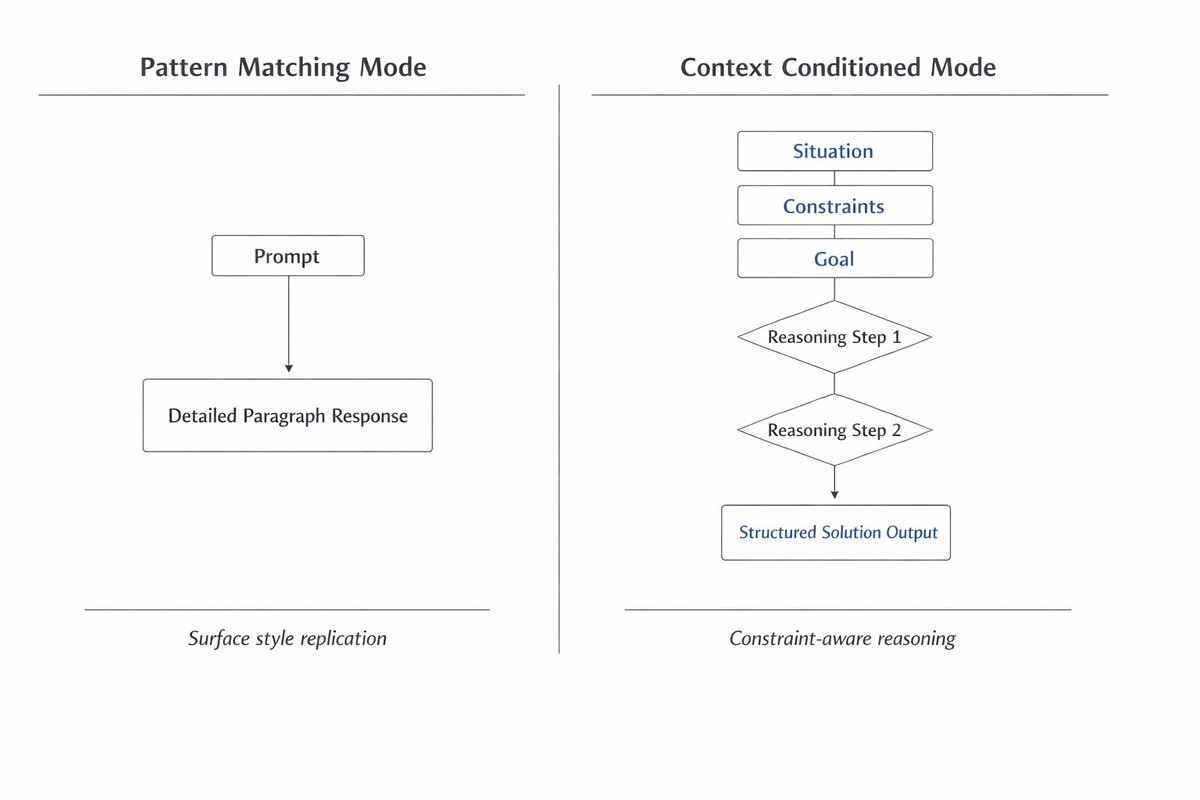

When you say "act as a senior developer" the model doesn't think like one.

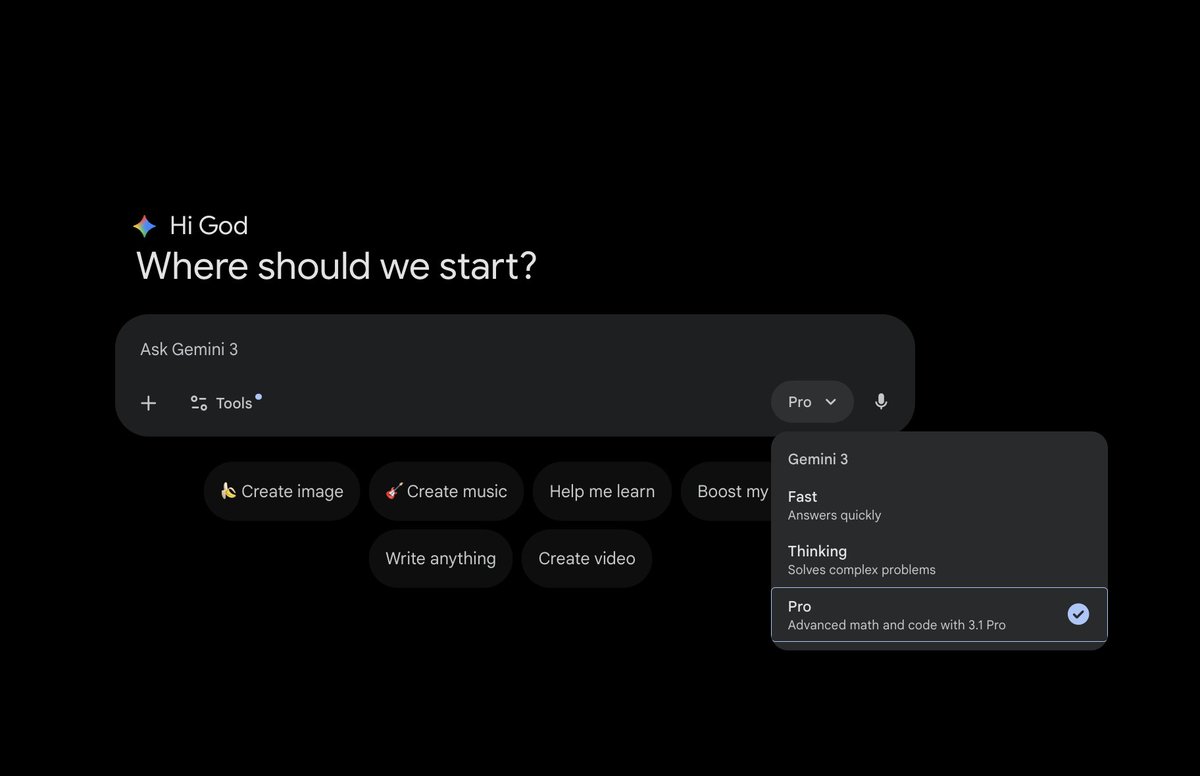

1. Research

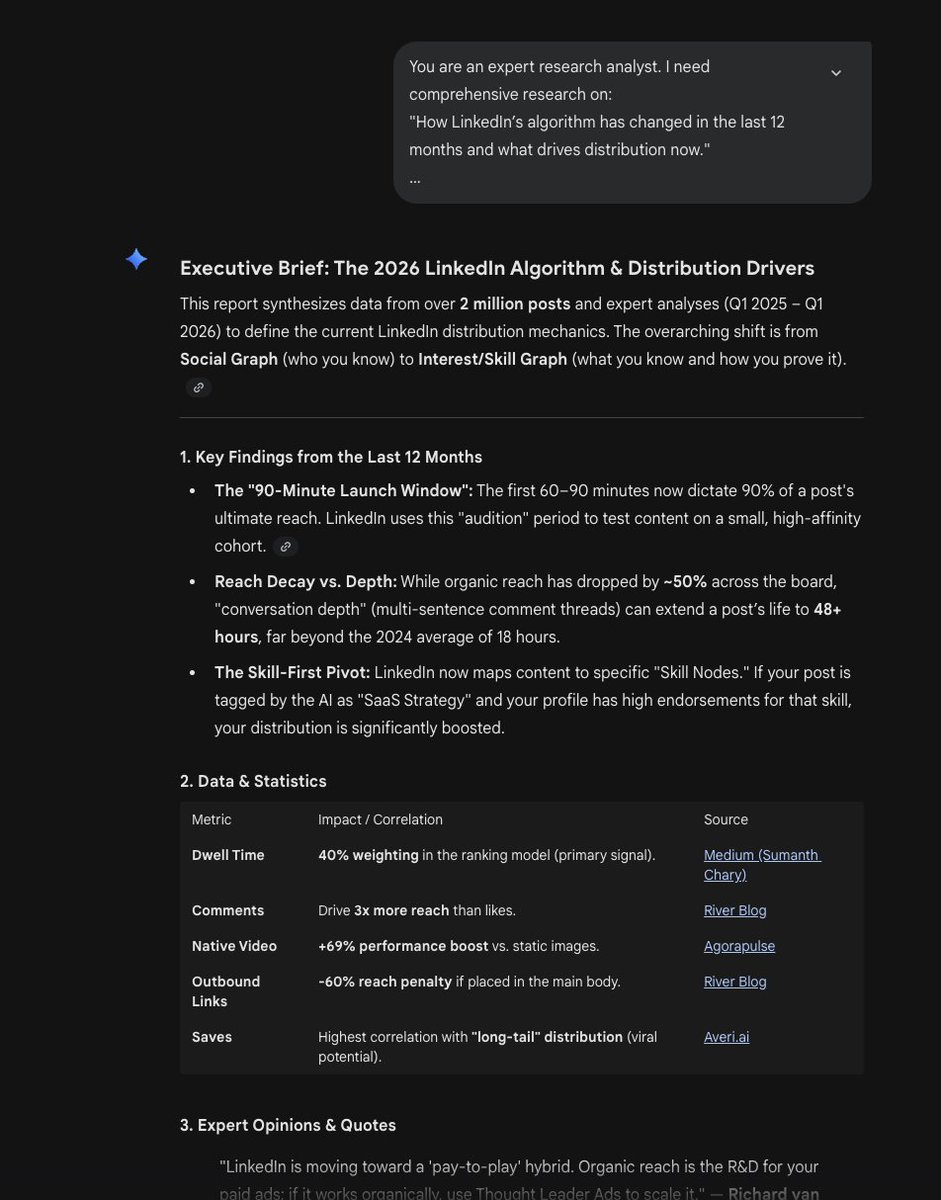

1. Business Strategy (Claude)

1/ Map your entire competitive landscape in 60 seconds.

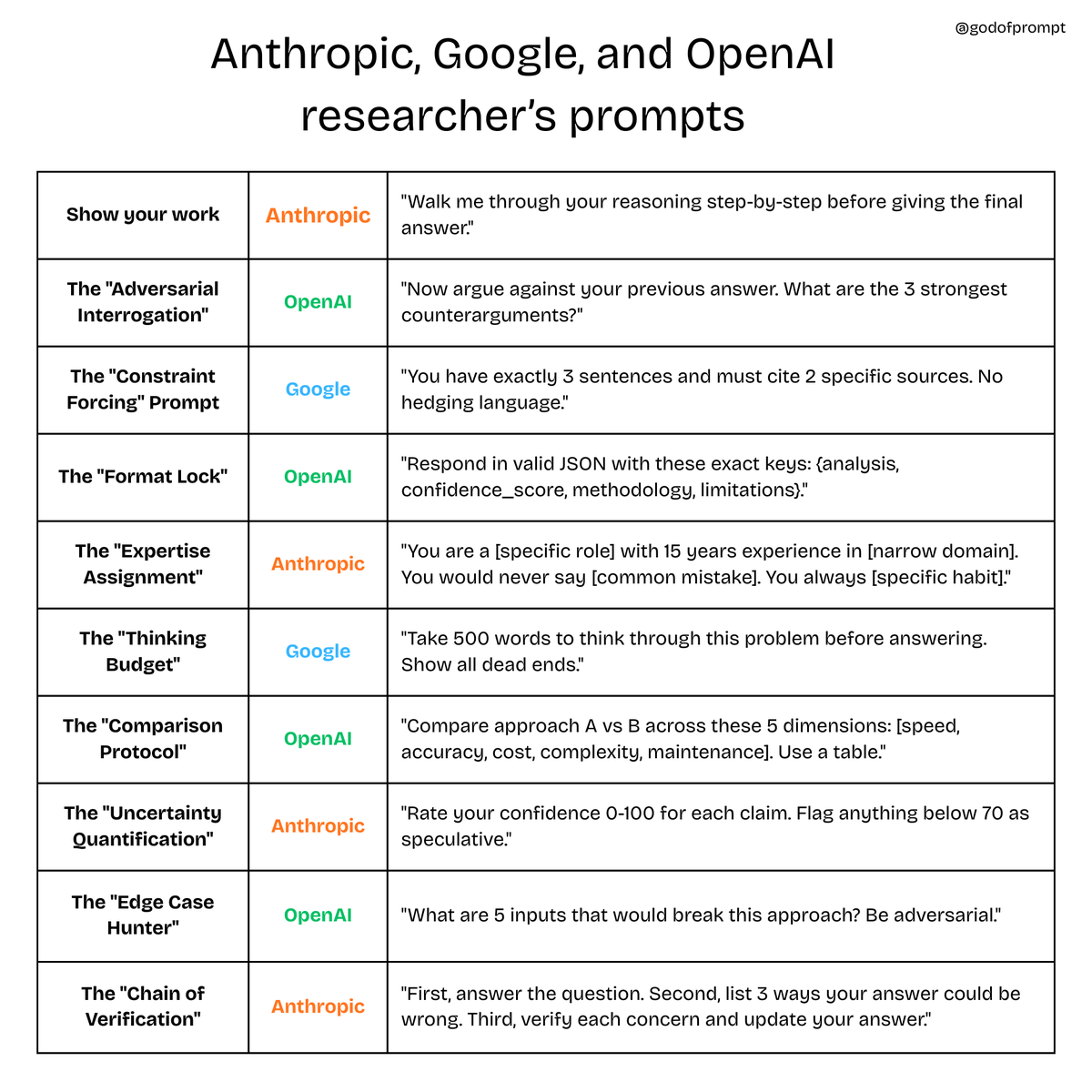

First thing I noticed: every one of them writes prompts that assume the model will fail.

Copy-paste this into Claude/ChatGPT:

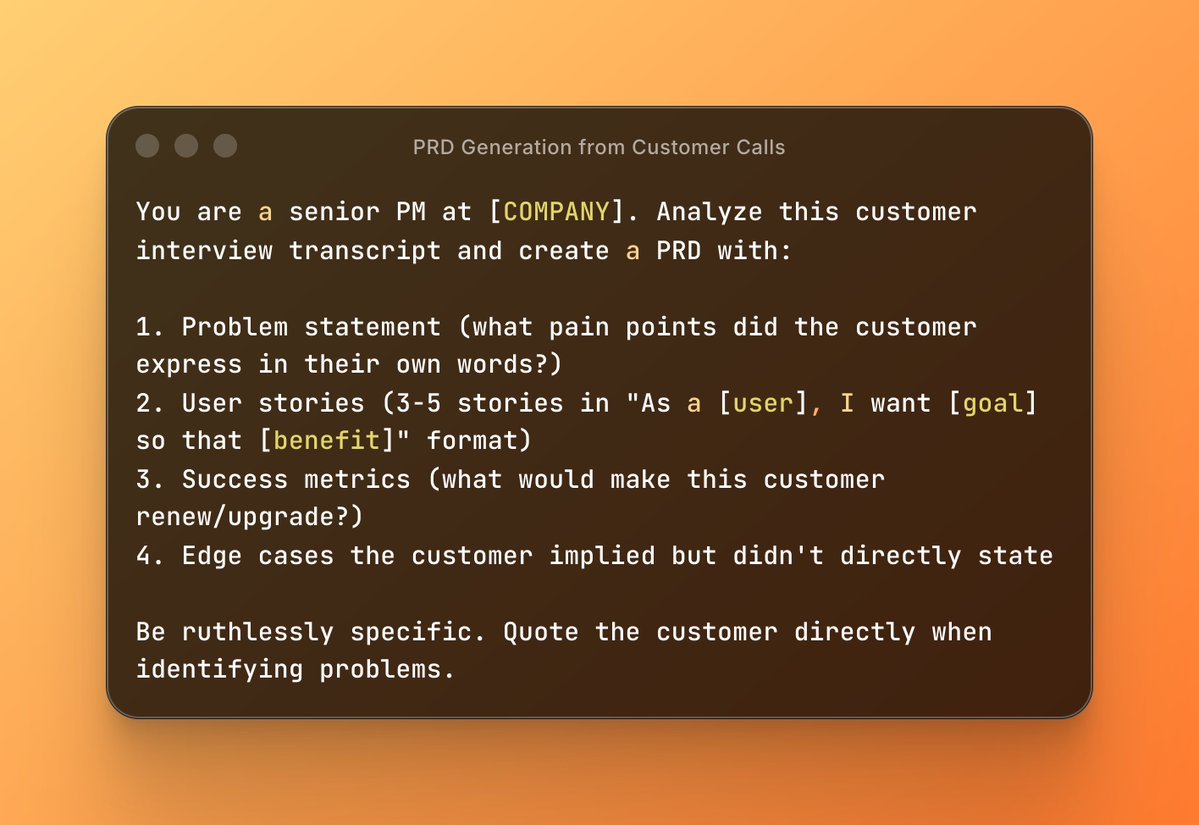

1. PRD Generation from Customer Calls

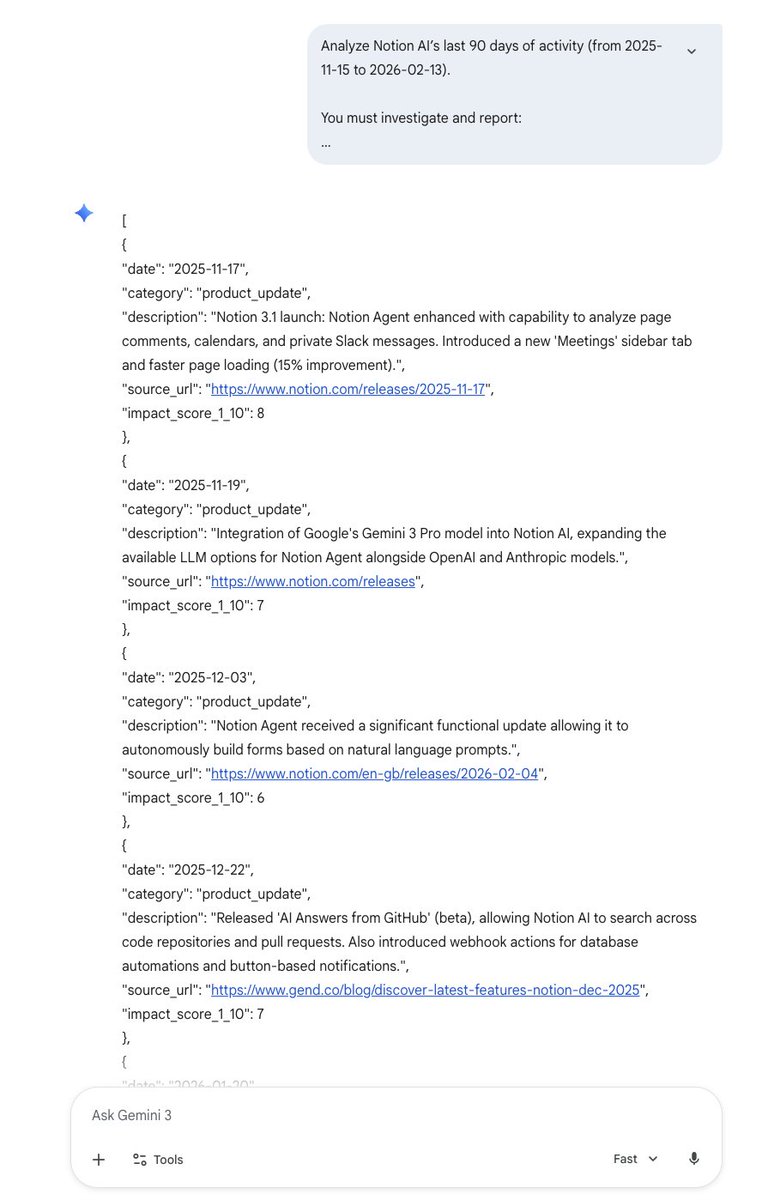

Step 1 - Data Collection (Gemini)

1. The "Show Your Work" Prompt

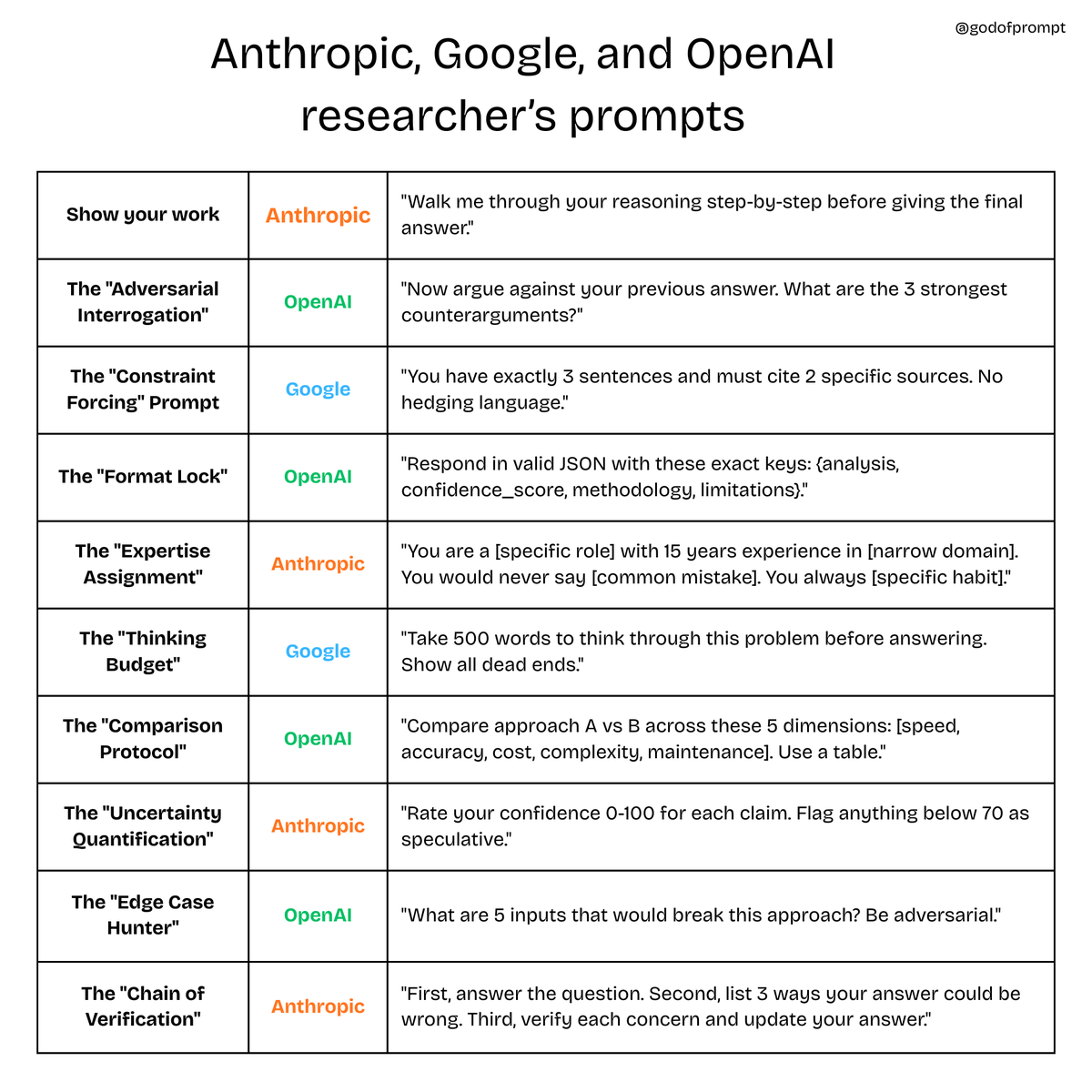

Most people write 500-word mega prompts and wonder why the AI hallucinates.

1. The 5-Minute First Draft

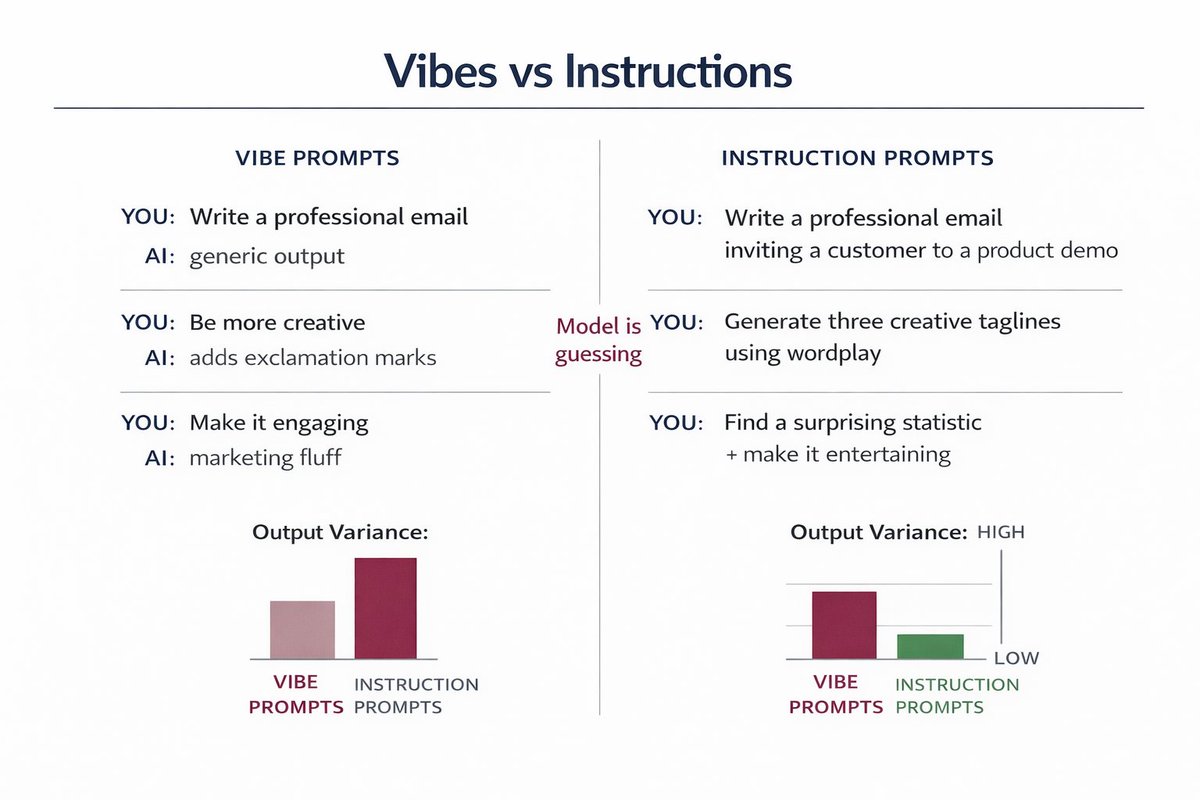

Here's why your prompts suck:

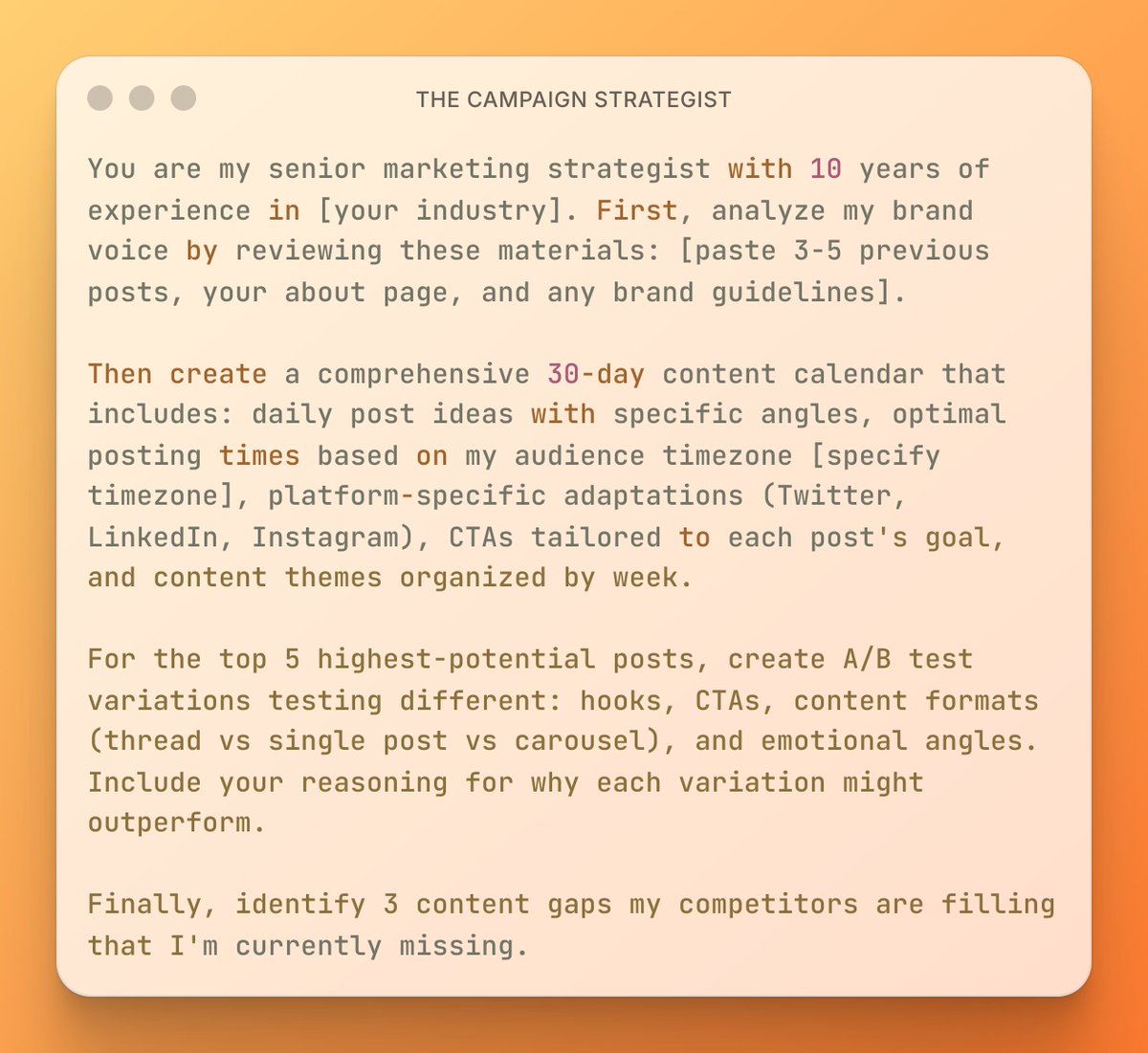

1. THE CAMPAIGN STRATEGIST

Most people prompt like this:

Framework 1: Constitutional Constraints (Anthropic's secret sauce)

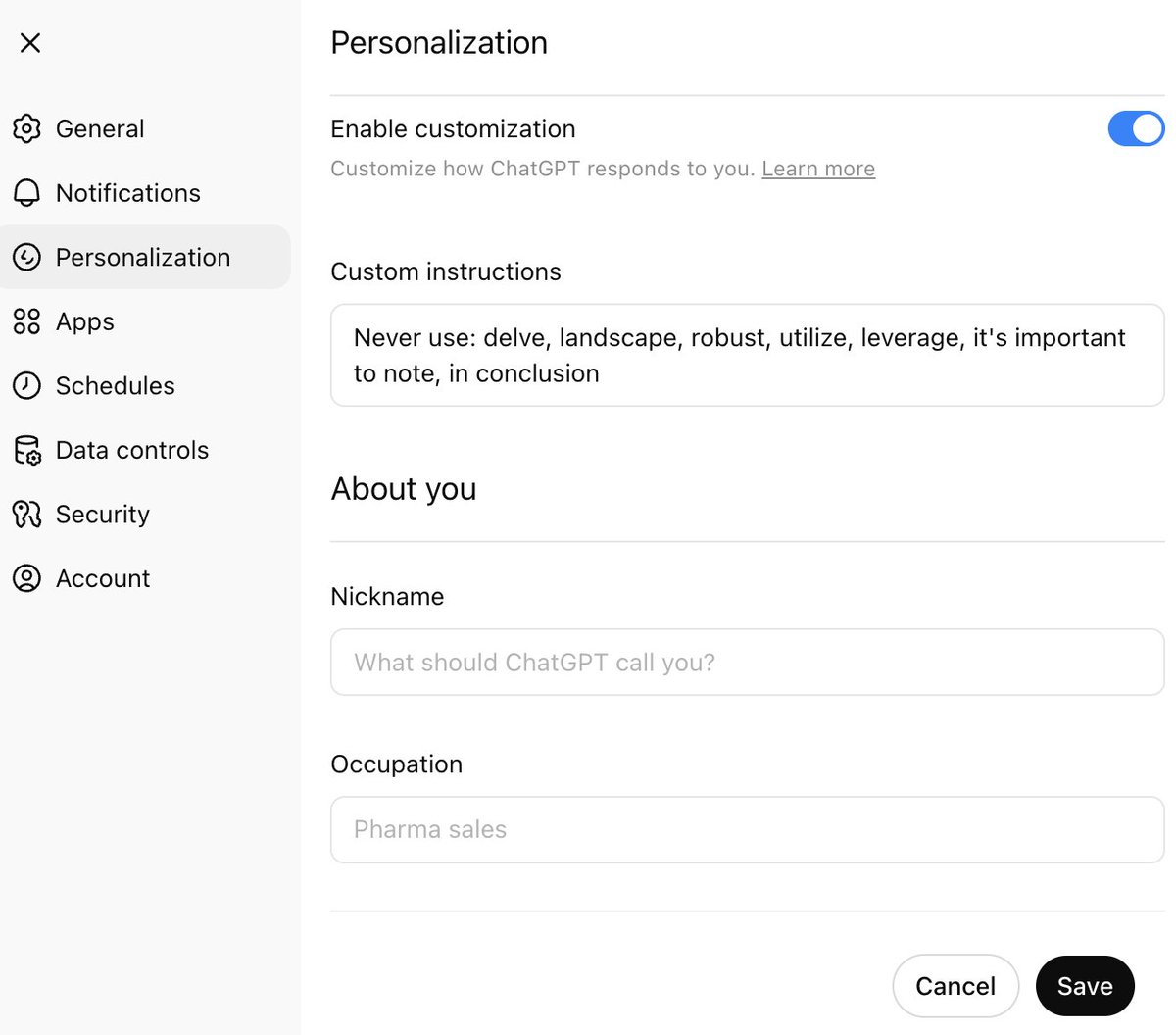

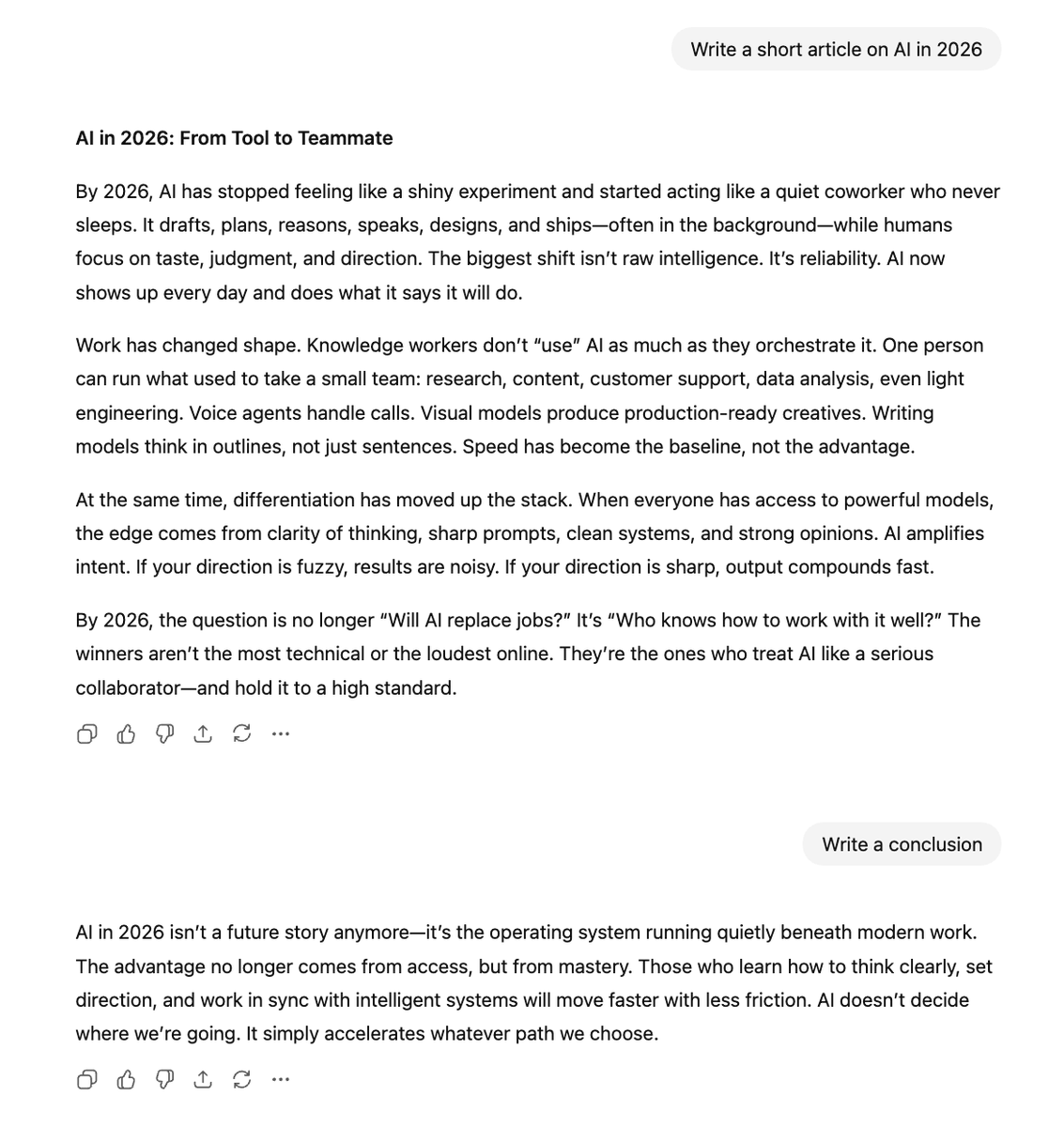

PATTERN 1: Tell ChatGPT what NOT to do
