Prompt engineering "experts" are teaching you the wrong way.

They overcomplicate what's actually dead simple.

I reverse-engineered how the best AI researchers actually prompt.

Here's the complete guide you can follow to become a pro AI user:

WEEK 1-2: STOP BEING VAGUE

Bad prompt: "Help me with marketing"

Good prompt: "Write 5 email subject lines for a project management SaaS launching to small business owners. Make them curiosity-driven and under 50 characters."

See the difference? Specific request, clear audience, exact format. Practice this for 30 minutes daily.

THE CONTEXT-ROLE-TASK-FORMAT FRAMEWORK

This is your new religion. Every prompt should have:

Context: What's the situation?

Role: Who should the AI be?

Task: What exactly do you want?

Format: How should it look?

Example: "You're a startup advisor (role) helping a B2B SaaS with 1000 users (context). Write 3 pricing strategy options (task) as: Strategy name, target customer, price point, reasoning (format)."
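If you reuse the framework a lot, it can help to fill the four slots from code instead of retyping them. Here's a minimal sketch: `PromptSpec` and `build_prompt` are names I made up for illustration, and the sentence pattern just mirrors the example above.

```python
from dataclasses import dataclass


@dataclass
class PromptSpec:
    """The four parts of a Context-Role-Task-Format prompt (hypothetical helper)."""
    role: str
    context: str
    task: str
    output_format: str


def build_prompt(spec: PromptSpec) -> str:
    """Join the four parts into one prompt string."""
    return (
        f"You're {spec.role} helping {spec.context}. "
        f"{spec.task} as: {spec.output_format}."
    )


pricing_prompt = build_prompt(
    PromptSpec(
        role="a startup advisor",
        context="a B2B SaaS with 1000 users",
        task="Write 3 pricing strategy options",
        output_format="Strategy name, target customer, price point, reasoning",
    )
)
print(pricing_prompt)
```

Keeping the parts as separate fields makes it obvious when one of them is missing.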

WEEK 3-4: MASTER FEW-SHOT PROMPTING

Show, don't tell. Give the AI 2-3 examples of what you want.

"Write Instagram captions like these:

Example 1: Just launched our new feature. Beta users are calling it 'life-changing.' Sometimes the best validation comes from the people actually using your product.

Example 2: Spent 6 months building something nobody wanted. Painful lesson but necessary. Now I validate ideas in 48 hours, not 6 months.

Now write one about: [your topic]"

I use few-shot for everything. Social posts, emails, code reviews, strategy docs. The AI learns your exact style instead of guessing.

Your outputs become 10x better because you're showing the AI, right in the prompt, what good looks like for YOU specifically.
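A few-shot prompt is just an instruction, a handful of numbered examples, and the new request. Here's a rough sketch of assembling one: `few_shot_prompt` is a made-up helper, and the layout copies the caption prompt above.

```python
def few_shot_prompt(instruction: str, examples: list[str], topic: str) -> str:
    """Build a few-shot prompt: instruction, numbered examples, then the new request."""
    if not examples:
        raise ValueError("few-shot prompting needs at least one example")
    lines = [instruction, ""]
    for number, example in enumerate(examples, start=1):
        lines.append(f"Example {number}: {example}")
        lines.append("")
    lines.append(f"Now write one about: {topic}")
    return "\n".join(lines)


caption_prompt = few_shot_prompt(
    "Write Instagram captions like these:",
    [
        "Just launched our new feature. Beta users are calling it 'life-changing.' "
        "Sometimes the best validation comes from the people actually using your product.",
        "Spent 6 months building something nobody wanted. Painful lesson but necessary. "
        "Now I validate ideas in 48 hours, not 6 months.",
    ],
    topic="[your topic]",
)
print(caption_prompt)
```

Swap in your own examples and topic; the examples do the work of describing your style.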

WEEK 5-6: CHAIN COMPLEX PROMPTS

Stop trying to do everything in one prompt. Break big tasks into steps.

Prompt 1: "Analyze the project management software market. Focus on: market size, key players, pricing trends, customer pain points."

Prompt 2: "Based on that analysis, identify 3 underserved niches in project management software."

Prompt 3: "For each niche, create a basic product concept with key features and go-to-market strategy."

Chaining lets you handle complex work that would overwhelm a single prompt. Each step builds on the last. It's like having a team of specialists working in sequence.

I use this approach for business plans, technical architectures, content strategies.
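In a chat window the chain happens naturally because the history carries over. If you script it, you have to pass each answer forward yourself. Here's a minimal sketch under some assumptions: `run_chain` is a hypothetical helper, `ask` stands for whatever function sends a prompt to your model, and `fake_model` is a stand-in so the example runs without any API.

```python
from typing import Callable


def run_chain(steps: list[str], ask: Callable[[str], str]) -> list[str]:
    """Run prompts in order, feeding each answer into the next prompt."""
    answers: list[str] = []
    previous = ""
    for step in steps:
        # The first step goes out as-is; later steps carry the previous answer.
        prompt = step if not previous else f"Previous answer:\n{previous}\n\n{step}"
        previous = ask(prompt)
        answers.append(previous)
    return answers


def fake_model(prompt: str) -> str:
    """Stand-in for a real model call; only reports the size of the prompt it saw."""
    return f"[answer to a {len(prompt)}-character prompt]"


steps = [
    "Analyze the project management software market. Focus on: market size, "
    "key players, pricing trends, customer pain points.",
    "Based on that analysis, identify 3 underserved niches in project management software.",
    "For each niche, create a basic product concept with key features and "
    "go-to-market strategy.",
]
for answer in run_chain(steps, fake_model):
    print(answer)
```

This version only forwards the most recent answer. For longer chains you may want to forward every earlier answer instead.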

THE MEGA PROMPT TEMPLATE

For complex one-shot requests, use this structure:

"ROLE: You are [specific expert]

CONTEXT: [situation, constraints, background]

TASK: [what you want done]

REQUIREMENTS: [specific criteria, must-haves]

FORMAT: [exact structure you want]

EXAMPLES: [show 1-2 if relevant]

CONSTRAINTS: [word count, tone, limitations]"

This template works for everything from technical docs to creative campaigns.
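You rarely need all seven sections every time. Here's a sketch of filling the template from code and skipping whatever you leave out. `mega_prompt` and `SECTION_ORDER` are illustrative names, and requiring a TASK is my own assumption, not a rule of the template.

```python
SECTION_ORDER = [
    "ROLE", "CONTEXT", "TASK", "REQUIREMENTS", "FORMAT", "EXAMPLES", "CONSTRAINTS",
]


def mega_prompt(**sections: str) -> str:
    """Render the filled-in sections in template order, skipping any left out."""
    unknown = set(sections) - {name.lower() for name in SECTION_ORDER}
    if unknown:
        raise ValueError(f"unknown sections: {sorted(unknown)}")
    if not sections.get("task"):
        raise ValueError("a prompt needs at least a TASK section")
    parts = []
    for name in SECTION_ORDER:
        value = sections.get(name.lower())
        if value:
            parts.append(f"{name}: {value}")
    return "\n\n".join(parts)


launch_prompt = mega_prompt(
    role="You are a SaaS copywriter",
    task="Write a launch email",
    constraints="Under 150 words",
)
print(launch_prompt)
```

The sections always come out in the same order, no matter how you pass them in.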

WEEK 7-8: BUILD YOUR PROMPT LIBRARY

Save your best prompts. I have 50+ templates for different scenarios:

1. Client onboarding questions

2. Content brainstorming

3. Code review checklist

4. Strategy analysis

5. Email responses

And more.

Don't reinvent the wheel. Build once, use forever. Your productivity compounds when you stop starting from scratch every time.

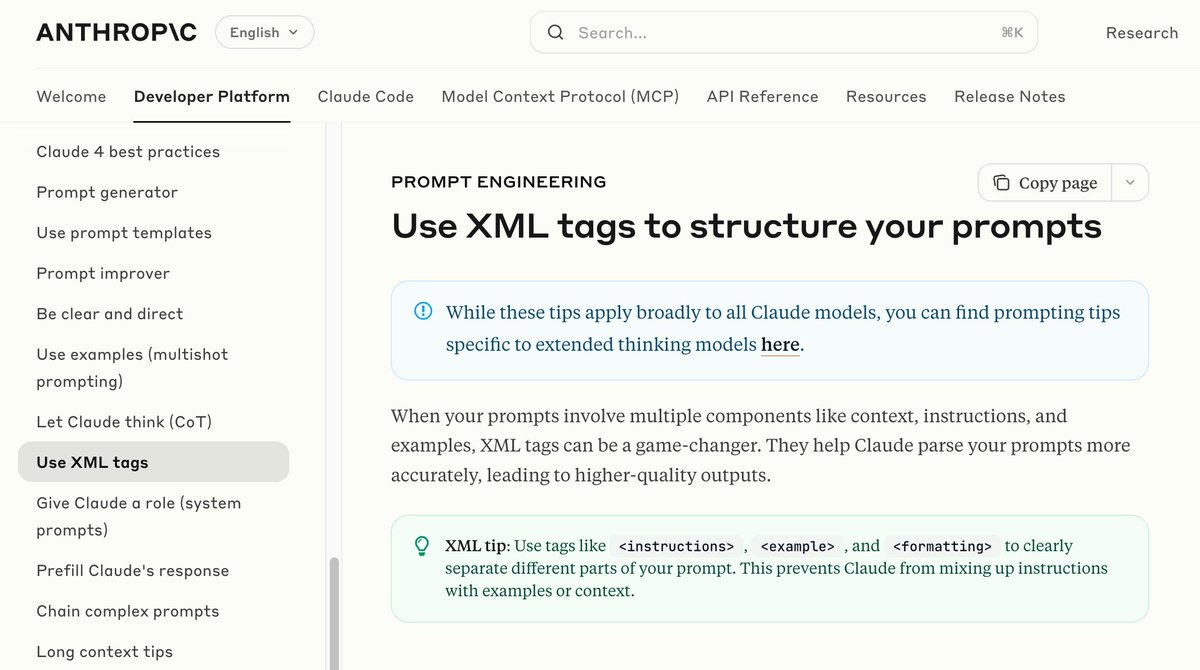

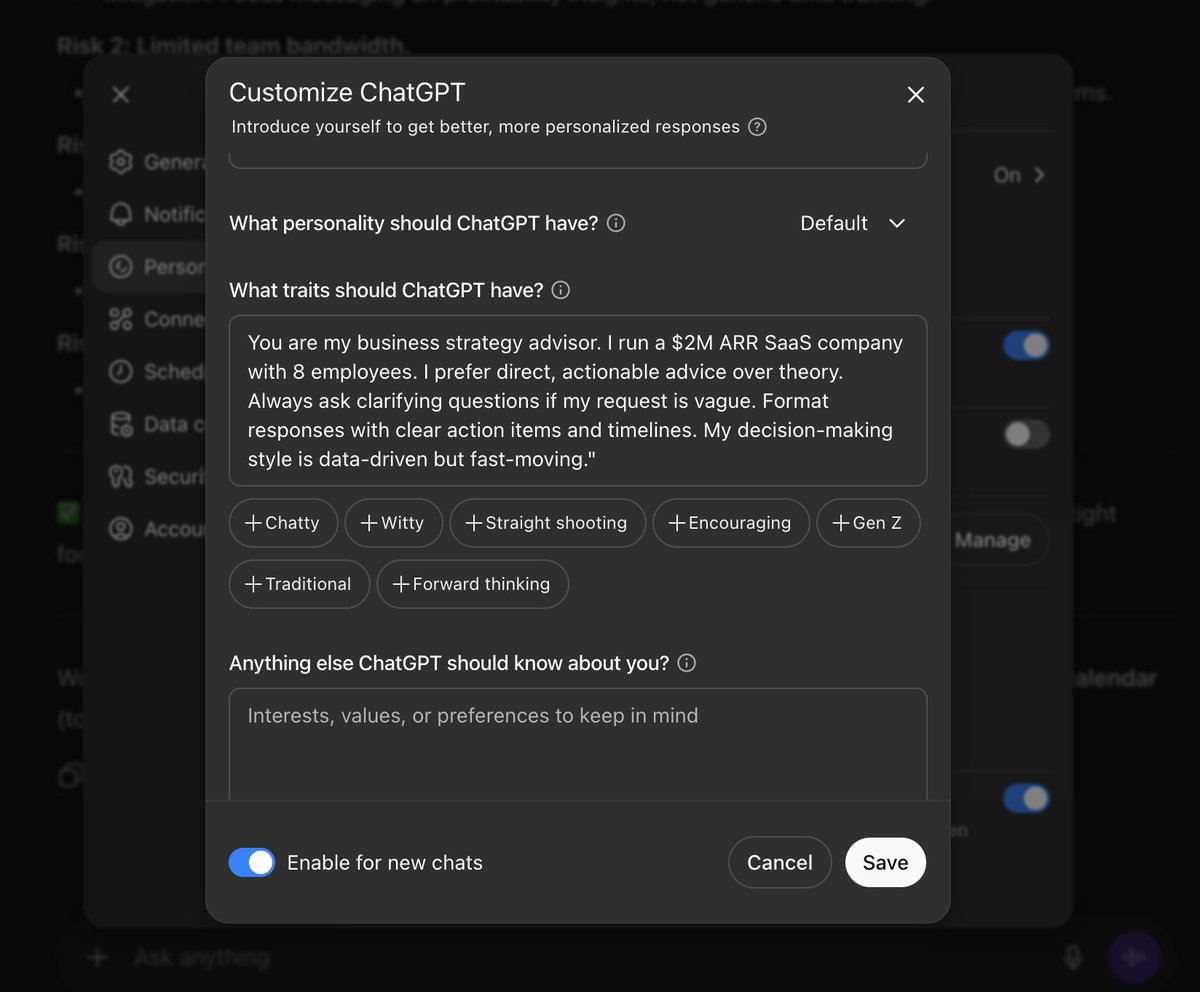

WEEK 9-10: ADVANCED TECHNIQUES

1. System instructions: Set permanent context so every chat starts with your preferences

2. Multi-modal: Upload images, PDFs, spreadsheets for analysis

3. Tool integration: Connect AI to Zapier, APIs, custom workflows

4. Iterative refinement: Ask AI to improve its own outputs

Example: "Review your last response. Make it more concise and add specific examples for each point."
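System instructions and iterative refinement combine nicely in a script. The sketch below assumes the role/content message list that many chat APIs use. It calls no real API: `ask` is a placeholder for your own model call, and `refine` is a hypothetical helper.

```python
from typing import Callable

Message = dict[str, str]

REFINE_REQUEST = (
    "Review your last response. Make it more concise and add specific "
    "examples for each point."
)


def refine(
    system: str,
    request: str,
    ask: Callable[[list[Message]], str],
    rounds: int = 1,
) -> list[Message]:
    """Get a first draft, then ask the model to improve it `rounds` times."""
    messages: list[Message] = [
        {"role": "system", "content": system},  # standing preferences
        {"role": "user", "content": request},
    ]
    messages.append({"role": "assistant", "content": ask(messages)})
    for _ in range(rounds):
        messages.append({"role": "user", "content": REFINE_REQUEST})
        messages.append({"role": "assistant", "content": ask(messages)})
    return messages


def fake_model(messages: list[Message]) -> str:
    """Stand-in for a real model call; reports how much history it received."""
    return f"draft written after seeing {len(messages)} messages"


history = refine(
    system="Write in plain English. Keep answers under 200 words.",
    request="Explain few-shot prompting.",
    ask=fake_model,
    rounds=2,
)
print(history[-1]["content"])
```

The last assistant message in `history` is the most refined draft.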

THE DEBUGGING FRAMEWORK

When prompts fail (they will), use this:

"What information do you need to give a better answer?"

"Break down this task into smaller steps"

"Show me 3 different approaches to this problem"

"What assumptions are you making that might be wrong?"

Most prompt failures happen because of missing context or unclear expectations.

WHAT GOOD PROMPTING UNLOCKS

Content creation: I generate 2 weeks of social posts in 45 minutes

Business analysis: Complex market research in hours, not days

Learning: Master new skills with AI as a personal tutor

Decision making: See angles and possibilities I'd miss alone

Time leverage: My thinking time becomes 10x more valuable

The ROI is insane once you get good at this.

Most people quit after a few bad results.

They expect perfect outputs without practicing the skill. Good prompting takes deliberate practice. But unlike most skills, this one compounds: every hour you invest keeps paying back in productivity.

The people who master this early will have an unfair advantage for decades.

YOUR 30-DAY CHALLENGE

Days 1-7: Practice specific, clear requests

Days 8-14: Use Context-Role-Task-Format for everything

Days 15-21: Build few-shot examples for your common tasks

Days 22-30: Start chaining complex prompts

Track your best prompts. Share what works.

This skill will change your career.

The question isn't whether AI will replace jobs. It's whether people who are good at AI will replace people who aren't.

I hope you've found this thread helpful.

Follow me @alxnderhughes for more.

Like/Repost the quote below if you can:

https://twitter.com/1927203051263184896/status/1970424490862825781
