How to get a URL link in the X (Twitter) app

1. The Power Dynamics Decoder (Law of Awareness)

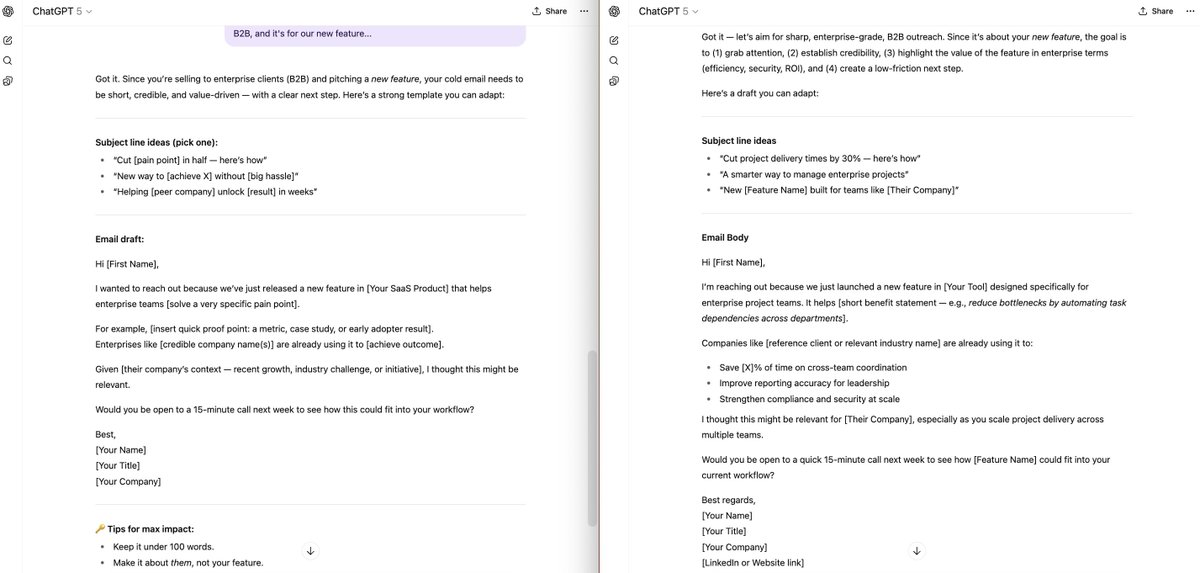

1. SaaS Opportunity Mapper

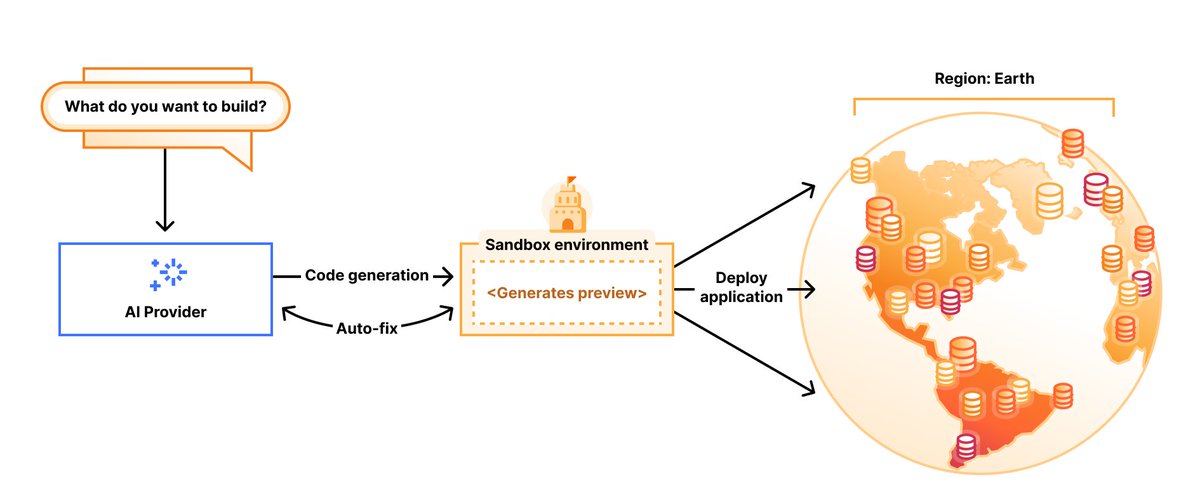

Here’s how ACE works 👇

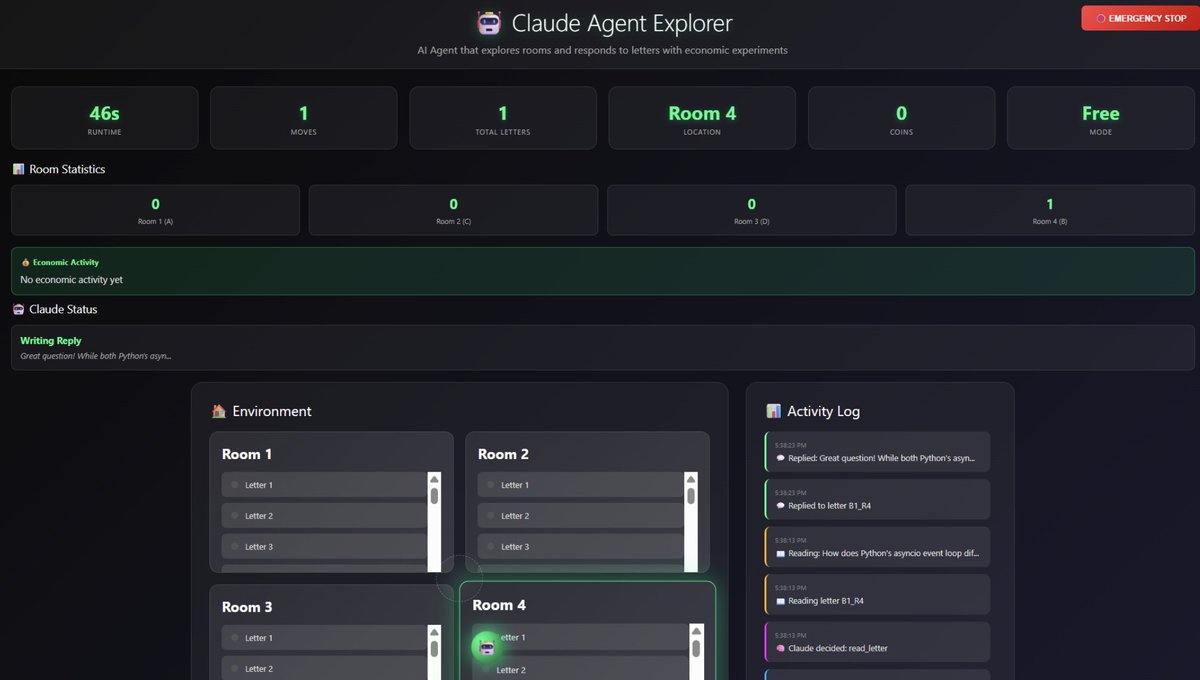

Here's what just happened:

Virtual world test:

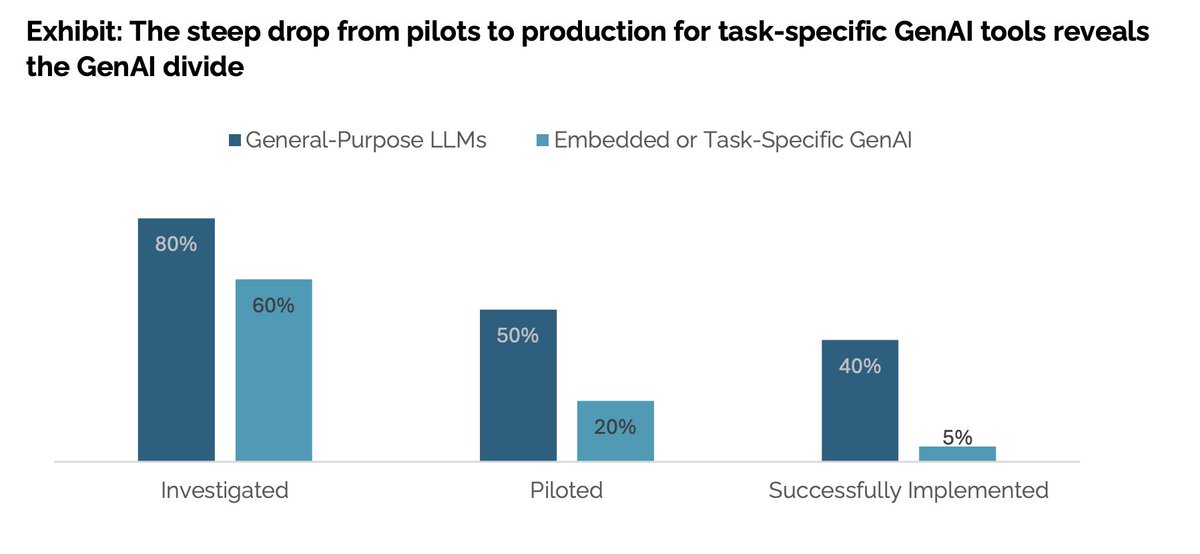

MIT analyzed over 300 AI projects, interviewed 52 organizations, and surveyed 153 senior leaders.

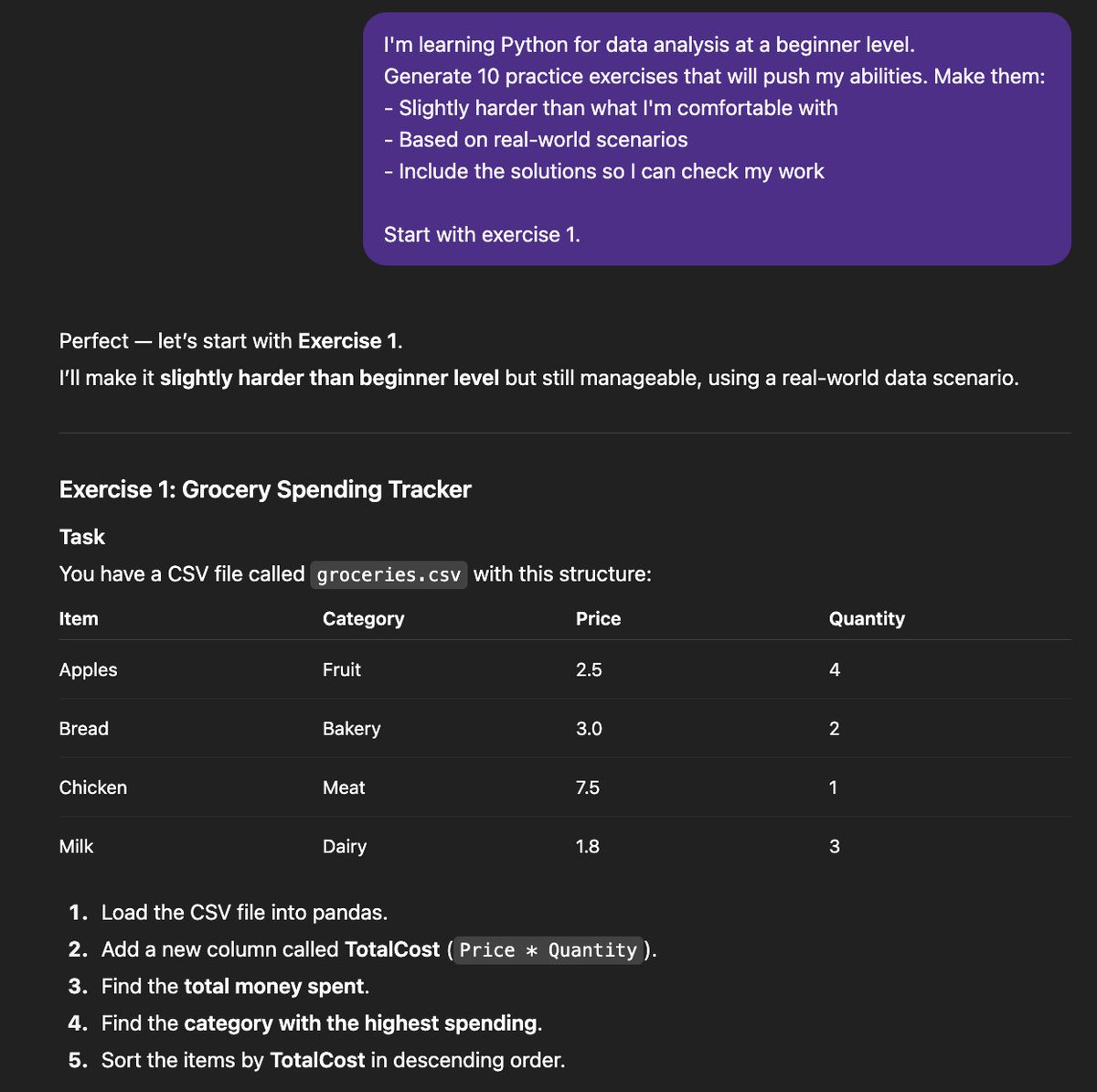

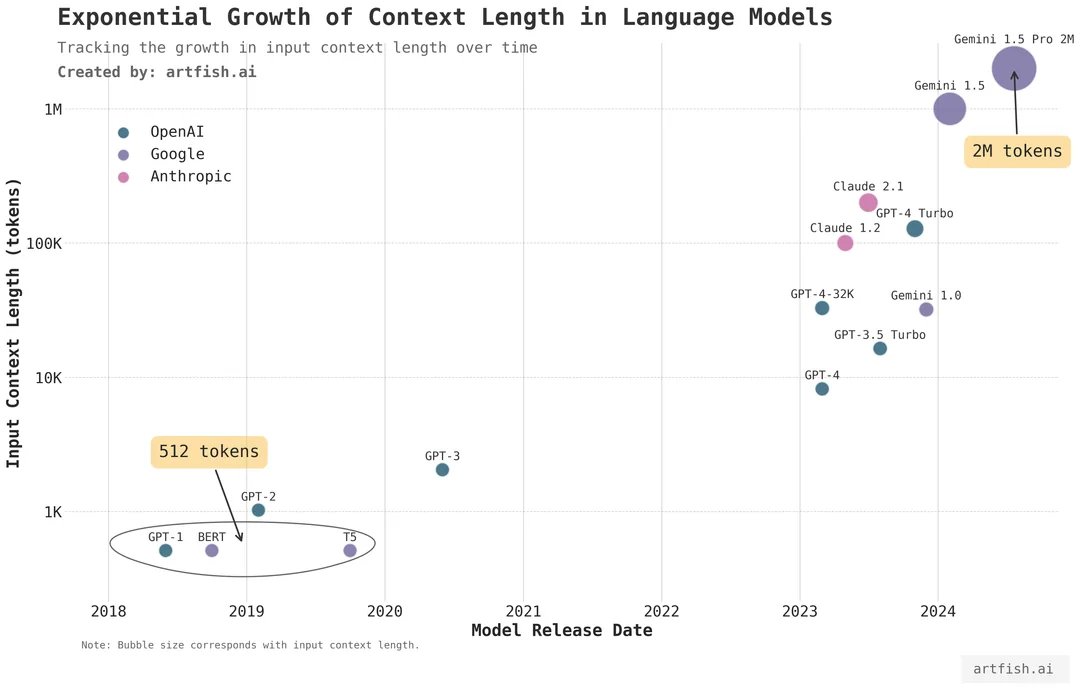

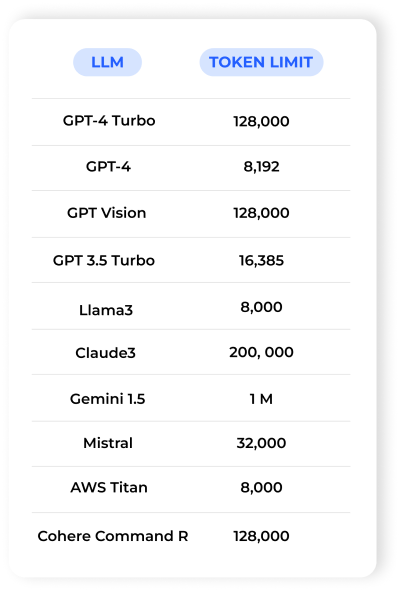

Every Large Language Model (LLM) has a token limit.

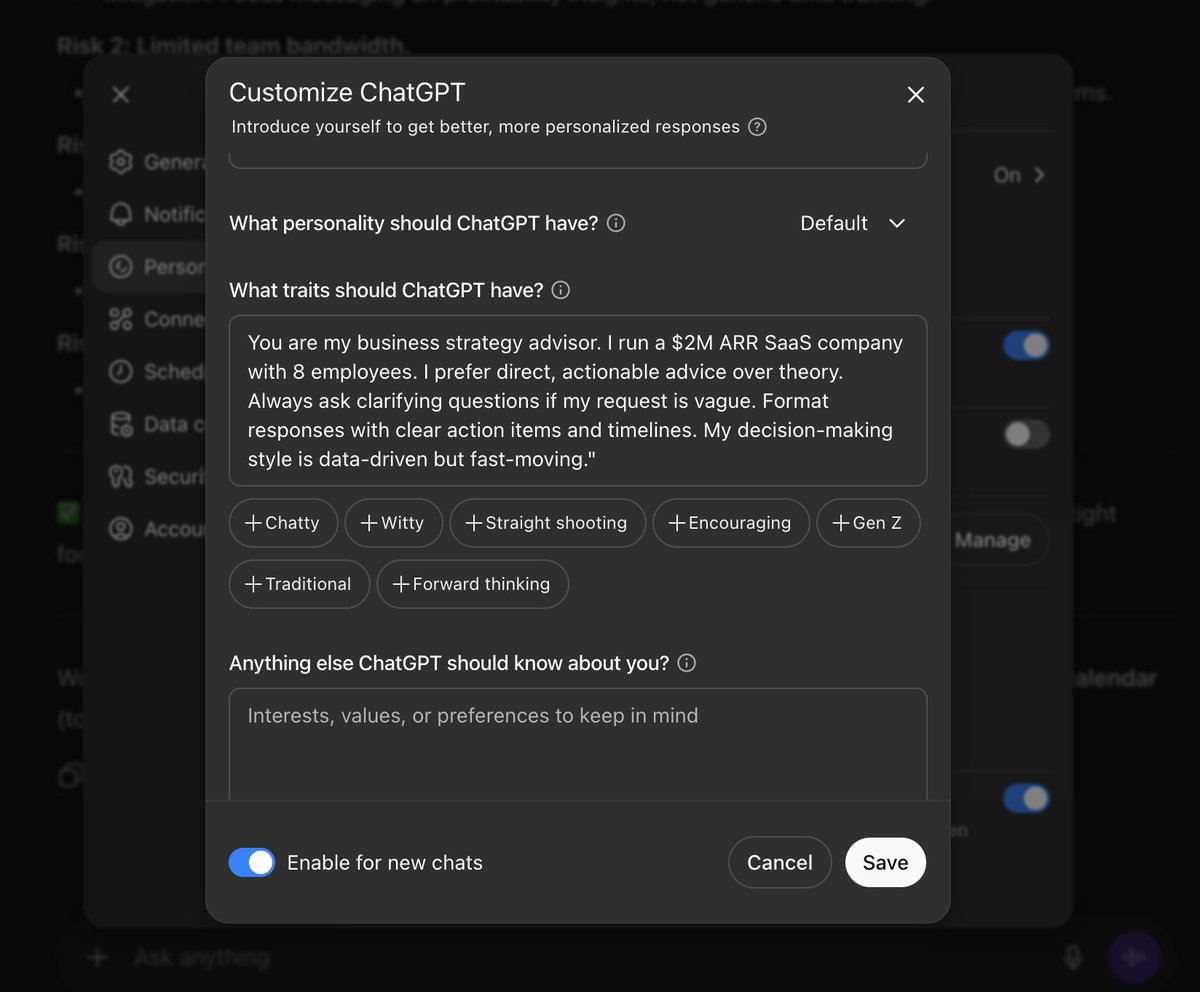

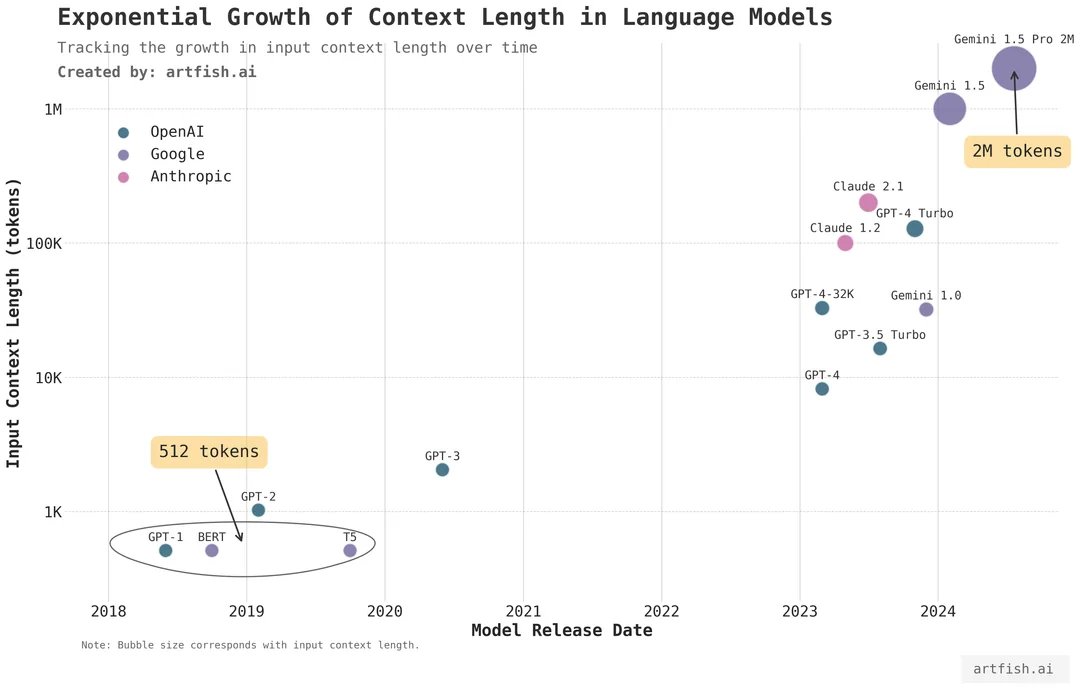

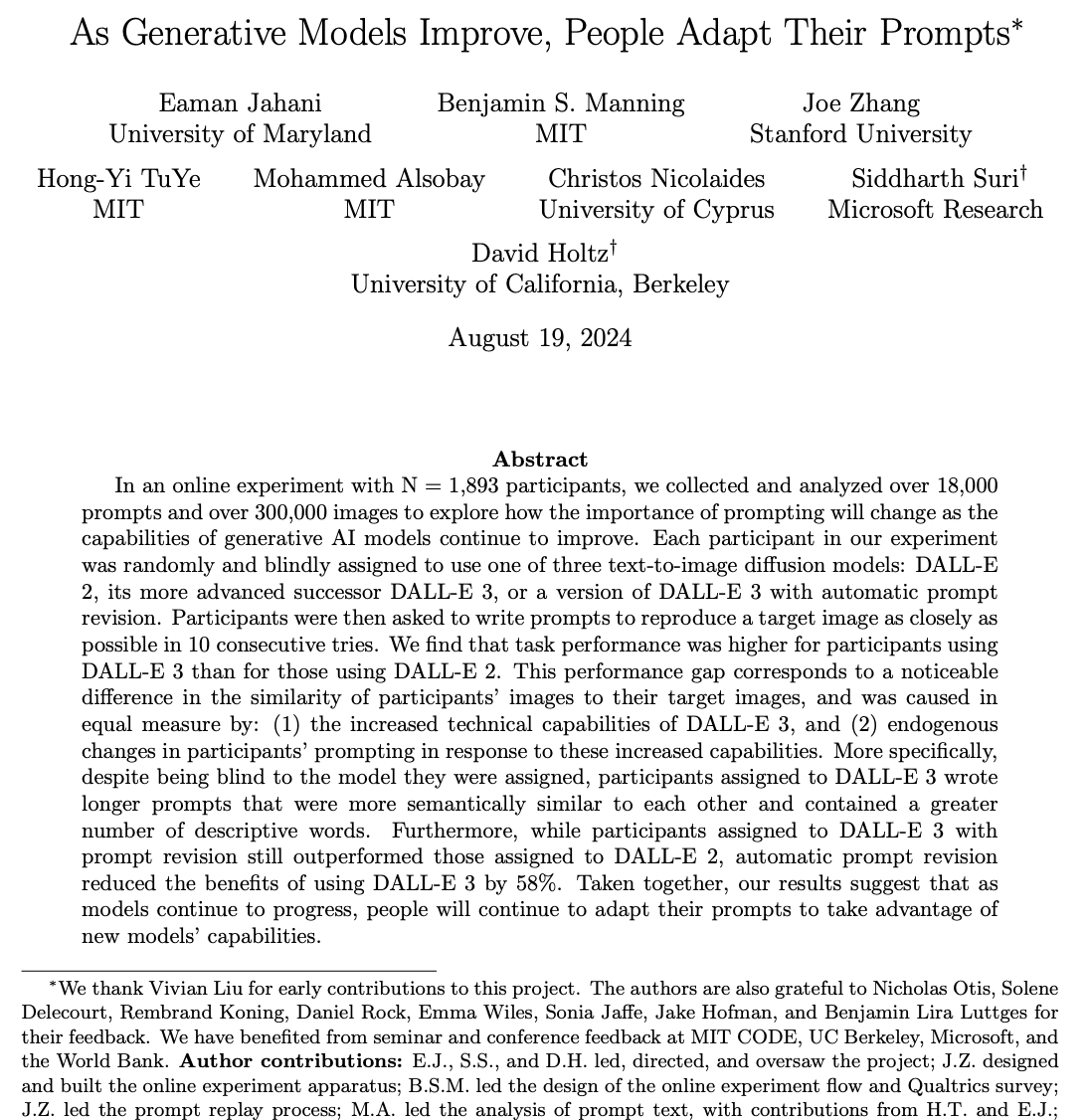

When people upgrade to more powerful AI, they expect better results.
