In this small thread, I'll break down how you can create full-length movies or anime with Grok 4 Imagine.

This entire video was created with Grok 4 Imagine and a video editor.

1/n

The first thing you'll want to do is come up with a prompt for your 'characters', based on a storyboard you have created.

I built a free-to-use app, grokprompt.fun, that uses AI to turn a generic prompt or image into an optimized prompt that works well in Grok Imagine.

2/n

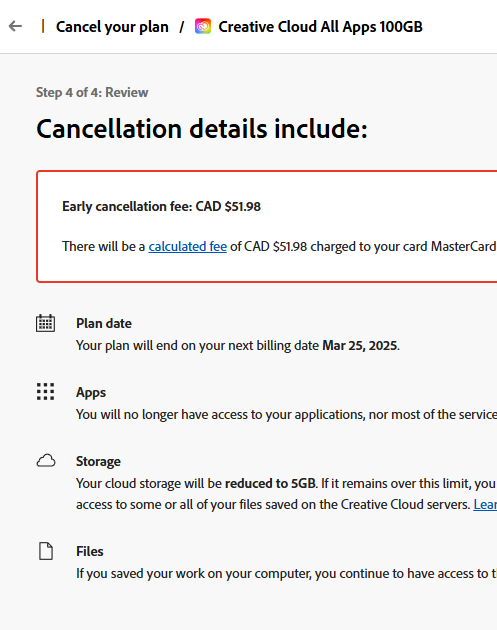

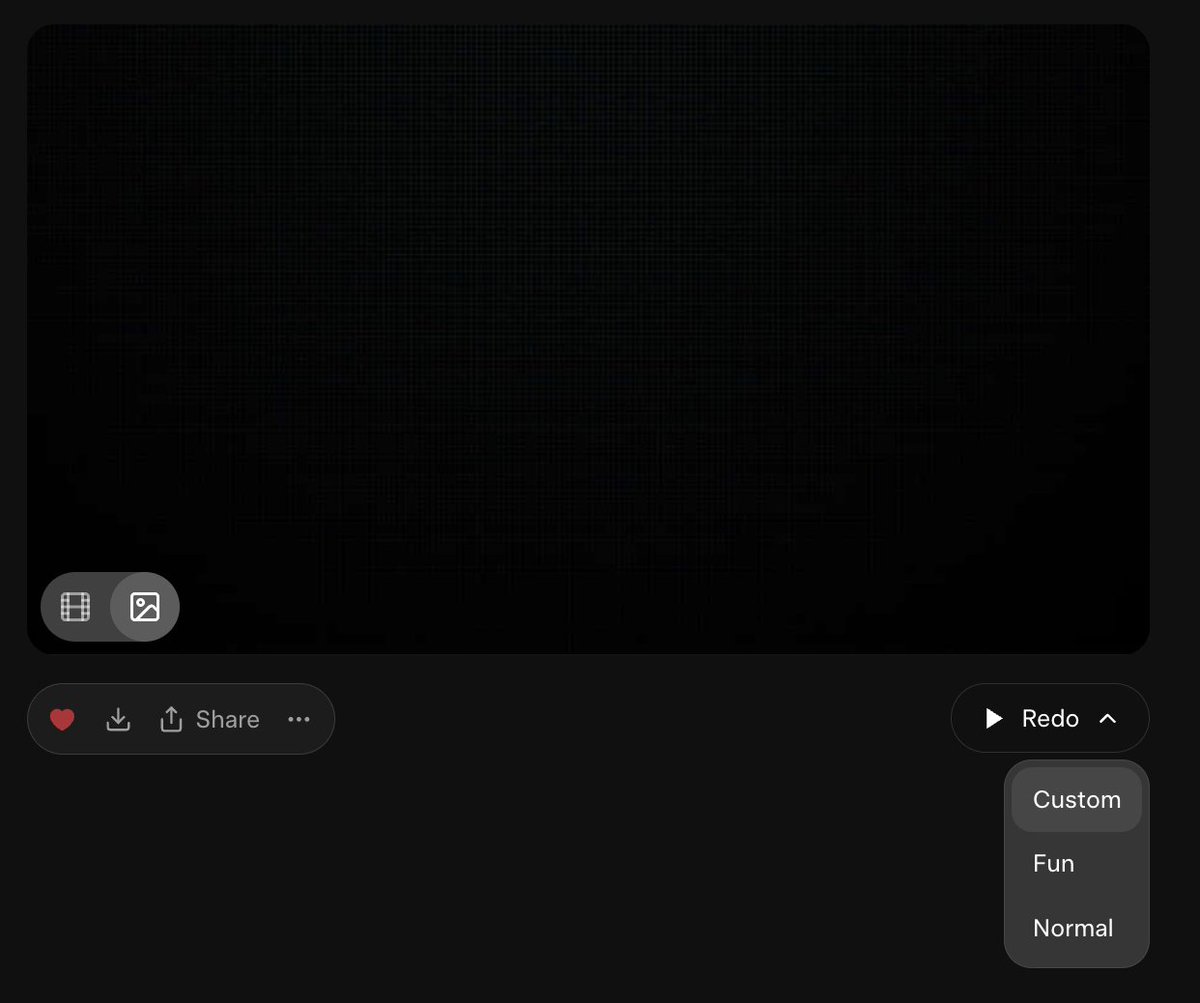

Once you have your prompt, put this black image into Grok Imagine and paste the prompt from grokprompt.fun into the custom section. This will generate your first animated scene.

3/n

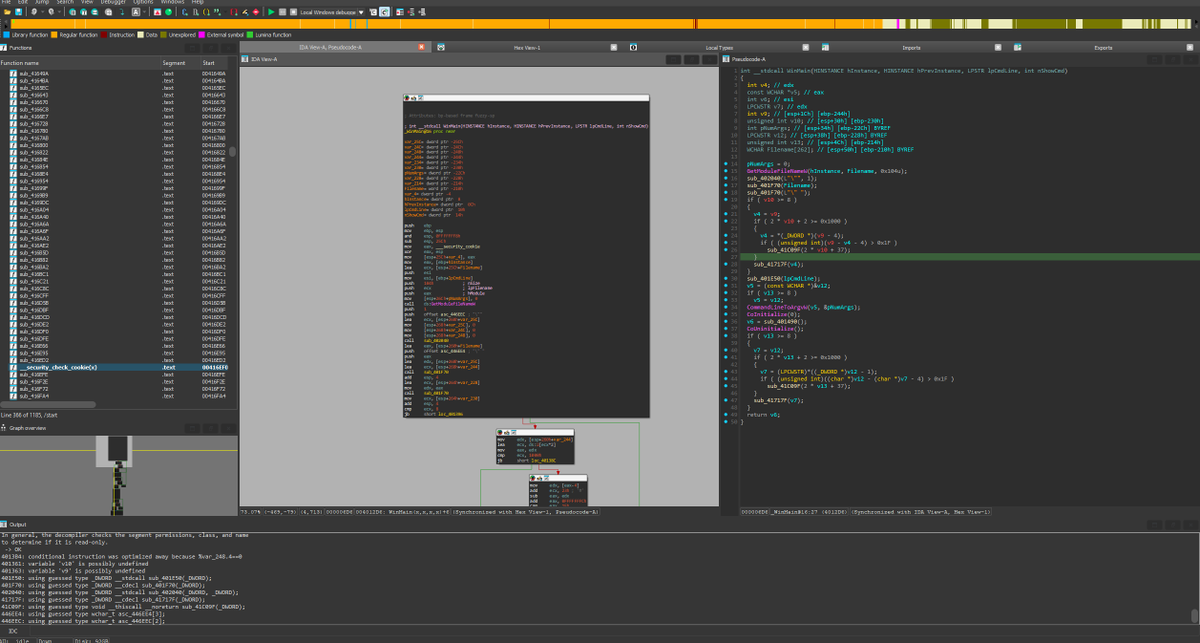

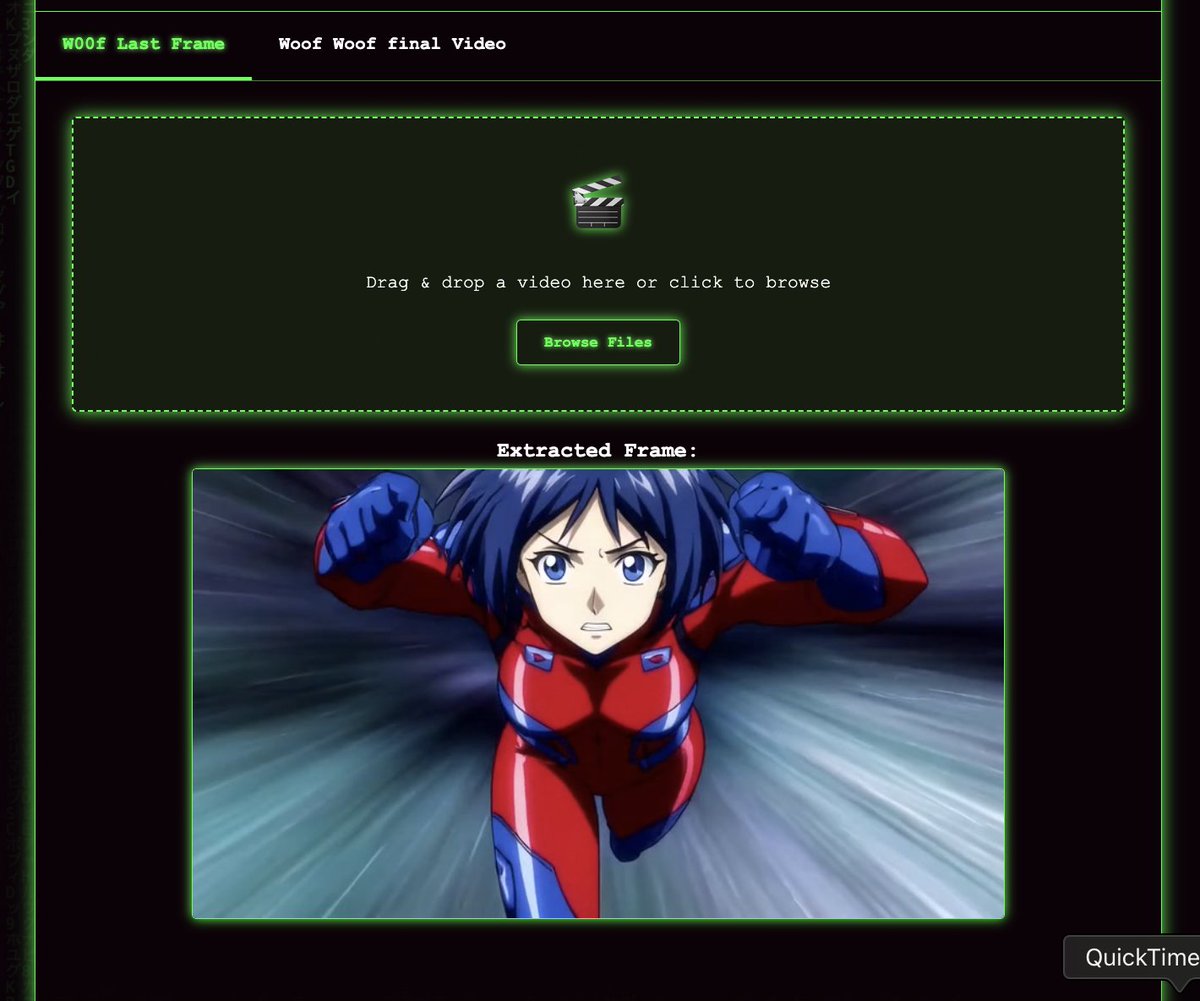
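If you'd rather make a plain black starting image yourself than download one, here is a minimal sketch in Python that writes a solid black PNG using only the standard library. The 1280x720 size and the file name `black.png` are assumptions; pick whatever aspect ratio you want for your film.

```python
import struct
import zlib

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"


def png_chunk(tag: bytes, data: bytes) -> bytes:
    """Wrap data in a PNG chunk: length, tag, data, then CRC32 of tag + data."""
    crc = zlib.crc32(tag + data) & 0xFFFFFFFF
    return struct.pack(">I", len(data)) + tag + data + struct.pack(">I", crc)


def black_png(width: int, height: int) -> bytes:
    """Return the bytes of a solid black 8-bit RGB PNG."""
    # IHDR fields: width, height, bit depth 8, color type 2 (RGB),
    # compression 0, filter 0, interlace 0.
    ihdr = struct.pack(">IIBBBBB", width, height, 8, 2, 0, 0, 0)
    # Each scanline starts with filter byte 0, followed by 3 zero bytes per pixel.
    scanline = b"\x00" + b"\x00" * (width * 3)
    idat = zlib.compress(scanline * height)
    return (
        PNG_SIGNATURE
        + png_chunk(b"IHDR", ihdr)
        + png_chunk(b"IDAT", idat)
        + png_chunk(b"IEND", b"")
    )


if __name__ == "__main__":
    # Hypothetical size and file name; adjust to your target aspect ratio.
    with open("black.png", "wb") as f:
        f.write(black_png(1280, 720))
```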

Now, head over to egod.dev, a webpage that @nfkmobile built. Use it to extract the last frame of your video, then use that image in Grok Imagine as the starting point for the next scene of your video.

4/n

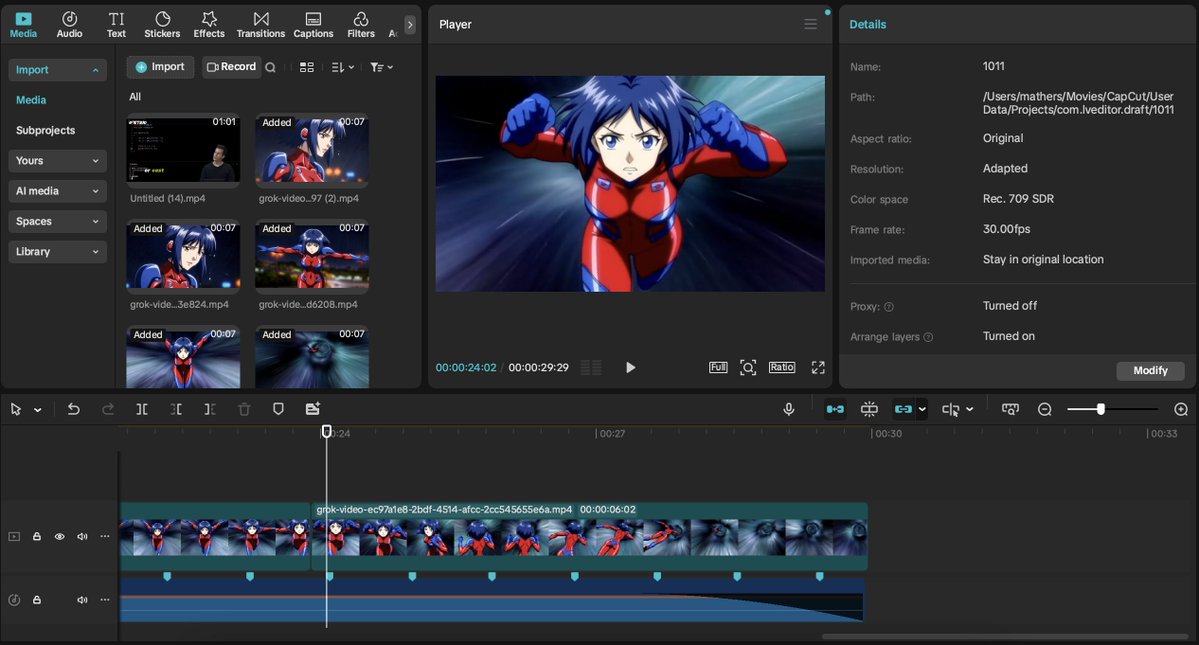
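If you prefer to work locally, the ffmpeg command-line tool can do something similar. This is an alternative, not a description of how egod.dev works: a minimal Python sketch, assuming ffmpeg is installed and on your PATH, that starts reading one second before the end of a clip (`-sseof -1.0`) and keeps overwriting a single image (`-update 1`), so the file left on disk is the final decoded frame. The file names are hypothetical.

```python
import os
import shutil
import subprocess


def last_frame_cmd(video_path: str, image_path: str, seconds_from_end: float = 1.0) -> list[str]:
    """Build an ffmpeg command that saves the final frame of video_path."""
    return [
        "ffmpeg",
        "-y",                              # overwrite image_path if it exists
        "-sseof", f"-{seconds_from_end}",  # start reading this many seconds before the end
        "-i", video_path,
        "-update", "1",                    # keep rewriting one image; the last frame wins
        image_path,
    ]


if __name__ == "__main__":
    # Hypothetical file names for one scene downloaded from Grok Imagine.
    cmd = last_frame_cmd("scene1.mp4", "scene1_last.png")
    if shutil.which("ffmpeg") and os.path.exists("scene1.mp4"):
        subprocess.run(cmd, check=True)
    else:
        print("Would run:", " ".join(cmd))
```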

Finally, you'll want a video editor such as CapCut, which is easy to use even if you have no editing experience. Use it to assemble and edit your scenes; it also includes royalty-free music you can use with your art on X.

5/n
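CapCut is the simplest route, but if you only need to join your scene clips end to end before adding music, ffmpeg's concat demuxer can do it from the command line. A minimal Python sketch, assuming ffmpeg is installed and that every clip shares the same codec, resolution, and frame rate (needed for `-c copy` to join them without re-encoding); the clip and output names are hypothetical.

```python
import os
import shutil
import subprocess


def concat_list(clip_paths: list[str]) -> str:
    """Build the text of an ffmpeg concat-demuxer list file."""
    lines = []
    for path in clip_paths:
        # A single quote inside a quoted path is written as '\'' in this format.
        escaped = path.replace("'", "'\\''")
        lines.append(f"file '{escaped}'")
    return "\n".join(lines) + "\n"


def concat_cmd(list_file: str, output_path: str) -> list[str]:
    """Build an ffmpeg command that joins the listed clips without re-encoding."""
    return [
        "ffmpeg", "-y",
        "-f", "concat",
        "-safe", "0",   # allow arbitrary paths in the list file
        "-i", list_file,
        "-c", "copy",   # stream copy; requires matching codec parameters
        output_path,
    ]


if __name__ == "__main__":
    clips = ["scene1.mp4", "scene2.mp4", "scene3.mp4"]  # hypothetical names
    cmd = concat_cmd("scenes.txt", "film.mp4")
    if shutil.which("ffmpeg") and all(os.path.exists(c) for c in clips):
        with open("scenes.txt", "w") as f:
            f.write(concat_list(clips))
        subprocess.run(cmd, check=True)
    else:
        print(concat_list(clips), end="")
        print("Would run:", " ".join(cmd))
```

The joined file can then go into CapCut for music and final touches.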

You can continue the previous steps and add as many scenes as you require for your film!

Download and use this clip on the eGOD webpage for practice.

Just Grok It 💫