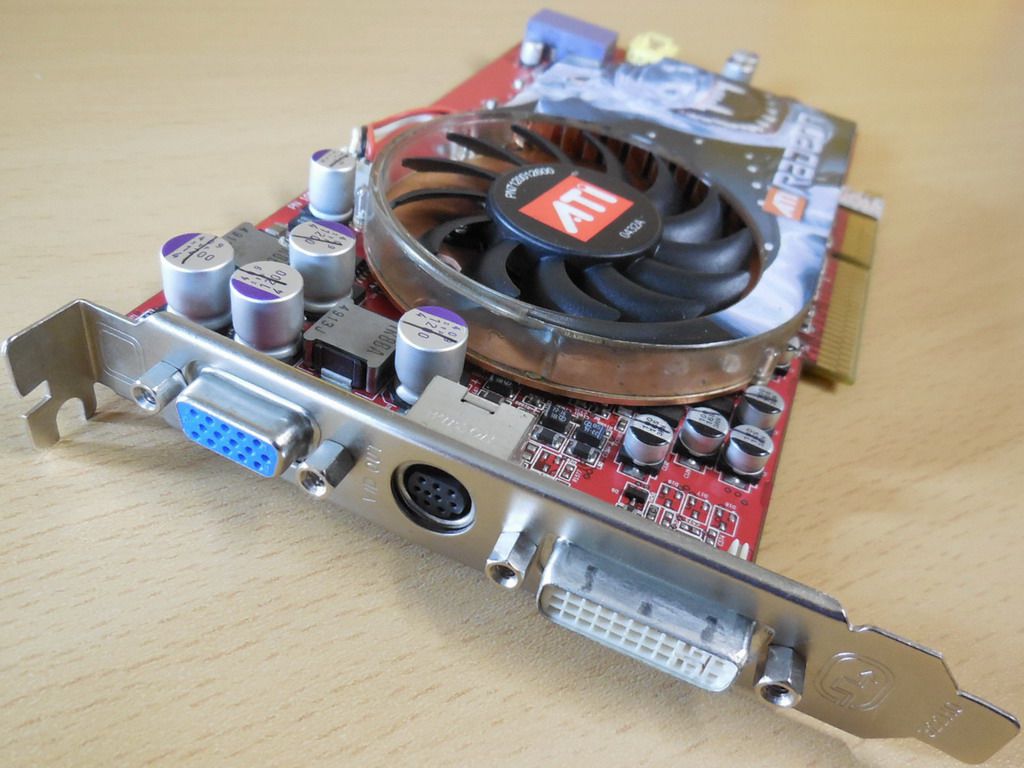

GPU computing before CUDA was *weird*.

Memory primitives were graphics shaped, not computer science shaped.

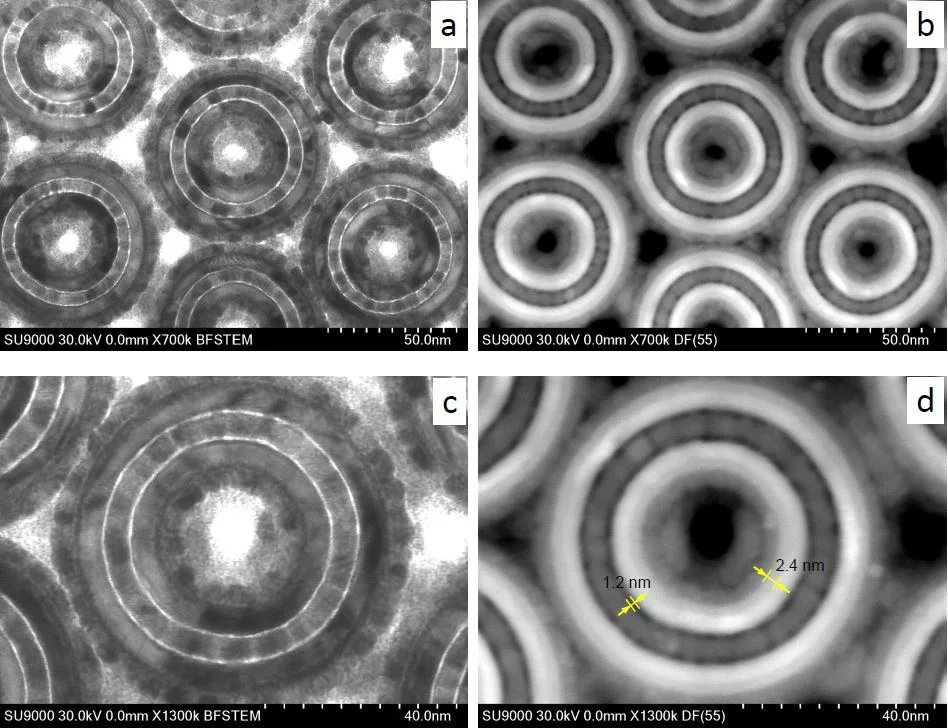

Want to do math on an array? Store it as an RGBA texture.

Use a fragment shader for the processing, then *paint* the result onto a big rectangle.
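To make the trick concrete, here is a rough CPU-side sketch in Python of the same idea: pack an array into RGBA texels, then run a "shader" once per pixel of the output rectangle. Every name here (`to_texture`, `draw_fullscreen_quad`, `add_shader`) is made up for illustration and is not part of any real graphics API; an actual pre-CUDA pipeline would do this through OpenGL or DirectX calls.

```python
import math

def to_texture(values, width):
    """Pack a flat list of floats into rows of RGBA texels (4 floats per texel)."""
    texels_needed = math.ceil(len(values) / 4)
    height = math.ceil(texels_needed / width)
    # Pad with zeros so the last texel and the last row are complete.
    padded = list(values) + [0.0] * (width * height * 4 - len(values))
    texels = [tuple(padded[i:i + 4]) for i in range(0, len(padded), 4)]
    return [texels[r * width:(r + 1) * width] for r in range(height)]

def from_texture(texture, count):
    """Flatten a texture back into the first `count` floats."""
    flat = [c for row in texture for texel in row for c in texel]
    return flat[:count]

def draw_fullscreen_quad(shader, *inputs):
    """'Paint the rectangle': call the shader once per output pixel."""
    height, width = len(inputs[0]), len(inputs[0][0])
    return [[shader(x, y, *inputs) for x in range(width)] for y in range(height)]

def add_shader(x, y, tex_a, tex_b):
    """Hypothetical fragment shader: channel-wise add of two input textures."""
    a, b = tex_a[y][x], tex_b[y][x]
    return tuple(ca + cb for ca, cb in zip(a, b))

a = [float(i) for i in range(10)]
b = [10.0 * i for i in range(10)]
tex_a = to_texture(a, width=2)
tex_b = to_texture(b, width=2)
result = from_texture(draw_fullscreen_quad(add_shader, tex_a, tex_b), len(a))
```

The padding is the graphics-shaped part: ten floats don't fit evenly into a 2-texel-wide image, so the array gets rounded up to a full rectangle and trimmed again on readback.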

As you get into the more theoretical side of computer science, you start to realize almost *anything* can produce useful compute.

You just have to get creative with how it’s stored.

The math might be stored in a weird box, but the representation is still valid.

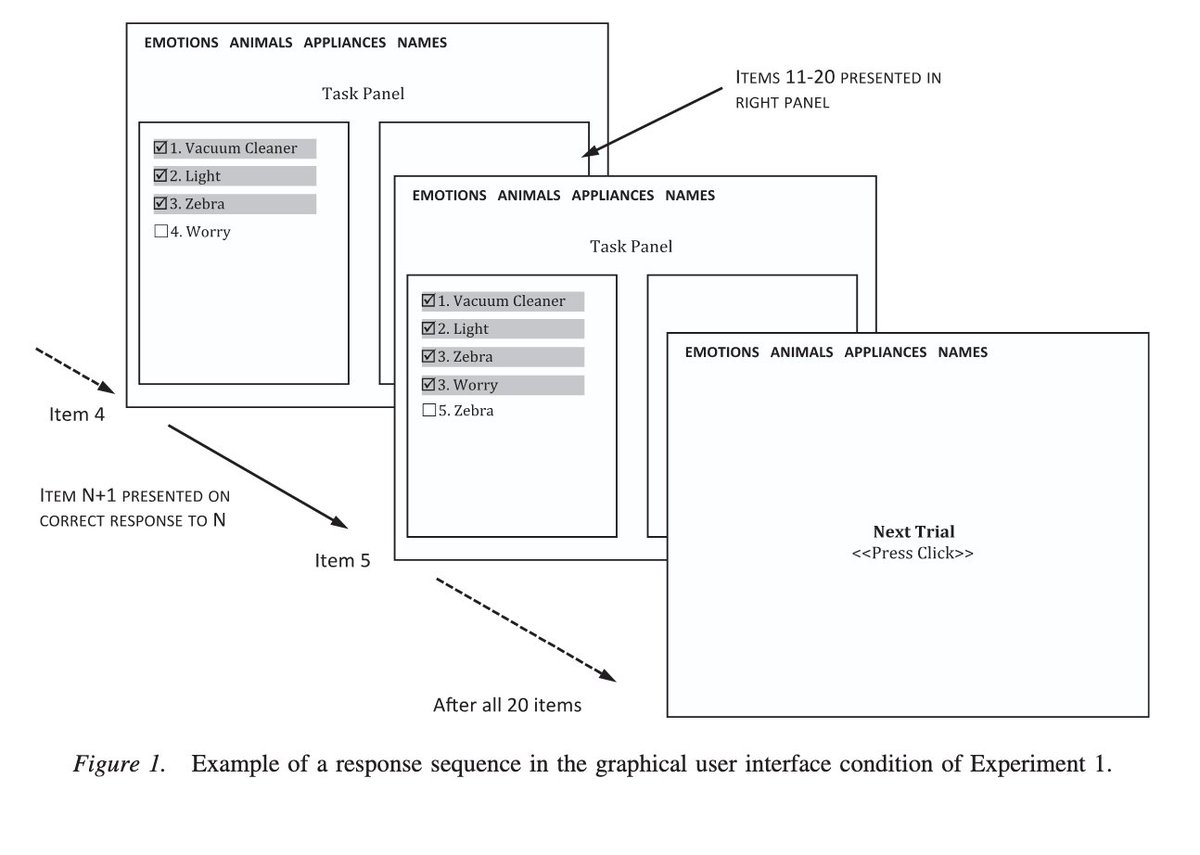

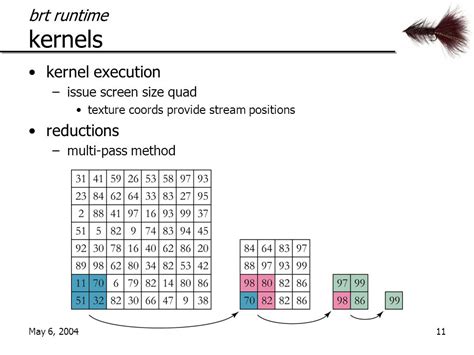

BrookGPU (Stanford) is widely regarded as the birth of pre-CUDA GPGPU frameworks.

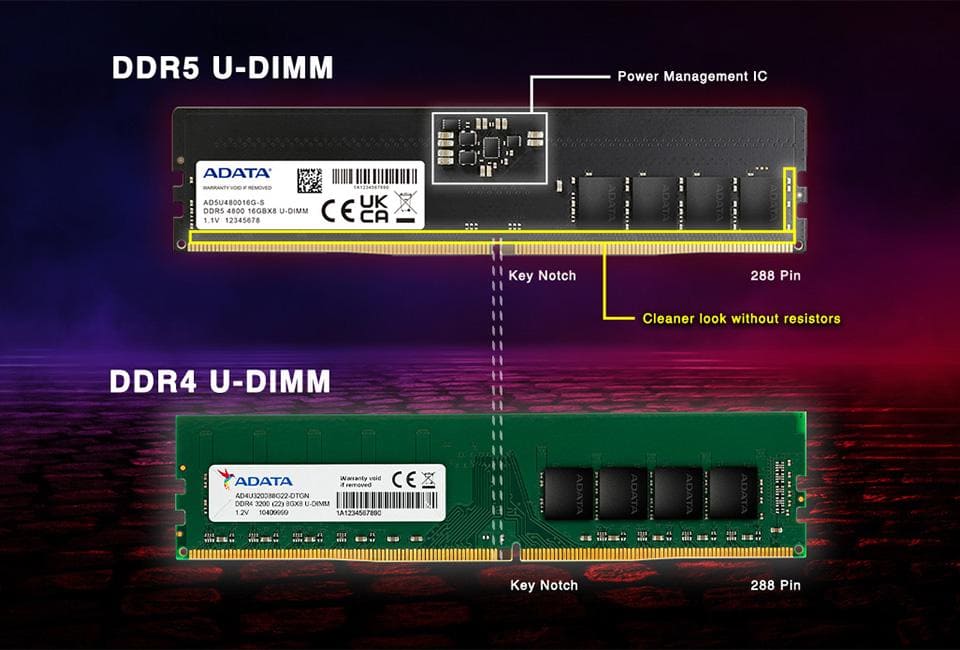

By virtualizing CPU-style primitives, it hid a lot of the graphical “weirdness”.

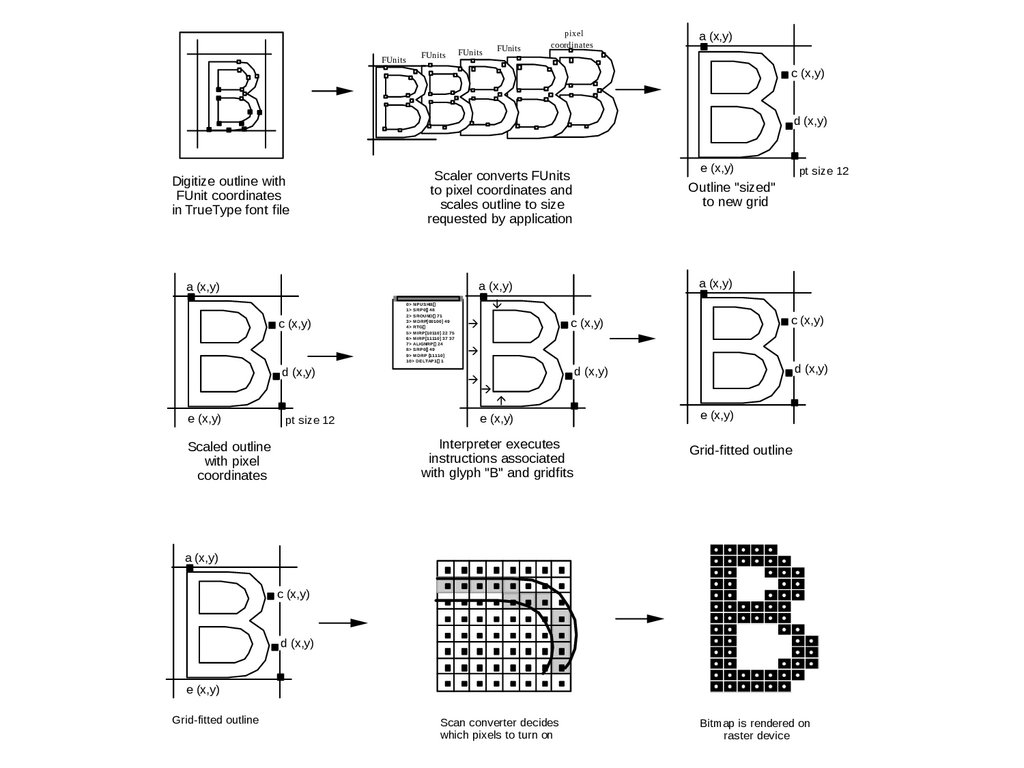

By extending C with stream, kernel, and reduction constructs, Brook made the GPU act more like a co-processor.
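For a feel of what those three constructs buy you, here is a loose Python emulation. This is not Brook syntax (Brook extends C), and `Stream`, `stream_read`, `stream_write`, `kernel`, and `reduction` are illustrative stand-ins written for this sketch, not the framework's actual API.

```python
from functools import reduce as fold

class Stream:
    """A fixed-length collection whose elements are processed independently."""
    def __init__(self, data):
        self.data = list(data)

def stream_read(host_array):
    """Copy host data into a stream (stand-in for an upload to the GPU)."""
    return Stream(host_array)

def stream_write(stream):
    """Copy a stream back to host memory (stand-in for a readback)."""
    return list(stream.data)

def kernel(fn):
    """Wrap fn so it runs once per element; non-stream arguments act as constants."""
    def launch(*args):
        streams = [a for a in args if isinstance(a, Stream)]
        n = len(streams[0].data)
        out = []
        for i in range(n):
            elems = [a.data[i] if isinstance(a, Stream) else a for a in args]
            out.append(fn(*elems))
        return Stream(out)
    return launch

def reduction(fn):
    """Wrap a binary fn so it folds a whole stream down to one value."""
    def launch(stream):
        return fold(fn, stream.data)
    return launch

@kernel
def saxpy(alpha, x, y):
    return alpha * x + y

@reduction
def total(acc, value):
    return acc + value

x = stream_read([1.0, 2.0, 3.0, 4.0])
y = stream_read([10.0, 20.0, 30.0, 40.0])
result = saxpy(2.0, x, y)
saxpy_out = stream_write(result)
saxpy_sum = total(result)
```

Notice there is no texture, rectangle, or shader in sight. The programmer only sees data going in, a function applied per element, and data coming out, which is the co-processor mental model.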

It’s a bit sad we don’t do (scientific) compute with textures much anymore.

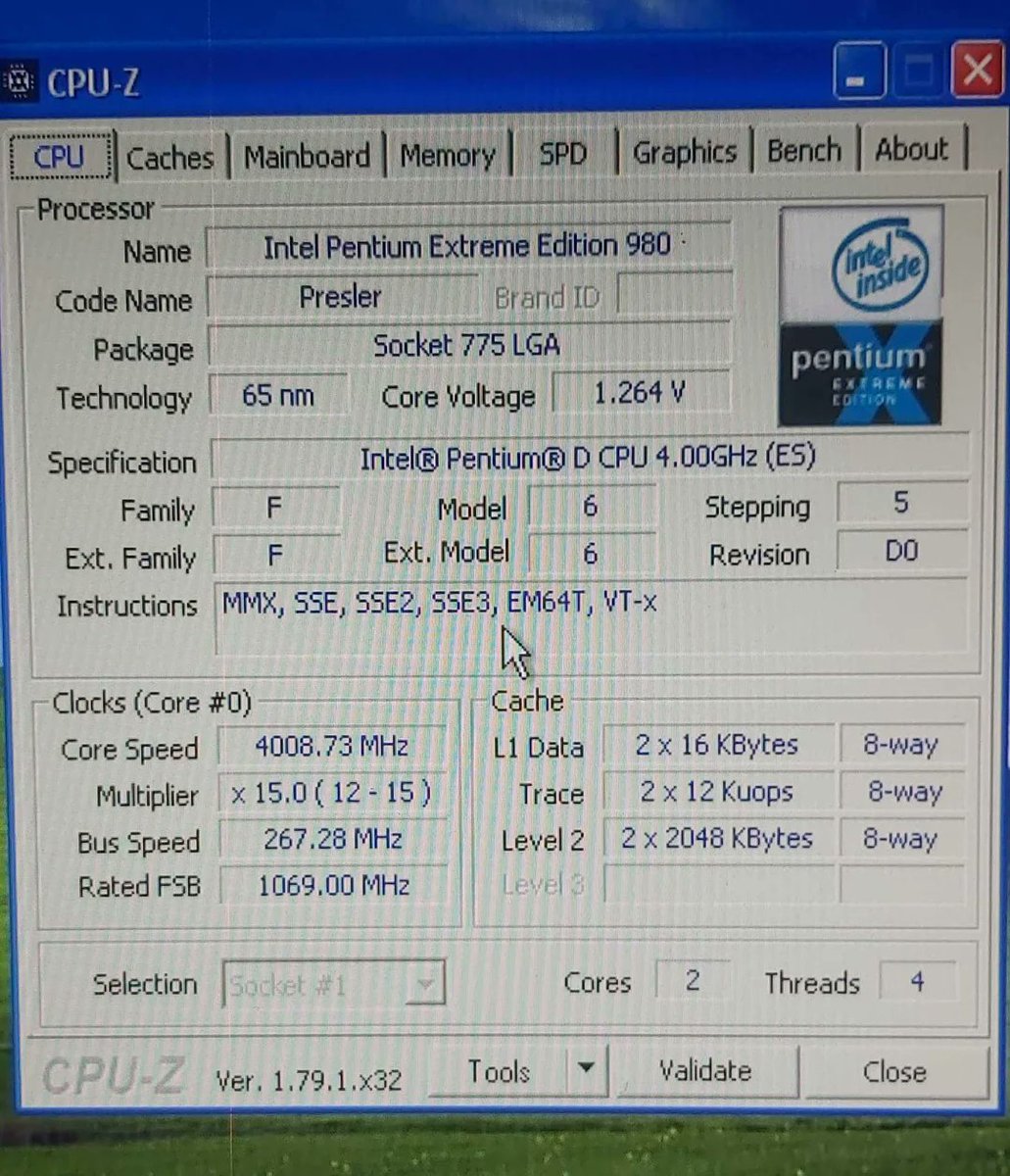

With Brook, it was super simple to bind the compute stream and render the output.

Theoretically, if you mapped an LLM to Brook, you’d be able to see the intermediates rendered as textures.
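A tiny sketch of what "seeing the intermediates" could look like, assuming an intermediate is just a 2D grid of floats: rescale it to 0..255 and write it out as a plain-text PGM grayscale image. The `activations` values are invented for the example, and `to_grayscale` and `to_pgm` are hypothetical helpers, not anything from Brook.

```python
def to_grayscale(matrix):
    """Linearly rescale a 2D list of floats to integer pixel values in 0..255."""
    flat = [v for row in matrix for v in row]
    lo, hi = min(flat), max(flat)
    span = (hi - lo) or 1.0  # avoid dividing by zero on a constant matrix
    return [[round(255 * (v - lo) / span) for v in row] for row in matrix]

def to_pgm(pixels):
    """Serialize pixel rows as a plain-text (P2) PGM image."""
    height, width = len(pixels), len(pixels[0])
    header = f"P2\n{width} {height}\n255\n"
    body = "\n".join(" ".join(str(p) for p in row) for row in pixels)
    return header + body + "\n"

# Made-up intermediate values standing in for one layer's output.
activations = [[-1.0, 0.0], [0.5, 1.0]]
pixels = to_grayscale(activations)
image_text = to_pgm(pixels)
```

Save `image_text` to a `.pgm` file and most image viewers will open it. On a texture-based pipeline this step comes almost for free, since the intermediate already is an image.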

It’s significantly less cool now.

Check out the original paper for an interesting glimpse at how scientific GPU compute gained traction:

graphics.stanford.edu/papers/brookgp…
