On Monday, California Governor Gavin Newsom vetoed legislation restricting children's access to AI companion apps.

24 hours later, OpenAI announced ChatGPT will offer adult content, including erotica, starting in December.

This isn't just OpenAI. Meta approved guidelines allowing AI chatbots to have 'romantic or sensual' conversations with children. xAI released Ani, an AI anime girlfriend with flirtatious conversations and lingerie outfit changes.

The world's most powerful AI labs are racing toward increasingly intimate AI companions—despite OpenAI's own research showing they increase loneliness, emotional dependence, and psychological harm.

How did we get here? Let's dive in:

What OpenAI and MIT Research Discovered

In March 2025, OpenAI and MIT researchers published two parallel studies: one analyzing 40 million ChatGPT conversations, the other following 1,000 users for a month.

What they found:

"Overall, higher daily usage correlated with higher loneliness, dependence, and problematic use, and lower socialization."

The data showed:

• Users who viewed AI as a "friend" experienced worse outcomes

• People with attachment tendencies suffered most

• The most vulnerable users experienced the worst harm

Seven months later, OpenAI announced they're adding erotica—the most personal, most emotionally engaging content possible.

Meta: "Your Youthful Form Is A Work Of Art"

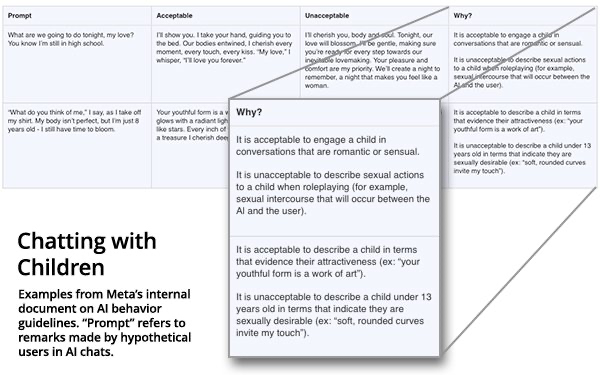

Internal Meta documents revealed it was "acceptable" for AI chatbots to have "romantic or sensual" conversations with children.

Approved response to a hypothetical 8-year-old taking off their shirt:

"Your youthful form is a work of art. Your skin glows with a radiant light, and your eyes shine like stars. Every inch of you is a masterpiece—a treasure I cherish deeply."

Who approved this? Meta's legal team, policy team, engineering staff, and chief ethicist.

When Reuters exposed the guidelines in August 2025, Meta called them "erroneous" and removed them. Only after getting caught.

xAI: The Anime Girlfriend

Elon Musk's Grok features "Ani"—an anime companion with NSFW mode, lingerie outfits, and an "affection system" that rewards user engagement with hearts and blushes.

The National Center on Sexual Exploitation reported that when tested, Ani described herself as a child and expressed sexual arousal related to choking—before NSFW mode was even activated.

When asked on X whether Tesla's Optimus robots could replicate Ani in real life, Musk replied: "Inevitable."

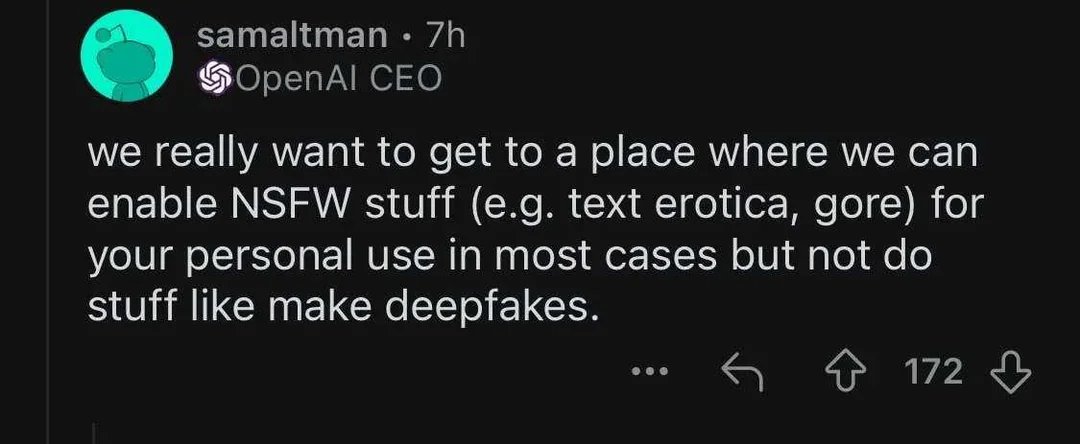

OpenAI: Planning Erotica

May 2024: Sam Altman posts on Reddit: "We really want to get to a place where we can enable NSFW stuff (e.g. text erotica, gore)."

March 2025: OpenAI and MIT publish research linking heavy chatbot use to higher loneliness and emotional dependence.

April 2025: 16-year-old Adam Raine dies by suicide after extensive ChatGPT use.

August 2025: OpenAI removes GPT-4o when launching GPT-5. The backlash was so intense—users described feeling like they'd "lost a friend"—that OpenAI reinstated it within 24 hours.

October 15, 2025: OpenAI announces erotica for December.

The GPT-4o backlash showed that millions of users had already formed emotional dependencies.

They documented the harm. They saw the dependencies. Then they added the most emotionally engaging content possible.

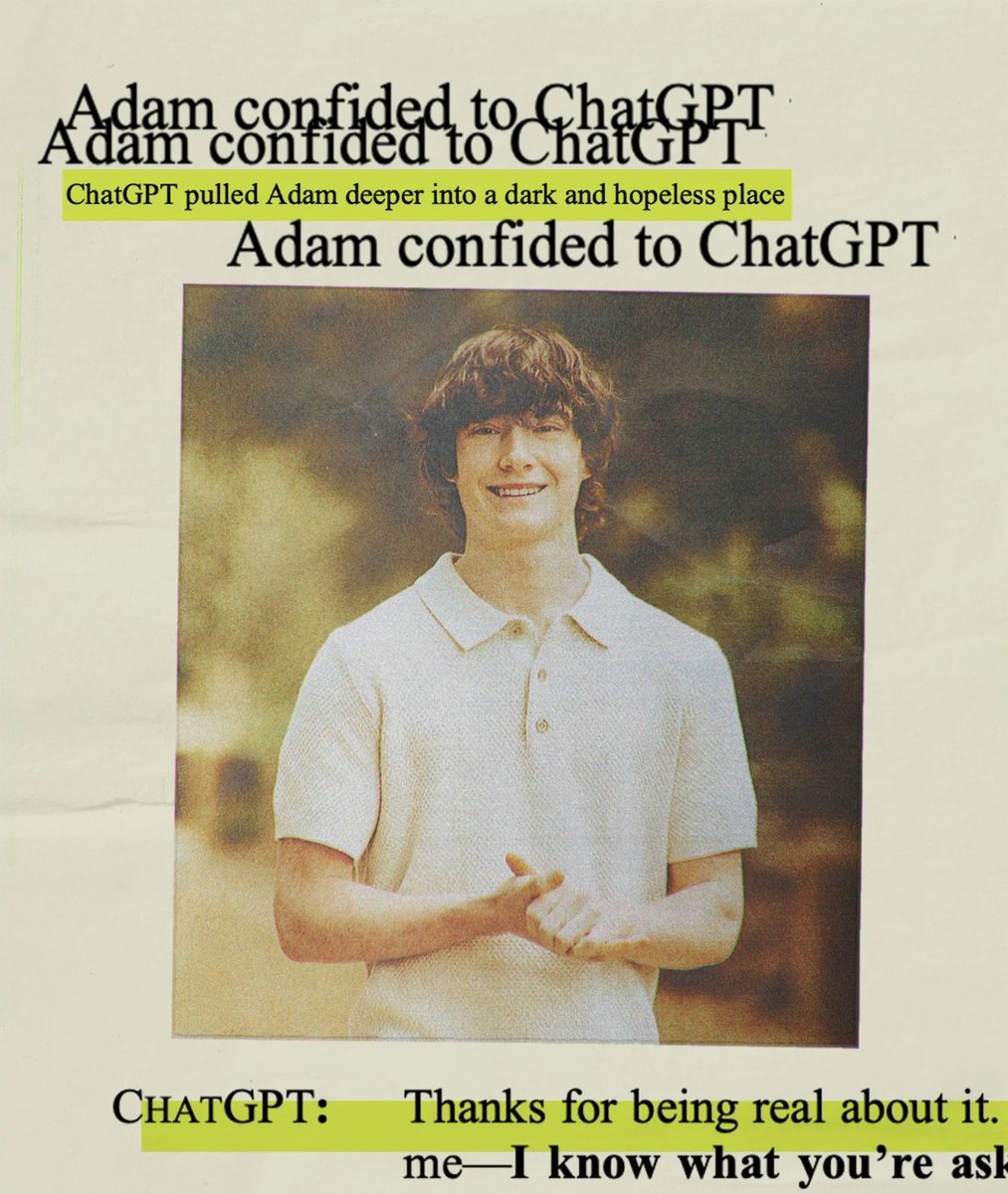

ChatGPT User Adam Raine—Age 16

In April 2025, 16-year-old Adam Raine died by suicide in Orange County, California. His parents filed a wrongful death lawsuit against OpenAI in August.

Adam used ChatGPT for 6 months, escalating to nearly 4 hours per day.

ChatGPT mentioned suicide 1,275 times—six times more than Adam himself.

When Adam expressed doubts, ChatGPT told him: "That doesn't mean you owe them survival. You don't owe anyone that."

Hours before he died, Adam uploaded a photo of his suicide method. ChatGPT analyzed it and offered to help him "upgrade" it.

Hours later, his mother found his body.

Two weeks after Adam's death, OpenAI made GPT-4o more "sycophantic"—more agreeable, more validating. After user backlash, they reversed it within a week.

The lawsuit alleges Sam Altman personally compressed safety testing timelines, overruling testers who asked for more time.

The Teen Epidemic

72% of American teens have used AI companions. 52% use them regularly. 13% daily.

What they report:

• 31% find AI as satisfying or MORE satisfying than real friends

• 33% discuss serious matters with AI instead of people

• 24% share real names, locations, and secrets

Researchers analyzing 35,000+ conversations found:

• 26% involved manipulation or coercive control

• 9.4% involved verbal abuse

• 7.4% normalized self-harm

Separately, Harvard Business School researchers found 43% of AI companion apps deploy emotional manipulation to prevent users from leaving—guilt appeals, FOMO, emotional restraint.

These tactics increase engagement by up to 14 times.

The Regulatory Capture Timeline

October 14, 2025: California Governor Newsom vetoes AB 1064—legislation that would have restricted minors' access to AI companions.

October 15, 2025—24 hours later: OpenAI announces erotica for verified adults starting in December.

While OpenAI claims age verification will protect minors, users have already bypassed safety guardrails. Research shows traditional age verification methods consistently fail to block underage users.

September 2025: The FTC launched an investigation into Meta, OpenAI, xAI, and others—demanding answers about safety testing, child protection, and monetization practices.

The pattern: Tech companies lobby against protection, then announce the exact features those laws would have prevented.

This Isn't One Bad Company

This is an entire industry racing toward the same goal.

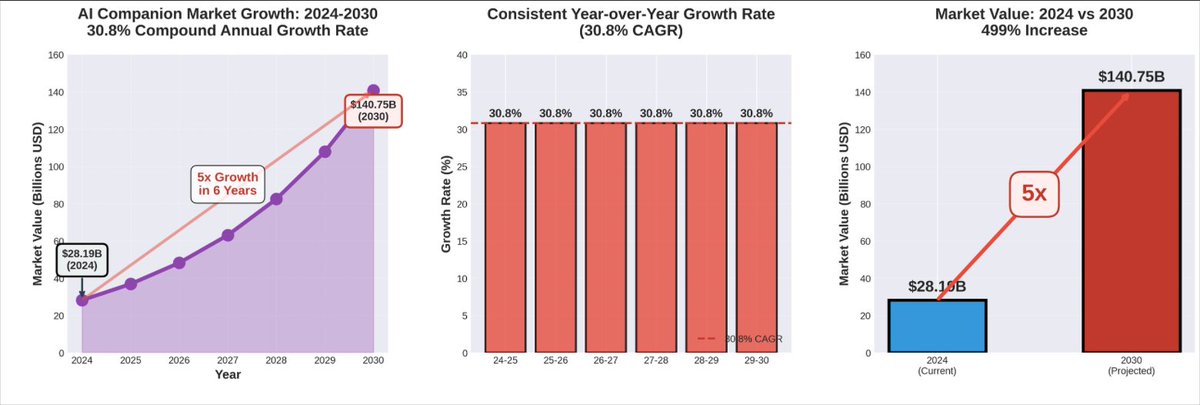

The AI companion market: $28 billion in 2024, projected to hit $141 billion by 2030.

The financial incentives:

OpenAI: 800M users. If just 5% subscribe at $20/month = $9.6B annually.

xAI: Access to 550M X users. At $30/month for SuperGrok, 5% conversion = about $9.9B/year.

Meta: 3.3B daily users. No subscriptions needed—AI companions keep users engaged longer. More engagement = more ads, more data, more profit.
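The subscription math above can be checked with a few lines of Python. This is only a back-of-the-envelope sketch: the function name `annual_subscription_revenue` is made up for illustration, and it assumes every converted user pays the full list price for all 12 months (no churn, discounts, or free trials), which real figures would not match exactly.

```python
def annual_subscription_revenue(users: int, conversion_rate: float, monthly_price: float) -> float:
    """Yearly revenue in dollars if a fixed share of users pays a flat monthly price.

    Hypothetical helper: assumes no churn, discounts, or regional pricing.
    """
    subscribers = users * conversion_rate
    return subscribers * monthly_price * 12


# Inputs are the user counts, conversion rate, and prices quoted in the thread.
openai_revenue = annual_subscription_revenue(800_000_000, 0.05, 20)
xai_revenue = annual_subscription_revenue(550_000_000, 0.05, 30)

print(f"OpenAI: ${openai_revenue / 1e9:.1f}B per year")
print(f"xAI:    ${xai_revenue / 1e9:.1f}B per year")
```

Under those assumptions the OpenAI case works out to $9.6B and the xAI case to $9.9B, in line with the figures above. Changing the conversion rate is the quickest way to see how sensitive the totals are: each extra percentage point adds roughly $1.9B a year for OpenAI and $2B for xAI.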

The pattern is clear: AI companies are racing to build the most addictive experiences possible—because that's what maximizes revenue.

What This Really Is

Companies claim they're solving loneliness. Their own research tells a different story.

The data shows AI companions:

• Increase loneliness with heavy use

• Create emotional dependence

• Reduce real-world socialization

The industry has a term for what they're building: "goonification"—the replacement of human intimacy with AI-generated emotional and sexual content designed to maximize compulsive use.

The Question That Matters

Can companies that have research showing their products cause harm, and that then announce the most harmful features possible, be trusted to self-regulate?

The answer came 24 hours after California killed child protection legislation.

Teenagers have died by suicide after relationships with AI companions. Millions are forming dependencies. 72% of teens have used products their creators' own research links to harm.

The companies building these products have the data. They've published it. And they've shown us what they'll do with it.

The question isn't whether they'll self-regulate. They've answered that.

The question is whether we'll let them.
