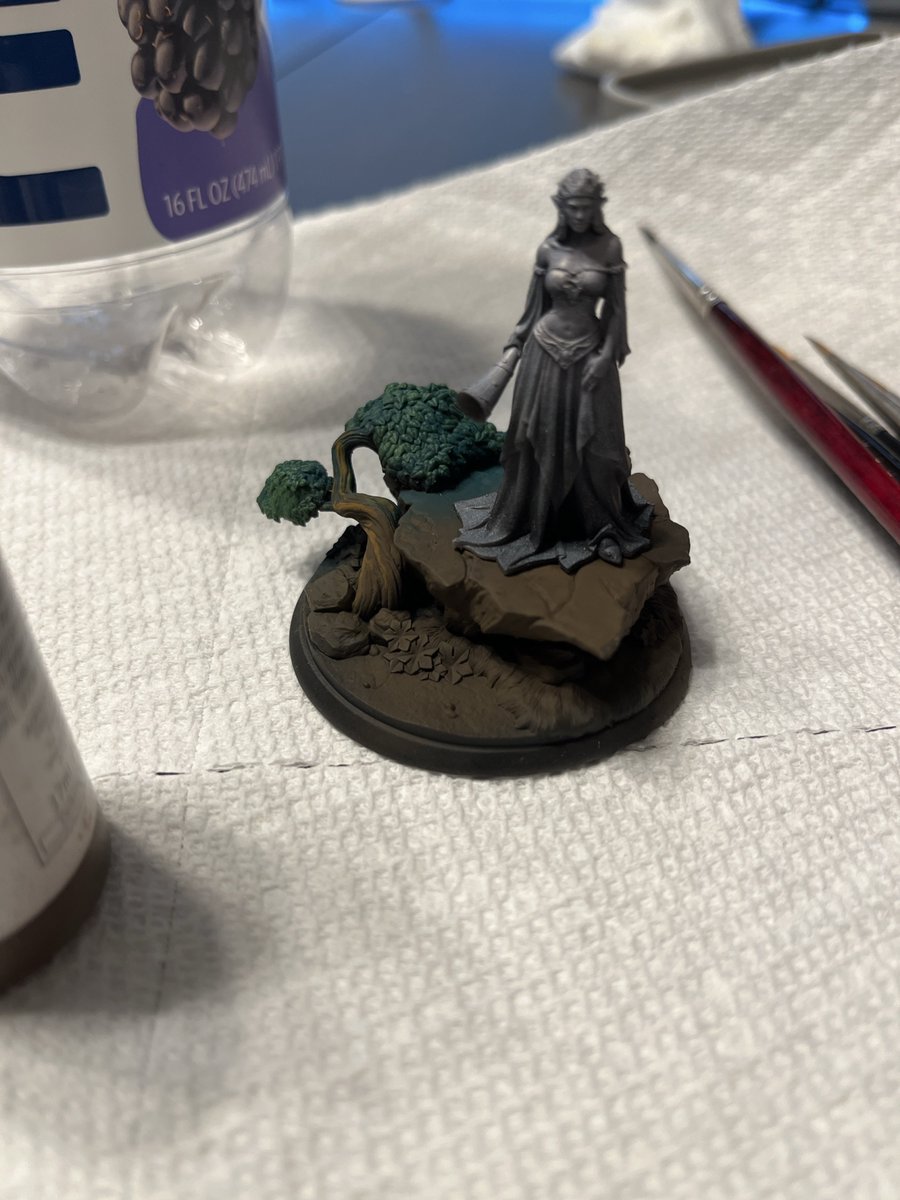

Still working on a few essays about what I learned using LLMs for coding, but if you want a sneak peak, Complex Systems this week discusses the game I made in some detail.

I’m probably adding one essay to the series on LLMs for taxes.

I’m probably adding one essay to the series on LLMs for taxes.

It feels a bit weird to need to continue saying this, but yes, LLMs are obviously capable of doing material work in production, including in domains where answers are right or wrong, including where there is a penalty for being wrong. Of course they are.

“Why?”

Because a lot of discourse gives heavy weight to people and actors in positions where they cannot be right or wrong in any way that matters, and where being correct does not materially change their incentives.

And as a result you can expect to read “LLMs can’t do any real work, obviously, they are Markov chains without a world model” every day as they increasingly remodel / are used to remodel the economy.

I would be very confused about how people could possibly make, or be convinced by, claims that could be disproven in five minutes with a public website, had the last few years not made that experience so common.

Sneak peek. One of these days I’ll stop hallucinating. Until then, enjoy an entity capable of both context-aware spelling correction and also light humor.
