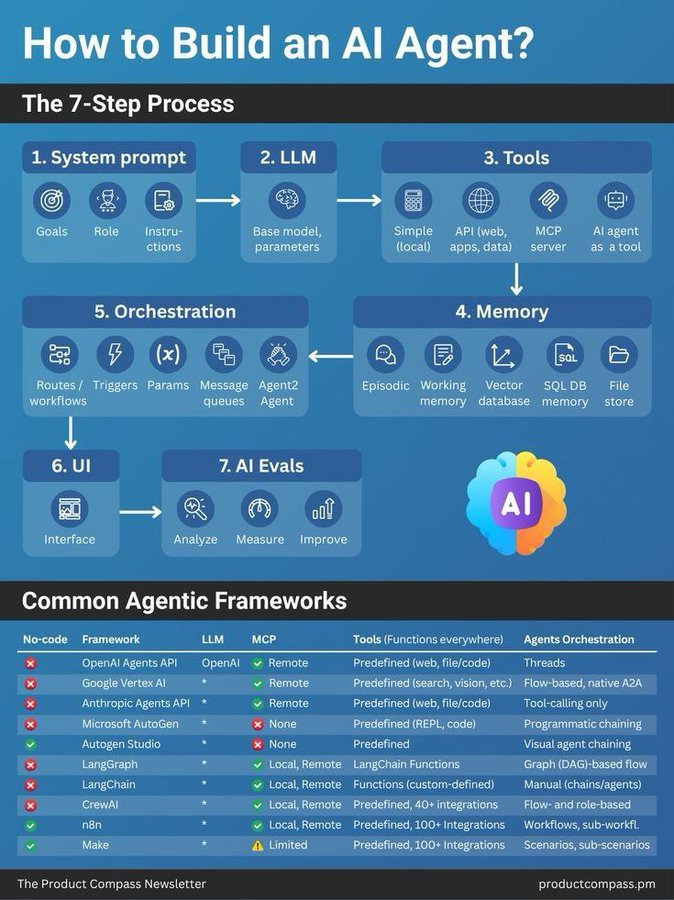

1️⃣ System Prompt: Define your agent’s role, capabilities, and boundaries. This gives your agent the necessary context.

2️⃣ LLM (Large Language Model): Choose the engine. GPT-5, Claude, Mistral, or an open-source model — pick based on reasoning needs, latency, and cost.

2️⃣ LLM (Large Language Model): Choose the engine. GPT-5, Claude, Mistral, or an open-source model — pick based on reasoning needs, latency, and cost.

3️⃣ Tools - Equip your agent with tools: API access, code interpreters, database queries, web search, etc. More tools = more utility. Max 20.

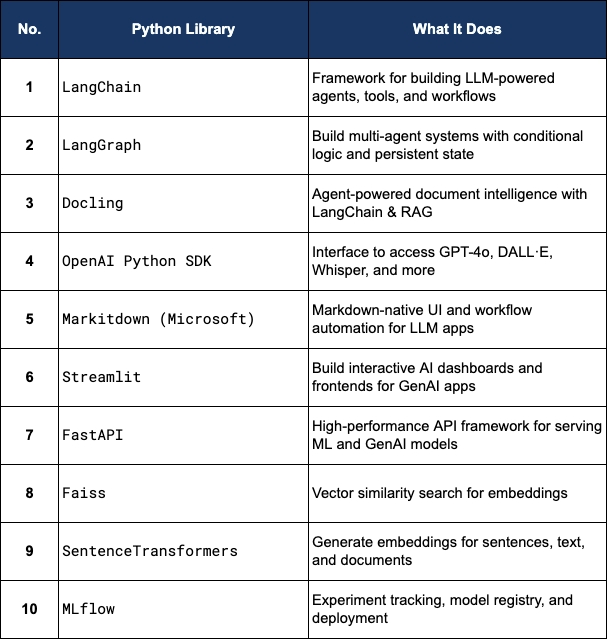

4️⃣ Orchestration: Use frameworks (like LangChain, AutoGen, CrewAI) to manage reasoning, task decomposition, and multi-agent collaboration.

4️⃣ Orchestration: Use frameworks (like LangChain, AutoGen, CrewAI) to manage reasoning, task decomposition, and multi-agent collaboration.

5️⃣ Memory: Implement both short-term (context window) and long-term memory (Vector DBs like Pinecone, Weaviate, Chroma).

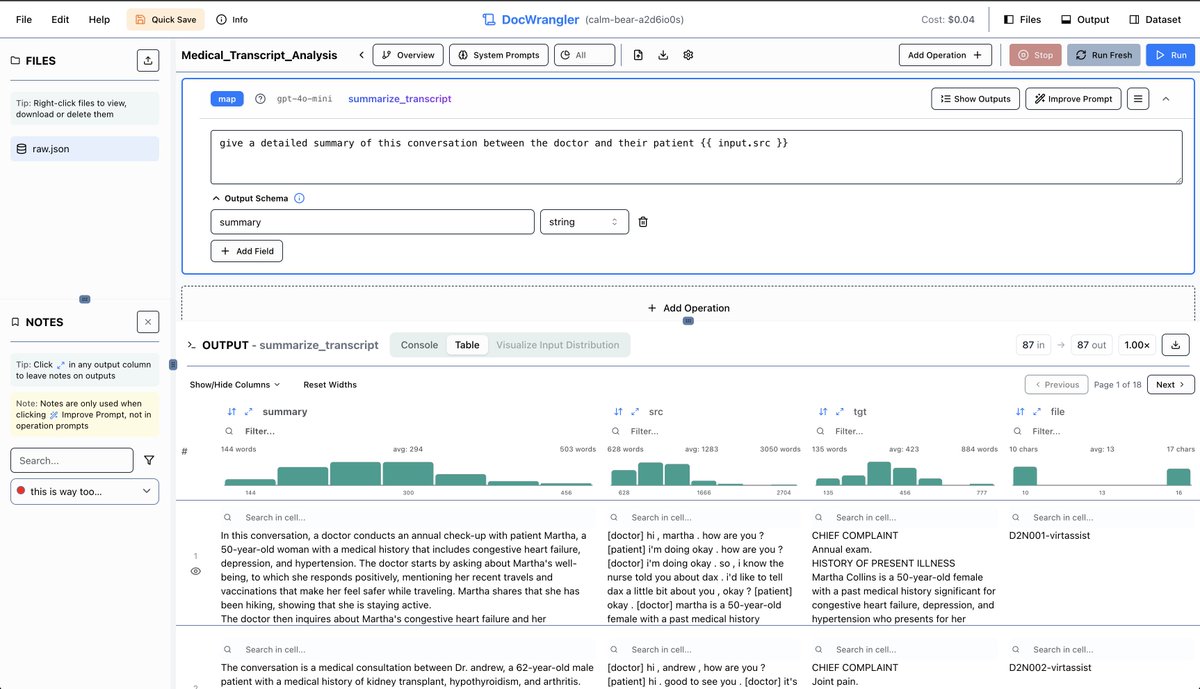

6️⃣ UI (User Interface): Design an intuitive chat UI or business automation workflow interface that enables smooth interaction with your agent (and automated actions).

6️⃣ UI (User Interface): Design an intuitive chat UI or business automation workflow interface that enables smooth interaction with your agent (and automated actions).

7️⃣ AI Evals: Test your agent's performance with real-world tasks. Use tools like TruLens, Rebuff, or custom evals to measure effectiveness, reliability, and safety.

I have one more thing before you go.

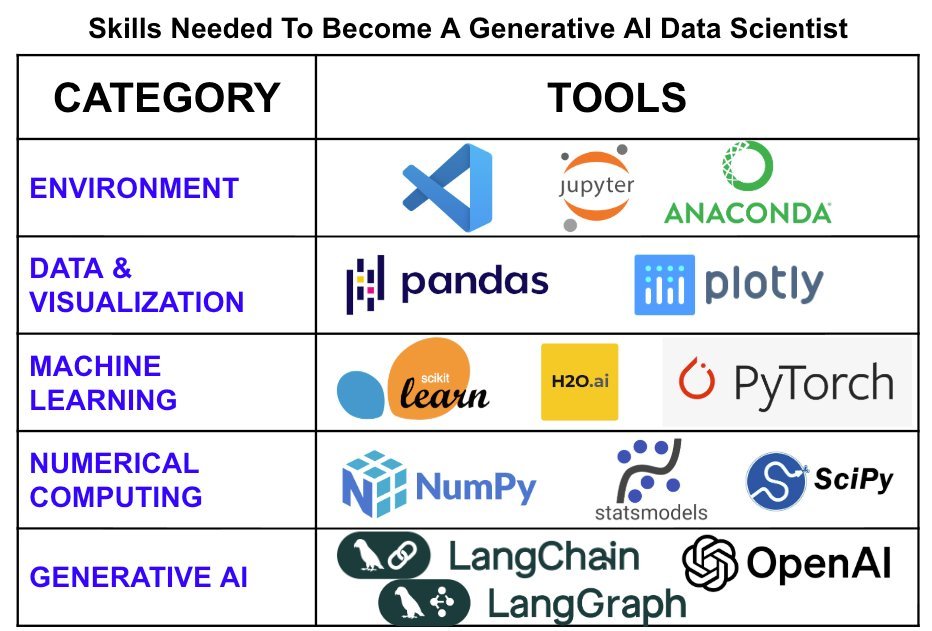

If you want to become a generative AI data scientist in 2025 ($200,000 career), then I'd like to help:

I have one more thing before you go.

If you want to become a generative AI data scientist in 2025 ($200,000 career), then I'd like to help:

On Wednesday, October 29th, I'm sharing one of my best AI Projects:

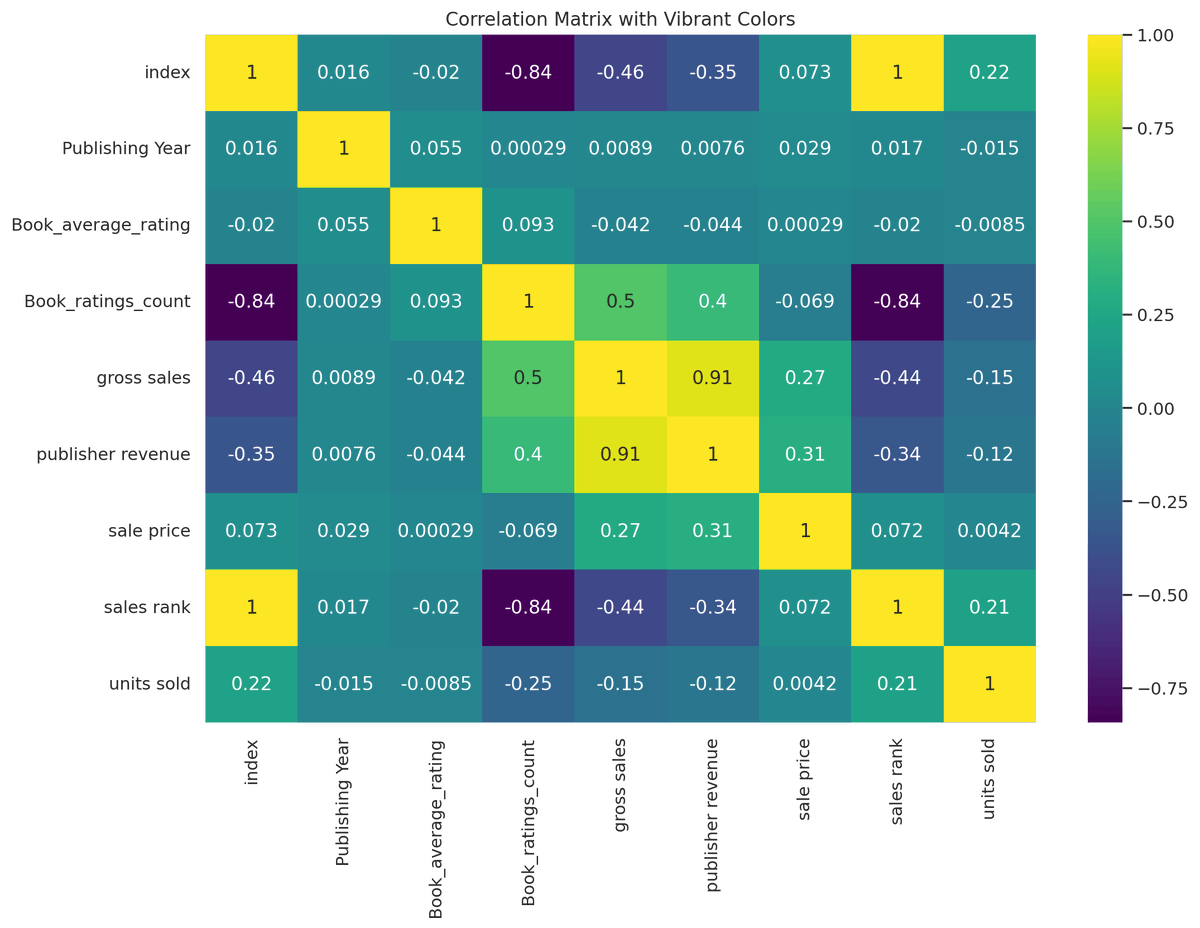

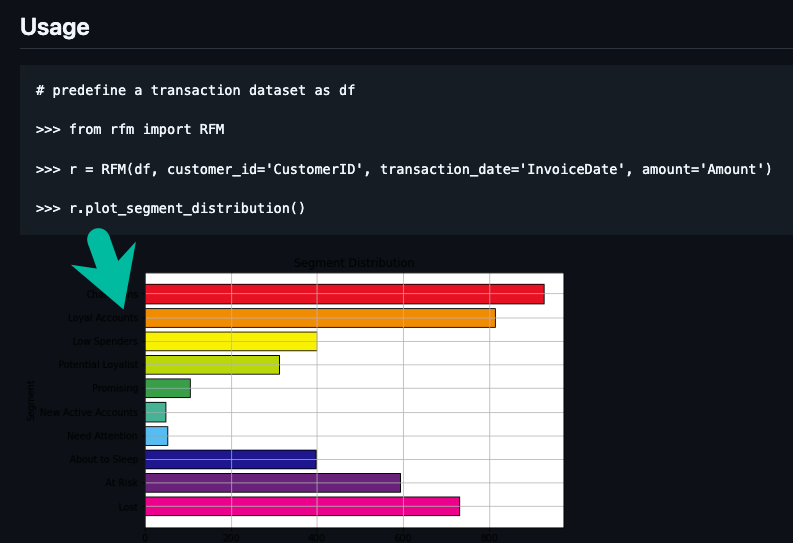

How I built an AI Customer Segmentation Agent with Python

👉Register here (740+ Registered): learn.business-science.io/ai-register

How I built an AI Customer Segmentation Agent with Python

👉Register here (740+ Registered): learn.business-science.io/ai-register

That's a wrap! Over the next 24 days, I'm sharing the 24 concepts that helped me become a data scientist.

If you enjoyed this thread:

1. Follow me @mdancho84 for more of these

2. RT the tweet below to share this thread with your audience

If you enjoyed this thread:

1. Follow me @mdancho84 for more of these

2. RT the tweet below to share this thread with your audience

https://twitter.com/815555071517872128/status/1980960662873338218

• • •

Missing some Tweet in this thread? You can try to

force a refresh