Generative AI, Data Science, Python, and Business (ROI). Join my next live AI workshop (free).👇

First: this isn’t “AI hype.”

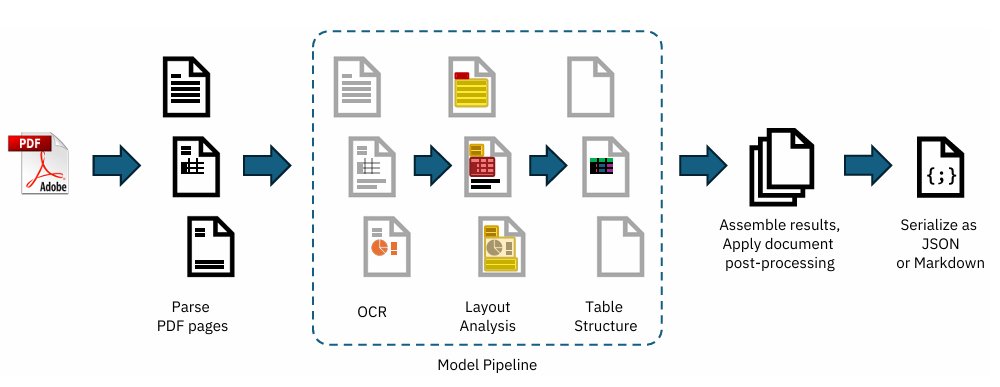

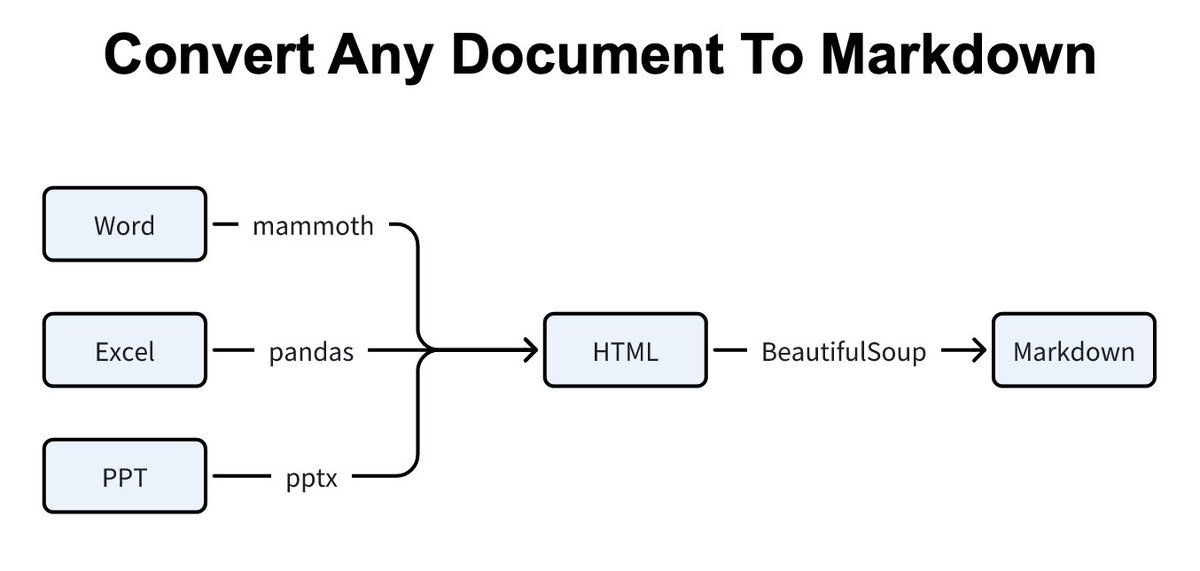

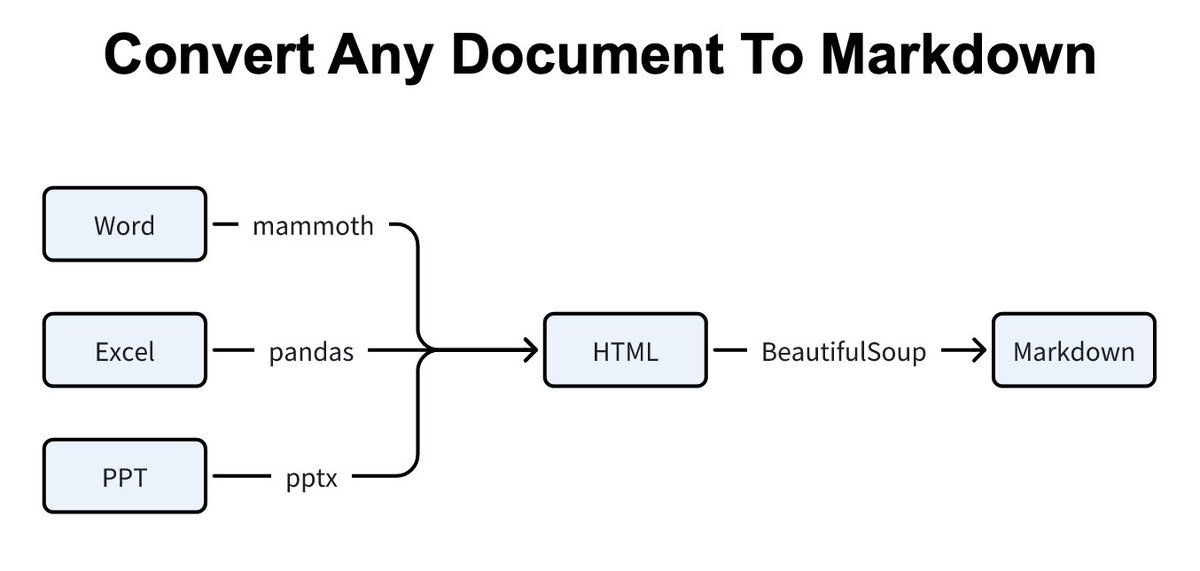

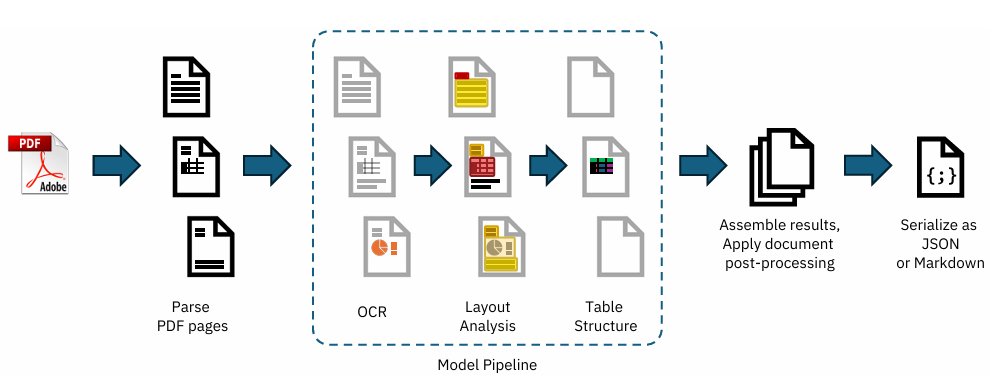

1. Document Parsing Pipelines

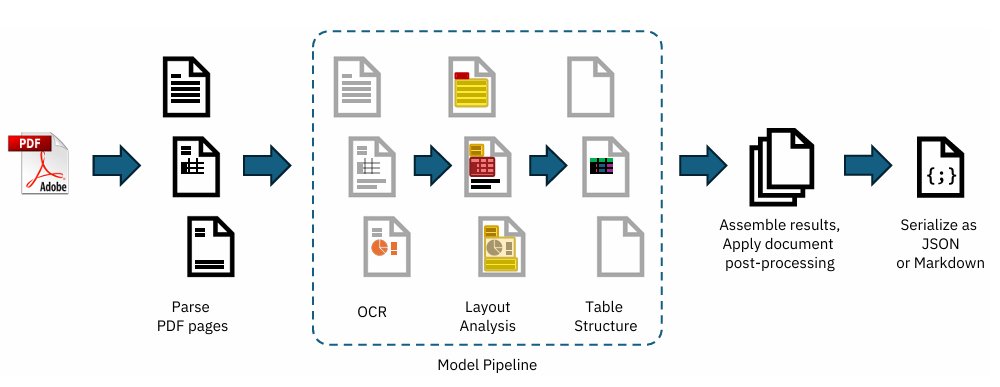

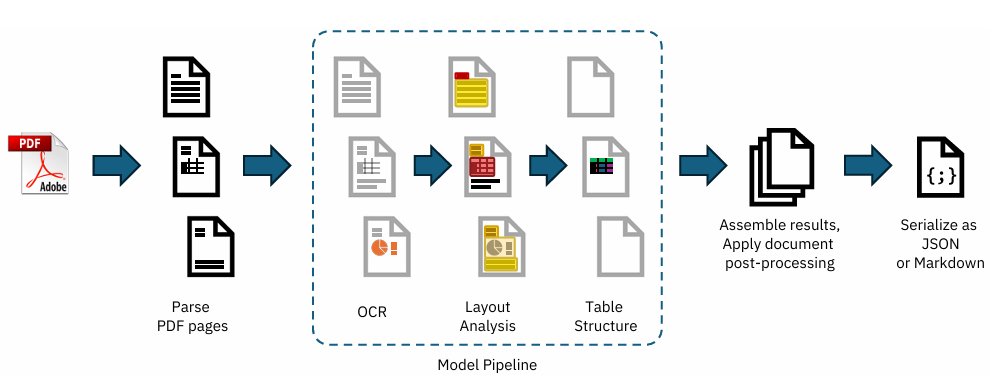

1. What is Docling?

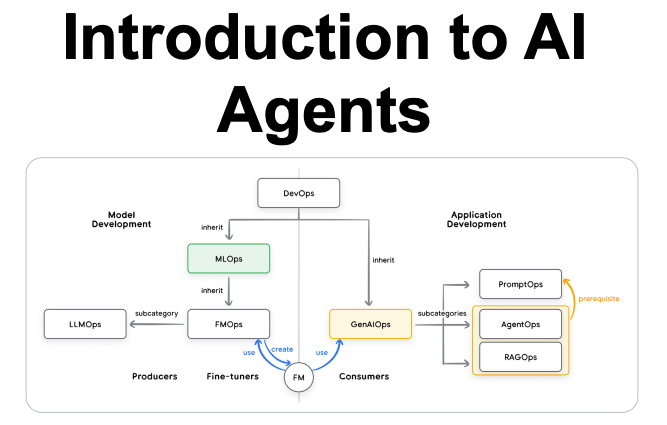

1. AI in the Enterprise by OpenAI

1. Stop building agents; Start fixing workflows

The problem with existing agents: you need to prompt the LLM to generate actions.

1) Small models can punch way above their weight

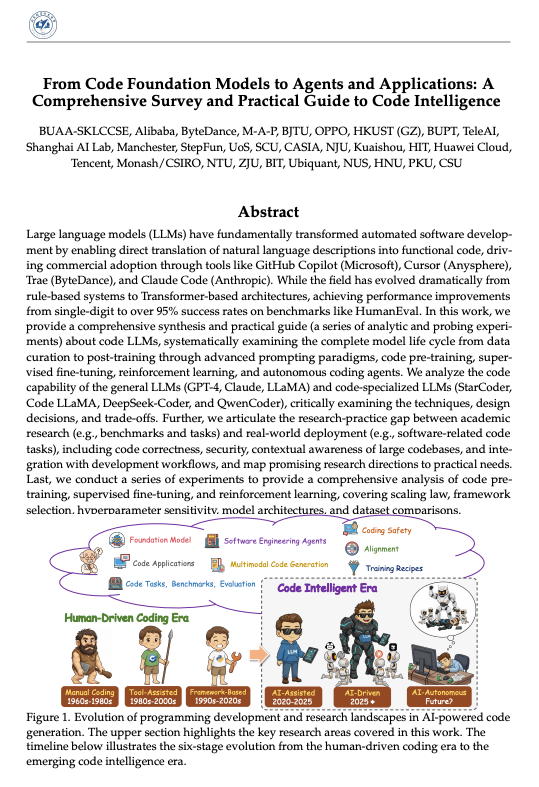

Here's what they cover:

Stanford just released a 23-page paper on Agentic Context Engineering to improve agents. Key ideas:

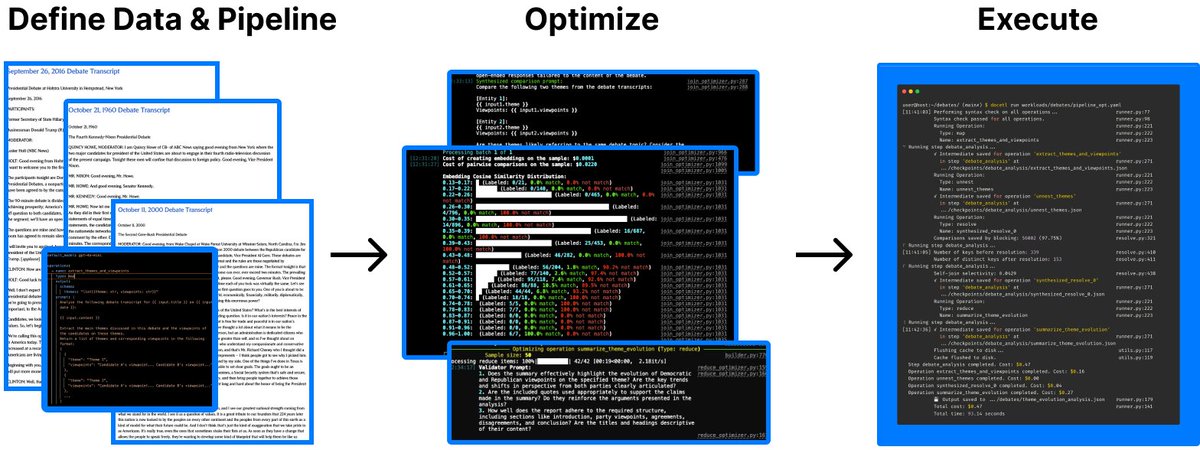

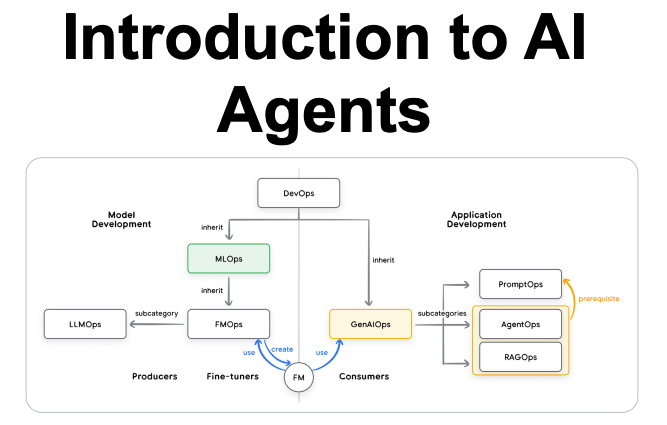

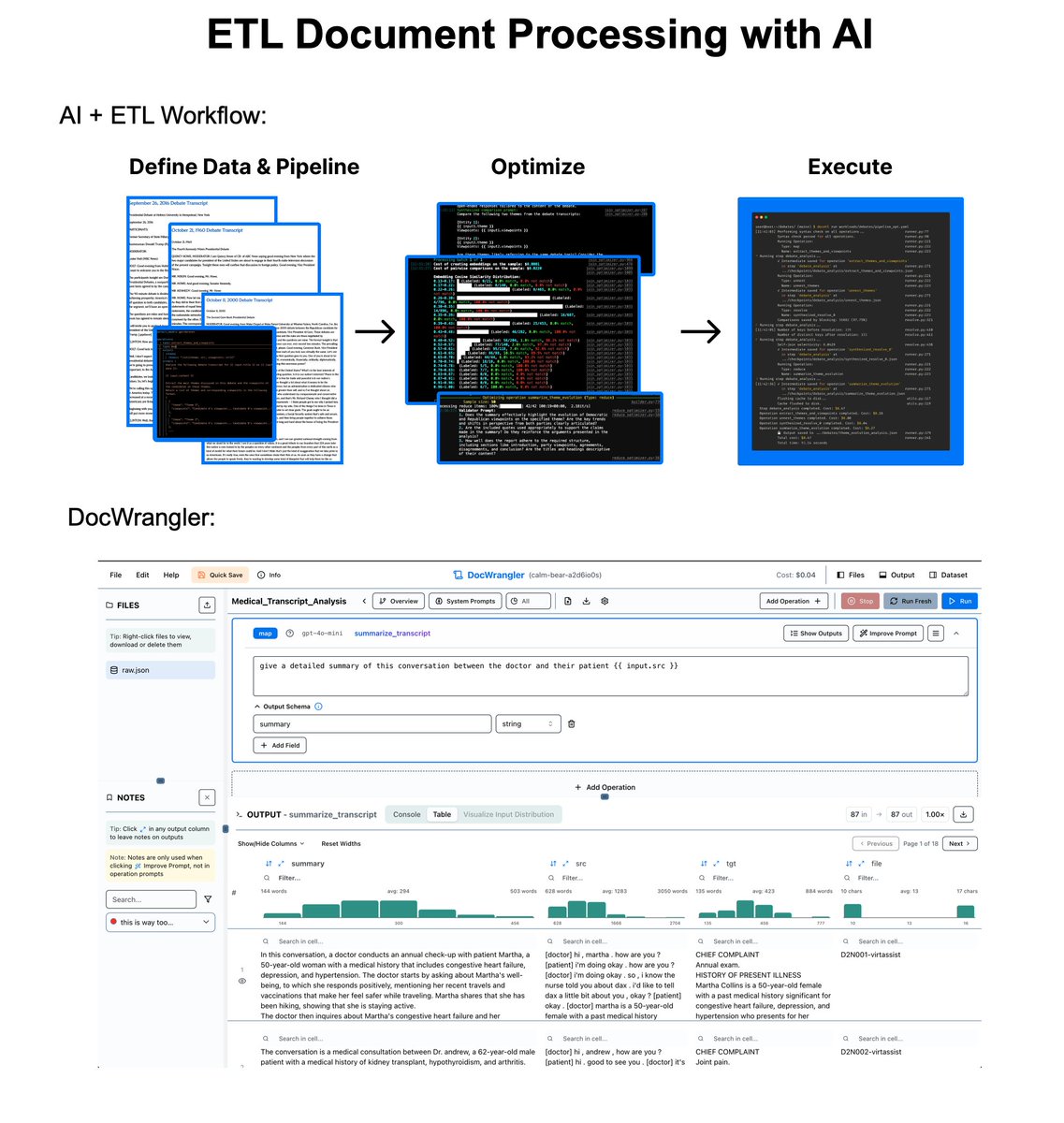

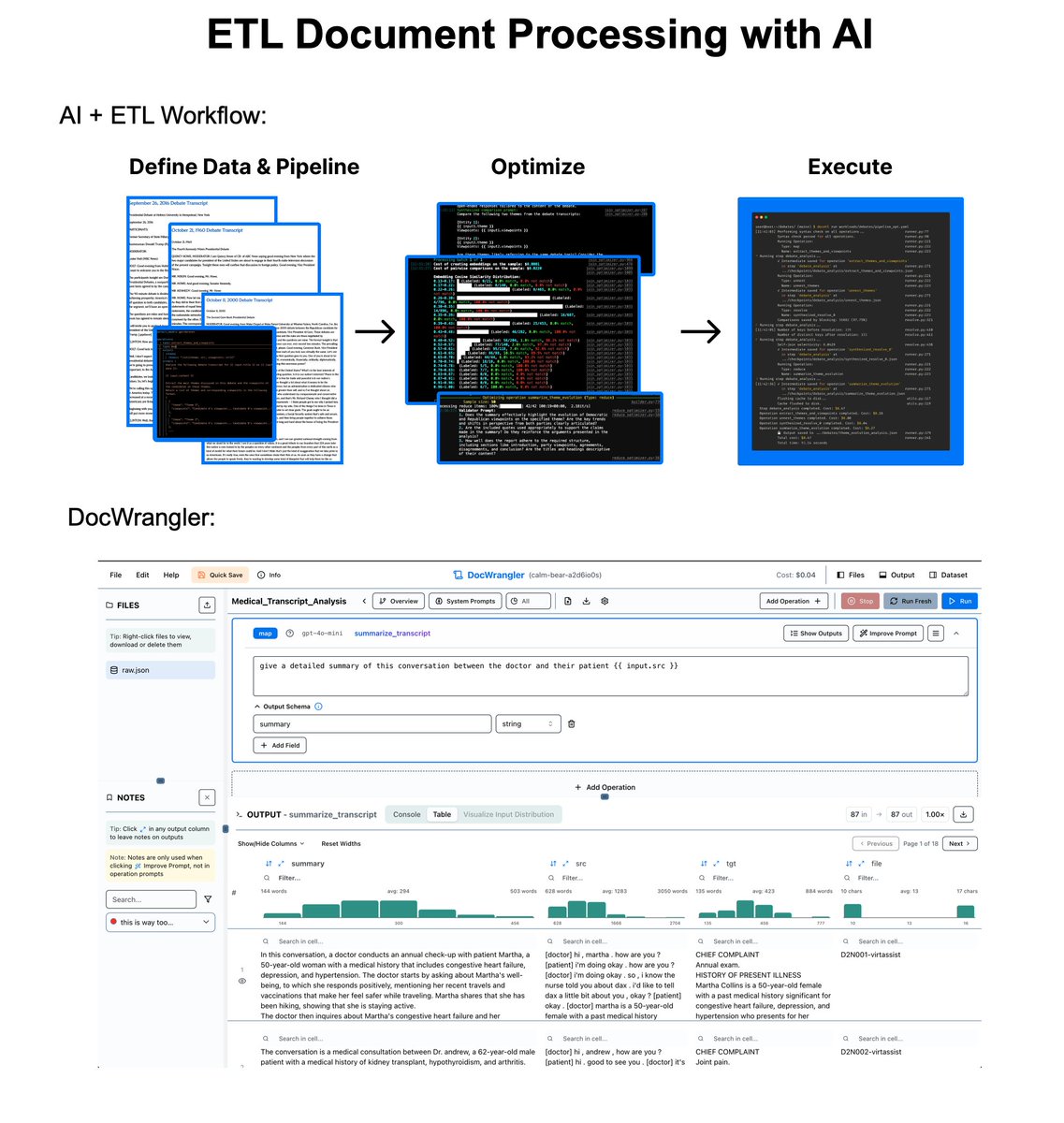

1. What is DocETL?
