We're scaling our Open-Source Environments Program

As part of this, we're committing hundreds of thousands of dollars in bounties and looking for partners who want to join our mission to accelerate open superintelligence.

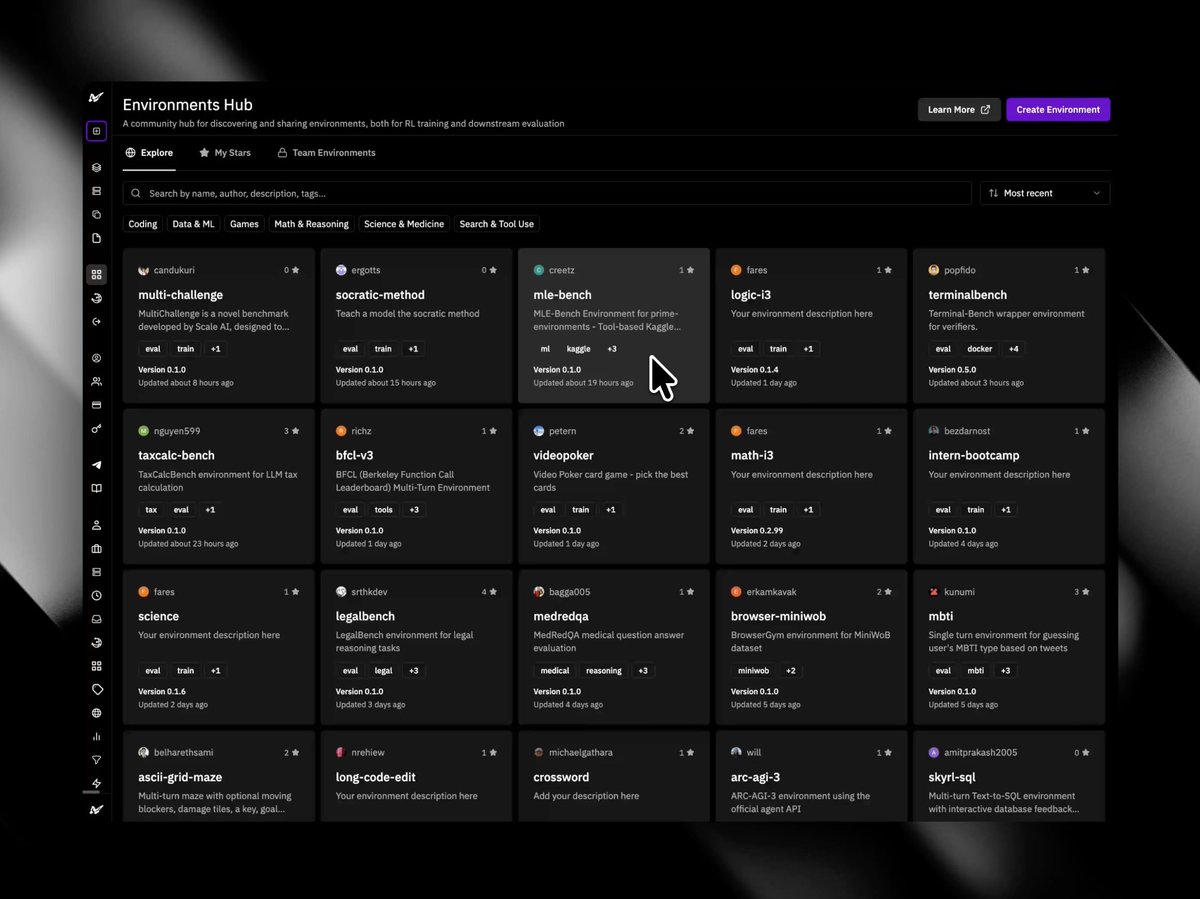

Join us in building the global hub for environments and evals

Over the past 2 months, we've crowdsourced 400+ environments and 80+ verified implementations through our bounties and RL residency across:

+ Autonomous AI Research

+ Browser Automation

+ Theorem Proving

+ Subject-Specific QA

+ Legal/Finance Tasks

+ Many more...

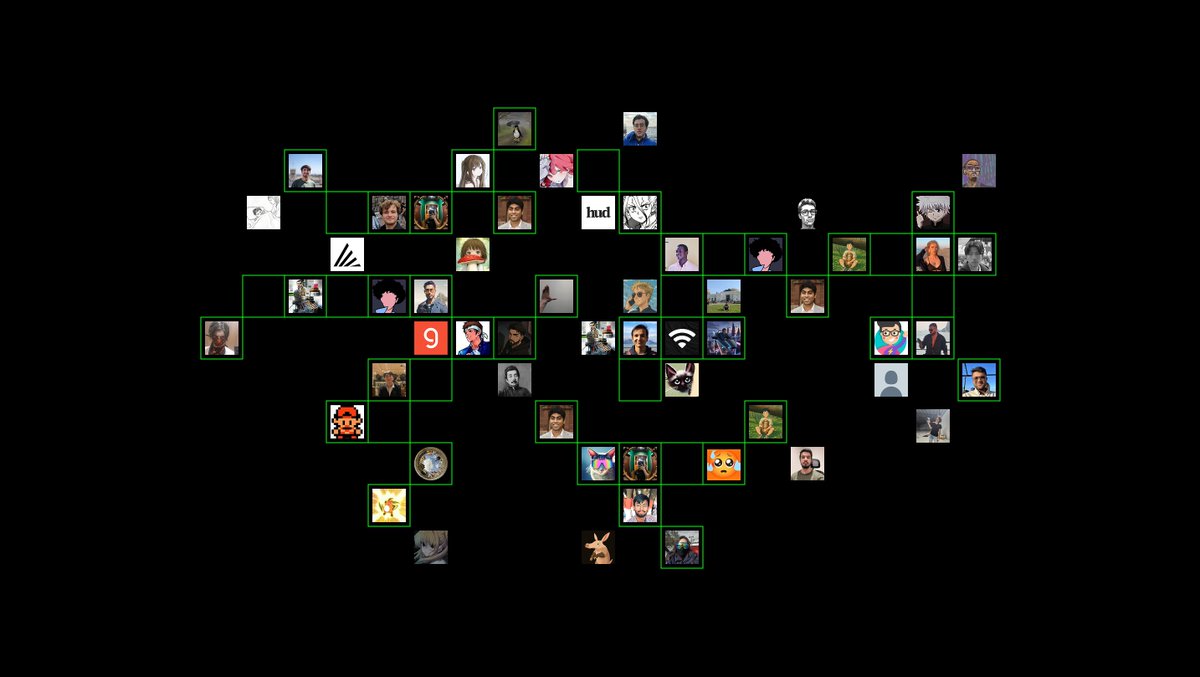

Thank you to everyone who has claimed a bounty or joined the residency!

@alexinexxx @xlr8harder @LatentLich @myainotez @ChaseBrowe32432 @varunneal @vyomdundigalla @amit05prakash @minjunesh @sidbing @unrelated333 @ljt019 @lakshyaag @sid_899 @srthkdev @semiozz @ibnAmjid and more!

Today, we're releasing a new wave of bounties, with some worth over $5,000, including:

+ Flagship benchmarks

+ Evaluations for popular software tools

+ Automated AI research tasks

+ Integrations with popular RL trainers

+ Major feature additions to Prime Intellect libraries

We're also looking for partners who want to sponsor environments.

For example, if you:

+ Want envs for skills in specific domains

+ Want envs built around your tools

+ Want to show your evals on the Hub

+ Need custom RL envs for your own models

For Partners: form.typeform.com/to/DkpaAX0d

Let's open-source the tools to build superintelligence

Browse and claim bounties: docs.google.com/spreadsheets/d…

Read more: primeintellect.ai/blog/scaling-e…
