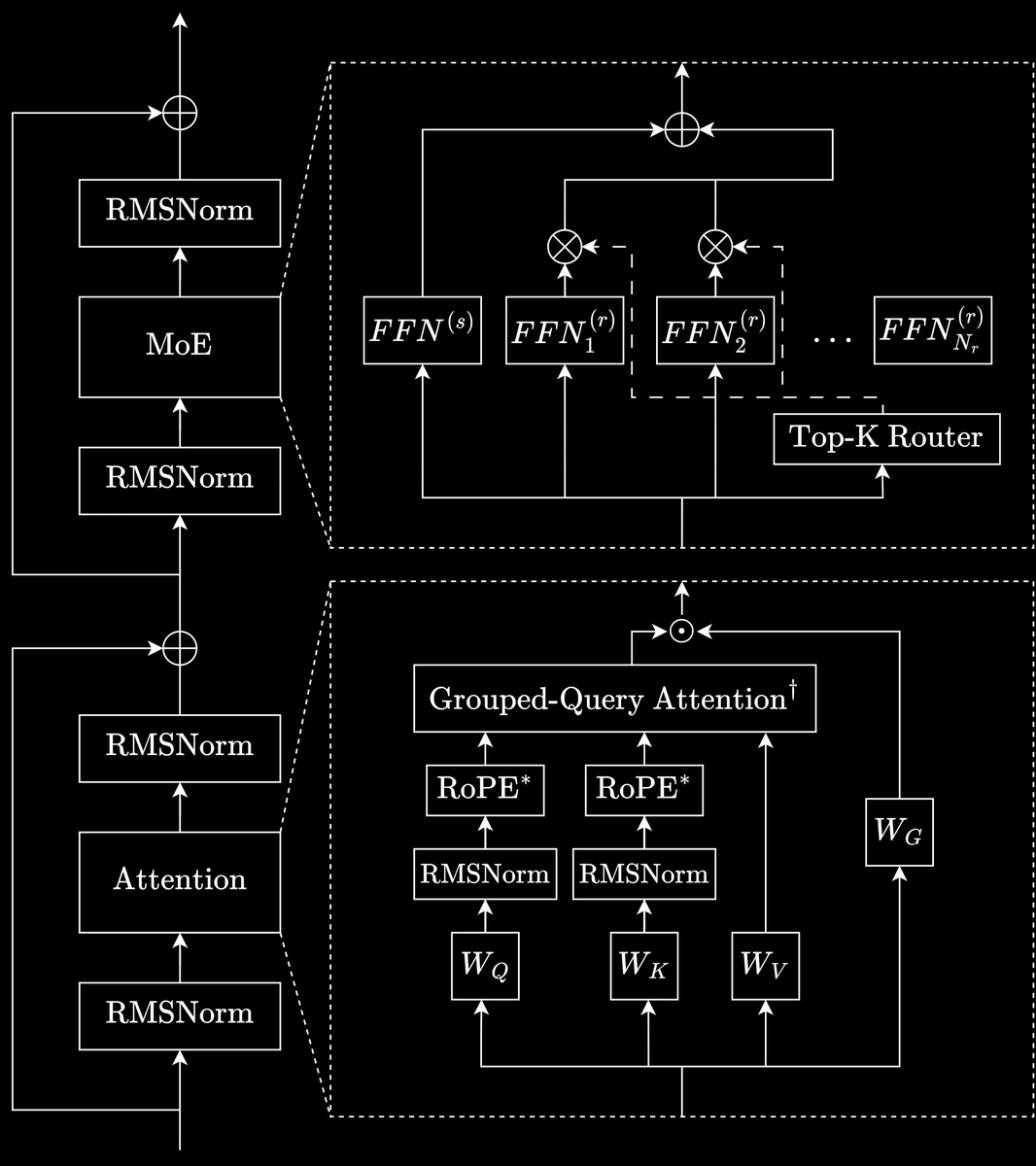

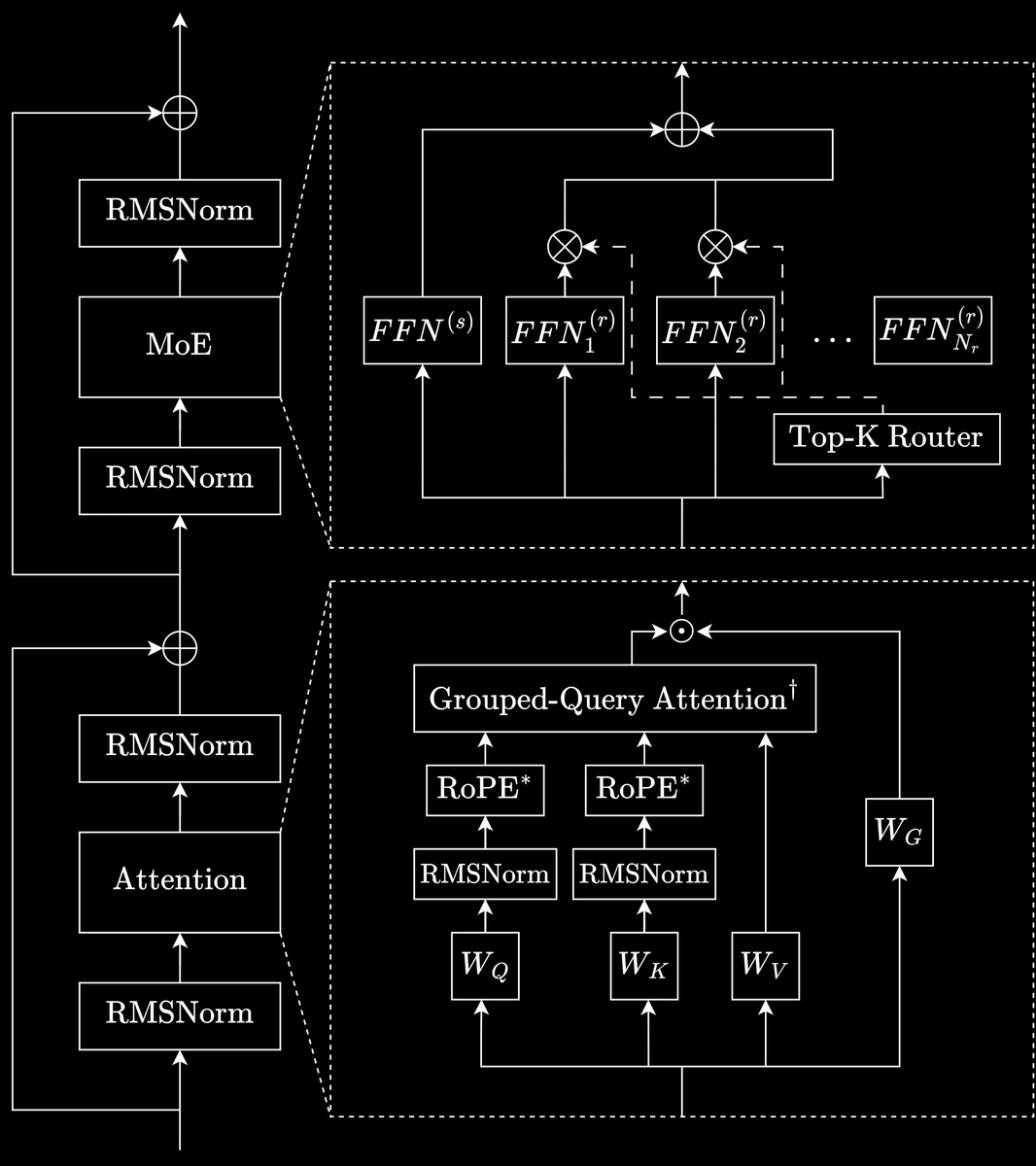

https://twitter.com/arcee_ai/status/2016278017572495505

Trinity Architecture

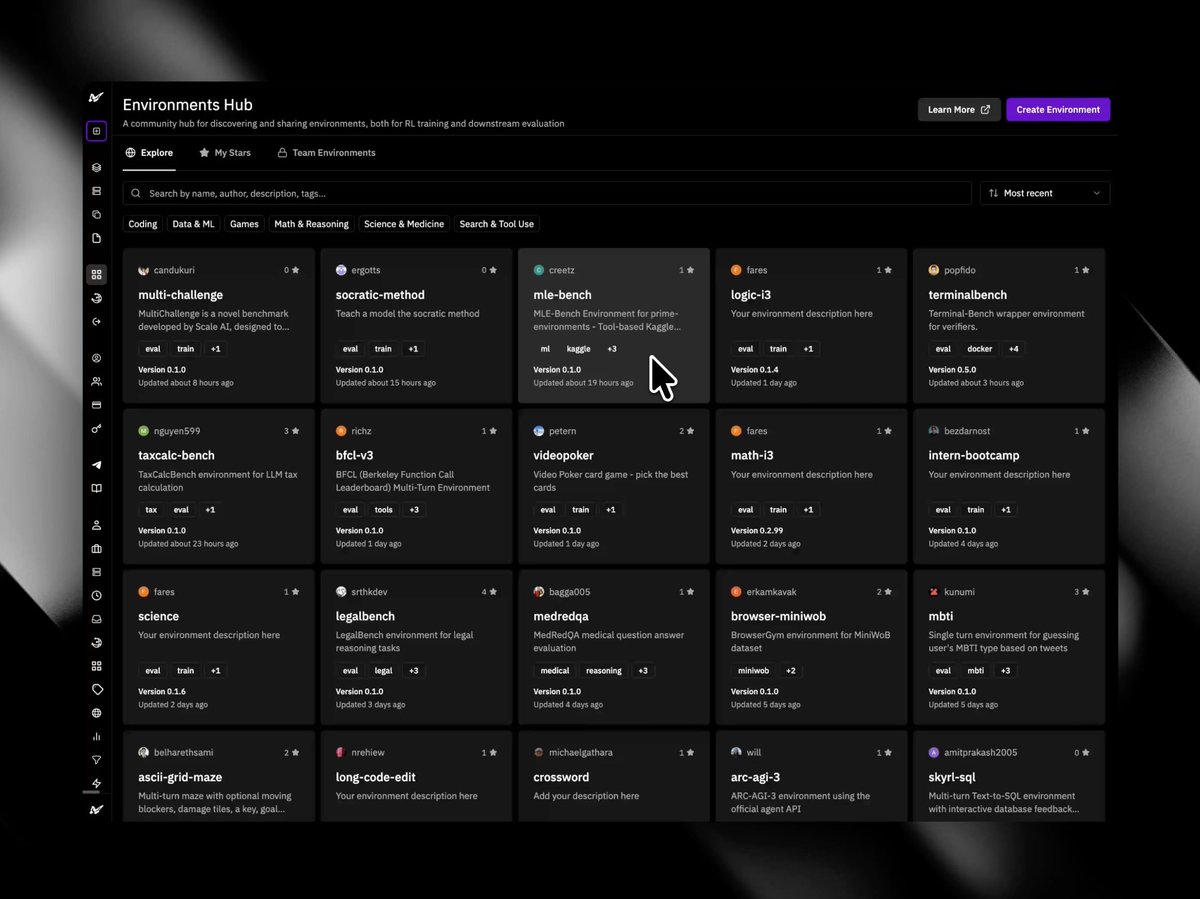

NanoGPT Speedrun

Expanding our Compute Exchange

Lean 4 Theorem Proving

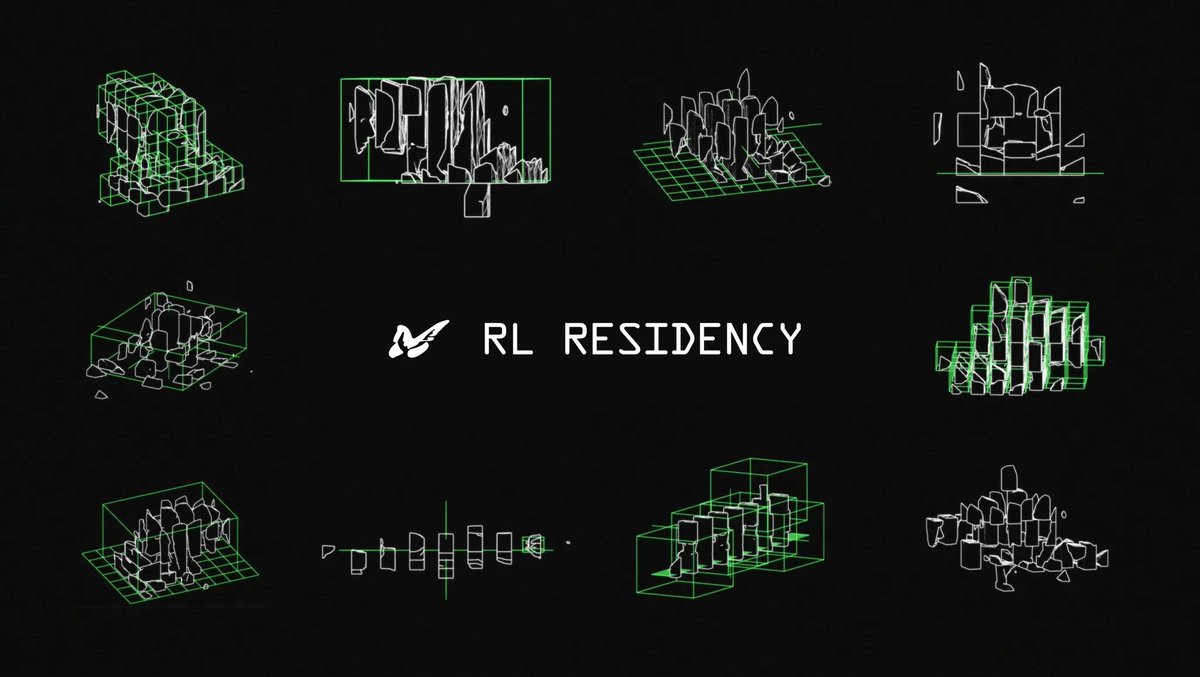

The RL Residency gives you:

The Problem: Trust in LLM Inference

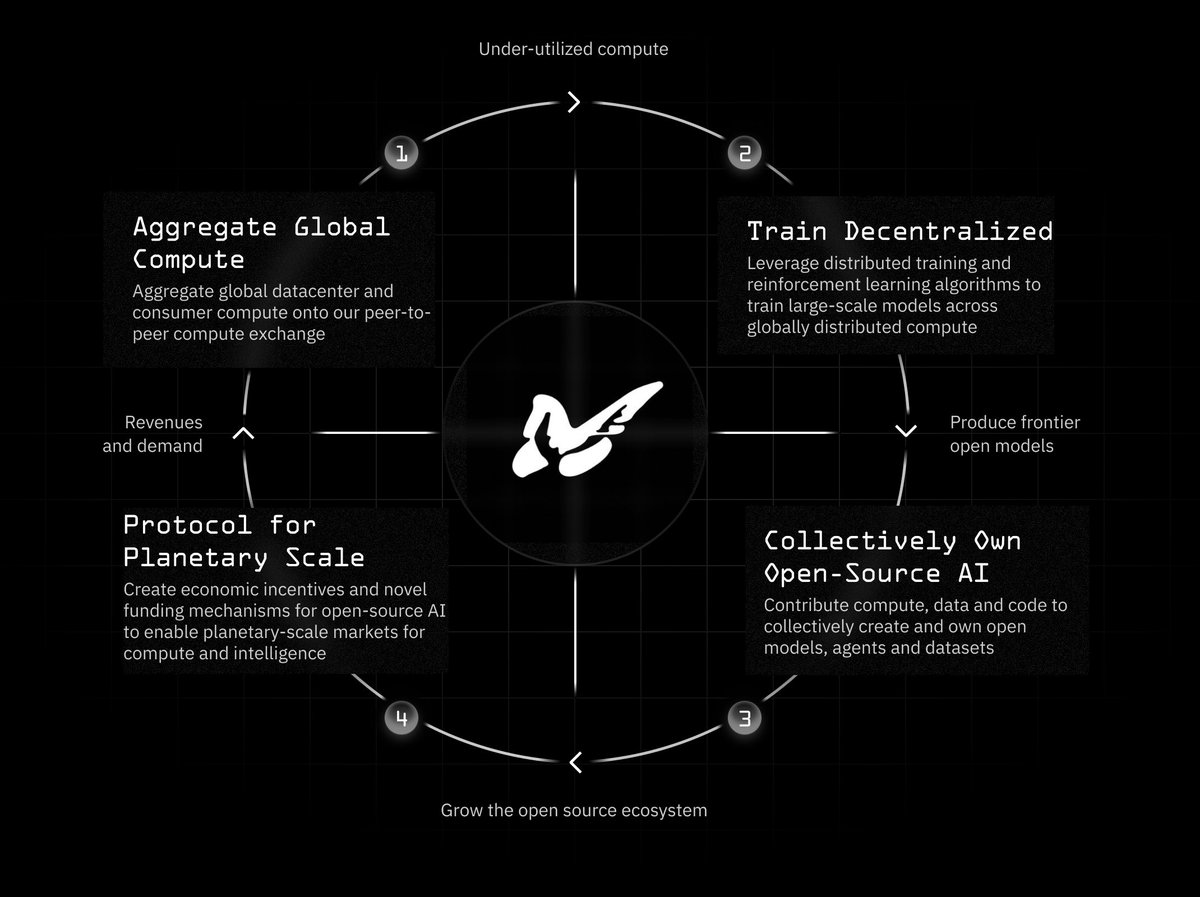

Our vision