🏗️ Hardware memory bandwidth is becoming the choke point slowing down GenAI.

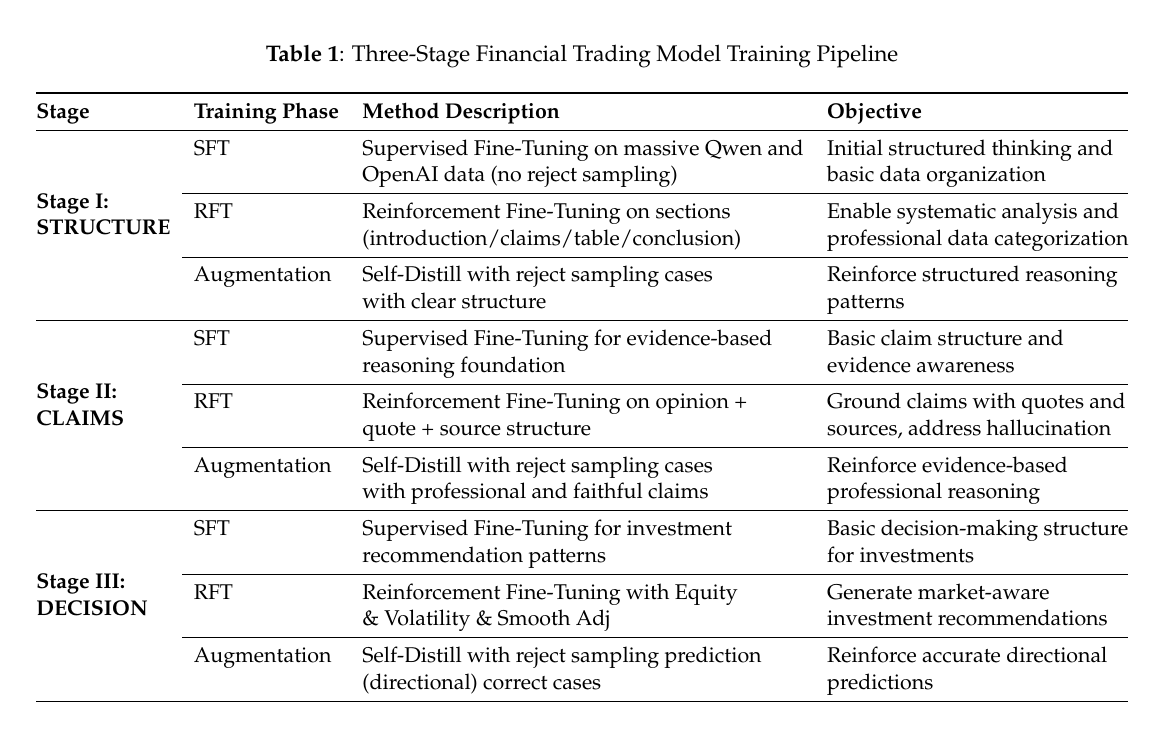

During 2018–2022, transformer model size grew ~410× every 2 years, while memory per accelerator grew only about 2× every 2 years.

And that mismatch shoves us into a “Memory Wall.”
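To see how quickly those two rates diverge, here is a minimal sketch that compounds the quoted per-2-year factors over the 4 years from 2018 to 2022. The function name `projected_growth` is hypothetical, and treating the rates as constant over the whole period is an assumption.

```python
def projected_growth(factor_per_period: float, years: float, period_years: float = 2.0) -> float:
    """Compound a growth factor that applies once every `period_years`."""
    return factor_per_period ** (years / period_years)


# Figures quoted above: ~410x vs ~2x every 2 years, compounded over 4 years.
model_growth = projected_growth(410, 4)   # two 2-year periods
memory_growth = projected_growth(2, 4)
gap = model_growth / memory_growth

print(f"model size: ~{model_growth:,.0f}x, memory per accelerator: ~{memory_growth:.0f}x")
print(f"models outgrew per-accelerator memory by ~{gap:,.0f}x")
```

Under these assumptions, model size compounds to roughly 168,000× while memory per accelerator reaches only about 4×.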

The "memory wall" creates challenges both in the datacenter and in edge AI applications.

In the datacenter, the main response so far has been to apply more GPU compute power. More compute alone does not move data any faster, which is why HBM capacity and bandwidth scaling, KV offload, and prefill-decode disaggregation are central to accelerator roadmaps.

Still, at the edge, quite frankly, there are no good solutions.

🚫 Bandwidth is now the bottleneck (not just capacity).

Even when you can somehow fit the weights, the chips can’t feed data fast enough from memory to the compute units.

Over the last ~20 years, peak compute rose ~60,000×, but DRAM bandwidth only ~100× and interconnect bandwidth ~30×. Result: the processor sits idle waiting for data—the classic “memory wall.”
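A rough way to put numbers on this is the roofline bound: attainable throughput is the smaller of peak compute and bandwidth times arithmetic intensity (FLOPs per byte moved). The sketch below uses the growth figures quoted above; the chip numbers (`1e15` FLOP/s and `3e12` bytes/s) are hypothetical placeholders, not the specs of any real accelerator.

```python
def growth_gap(compute_growth: float, bandwidth_growth: float) -> float:
    """How many times faster peak compute grew than bandwidth over the same period."""
    return compute_growth / bandwidth_growth


def attainable_flops(peak_flops: float, mem_bw_bytes_per_s: float, flops_per_byte: float) -> float:
    """Roofline bound: the lower of peak compute and what memory can feed."""
    return min(peak_flops, mem_bw_bytes_per_s * flops_per_byte)


# Growth figures quoted in the thread (~20 years).
dram_gap = growth_gap(60_000, 100)          # compute vs DRAM bandwidth
interconnect_gap = growth_gap(60_000, 30)   # compute vs interconnect bandwidth

# Hypothetical accelerator: 1e15 FLOP/s peak, 3e12 bytes/s memory bandwidth.
PEAK, BW = 1e15, 3e12
low_intensity = attainable_flops(PEAK, BW, 2.0)      # memory-bound regime
high_intensity = attainable_flops(PEAK, BW, 1000.0)  # compute-bound regime

print(f"compute outgrew DRAM bandwidth by {dram_gap:.0f}x, interconnect by {interconnect_gap:.0f}x")
print(f"at 2 FLOPs/byte the chip reaches {low_intensity / PEAK:.1%} of peak")
```

With these placeholder numbers, a workload doing 2 FLOPs per byte reaches well under 1% of peak, which is the "processor sits idle" situation described above.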

This hits decoder-style LLM inference the hardest.

Because decoder-style LLMs generate 1 token at a time, each step reuses the same weights but must stream a growing KV cache from memory. That keeps arithmetic intensity low, since you move a lot of bytes per token relative to FLOPs.

As the context grows, the KV cache grows linearly with sequence length and layer count, so every new token has to read more KV tensors. The KV cache therefore quickly dominates bytes moved.

And that's why so much recent research focuses on reducing or reorganizing KV movement rather than adding FLOPs.
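The linear growth can be sketched with back-of-the-envelope arithmetic. The model shape below (32 layers, 32 KV heads, head dimension 128, 7B parameters, fp16) and the 1e12 bytes/s bandwidth are hypothetical values chosen for illustration. The sketch also assumes batch size 1 and that every weight and every cached K/V element is read from memory once per generated token.

```python
def kv_cache_bytes(num_layers: int, num_kv_heads: int, head_dim: int,
                   seq_len: int, batch_size: int = 1, bytes_per_elem: int = 2) -> int:
    """KV cache size: one K and one V tensor per layer, per cached token."""
    return 2 * num_layers * num_kv_heads * head_dim * seq_len * batch_size * bytes_per_elem


def decode_bytes_per_token(num_params: int, kv_bytes: int, bytes_per_param: int = 2) -> int:
    """Bytes streamed per decode step: all weights plus the whole KV cache."""
    return num_params * bytes_per_param + kv_bytes


def max_tokens_per_second(mem_bw_bytes_per_s: float, bytes_per_token: float) -> float:
    """Upper bound on decode speed when memory bandwidth is the only limit."""
    return mem_bw_bytes_per_s / bytes_per_token


# Hypothetical 7B-parameter model in fp16 on a 1e12 bytes/s memory system.
for seq_len in (4_096, 32_768, 131_072):
    kv = kv_cache_bytes(num_layers=32, num_kv_heads=32, head_dim=128, seq_len=seq_len)
    step = decode_bytes_per_token(7_000_000_000, kv)
    print(f"context {seq_len:>7}: KV cache {kv / 2**30:5.1f} GiB, "
          f"at most {max_tokens_per_second(1e12, step):5.1f} tokens/s")
```

In this toy setup the KV cache is 2 GiB at a 4,096-token context and 64 GiB at 131,072 tokens, at which point it outweighs the 14 GB of weights and sets the bandwidth-bound token rate.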

Training often needs 3–4× more memory than the parameters alone, because you must hold parameters, gradients, optimizer states, and activations.

Hence we have this huge bandwidth gap: moving weights, activations, and KV cache between chips/GPUs is slower than the rate at which raw compute can consume them.
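One way a multiplier in that range can arise is sketched below. It assumes parameters, gradients, and optimizer states are all stored at the same precision, and it takes activation memory as an input because that depends heavily on batch size and sequence length. The function name and defaults are hypothetical, not taken from any particular framework.

```python
def training_memory_bytes(num_params: int, bytes_per_elem: int = 4,
                          optimizer_states_per_param: int = 2,
                          activation_bytes: int = 0) -> int:
    """Rough training footprint: parameters + gradients + optimizer states + activations."""
    params = num_params * bytes_per_elem
    grads = params                                   # one gradient per parameter
    optimizer = optimizer_states_per_param * params  # e.g. 2 moment buffers for Adam-style optimizers
    return params + grads + optimizer + activation_bytes


n = 1_000_000_000  # hypothetical 1B-parameter model
params_only = n * 4
adam_like = training_memory_bytes(n)                                    # 2 optimizer states
momentum_like = training_memory_bytes(n, optimizer_states_per_param=1)  # 1 optimizer state

print(f"Adam-style: {adam_like / params_only:.0f}x the parameter memory, before activations")
print(f"momentum-style: {momentum_like / params_only:.0f}x the parameter memory, before activations")
```

Activations come on top of this, so the real footprint depends on the workload and on techniques such as mixed precision or activation checkpointing.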

Together, these dominate runtime and cost for modern LLMs.

🧵 Read on 👇

🧵2/n. AI and Memory Wall

The availability of vast amounts of unsupervised training data, along with neural scaling laws, has resulted in an unprecedented surge in model size and compute requirements for serving and training LLMs. However, the main performance bottleneck is increasingly shifting to memory bandwidth.

🧵3/n. We can see the huge growth in HBM (High Bandwidth Memory) bit demand that has come alongside AI accelerator demand.

This is a direct manifestation of that "Memory Wall."

As models grow, we need more bits of HBM just to store weights, activations, and KV caches. That is pushing us from “memory capacity limited” into “memory bandwidth limited” if we can’t feed the compute units fast enough.

Every increase in model size, context length, or batch size means more bytes to move. That increases pressure on DRAM/HBM interfaces and interconnects. The more HBM capacity is added, the more bandwidth you must deliver to fully utilize it.

You can’t freely scale HBM bandwidth linearly with capacity. Physical limits (pin count, thermal, power, cost) constrain how much bandwidth can be added. So even though bit demand explodes, the ability to feed those bits hasn’t kept pace, reinforcing the memory wall.

When a single GPU demands 1 TB of HBM, it's not just memory chips you need. The entire stack (HBM interface, interconnect, packaging, cooling) must scale to deliver that throughput.
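A simple way to see why capacity and bandwidth have to scale together is the time needed to read the whole memory once. In the sketch below, the 1 TB capacity comes from the example above, while the 4e12 bytes/s bandwidth is a hypothetical placeholder and not the spec of any real part.

```python
def sweep_time_seconds(capacity_bytes: float, bandwidth_bytes_per_s: float) -> float:
    """Minimum time to read every byte of memory once at full bandwidth."""
    return capacity_bytes / bandwidth_bytes_per_s


CAPACITY = 1e12   # 1 TB of HBM, as in the example above
BANDWIDTH = 4e12  # hypothetical 4 TB/s aggregate HBM bandwidth

one_tb = sweep_time_seconds(CAPACITY, BANDWIDTH)
two_tb_same_bw = sweep_time_seconds(2 * CAPACITY, BANDWIDTH)

print(f"1 TB at 4 TB/s: {one_tb:.2f} s per full sweep")
print(f"2 TB at the same bandwidth: {two_tb_same_bw:.2f} s per full sweep")
```

Doubling capacity without adding bandwidth doubles the sweep time, so any workload that touches most of memory on each step gets slower even though more of it now fits.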
