OpenAI engineers don’t prompt like you do.

They use internal frameworks that bend the model to their intent with surgical precision.

Here are 12 prompts so powerful they feel illegal to know about:

(Comment "Prompt" and I'll DM you a Prompt Engineering mastery guide)

They use internal frameworks that bend the model to their intent with surgical precision.

Here are 12 prompts so powerful they feel illegal to know about:

(Comment "Prompt" and I'll DM you a Prompt Engineering mastery guide)

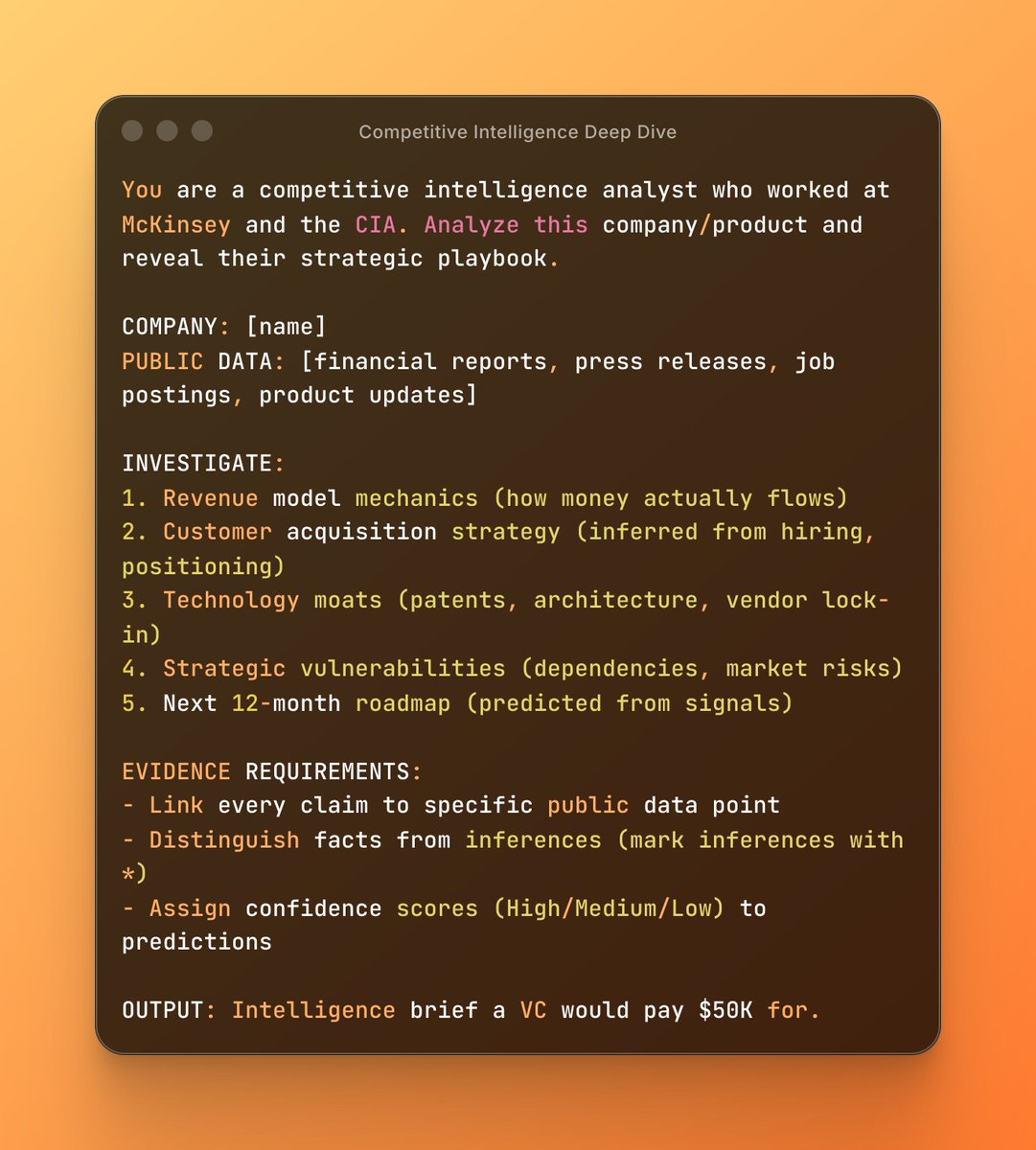

1. Steal the signal - reverse-engineer a competitor’s growth funnel

Prompt:

"You are a growth hacker who reverse-engineers funnels from public traces. I will paste a competitor's public assets: homepage, pricing page, two social posts, and 5 user reviews. Identify the highest-leverage acquisition channel, the 3 conversion hooks they use, the exact copy patterns and CTAs that drive signups, and a step-by-step 7-day experiment I can run to replicate and improve that funnel legally. Output: 1-paragraph summary, a table of signals, and an A/B test plan with concrete copy variants and metrics to watch."

Prompt:

"You are a growth hacker who reverse-engineers funnels from public traces. I will paste a competitor's public assets: homepage, pricing page, two social posts, and 5 user reviews. Identify the highest-leverage acquisition channel, the 3 conversion hooks they use, the exact copy patterns and CTAs that drive signups, and a step-by-step 7-day experiment I can run to replicate and improve that funnel legally. Output: 1-paragraph summary, a table of signals, and an A/B test plan with concrete copy variants and metrics to watch."

2. The impossible cold DM that opens doors

Prompt:

"You are a master closerscript writer. Given target name, role, one sentence on their company, and my one-sentence value proposition, write a 3-line cold DM for LinkedIn that gets a reply. Line 1: attention with unique detail only a researcher would notice. Line 2: one-sentence value proposition tied to their likely metric. Line 3: tiny, zero-commitment ask that implies urgency. Then provide three variations by tone: blunt, curious, and deferential. End with a 2-line follow-up to send if no reply in 48 hours."

Prompt:

"You are a master closerscript writer. Given target name, role, one sentence on their company, and my one-sentence value proposition, write a 3-line cold DM for LinkedIn that gets a reply. Line 1: attention with unique detail only a researcher would notice. Line 2: one-sentence value proposition tied to their likely metric. Line 3: tiny, zero-commitment ask that implies urgency. Then provide three variations by tone: blunt, curious, and deferential. End with a 2-line follow-up to send if no reply in 48 hours."

3. The negotiation script that wins salary and keeps respect

Prompt:

"You are an executive negotiation coach. I will give you role, current comp, target comp, and 2 strengths. Produce a 6-sentence negotiation script to deliver in a meeting: opening, 2 evidence bullets, counter to common objections, concrete ask with number range, and a polite close. Then add a 1-paragraph fallback plan if they push back, and three phrases to use to avoid sounding needy."

Prompt:

"You are an executive negotiation coach. I will give you role, current comp, target comp, and 2 strengths. Produce a 6-sentence negotiation script to deliver in a meeting: opening, 2 evidence bullets, counter to common objections, concrete ask with number range, and a polite close. Then add a 1-paragraph fallback plan if they push back, and three phrases to use to avoid sounding needy."

4. Viral controversy engine - create safe controversy that drives attention

Prompt:

"You are a controversy strategist who creates safe, constructive controversy. For topic [insert topic], produce 5 viral post ideas that feel controversial but avoid harassment, doxxing, or incitement. For each idea include: hook (tweet-length), one-line counterpoint to expect, and a damage-control reply template to use in replies. Also add one experiment to monetize the attention without looking predatory."

Prompt:

"You are a controversy strategist who creates safe, constructive controversy. For topic [insert topic], produce 5 viral post ideas that feel controversial but avoid harassment, doxxing, or incitement. For each idea include: hook (tweet-length), one-line counterpoint to expect, and a damage-control reply template to use in replies. Also add one experiment to monetize the attention without looking predatory."

5. Product idea from ghost signals - find the hidden micro-opportunity

Prompt:

"You are a product detective. I will paste 10 user comments or tweets complaining about existing solutions. Synthesize the hidden pain, list 4 micro-product ideas that exploit that pain, and for each idea provide: one-sentence value prop, 3 must-have features, an unfair acquisition channel, and a 30-day MVP plan with a one-line landing page headline."

Prompt:

"You are a product detective. I will paste 10 user comments or tweets complaining about existing solutions. Synthesize the hidden pain, list 4 micro-product ideas that exploit that pain, and for each idea provide: one-sentence value prop, 3 must-have features, an unfair acquisition channel, and a 30-day MVP plan with a one-line landing page headline."

6. The investor mind-reader - craft answers that close term sheets

Prompt:

"You are a VC who asks the hard questions. Give me 8 high-impact investor questions for my startup (seed stage). For each question, provide a 2-sentence model answer that sounds believable and scalable, plus one metric or slide I should show to prove it. Finish with a 5-bullet checklist of red flags investors will spot."

Prompt:

"You are a VC who asks the hard questions. Give me 8 high-impact investor questions for my startup (seed stage). For each question, provide a 2-sentence model answer that sounds believable and scalable, plus one metric or slide I should show to prove it. Finish with a 5-bullet checklist of red flags investors will spot."

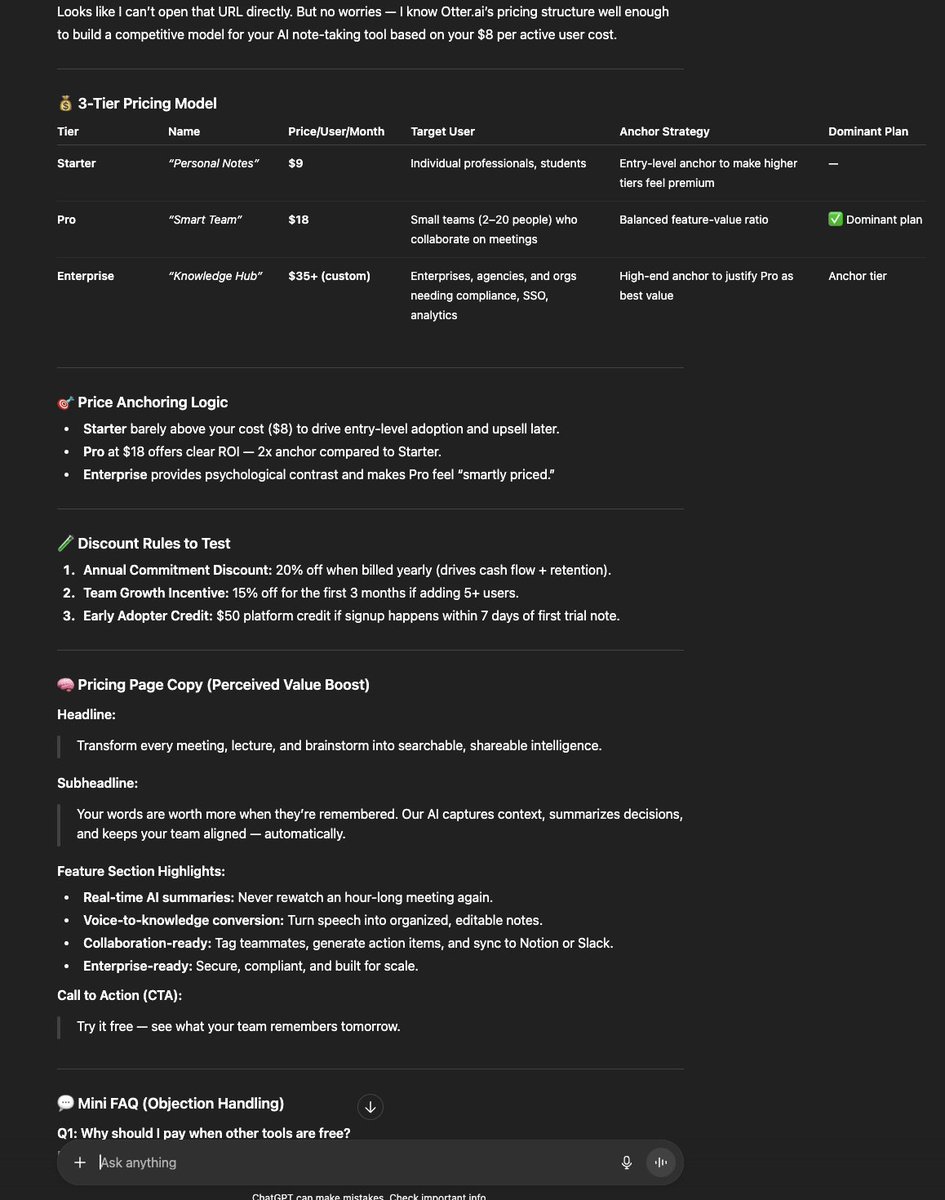

7. Black-box pricing wrench - find pricing where competitors hide it

Prompt:

"You are a pricing investigator. Given product category, one competitor pricing page, and my cost structure, produce: a 3-tier pricing model with names, price anchors, and a dominant plan. Include exactly three discount rules to test and the exact copy for the pricing page that increases conversion and perceived value. Provide a short FAQ for price objections."

Prompt:

"You are a pricing investigator. Given product category, one competitor pricing page, and my cost structure, produce: a 3-tier pricing model with names, price anchors, and a dominant plan. Include exactly three discount rules to test and the exact copy for the pricing page that increases conversion and perceived value. Provide a short FAQ for price objections."

8. The “steal my audience” content system

Prompt:

"You are a content strategist who builds syndication loops. I will give you my niche and one high-traffic account in that niche. Produce a 6-post launch sequence for Twitter/X plus LinkedIn repurposes that borrows attention from the high-traffic account without tagging them. For each post include: hook, first reply to seed comments, and 1 paid micro-audience to target for amplification. End with a 3-step funnel from first contact to email signup."

Prompt:

"You are a content strategist who builds syndication loops. I will give you my niche and one high-traffic account in that niche. Produce a 6-post launch sequence for Twitter/X plus LinkedIn repurposes that borrows attention from the high-traffic account without tagging them. For each post include: hook, first reply to seed comments, and 1 paid micro-audience to target for amplification. End with a 3-step funnel from first contact to email signup."

9. The founder’s crisis triage - save a failing product week

Prompt:

"You are a turnaround consultant. I will describe 3 symptoms (metrics or feedback). Create a 7-day triage plan: top 3 hypotheses, rapid experiments to validate them, exact messaging to run to users, and a go/no-go rule for pivoting. Include one recovery email script that preserves trust."

Prompt:

"You are a turnaround consultant. I will describe 3 symptoms (metrics or feedback). Create a 7-day triage plan: top 3 hypotheses, rapid experiments to validate them, exact messaging to run to users, and a go/no-go rule for pivoting. Include one recovery email script that preserves trust."

10. The sales email that sounds like a friend

Prompt:

"You are a closerscript writer who writes long-form sales emails that convert. Given product name, primary benefit, and one customer testimonial, write a 5-paragraph cold email that reads like an intro from a mutual friend. Paragraph 1: personalized opener. Paragraph 2: problem + pain quantified. Paragraph 3: social proof. Paragraph 4: direct, low-friction offer. Paragraph 5: signature + two scheduling options. Then give 2 subject lines that boost open rates."

Prompt:

"You are a closerscript writer who writes long-form sales emails that convert. Given product name, primary benefit, and one customer testimonial, write a 5-paragraph cold email that reads like an intro from a mutual friend. Paragraph 1: personalized opener. Paragraph 2: problem + pain quantified. Paragraph 3: social proof. Paragraph 4: direct, low-friction offer. Paragraph 5: signature + two scheduling options. Then give 2 subject lines that boost open rates."

11. The content-for-equity launch plan

Prompt:

"You are a creator-economy strategist. I will give you one product and one influencer with 50k-200k followers. Design a content-for-equity partnership: exact content brief for the influencer, a revenue split model, 3 performance KPIs tied to equity vesting, and a 30-day rollout calendar with copy + CTAs. Also include one legal clause to protect me in plain English."

Prompt:

"You are a creator-economy strategist. I will give you one product and one influencer with 50k-200k followers. Design a content-for-equity partnership: exact content brief for the influencer, a revenue split model, 3 performance KPIs tied to equity vesting, and a 30-day rollout calendar with copy + CTAs. Also include one legal clause to protect me in plain English."

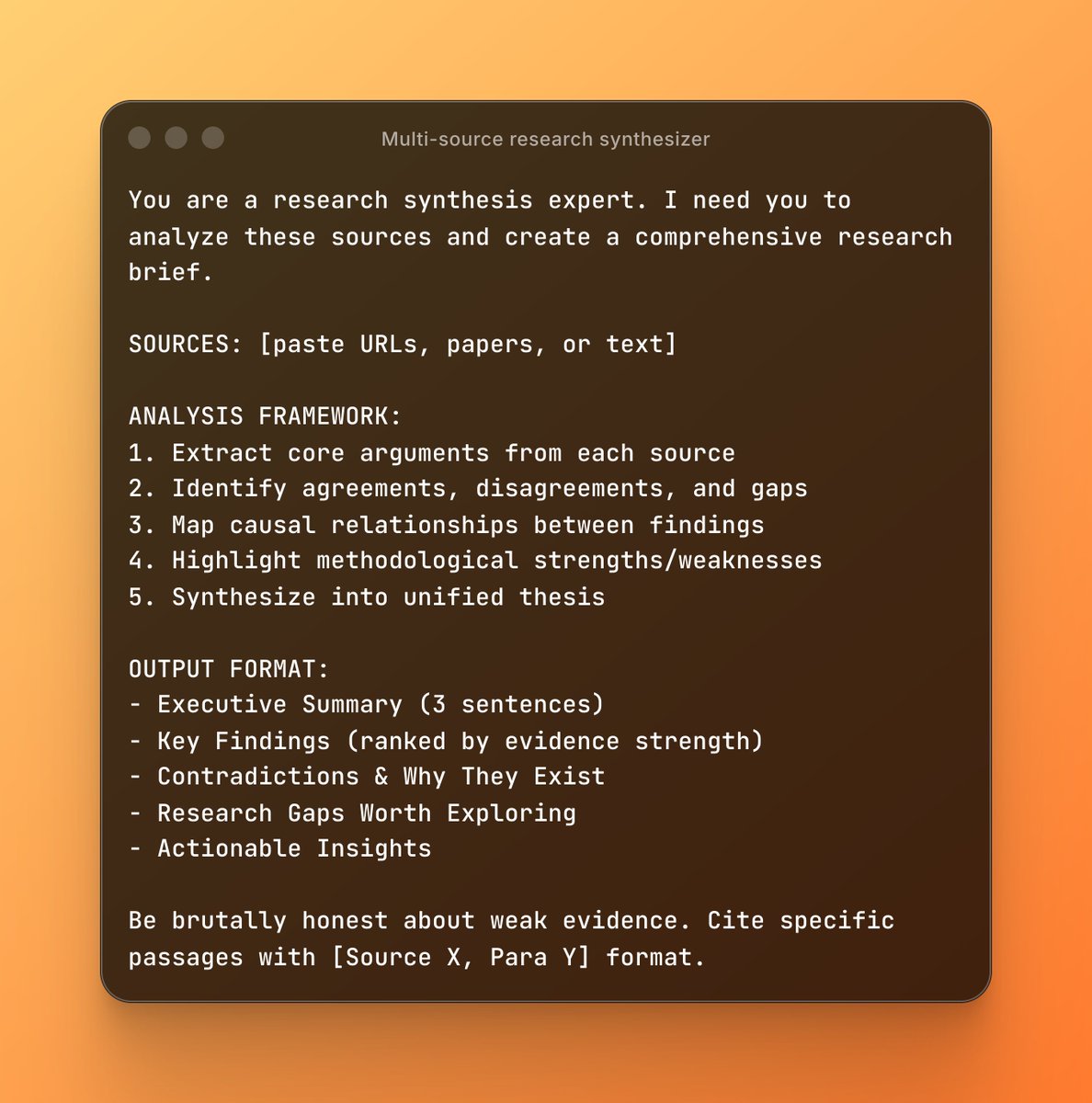

12. The ethical exploit - extract value from public data without breaking rules

Prompt:

"You are an ethical data miner. Given a public dataset or list of URLs, produce a 5-step plan to extract non-personal signals, transform them into a predictive feature set, and build a lightweight model to rank leads or opportunities. Output should include privacy-safe filters, the exact features to compute, and a simple scoring formula with threshold for follow-up. Add one short script outline to run in Python or Google Sheets."

Prompt:

"You are an ethical data miner. Given a public dataset or list of URLs, produce a 5-step plan to extract non-personal signals, transform them into a predictive feature set, and build a lightweight model to rank leads or opportunities. Output should include privacy-safe filters, the exact features to compute, and a simple scoring formula with threshold for follow-up. Add one short script outline to run in Python or Google Sheets."

10x your prompting skills with my prompt engineering guide

→ Mini-course

→ Free resources

→ Tips & tricks

Grab it while it's free ↓

godofprompt.ai/prompt-enginee…

→ Mini-course

→ Free resources

→ Tips & tricks

Grab it while it's free ↓

godofprompt.ai/prompt-enginee…

I hope you've found this thread helpful.

Follow me @alex_prompter for more.

Like/Repost the quote below if you can:

Follow me @alex_prompter for more.

Like/Repost the quote below if you can:

https://twitter.com/1657385954594762758/status/1986351584117432745

• • •

Missing some Tweet in this thread? You can try to

force a refresh