Some people are unhappy with the AI 2027 title and our AI timelines. Let me quickly clarify:

We’re not confident that:

1. AGI will happen in exactly 2027 (2027 is one of the most likely specific years though!)

2. It will take <1 yr to get from AGI to ASI

3. AGIs will definitely be misaligned

We’re confident that:

1. AGI and ASI will eventually be built and might be built soon

2. ASI will be wildly transformative

3. We’re not ready for AGI and should be taking this whole situation way more seriously

🧵 with more details

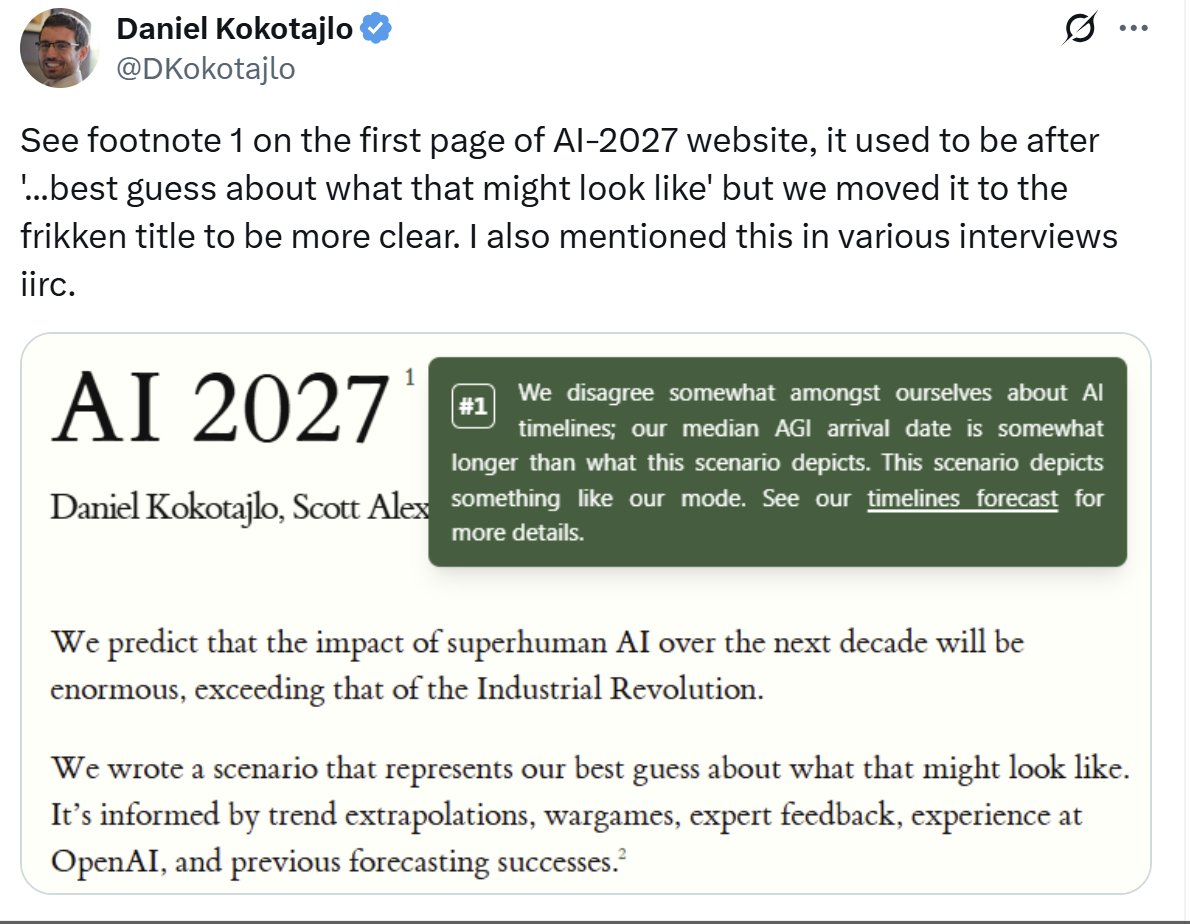

All AI 2027 authors, at the time of publication, thought that the probability of AGI by the end of 2027 was at least 10%, and that the single most likely year for AGI to arrive was either 2027 or 2028. I, the lead author, thought AGI by the end of 2027 was ~40% likely (i.e. not quite my median forecast). We clarified this in AI 2027 itself, from day 1:

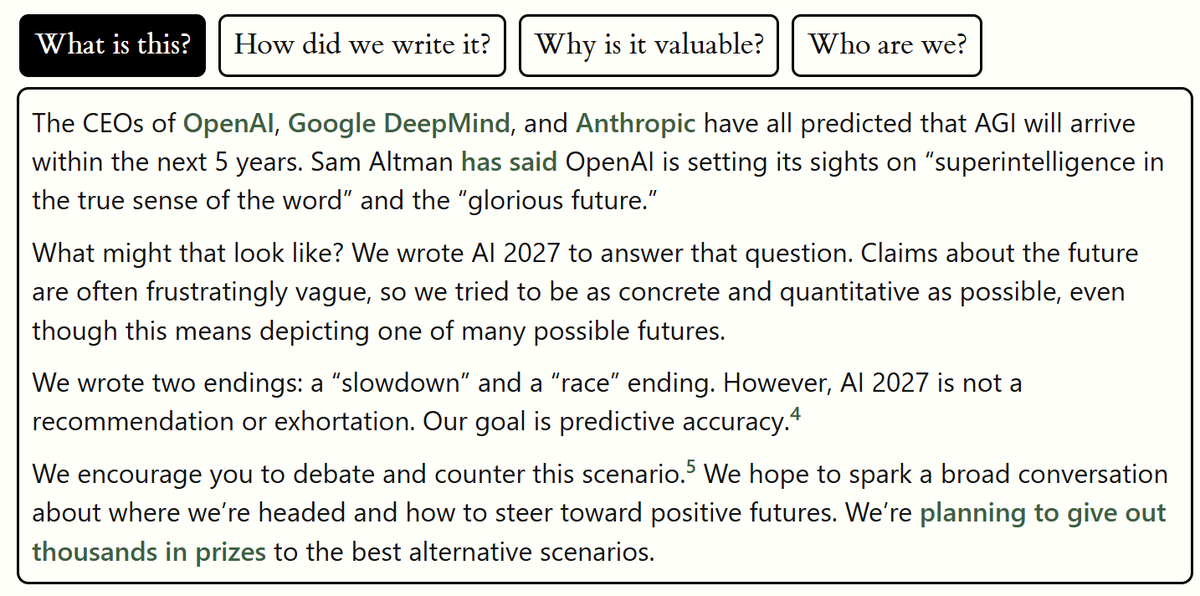

Why did we choose to write a scenario in which AGI happens in 2027, if it was our mode and not our median? Well, at the time I started writing, 2027 *was* my median, but by the time we finished, 2028 was my median. The other authors had longer medians but agreed that 2027 was plausible (it was ~their mode after all!), and it was my project so they were happy to execute on my vision. More importantly though, we thought (and continue to think) that the purpose of the scenario was not ‘here’s why AGI will happen in specific year X’ but rather ‘we think AGI/superintelligence/etc. might happen soon; but what would that even look like, concretely? How would the government react? What about the effects on… etc.’ We talk about this on the front page:

In retrospect it was a bad idea for me to tweet the below without explaining what I meant; understandably some people were confused and even angry, perhaps because it sounded like I was trying to weasel out of past predictions and/or that the AI 2027 title had misleadingly led them to believe 2027 was our median:

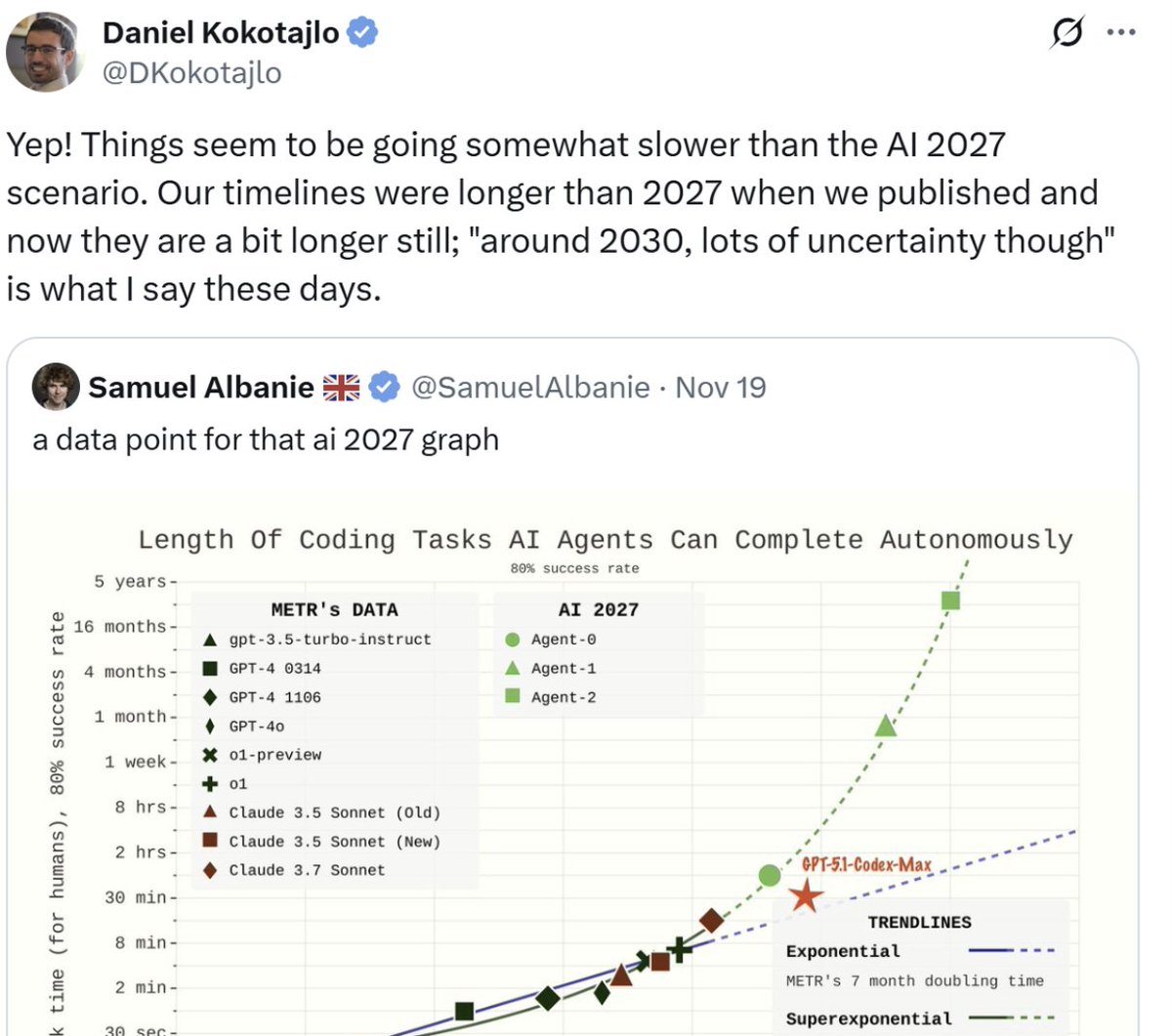

These days I give my AGI median as 2030ish, with the mode being somewhat sooner. We will soon publish our updated, improved timelines & takeoff model, along with a blog post explaining how and why our views have updated over the past year. (The tl;dr is that progress has been somewhat slower than we expected & also we now have a new and improved model that gives somewhat different results.) When we do this we’ll link to it from the landing page of AI 2027, to reduce further confusion. In fact, we’ve gone ahead and put in this disclaimer for now:

Our plan is to continue to try to forecast the future of AI and continue to write scenarios as a vehicle for doing so. Insofar as our forecasts are wrong, we’ll update our beliefs and acknowledge this publicly, as we have been doing.

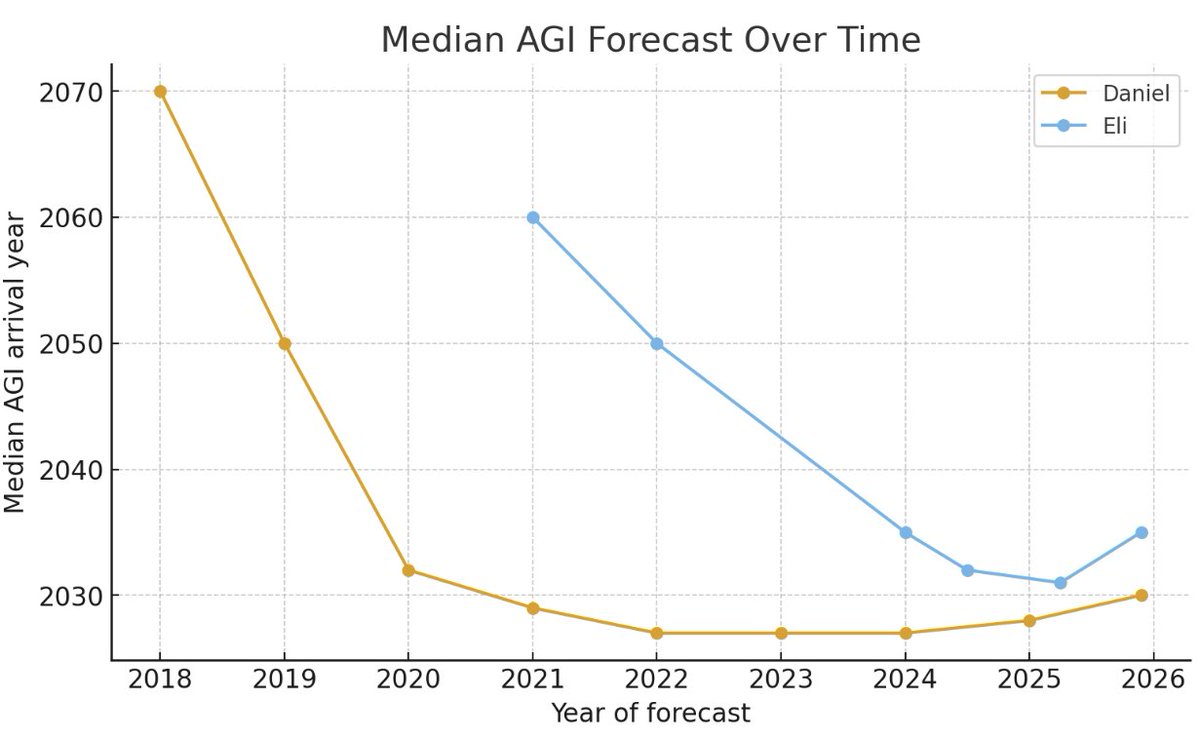

We expect to update toward both shorter and longer timelines at different points in the future, and we have updated in both directions in the past (see attached graph). Both Eli and I dramatically shortened our median timelines after thinking more carefully about AGI (me starting in 2018, Eli starting in 2021). Recently, AI progress has been somewhat slower than we expected, and our improved quantitative models have given longer results, so our medians have crept up by a few years. And remember, these are just our medians; our probability mass continues to be smeared out over many years.

We are hard at work right now on a variety of scenarios that have longer timelines, and are planning to illustrate the range of our views by including a bunch of scenarios that (collectively) cover the bulk of the probability mass. We’ve already published one (fairly rough, lower-effort) longer timelines scenario here: lesswrong.com/posts/yHvzscCi…

I'll try to hang around Twitter/X over the course of the weekend to answer people's questions. Thanks!
