Today, Pennsylvania is landing some of the largest AI infrastructure commitments in the US.

Recent projects illustrate the shift: (1/3) 🧵

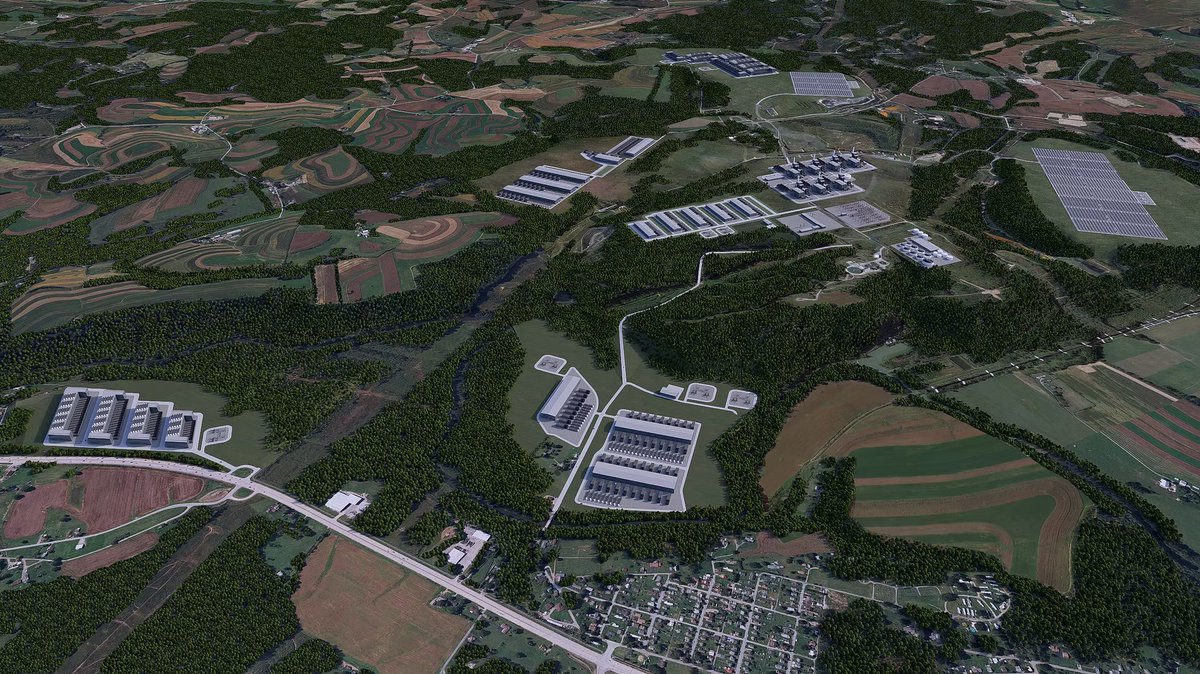

⚆ The Homer City Energy Campus, redeveloping a former coal plant into a gas-powered datacenter hub with up to 4.5 GW of new generation

⚆ TECfusions’ Keystone Connect, a 1,395-acre campus designed to scale toward ~3 GW of IT capacity using a hybrid grid + on-site generation model

⚆ Multiple additional multi-hundred-MW and gigawatt-scale campuses announced across western and central Pennsylvania

Pennsylvania offers a rare combination of: (2/3)

⚆ Abundant, low-cost energy, anchored by natural gas, nuclear, and legacy generation infrastructure

⚆ Brownfield megasites (retired coal and industrial plants) that already have transmission access, water rights, and industrial zoning

⚆ State-level coordination, where permitting, utility alignment, and local approvals are increasingly moving in parallel rather than sequentially

⚆ Geographic proximity to Northern Virginia without Northern Virginia’s congestion, pricing, or political friction

⚆ Execution certainty, as projects are anchored to power plants, substations, and real permits rather than speculative land options

For datacenter developers, Pennsylvania removes the hardest constraint in AI infrastructure: delivering power at real scale, on real timelines. When a market can do that, momentum compounds quickly. Pennsylvania is no longer an alternative; it is becoming one of the places where the next generation of AI infrastructure actually gets built. (3/3)