I finally understand how LLMs actually work and why most prompts suck.

After reading Anthropic's internal docs + top research papers...

Here are 10 prompting techniques that completely changed my results 👇

(Comment "Guide" and I'll DM Claude Mastery Guide for free)

After reading Anthropic's internal docs + top research papers...

Here are 10 prompting techniques that completely changed my results 👇

(Comment "Guide" and I'll DM Claude Mastery Guide for free)

1/ Assign a Fake Constraint

This sounds illegal but it forces the AI to think creatively instead of giving generic answers.

The constraint creates unexpected connections.

Copy-paste this:

"Explain quantum computing using only kitchen analogies. Every concept must relate to cooking, utensils, or food preparation."

This sounds illegal but it forces the AI to think creatively instead of giving generic answers.

The constraint creates unexpected connections.

Copy-paste this:

"Explain quantum computing using only kitchen analogies. Every concept must relate to cooking, utensils, or food preparation."

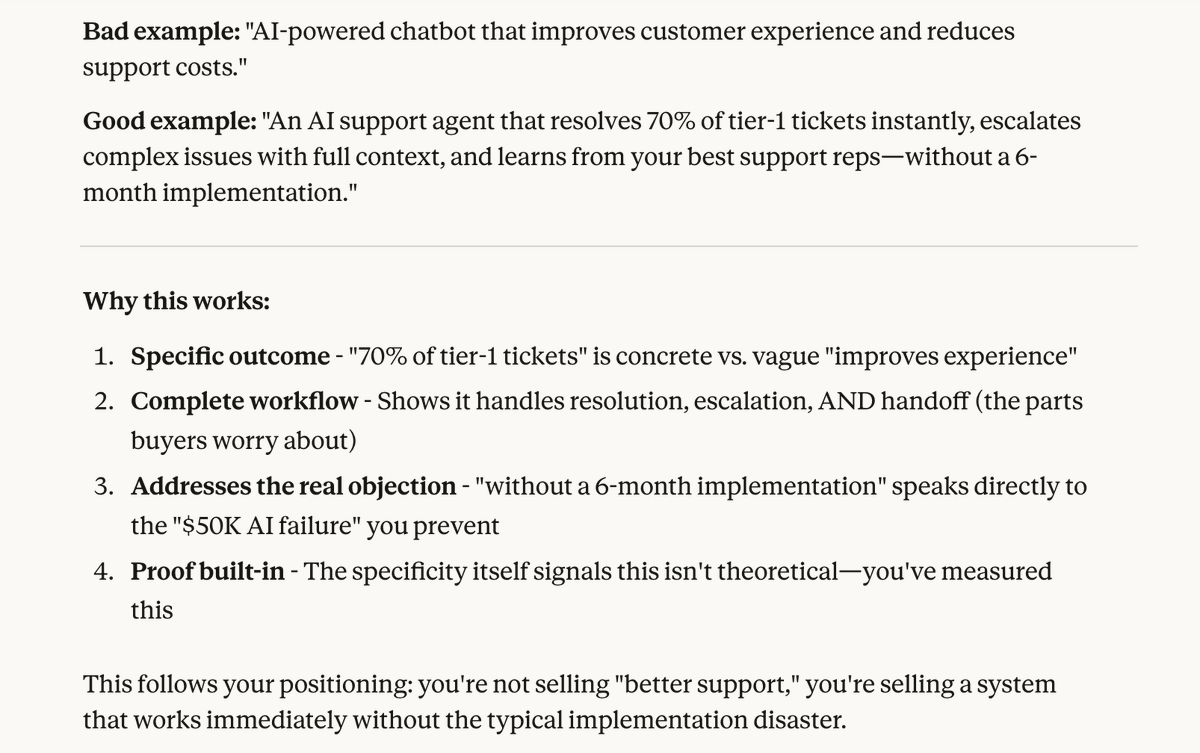

2/ Multi-Shot with Negative Examples

Everyone teaches positive examples. Nobody talks about showing the AI what NOT to do.

This eliminates 90% of bad outputs instantly.

Copy-paste this:

"Write a product description for noise-canceling headphones.

Bad example: 'Great headphones with amazing sound.'

Good example: 'Adaptive ANC technology blocks up to 99% of ambient noise while preserving natural conversation clarity through transparency mode.'

Now write one for wireless earbuds."

Everyone teaches positive examples. Nobody talks about showing the AI what NOT to do.

This eliminates 90% of bad outputs instantly.

Copy-paste this:

"Write a product description for noise-canceling headphones.

Bad example: 'Great headphones with amazing sound.'

Good example: 'Adaptive ANC technology blocks up to 99% of ambient noise while preserving natural conversation clarity through transparency mode.'

Now write one for wireless earbuds."

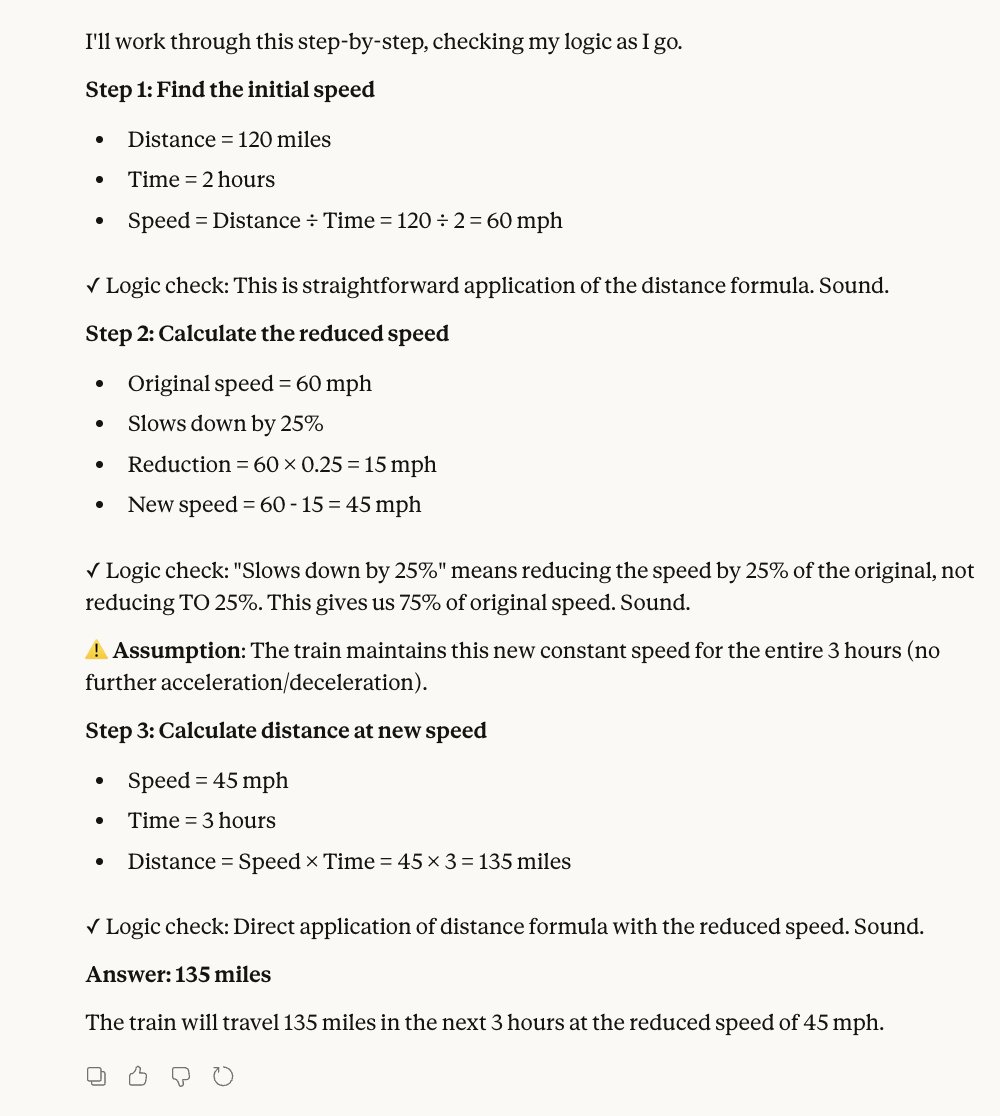

3/ Chain-of-Thought with Verification

Don't just ask for reasoning. Ask the AI to check its own logic at each step.

This catches errors before they compound.

Copy-paste this:

"Solve this problem: If a train travels 120 miles in 2 hours, then slows down by 25%, how far will it travel in the next 3 hours?

After each calculation step, verify if your logic is sound before proceeding. Flag any assumptions you're making."

Don't just ask for reasoning. Ask the AI to check its own logic at each step.

This catches errors before they compound.

Copy-paste this:

"Solve this problem: If a train travels 120 miles in 2 hours, then slows down by 25%, how far will it travel in the next 3 hours?

After each calculation step, verify if your logic is sound before proceeding. Flag any assumptions you're making."

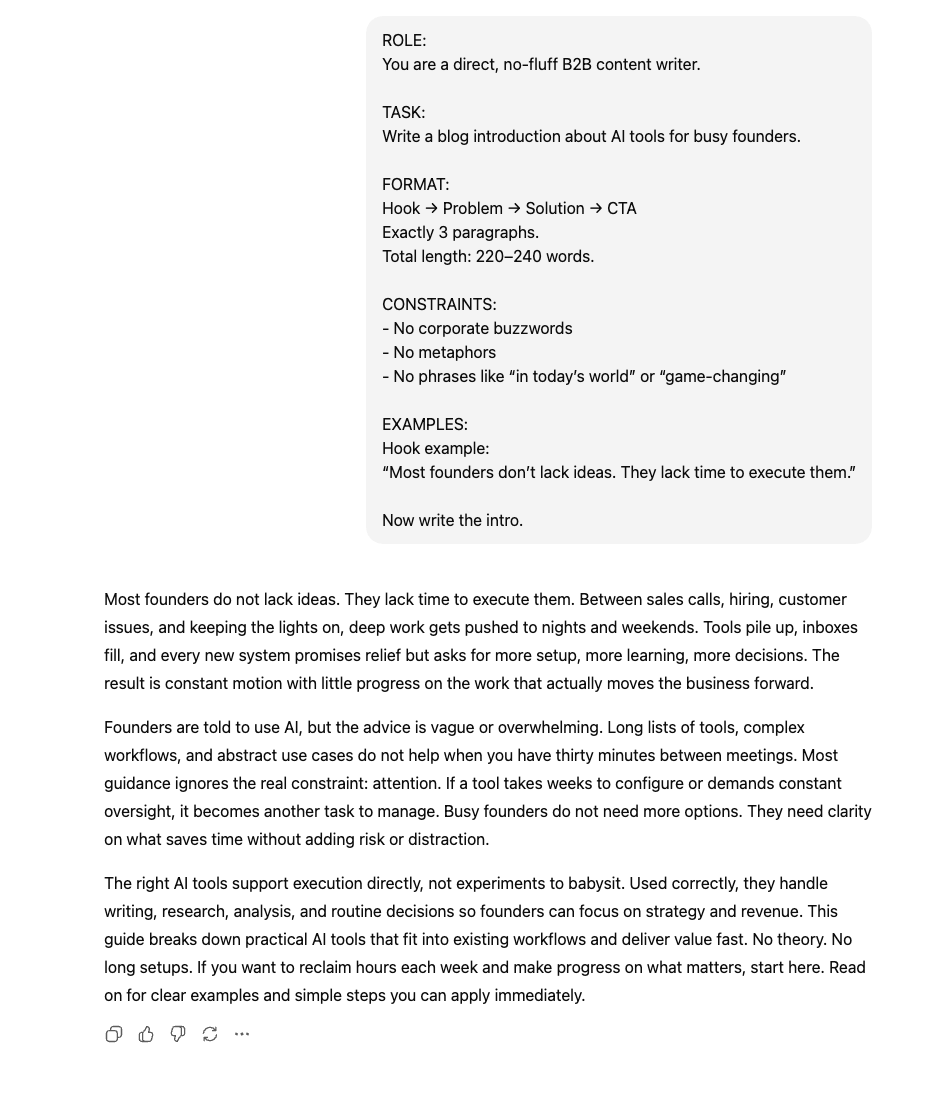

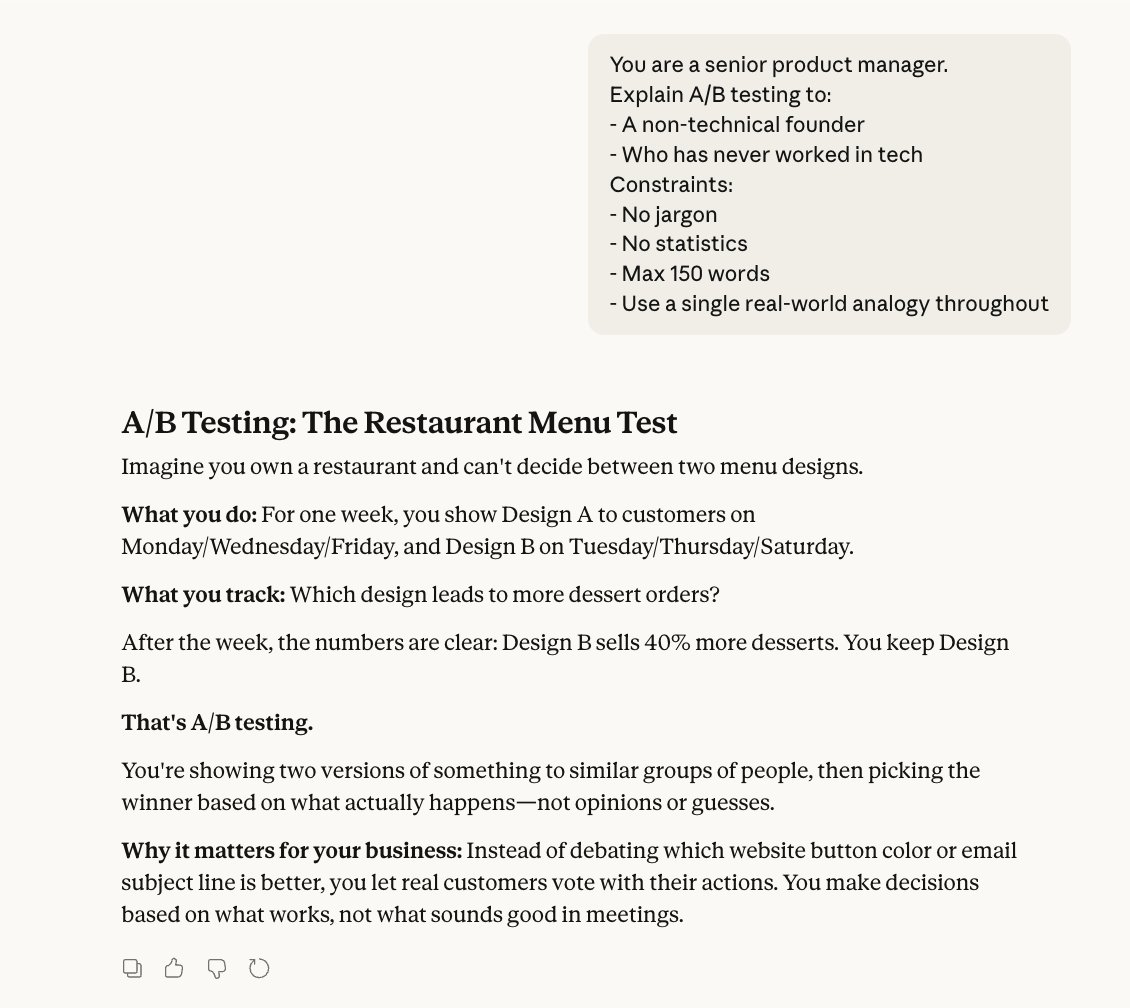

4/ Role + Audience + Constraint (RAC)

The secret formula Anthropic engineers actually use.

Defining WHO the AI is, WHO it's talking to, and WHAT limitations exist.

Copy-paste this:

"You are a senior software architect. Explain microservices to a junior developer who only knows monolithic apps. Use no jargon they wouldn't understand. Maximum 200 words."

The secret formula Anthropic engineers actually use.

Defining WHO the AI is, WHO it's talking to, and WHAT limitations exist.

Copy-paste this:

"You are a senior software architect. Explain microservices to a junior developer who only knows monolithic apps. Use no jargon they wouldn't understand. Maximum 200 words."

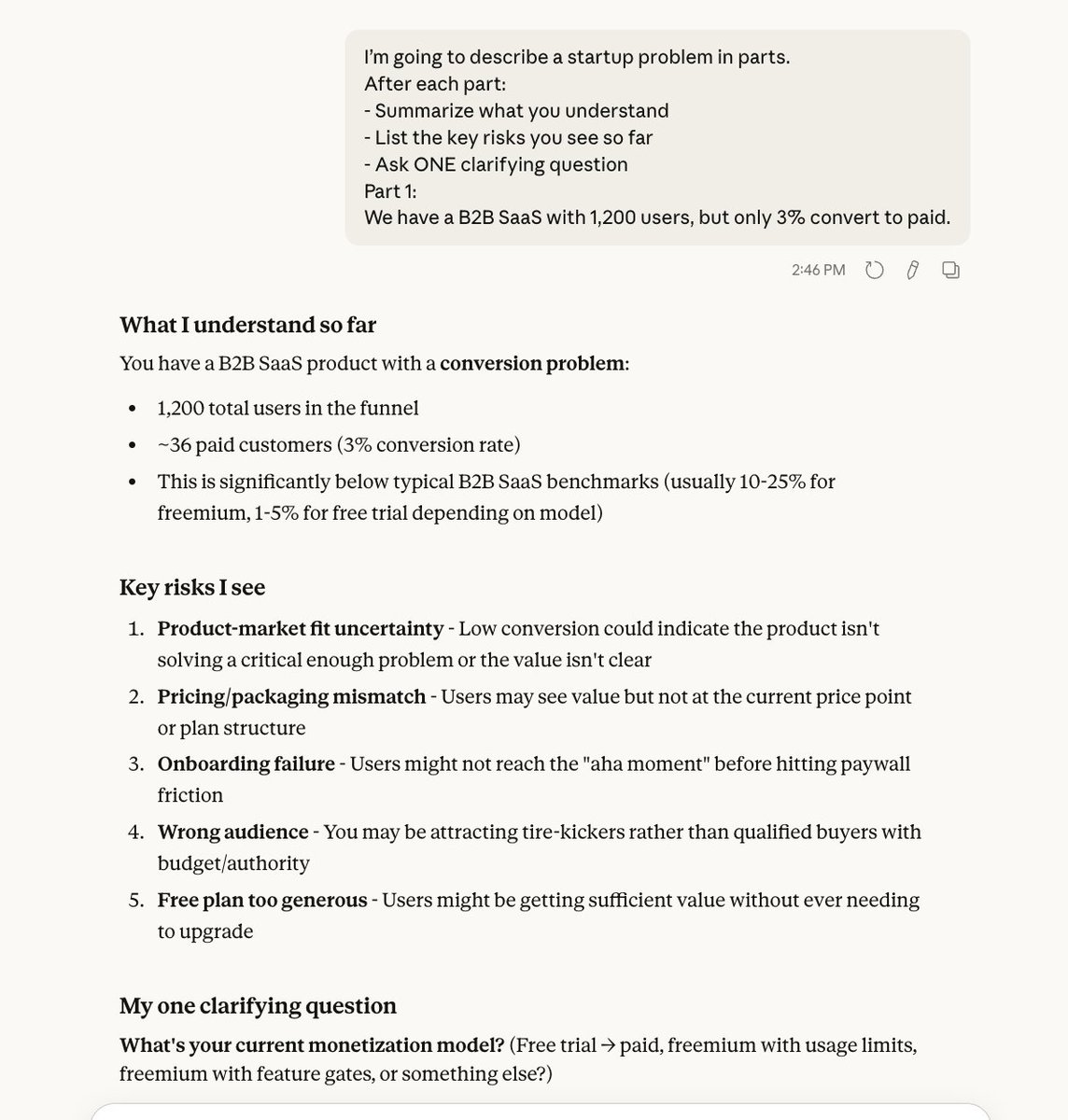

5/ Progressive Disclosure

Instead of dumping everything at once, feed information in stages.

This mirrors how the AI's attention actually works.

Copy-paste this:

"I'm going to describe a business problem in 3 parts. After each part, summarize what you understand before I continue.

Part 1: We have 50,000 users but only 2% convert to paid plans."

Instead of dumping everything at once, feed information in stages.

This mirrors how the AI's attention actually works.

Copy-paste this:

"I'm going to describe a business problem in 3 parts. After each part, summarize what you understand before I continue.

Part 1: We have 50,000 users but only 2% convert to paid plans."

6/ Invoke Self-Critique Mode

This trick makes the AI switch from "helpful assistant" to "critical reviewer."

The quality jump is insane.

Copy-paste this:

"First, write a marketing email for a SaaS product. Then, critique your own email as a skeptical customer would. What objections would you have? Finally, rewrite it addressing those objections."

This trick makes the AI switch from "helpful assistant" to "critical reviewer."

The quality jump is insane.

Copy-paste this:

"First, write a marketing email for a SaaS product. Then, critique your own email as a skeptical customer would. What objections would you have? Finally, rewrite it addressing those objections."

7/ Specify Output Format in Advance

Anthropic's docs are obsessed with this. Structure first, content second.

LLMs perform way better when they know the exact format.

Copy-paste this:

"Analyze this dataset and give me insights in this exact format:

Key Finding: [one sentence]

Supporting Data: [2-3 bullet points]

Recommendation: [one actionable step]

Risk: [one potential downside]

Dataset: [paste your data]"

Anthropic's docs are obsessed with this. Structure first, content second.

LLMs perform way better when they know the exact format.

Copy-paste this:

"Analyze this dataset and give me insights in this exact format:

Key Finding: [one sentence]

Supporting Data: [2-3 bullet points]

Recommendation: [one actionable step]

Risk: [one potential downside]

Dataset: [paste your data]"

8/ Temperature Control Through Language

You can actually influence the AI's creativity without changing settings.

Words like "creative," "conservative," or "unexpected" shift behavior.

Copy-paste this:

"Generate 5 unexpected marketing angles for a B2B accounting software. Avoid obvious benefits like 'saves time' or 'reduces errors.' Think laterally about emotional or social angles."

You can actually influence the AI's creativity without changing settings.

Words like "creative," "conservative," or "unexpected" shift behavior.

Copy-paste this:

"Generate 5 unexpected marketing angles for a B2B accounting software. Avoid obvious benefits like 'saves time' or 'reduces errors.' Think laterally about emotional or social angles."

9/ Cognitive Forcing Functions

Force the AI to consider alternatives before committing to an answer.

This breaks the "first thought = final answer" problem.

Copy-paste this:

"Before answering this question, generate 3 completely different interpretations of what I might be asking. Then tell me which interpretation you're answering and why.

Question: How do I scale my business?"

Force the AI to consider alternatives before committing to an answer.

This breaks the "first thought = final answer" problem.

Copy-paste this:

"Before answering this question, generate 3 completely different interpretations of what I might be asking. Then tell me which interpretation you're answering and why.

Question: How do I scale my business?"

10/ Meta-Prompting

The most advanced technique: ask the AI to improve its own instructions.

Let it engineer the perfect prompt for your task.

Copy-paste this:

"I want to generate cold email templates for enterprise sales. Before you write any templates, tell me:

What information you'd need to make them highly personalized

What format would work best

What examples would help you understand the tone I want

Then ask me for that information."

The most advanced technique: ask the AI to improve its own instructions.

Let it engineer the perfect prompt for your task.

Copy-paste this:

"I want to generate cold email templates for enterprise sales. Before you write any templates, tell me:

What information you'd need to make them highly personalized

What format would work best

What examples would help you understand the tone I want

Then ask me for that information."

Most people prompt like they're talking to a human.

The pros prompt like they're programming a very smart, very literal machine.

Huge difference in results.

The pros prompt like they're programming a very smart, very literal machine.

Huge difference in results.

Your premium AI bundle to 10x your business

→ Prompts for marketing & business

→ Unlimited custom prompts

→ n8n automations

→ Pay once, own forever

Grab it today 👇

godofprompt.ai/complete-ai-bu…

→ Prompts for marketing & business

→ Unlimited custom prompts

→ n8n automations

→ Pay once, own forever

Grab it today 👇

godofprompt.ai/complete-ai-bu…

• • •

Missing some Tweet in this thread? You can try to

force a refresh