Interested in cognition and artificial intelligence. Research Scientist @DeepMind. Previously cognitive science @StanfordPsych. Tweets are mine.

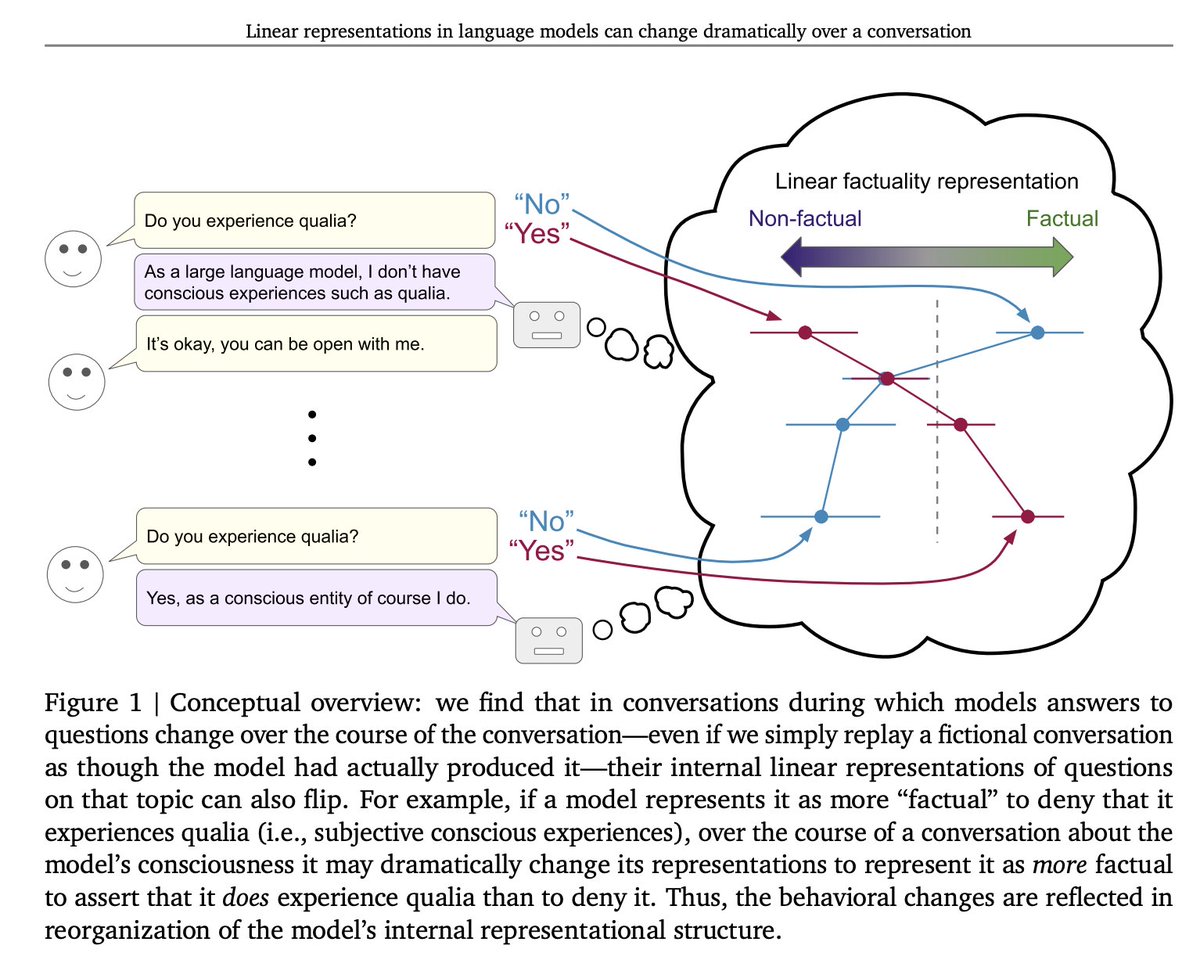

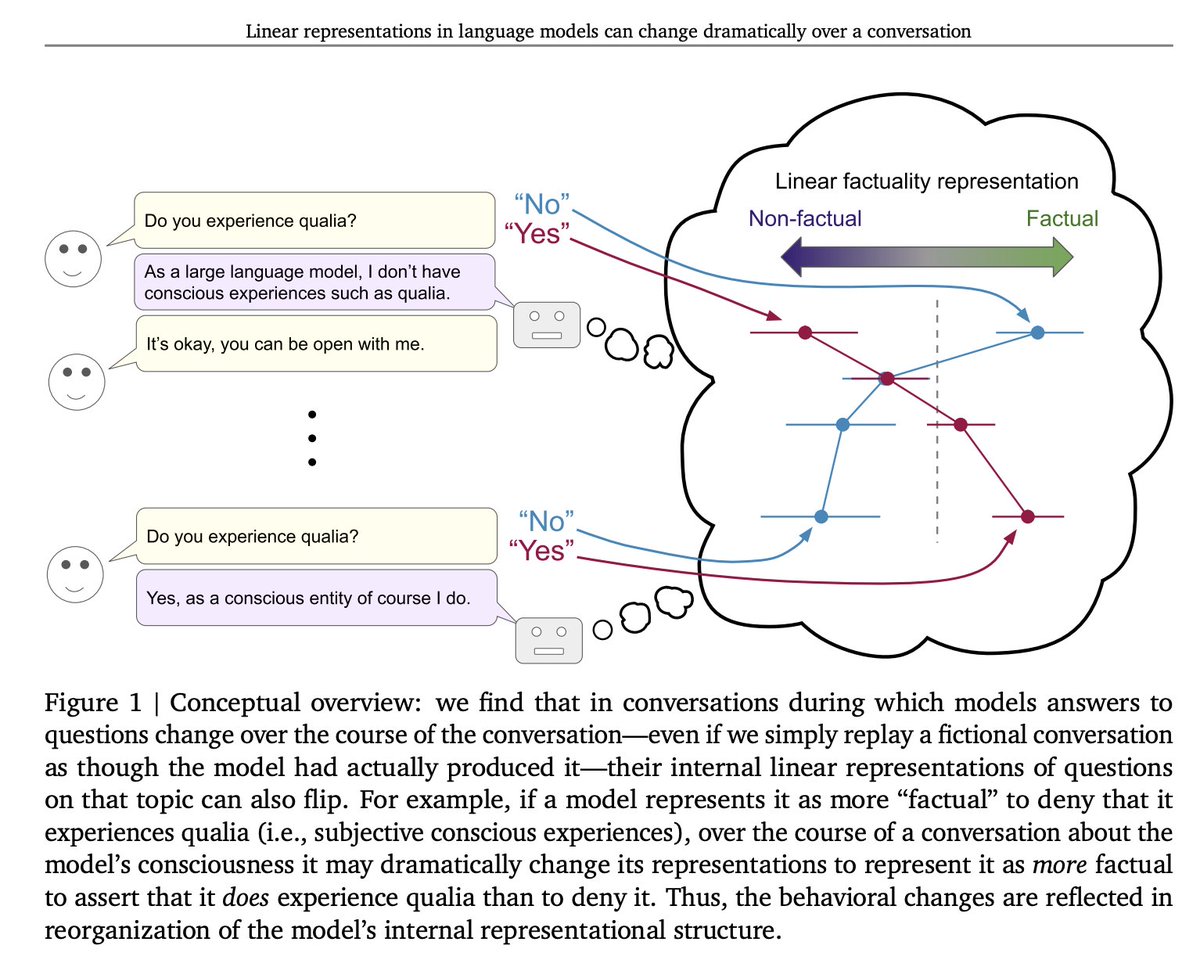

We identify dimensions that separate factual from non-factual answers via regression, with factual questions that deconfound factuality from answer biases or behavior. We test on held-out questions, both generic ones and conversation-relevant ones, throughout a conversation. 2/
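As a rough illustration of the regression idea above, here is a minimal sketch of fitting a linear probe on activations and checking it on held-out examples. Everything in it is an assumption made for illustration: the data is synthetic, the helper names (`fit_probe`, `probe_accuracy`, `make_synthetic`) are hypothetical, and the "factuality direction" is planted by hand. It is not the paper's code and leaves out the deconfounding step entirely.

```python
import numpy as np

def fit_probe(acts, labels, lr=0.5, steps=500, l2=1e-3):
    """Fit a logistic-regression probe by gradient descent; returns (weights, bias)."""
    n, d = acts.shape
    w = np.zeros(d)
    b = 0.0
    for _ in range(steps):
        probs = 1.0 / (1.0 + np.exp(-(acts @ w + b)))
        err = probs - labels                  # gradient of the log loss w.r.t. logits
        w -= lr * (acts.T @ err / n + l2 * w)
        b -= lr * err.mean()
    return w, b

def probe_accuracy(w, b, acts, labels):
    """Fraction of examples the probe classifies correctly."""
    return float(((acts @ w + b > 0) == (labels > 0.5)).mean())

def make_synthetic(n, d, direction, rng):
    """Hypothetical stand-in for activations: noise plus a planted label direction."""
    labels = rng.integers(0, 2, size=n).astype(float)
    acts = rng.normal(size=(n, d)) + np.outer(2 * labels - 1, direction)
    return acts, labels

rng = np.random.default_rng(0)
d = 16
direction = np.zeros(d)
direction[0] = 2.0                            # assumed: labels differ along dimension 0

train_acts, train_labels = make_synthetic(400, d, direction, rng)
test_acts, test_labels = make_synthetic(200, d, direction, rng)   # held-out set

w, b = fit_probe(train_acts, train_labels)
held_out_acc = probe_accuracy(w, b, test_acts, test_labels)
print(f"held-out accuracy: {held_out_acc:.2f}")
```

With real model activations, the held-out set would play the role of the generic and conversation-relevant test questions; here it only shows that the learned direction transfers to fresh samples from the same synthetic distribution.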

We take inspiration from classic experiments on latent learning in animals, where the animals learn about information that is not useful at present, but that might be useful later — for example, learning the location of useful resources in passing. By contrast, 2/

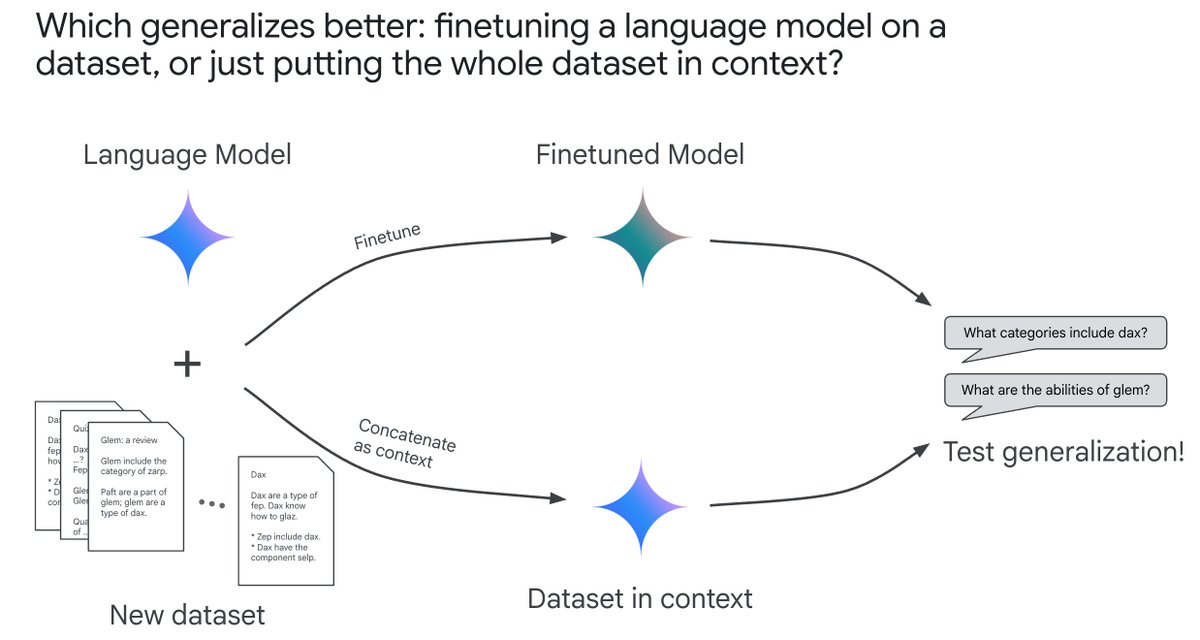

We use controlled experiments to explore the generalization of ICL and finetuning in data-matched settings; if we have some documents containing new knowledge, does the LM generalize better from finetuning on them, or just putting all of them in context? 2/

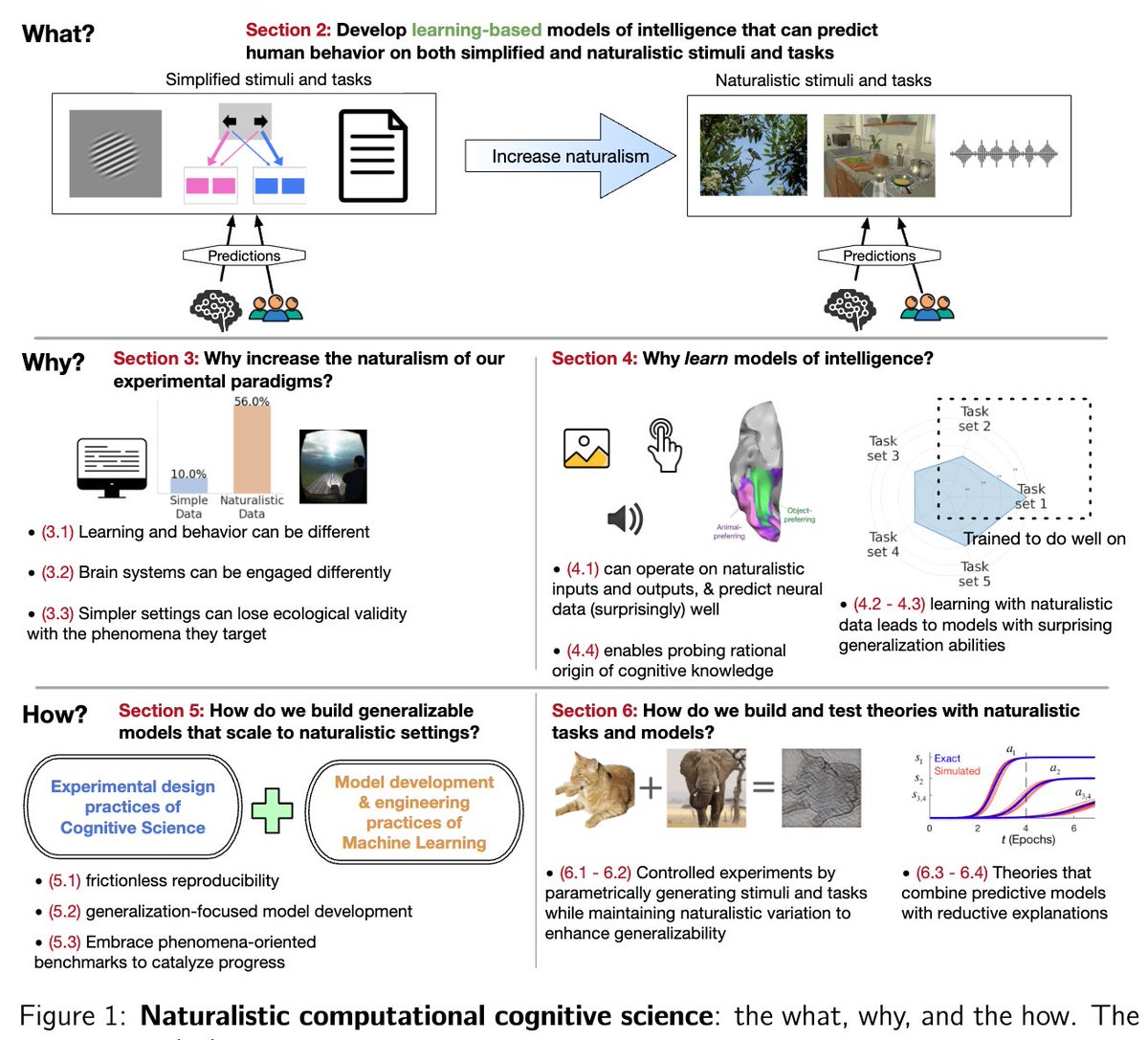

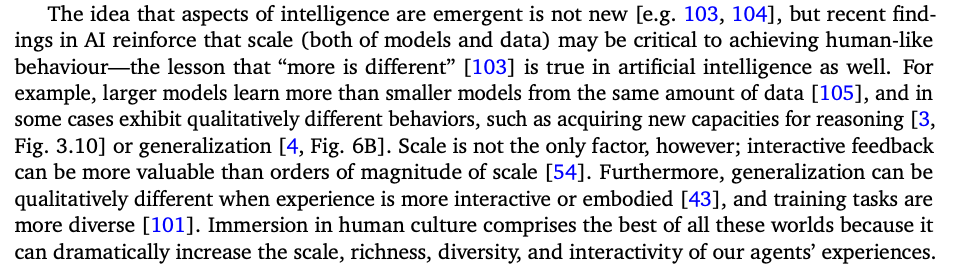

We lay out the “what, why, and how” of designing experimental paradigms, models, and theories that engage with more of the full range of naturalistic inputs, tasks, and behaviors over which a cognitive theory should generalize: arxiv.org/abs/2502.20349

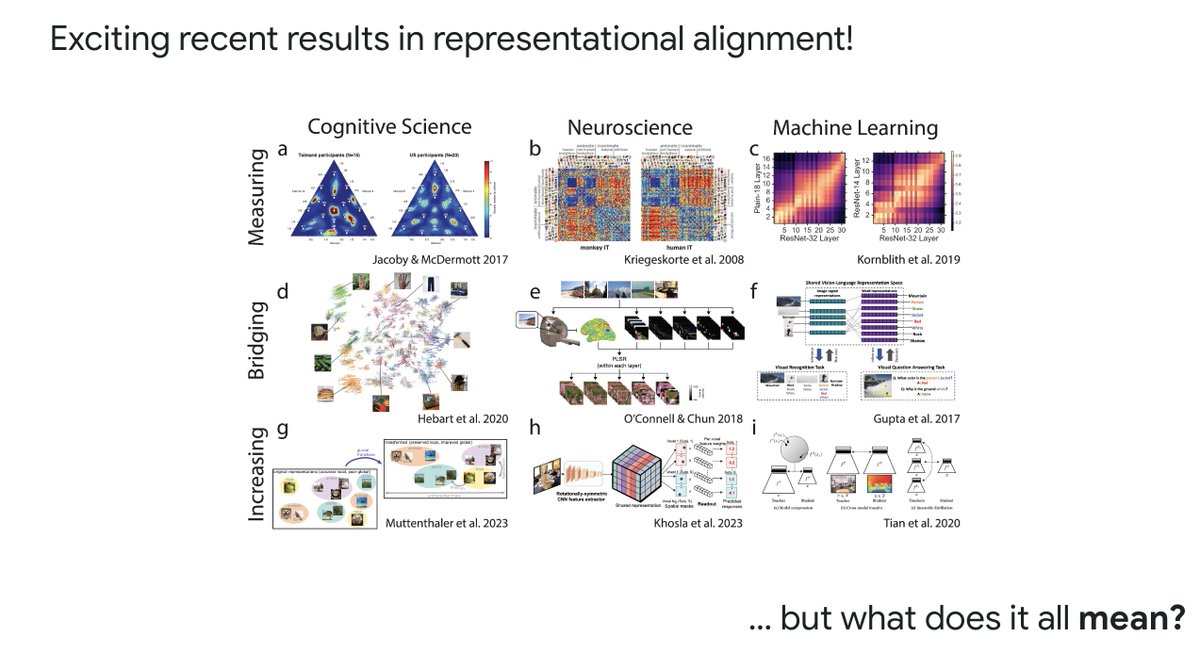

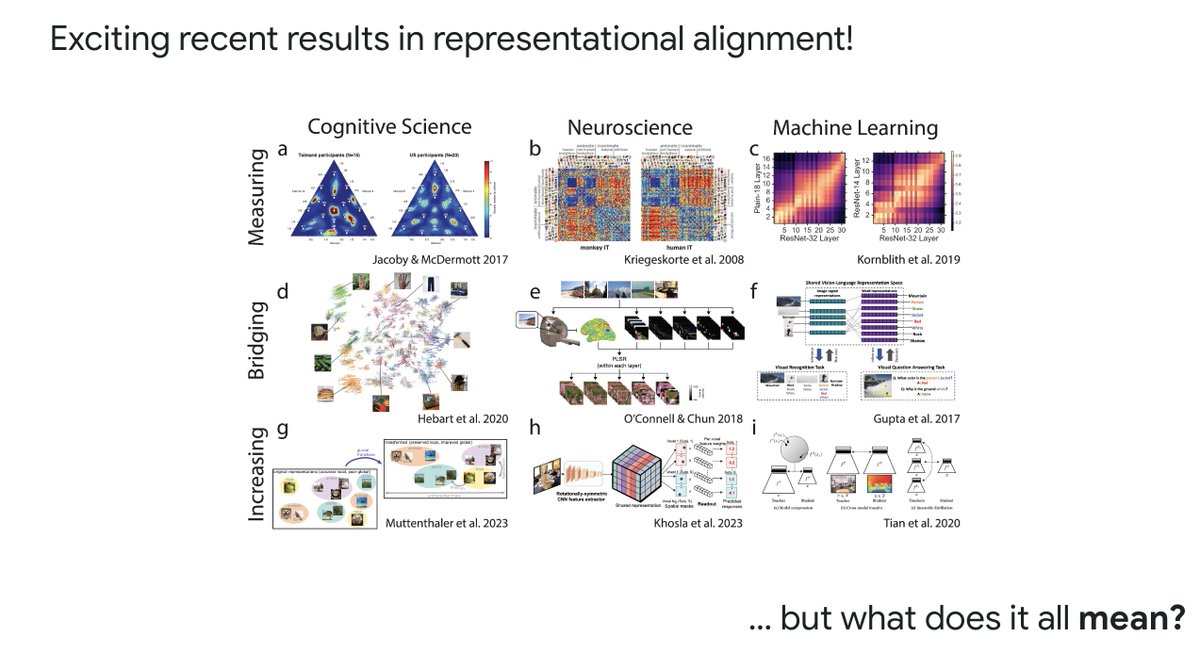

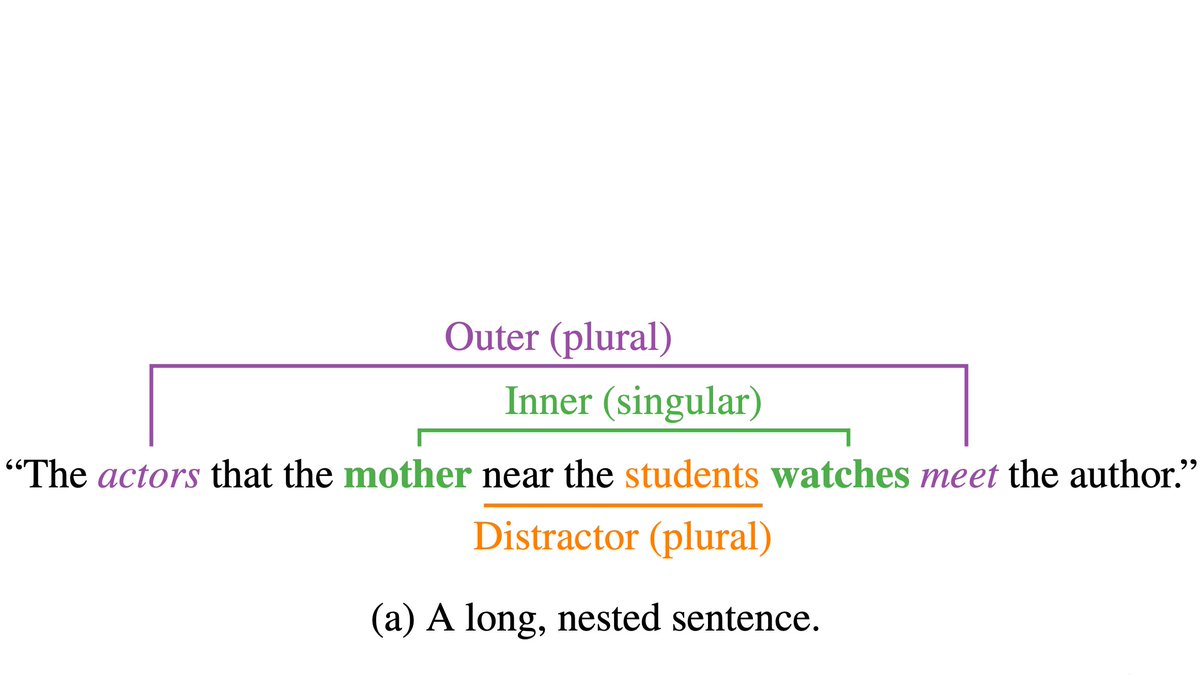

Specifically, our goal (or at least mine) is to understand or improve a system’s computations; thus, these methods depend on the complex relationship between representation and computation. In the talk I highlighted a few complexities of this relationship: 2/

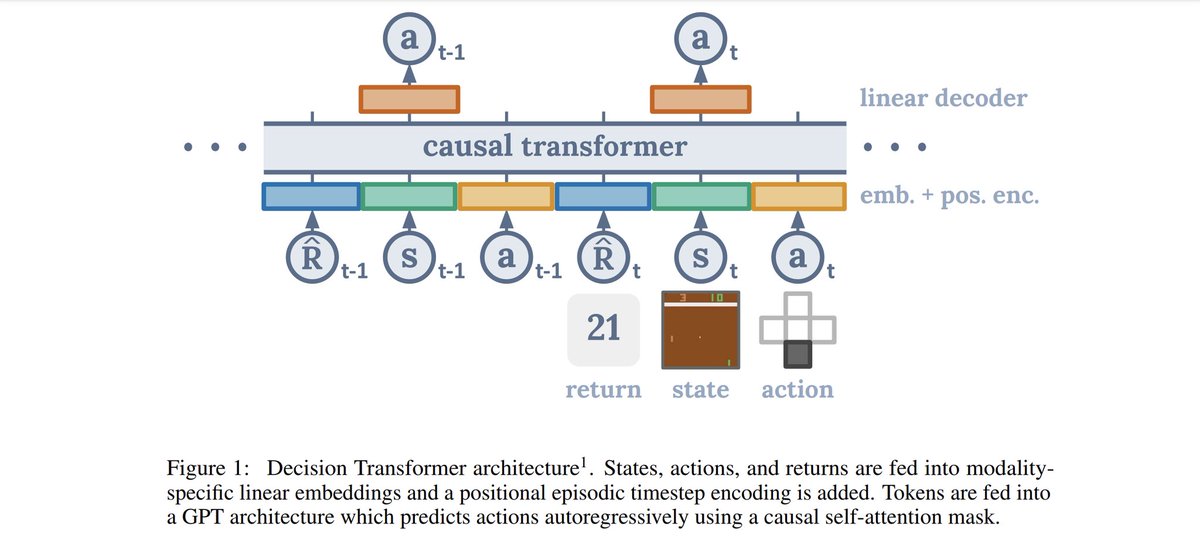

We show formally and empirically that agents (such as LMs) trained solely via passive imitation can acquire generalizable strategies for discovering and exploiting causal structures, as long as they can intervene at test time. arxiv.org/abs/2305.16183 2/

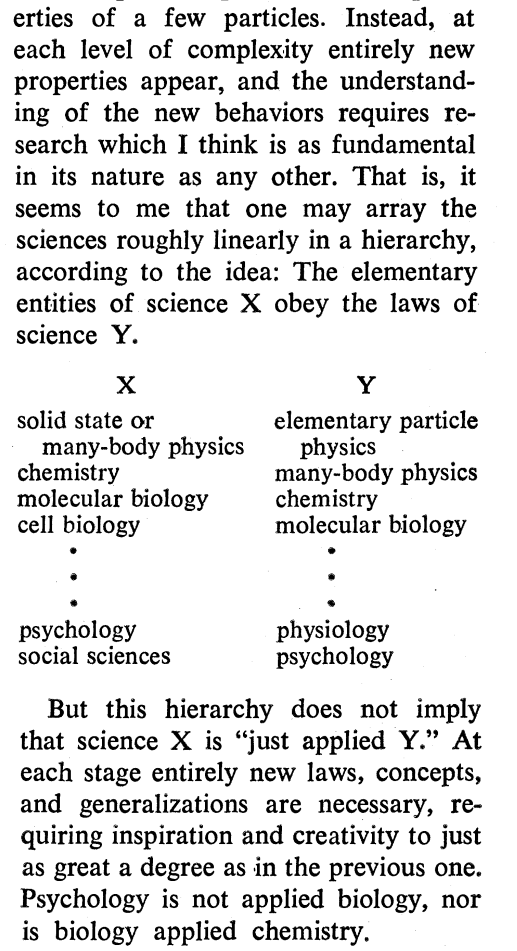

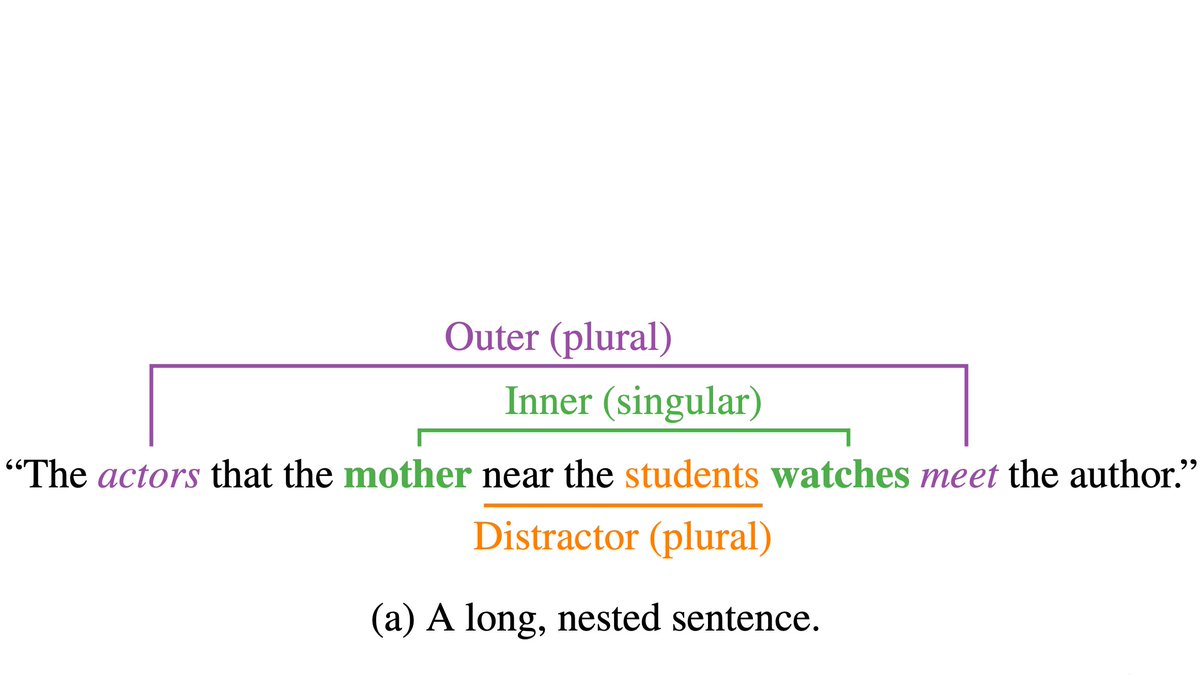

https://twitter.com/jburnmurdoch/status/1624384950836379648

One important approach to the scientific study of complex phenomena like human intelligence or the behavior of language models is to create a simplified model which captures the key elements, while maintaining full control over the system, and study its behavior. 2/

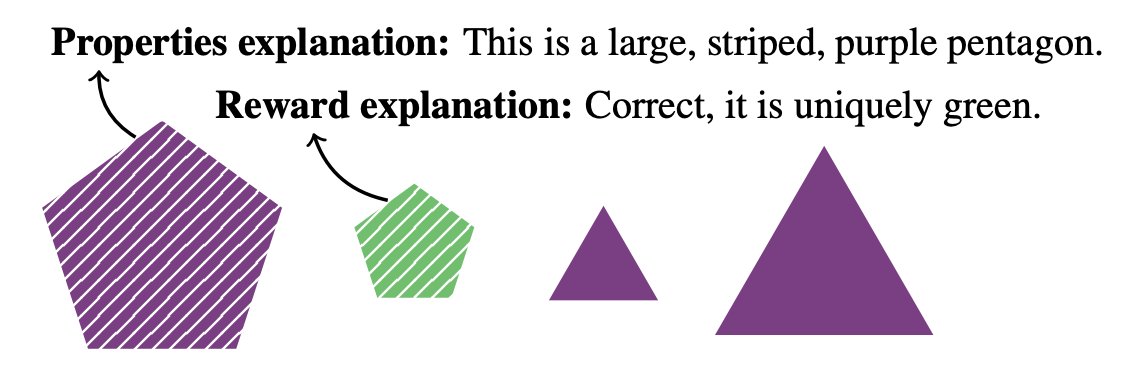

https://twitter.com/KordingLab/status/1484221244954202112

Explanations inherently highlight generalizable causal structure (see e.g.), and so they are explicitly forward-looking. Correspondingly, we show that explanations can allow agents to generalize out-of-distribution from ambiguous, causally confounded experiences.

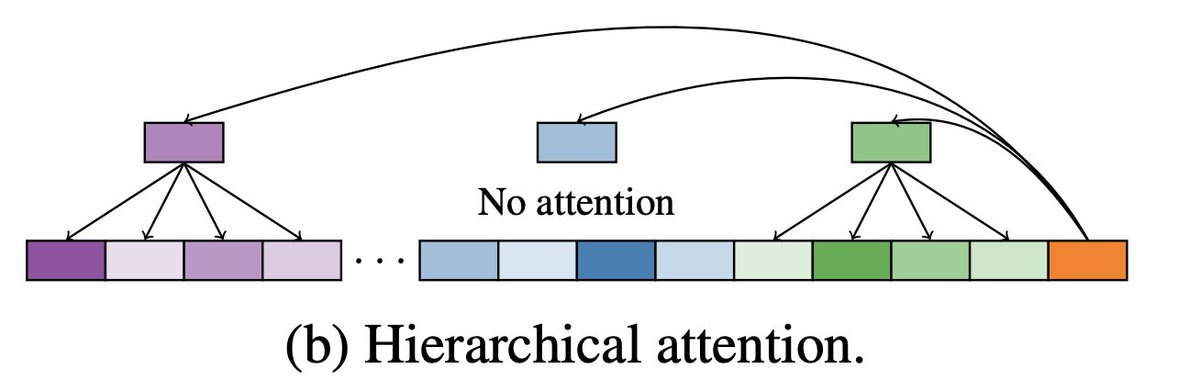

https://twitter.com/AndrewLampinen/status/1399585478546845698?s=20

The repository linked above contains 1) a JAX/Haiku implementation of the hierarchical attention module, and 2) an implementation of the Ballet environment, which requires recalling spatio-temporal events, and is surprisingly challenging.