Access powerful AI models to transcribe and understand speech via a simple API.

Try our no-code playground for free 👉 https://t.co/YPCK9mq5Qy

1. The Illustrated Transformer guide:

A key challenge with applying LLMs to audio files today is that LLMs are limited by their context windows.

Let’s start with the motivation.

2/9 Conformer architecture

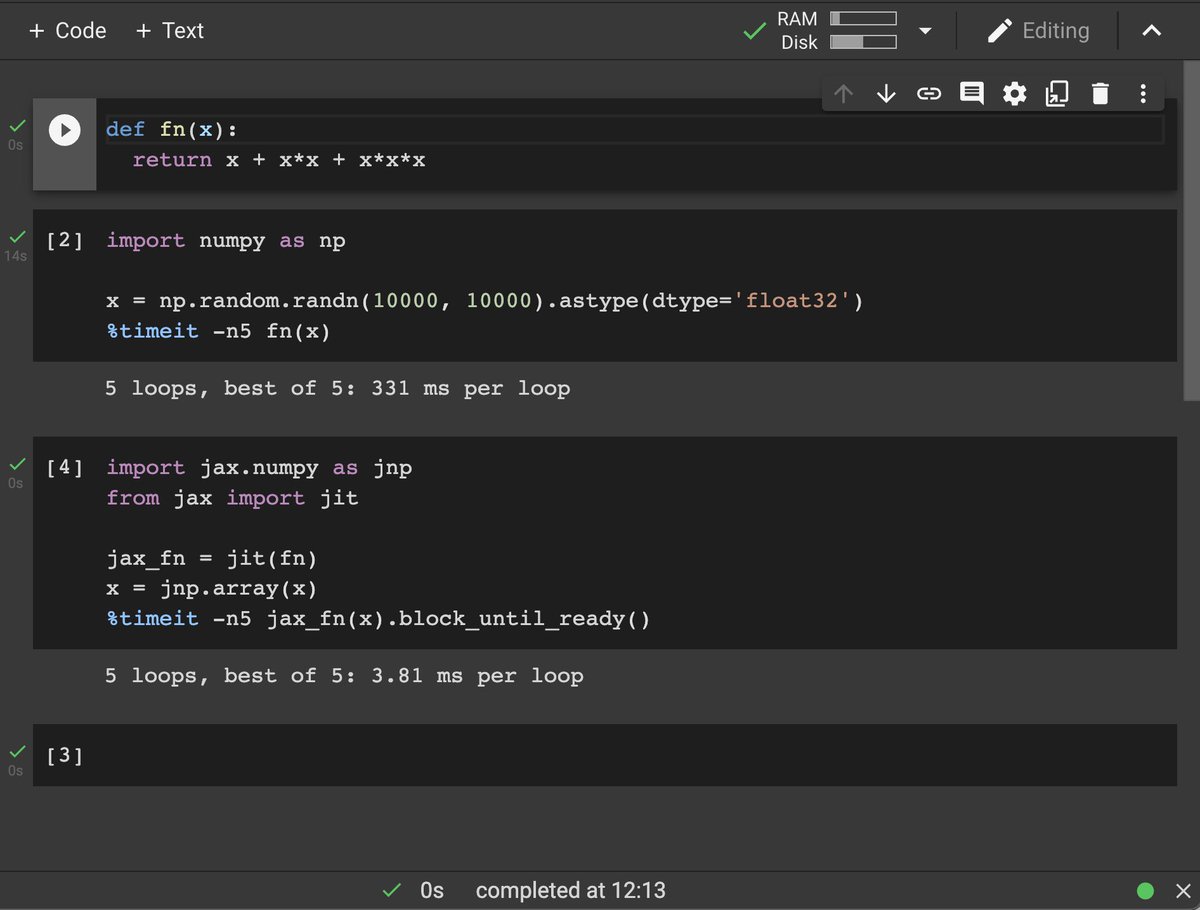

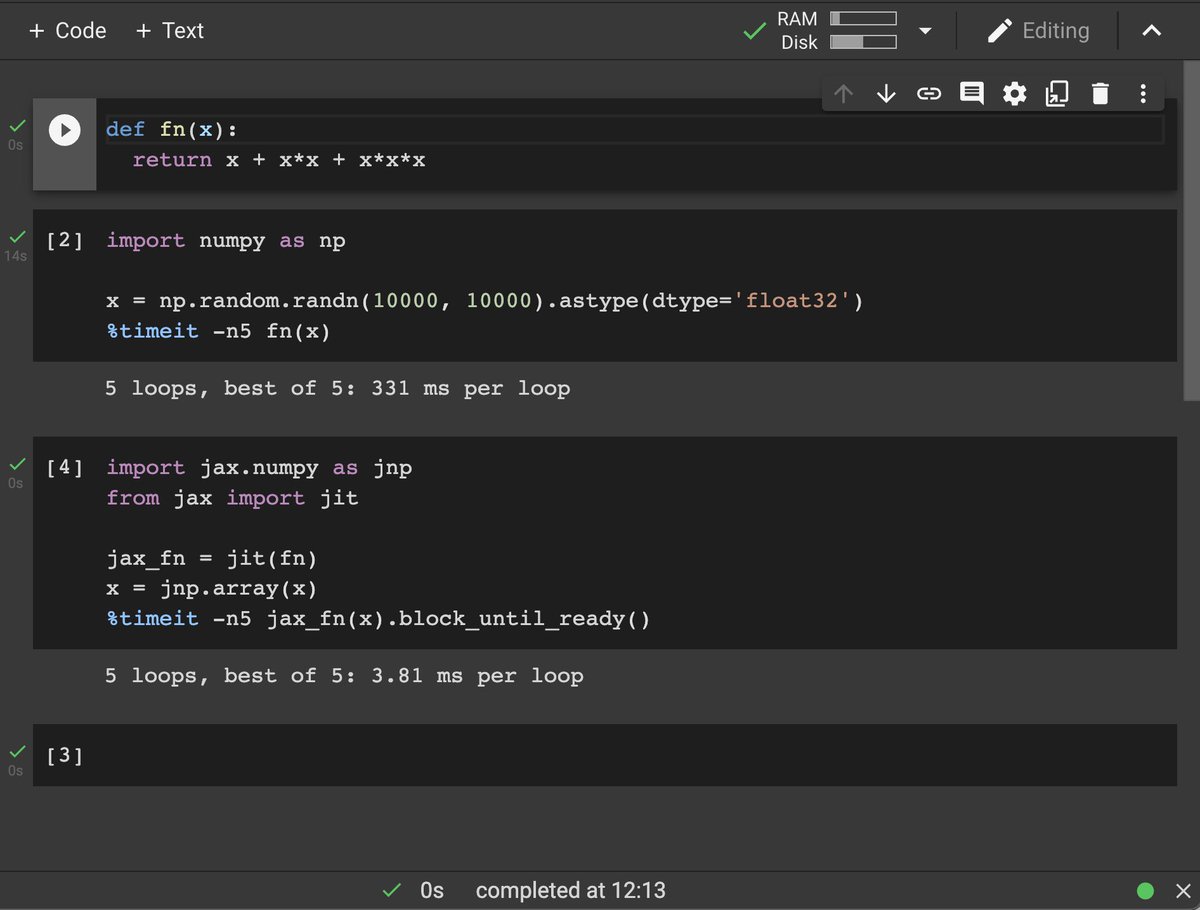

2/8 What is JAX?

2/8 Prerequisites:

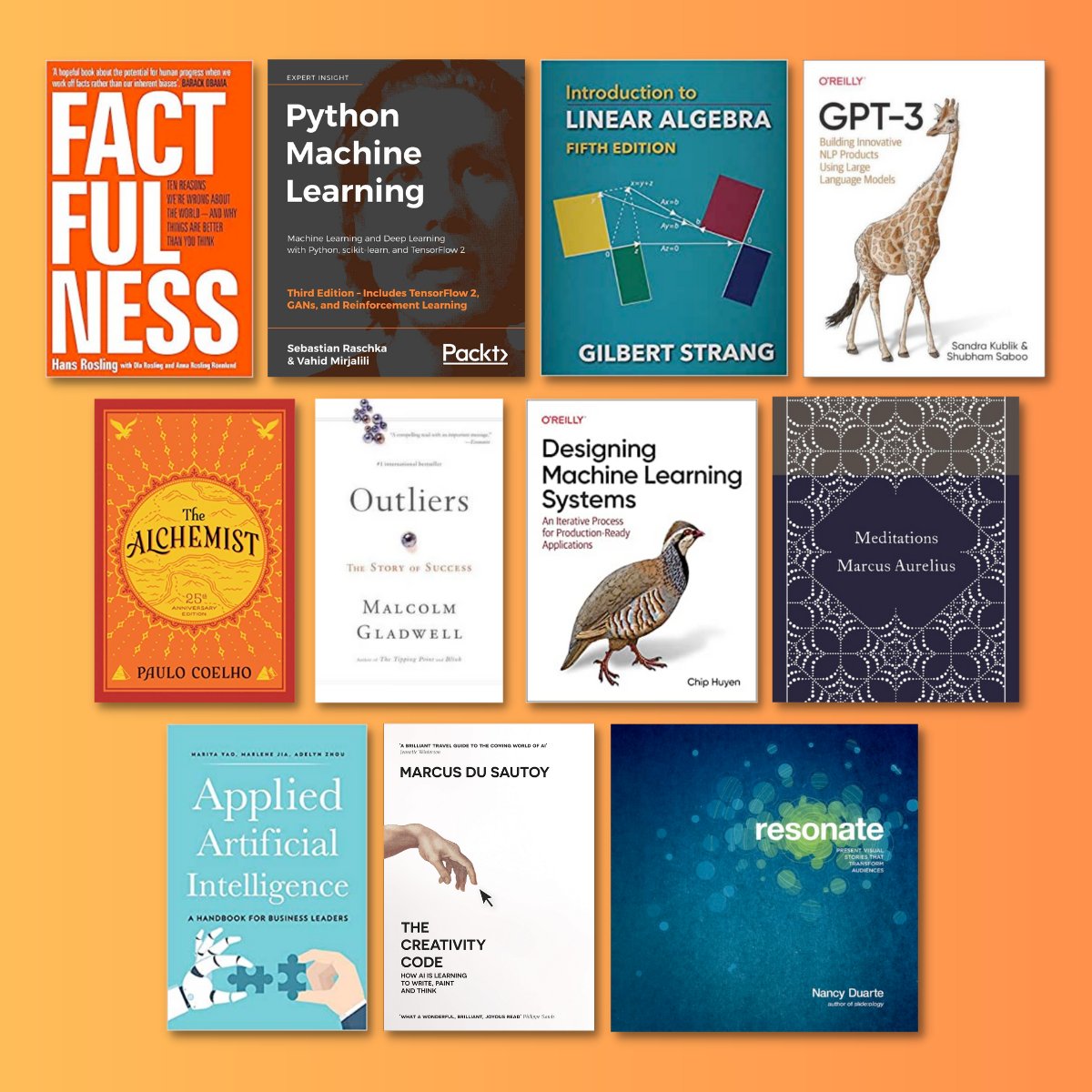

2/4 AI-related books:

https://twitter.com/AssemblyAI/status/1590009488408735744

11. Generative Models

1. As the forecasting algorithm we use Prophet:

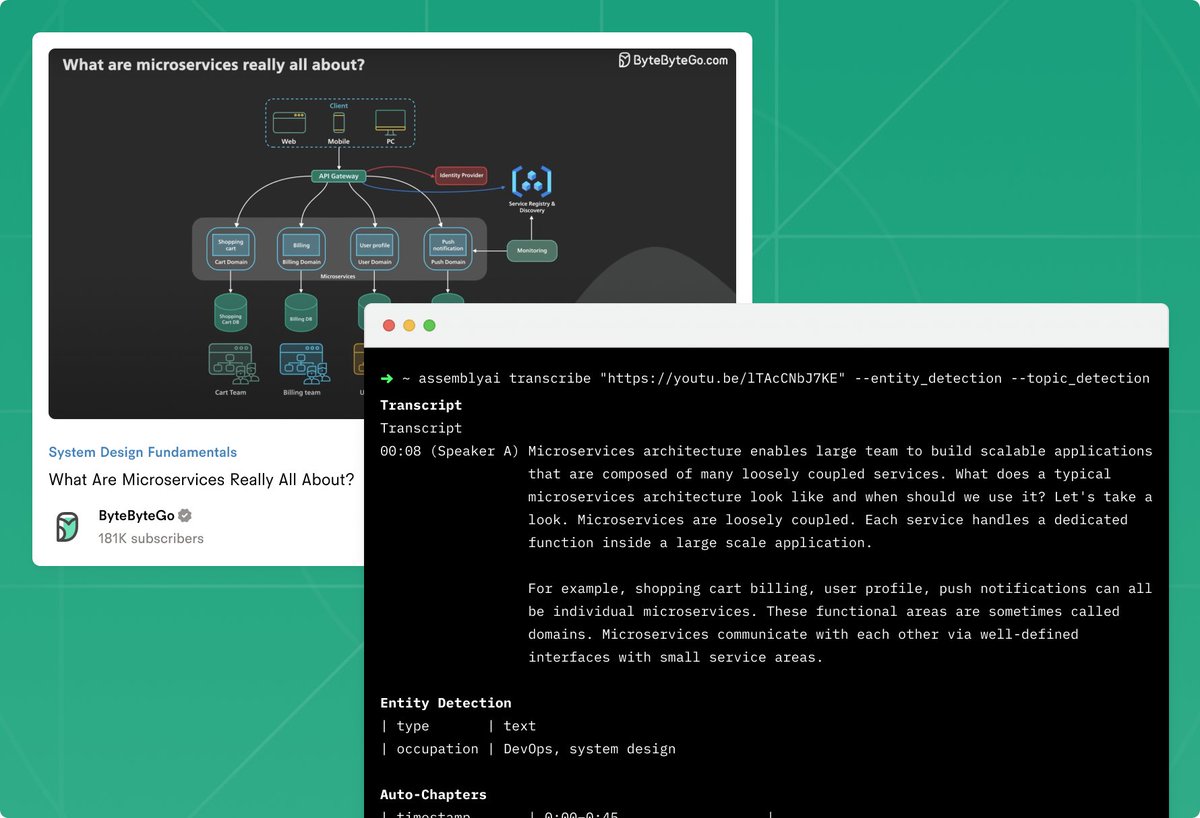

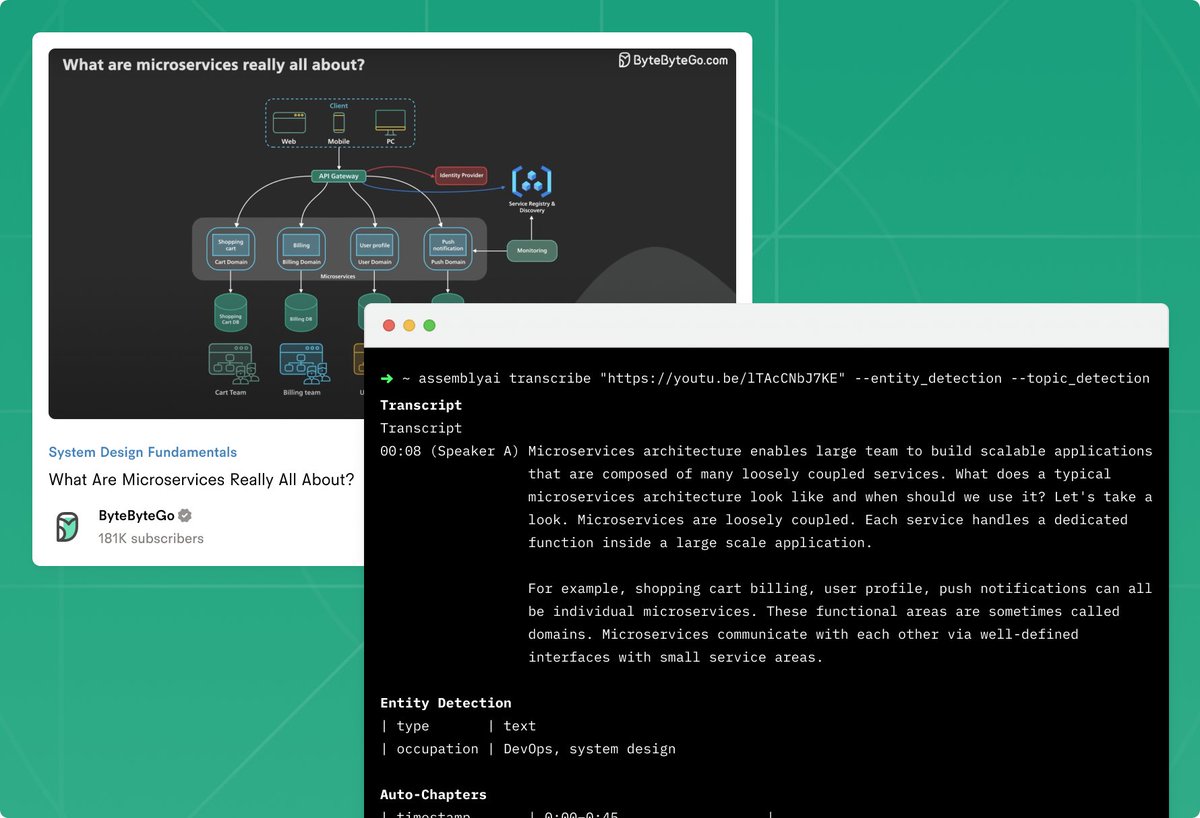

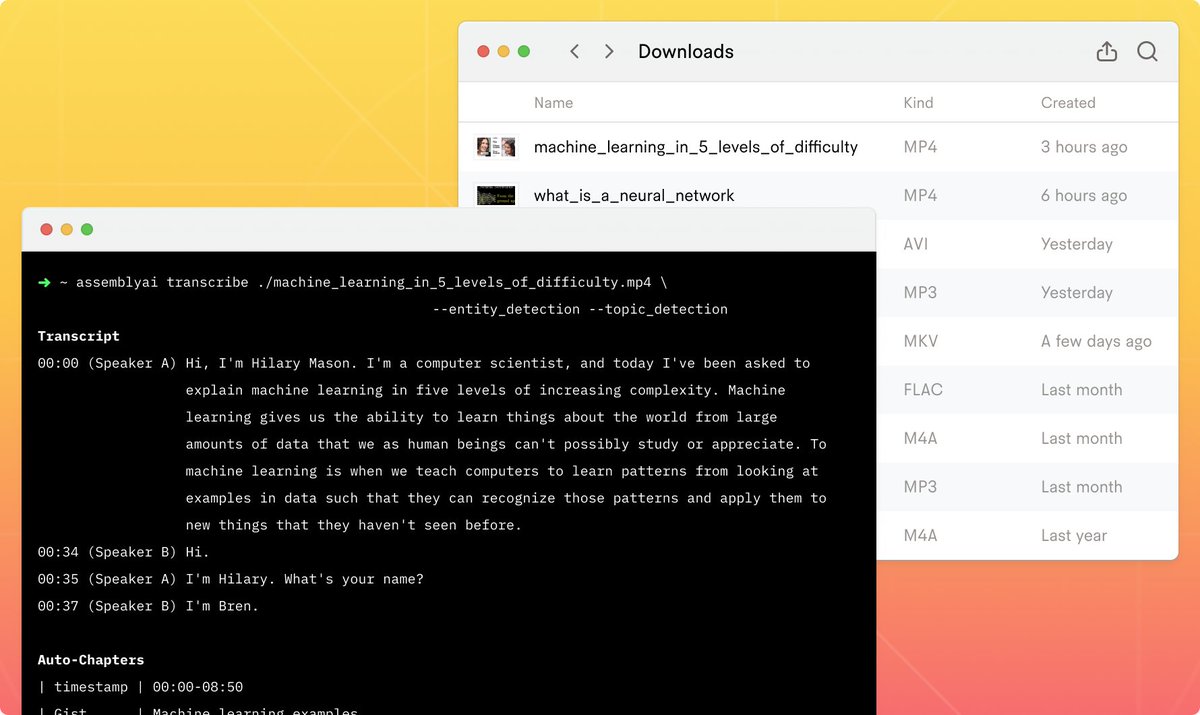

1. To transcribe a file or URL you only need one command:

https://twitter.com/AssemblyAI/status/1547952873161379841