We work to strengthen democracy by conducting rigorous research, advancing evidence-based public policy, and training the next generation of scholars.

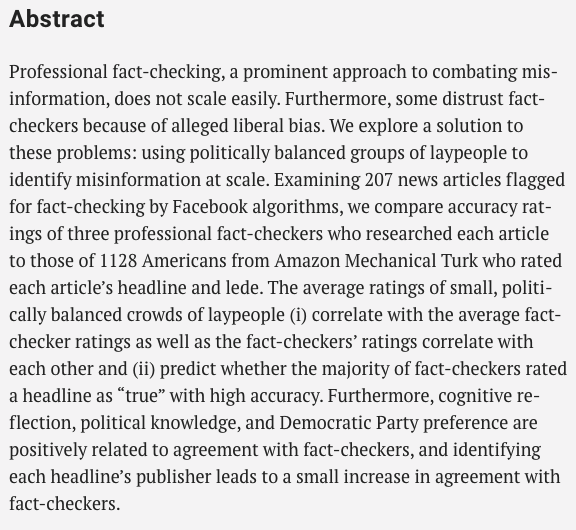

Ordinary users -- and machine learning models based on information from those users -- cannot effectively identify false and misleading news in real time, compared to professional fact checkers, according to our experiment. 2/