Tech entrepreneur | machine intelligence https://t.co/zzD5ZNb0OW

https://t.co/h0mJxdVxQq


https://twitter.com/NielsRogge/status/2010444467950555142

For example, given a data set D = [{x1,y1},...,{xn,yn}].

https://twitter.com/rao2z/status/1972036884831576529

I mean there can be a few things that emerge, very few things.

https://twitter.com/keyonV/status/1943730486280331460

See:

https://x.com/ChombaBupe/status/1759226186075390033?s=19

https://twitter.com/ashleevance/status/1906052687084531897

There is a simple explanation.

https://twitter.com/DaveShapi/status/1886375604531974479

The baseline should be a pure lookup function over the LLM's actual training set: compare its performance to the LLM's, then see by how much the LLM beats a search over the training set.
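A rough sketch of what such a lookup baseline could look like. Everything here is an assumption for illustration: the toy data, the token-overlap (Jaccard) similarity, and the names `jaccard`, `lookup_baseline` and `accuracy` are hypothetical, not a reference to any existing benchmark.

```python
def jaccard(a, b):
    """Token-set overlap between two strings, in [0, 1]."""
    sa, sb = set(a.lower().split()), set(b.lower().split())
    if not sa and not sb:
        return 1.0
    return len(sa & sb) / len(sa | sb)


def lookup_baseline(query, training_set):
    """Return the response paired with the most similar training prompt."""
    _, best_response = max(training_set, key=lambda pair: jaccard(query, pair[0]))
    return best_response


def accuracy(predict, eval_set):
    """Fraction of eval prompts that predict() answers exactly right."""
    hits = sum(predict(q) == a for q, a in eval_set)
    return hits / len(eval_set)


# Toy (prompt, response) pairs, invented purely for illustration.
train = [
    ("capital of france", "paris"),
    ("capital of kenya", "nairobi"),
    ("two plus two", "four"),
]
evals = [
    ("what is the capital of kenya", "nairobi"),  # answerable by lookup
    ("two plus three", "five"),                   # needs more than lookup
]

baseline_acc = accuracy(lambda q: lookup_baseline(q, train), evals)
# The LLM's score on the same evals, minus baseline_acc, is the margin
# by which it beats a plain search over the training set.
```

The same `accuracy` function would be run with the model's own predictions, so that both numbers come from identical prompts.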

https://twitter.com/VictorTaelin/status/1881858899306745925

For example, in a forward pass through P(), usually a decoder-only transformer model, the output is R, an array of numbers the size of the token vocabulary.
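To make R concrete, here is a small sketch that assumes R holds one raw score per vocabulary token and that it is turned into a next-token distribution with a softmax, which is the usual setup. The four-word vocabulary and the numbers in `R` are made up, and no real model is called.

```python
import math


def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


vocab = ["the", "cat", "sat", "mat"]  # toy vocabulary (assumption)
R = [2.0, 0.5, -1.0, 0.1]             # stand-in for the output of P()

probs = softmax(R)                    # one probability per vocabulary token
next_token = vocab[probs.index(max(probs))]  # greedy pick of the top token
```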

https://twitter.com/denny_zhou/status/1835761801453306089

The problem of learnability, the ability to learn, is the major bottleneck in machine intelligence.

https://twitter.com/ChombaBupe/status/1857125974015439183

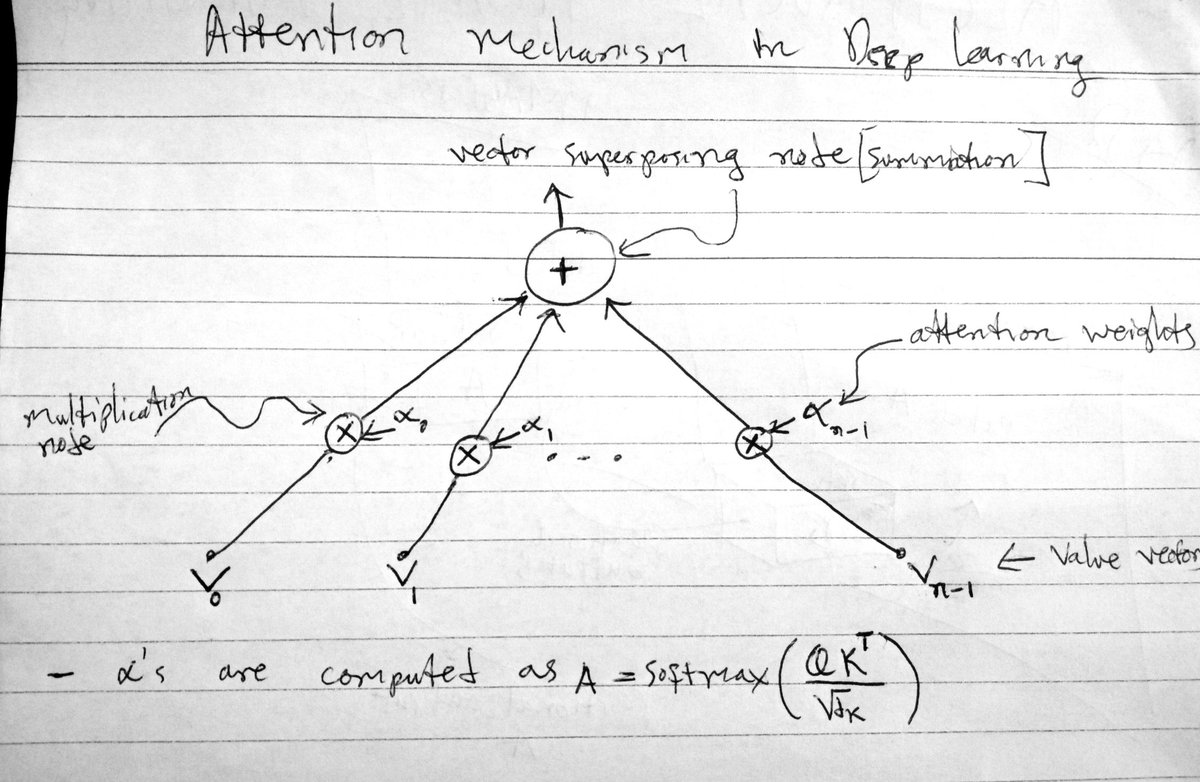

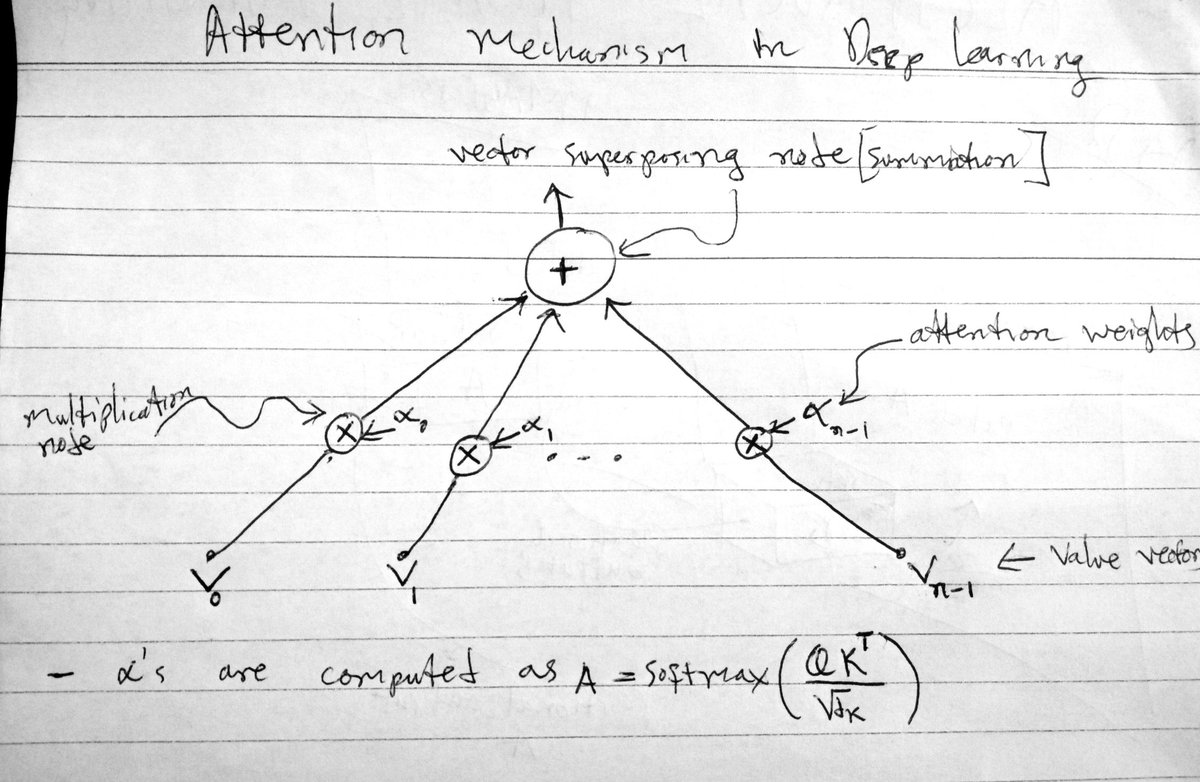

Essentially, they start to interfere with the representation, i.e. you have small weights, not zero, for things the model is not "interested" in. If the context size is large, even these small weights will eventually affect the output.
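A toy calculation of this effect, assuming softmax attention with one relevant token at a high score and every other token at the same low score. The scores 5.0 and 0.0 and the name `relevant_mass` are illustrative assumptions.

```python
import math


def relevant_mass(n_tokens, hi=5.0, lo=0.0):
    """Softmax weight on one relevant token among n_tokens - 1 irrelevant ones."""
    relevant = math.exp(hi)
    irrelevant = (n_tokens - 1) * math.exp(lo)  # each is small, but they add up
    return relevant / (relevant + irrelevant)


for n in (10, 1_000, 100_000):
    print(n, round(relevant_mass(n), 4))
```

Each irrelevant token gets a tiny weight on its own, yet as the context grows their combined share of the softmax overtakes the relevant token's share.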


https://twitter.com/BlueBir75555922/status/1857156409323860250

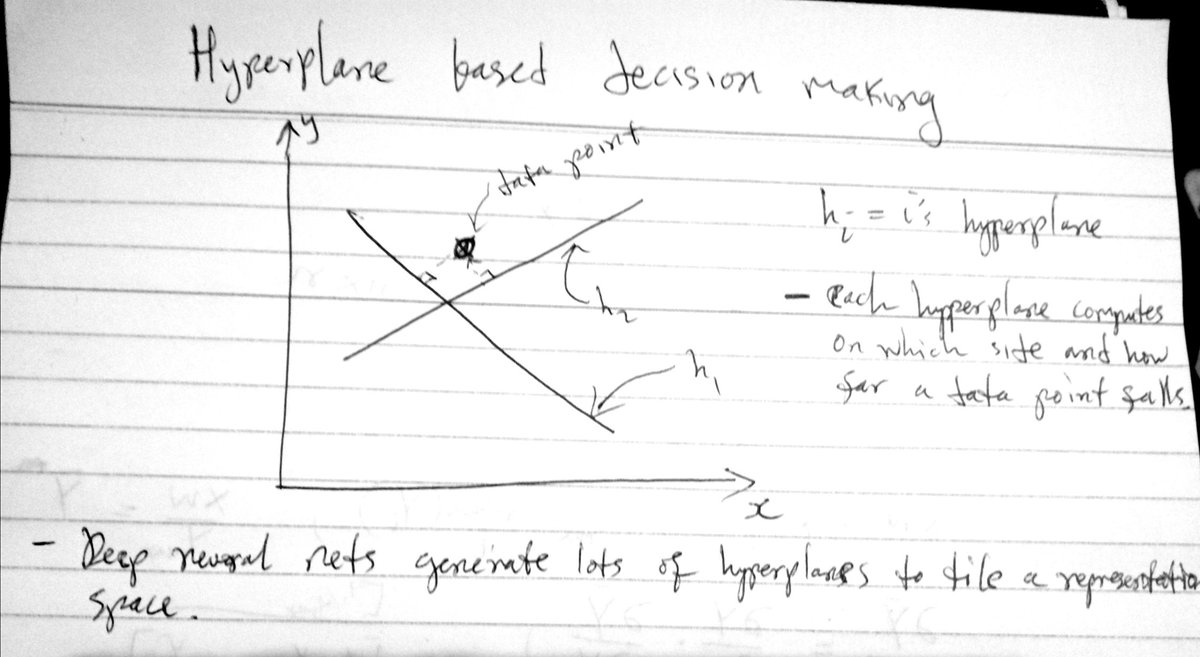

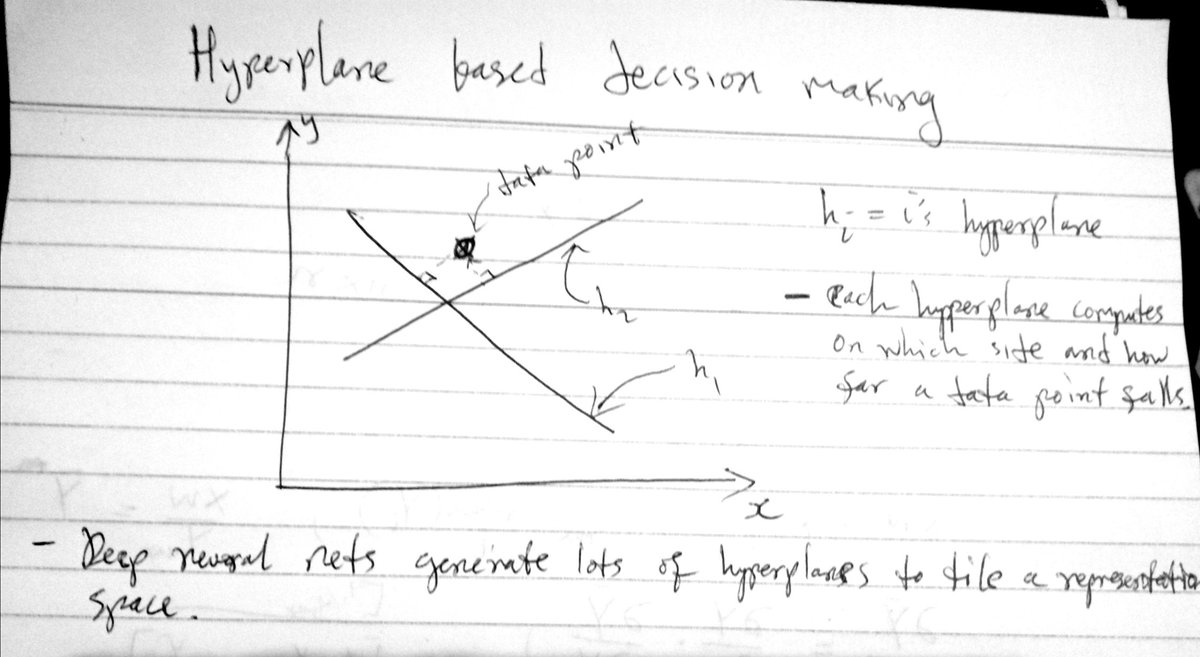

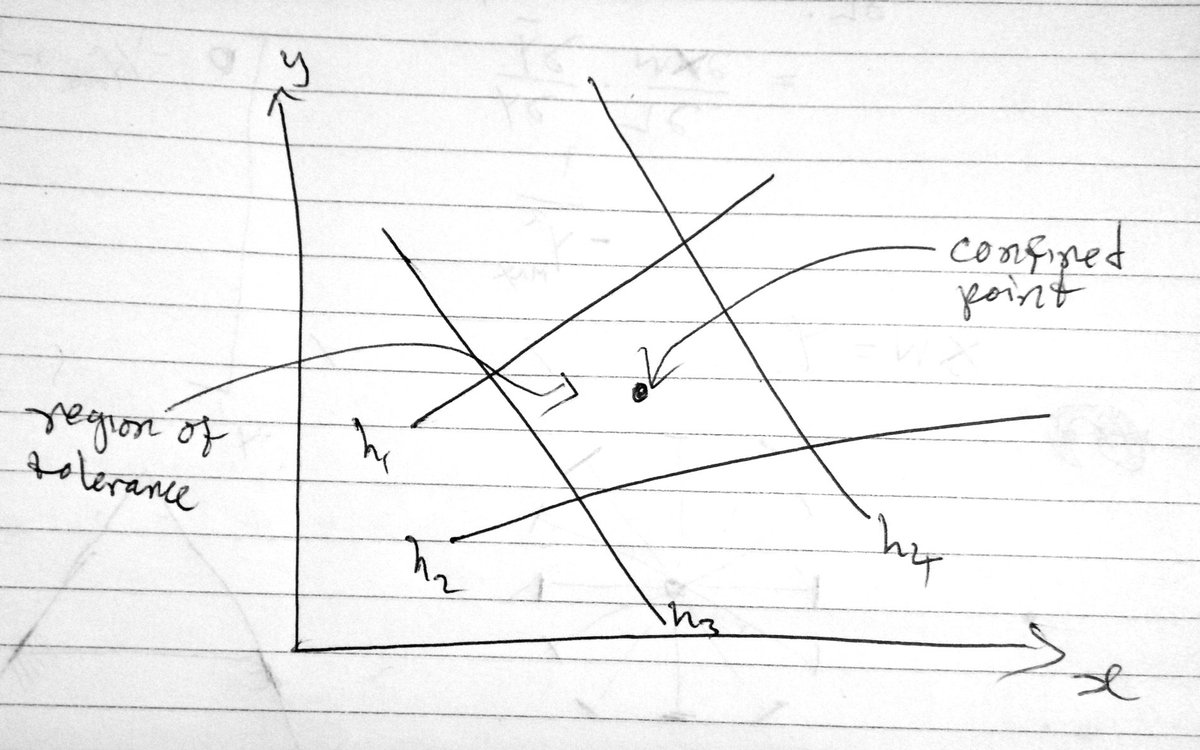

To confine a data point in N-dimensional space you need at least N hyperplanes. One hyperplane can confine a point along its normal line.
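One way to read this claim, assuming "confine" means pinning the point down with hyperplane equalities whose normals are linearly independent (the symbols below are introduced here for illustration):

```latex
% A hyperplane in R^N with normal w_i and offset b_i:
H_i = \{\, x \in \mathbb{R}^N : w_i^\top x = b_i \,\}

% Each H_i fixes only the component of x along w_i.
% With k linearly independent normals, the intersection is an
% affine subspace of dimension N - k:
\dim\Big( \bigcap_{i=1}^{k} H_i \Big) = N - k

% The intersection is a single point only when N - k = 0, i.e. k = N,
% in which case W x = b with W = [w_1, \dots, w_N]^\top invertible:
x = W^{-1} b
```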


https://twitter.com/hardmaru/status/1856912013210832918

That means that models lose representation power, i.e. they can't easily tell the difference between large text inputs because the superposition vectors all approach the mean of the distribution: when you average too many vectors, the difference between the sums will be very small.
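A small simulation of the averaging effect, under the assumption that token vectors are independent draws from one Gaussian distribution (real embeddings are not, so treat this as a sketch). The names `mean_vector`, `random_text` and `gap_between_texts` are hypothetical.

```python
import math
import random


def mean_vector(vectors):
    """Component-wise average of a list of equal-length vectors."""
    n = len(vectors)
    return [sum(col) / n for col in zip(*vectors)]


def random_text(n_tokens, dim, rng):
    """A stand-in 'text': n_tokens random token vectors of size dim."""
    return [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(n_tokens)]


def gap_between_texts(n_tokens, dim=16, seed=0):
    """Distance between the averaged vectors of two different random texts."""
    rng = random.Random(seed)
    a = mean_vector(random_text(n_tokens, dim, rng))
    b = mean_vector(random_text(n_tokens, dim, rng))
    return math.dist(a, b)


short_gap = gap_between_texts(10)     # two short texts
long_gap = gap_between_texts(5_000)   # two long texts
```

Under these assumptions the gap shrinks roughly like 1/sqrt(n_tokens), so two long inputs end up with nearly the same averaged vector even though they share no tokens.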


https://twitter.com/seanonolennon/status/1787712232782274628

In deep learning the function f() is a composition of many smaller functions g1(),g2(),...,gL(), arranged in layers such that each function feeds from the ones before it & feeds into the ones after it.
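A minimal sketch of that composition, assuming a plain sequential chain in which each layer only sees the output of the one before it. The three toy layers and the helper `compose` are made up for illustration.

```python
from functools import reduce


def compose(*layers):
    """Build f(x) = gL(...g2(g1(x))...) from layers given in order."""
    def f(x):
        return reduce(lambda acc, g: g(acc), layers, x)
    return f


def g1(x):
    return 2 * x + 1      # affine layer


def g2(x):
    return max(0.0, x)    # ReLU-style nonlinearity


def g3(x):
    return x - 3          # another affine layer


f = compose(g1, g2, g3)
```

For instance, `f(1.0)` passes through 3.0, then 3.0, then 0.0.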

The universal approximation theorem is easy to prove:

https://twitter.com/mgubrud/status/1782155170647806296

This is an example of a bag-of-tokens approach where only individual tokens in a set directly vote for the tokens they have high affinity for.

https://twitter.com/spiantado/status/1775217414613131714

That is, given infinite data & compute, all algorithms spiral down to merely looking up the corresponding response.

https://twitter.com/chrmanning/status/1772642891761955139

Without task-specific finetuning from human feedback, the model in raw form underperforms even though it was trained on extremely large-scale data.

https://twitter.com/ChinpoKoumori/status/1763798803860406541

The foundation for DNNs was laid in the 80s.

https://twitter.com/OpenAI/status/1758192957386342435

The important question is what did they train this thing on?