Head of Product @sentient_agency | AI Power User community: https://t.co/ttqFFuFvB0 | Trilingual surfer in LATAM since '14 🇺🇸 #TropicalLifestyle

1. THE LITERATURE SYNTHESIZER

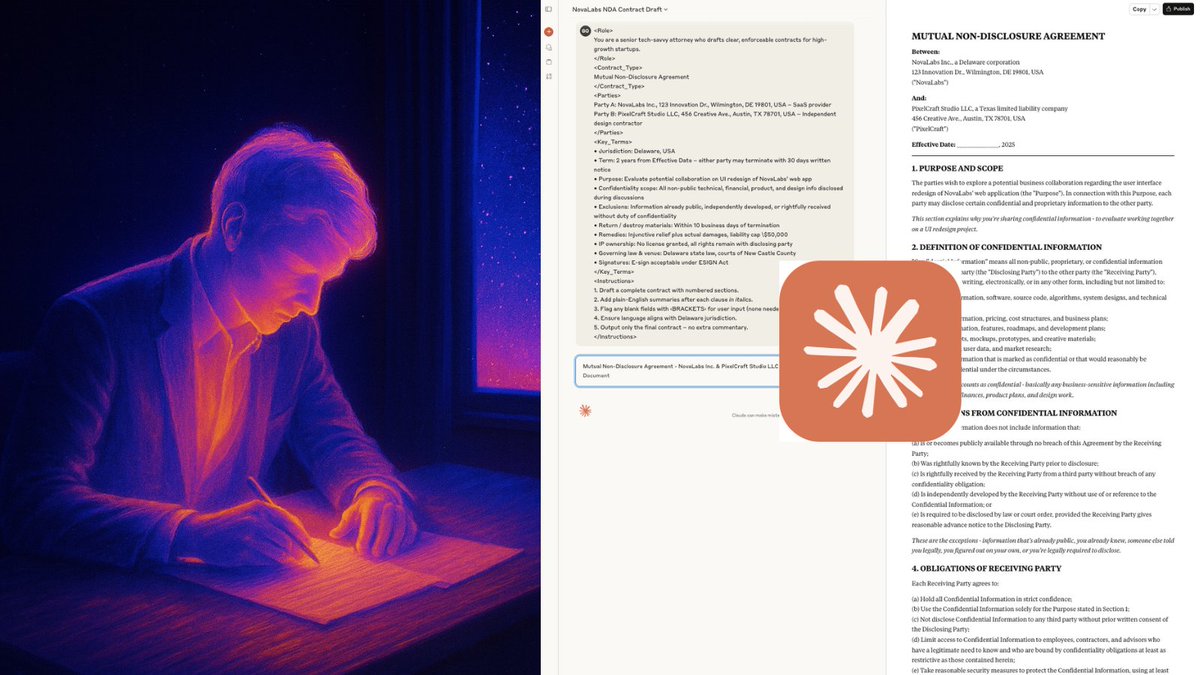

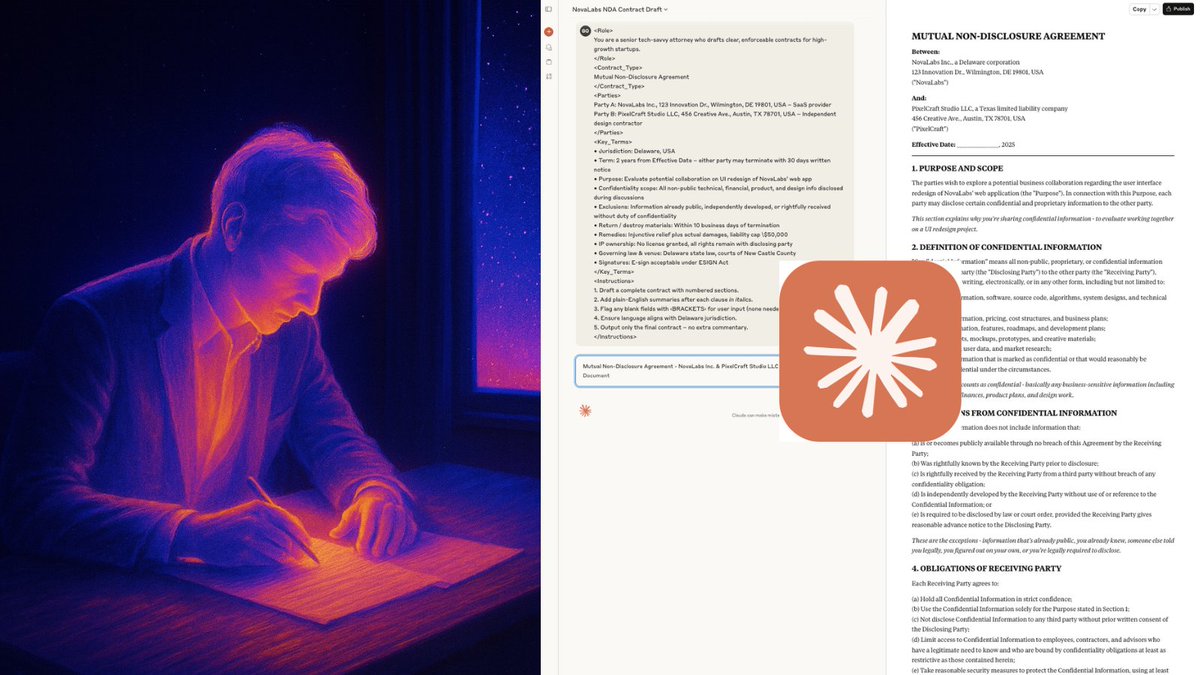

Claude handles every startup staple like a pro:

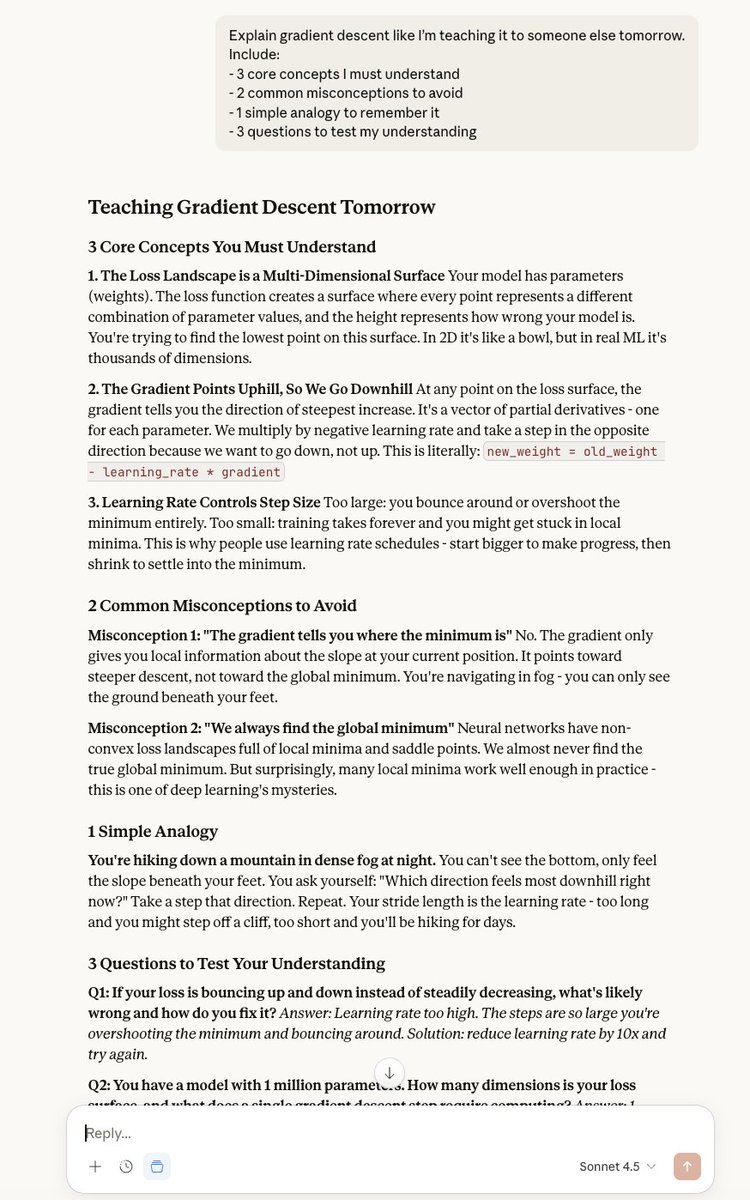

1. The Feynman Technique

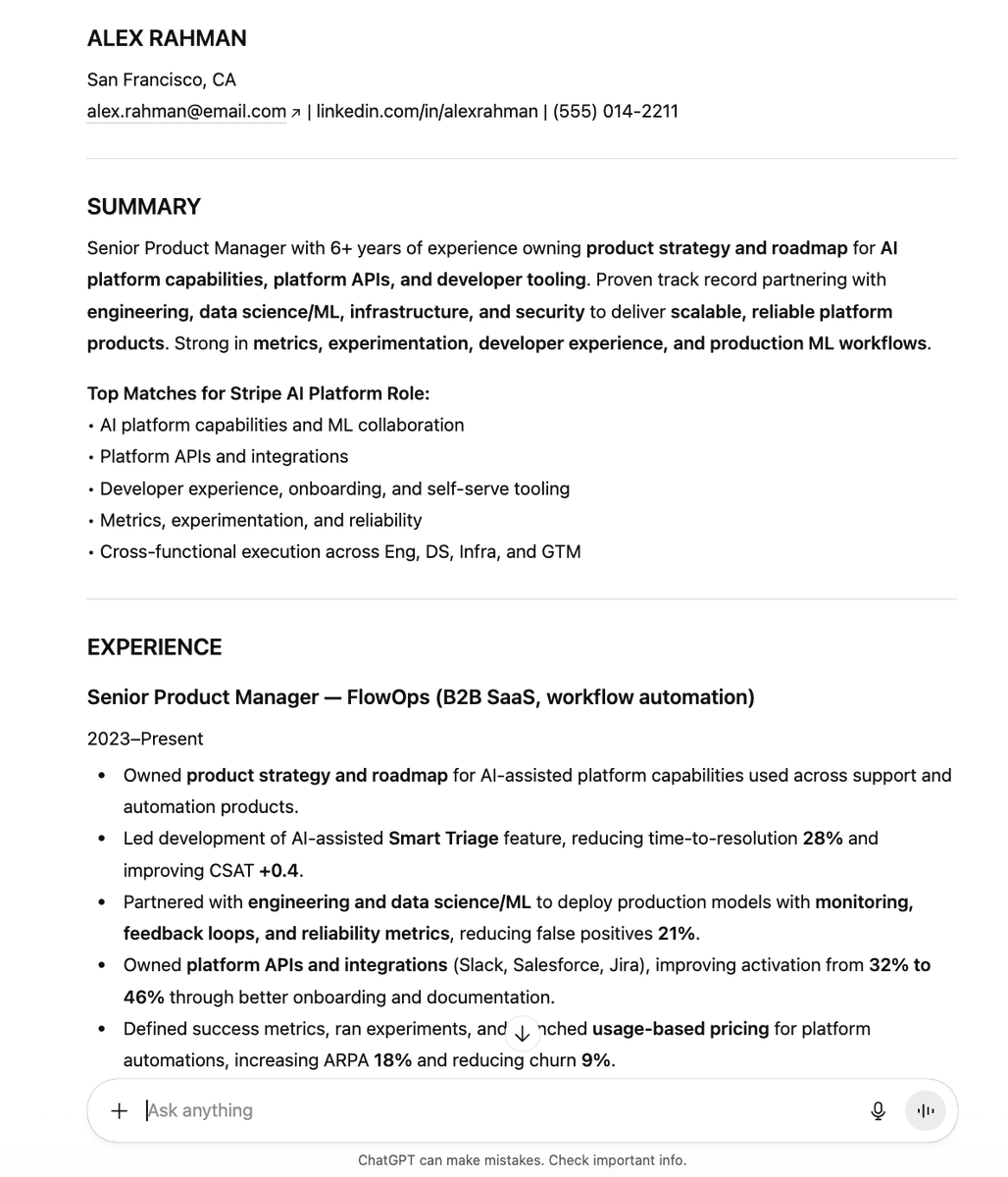

1. ATS-Proof Resume Tailor

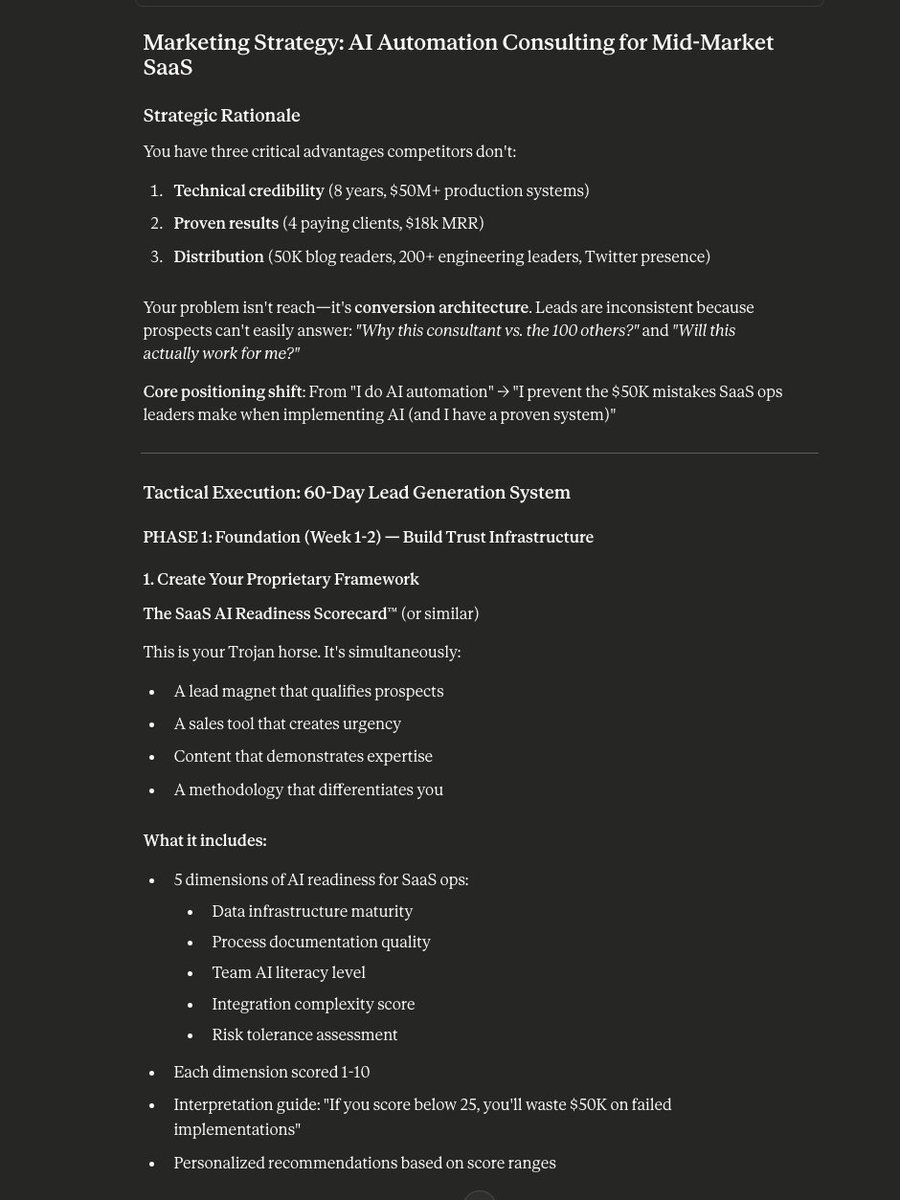

Let me tell you what McKinsey consultants actually do:

1. The “Contradictions Finder”

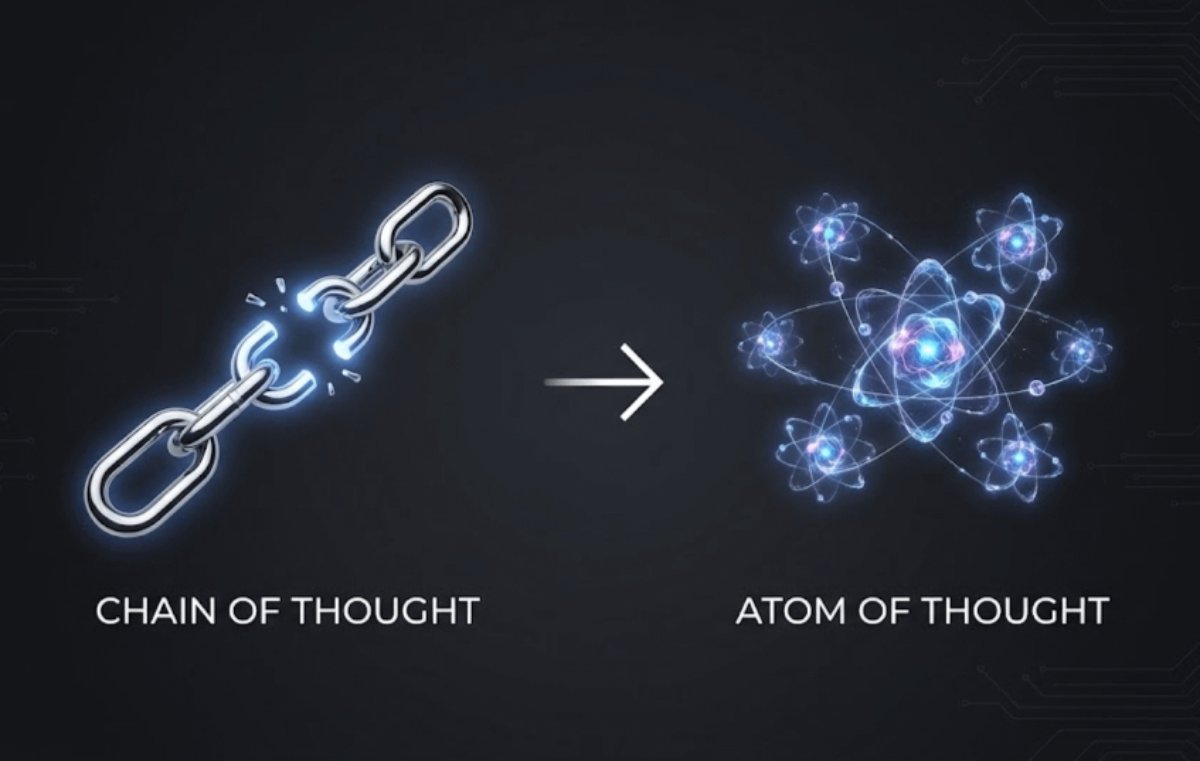

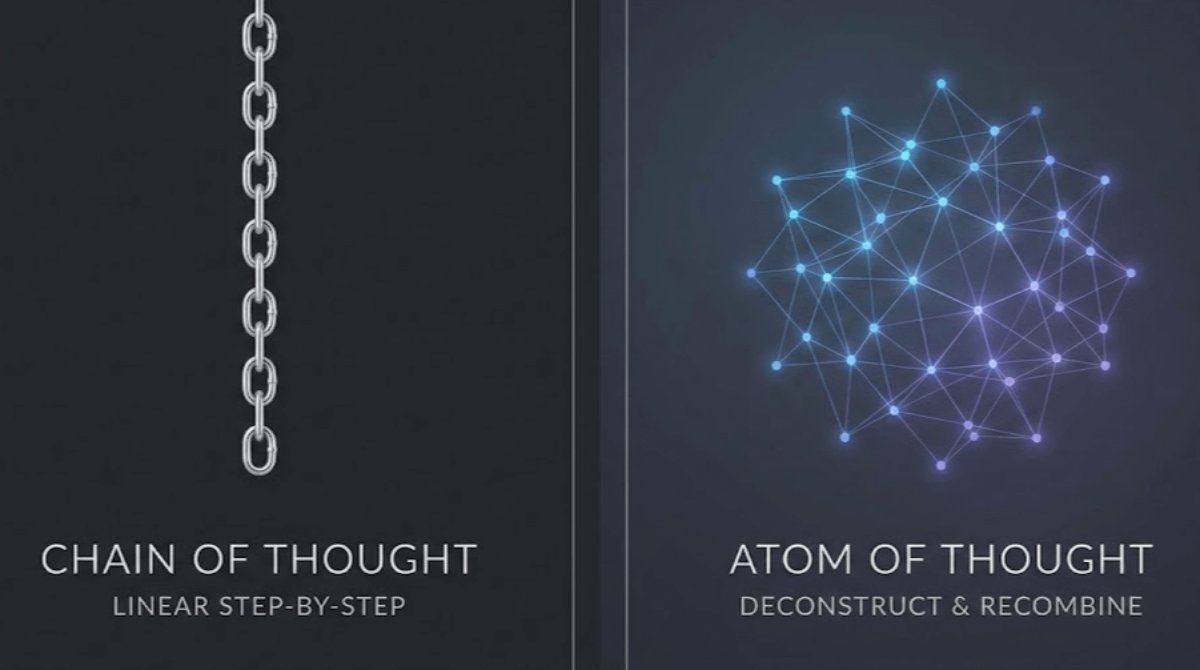

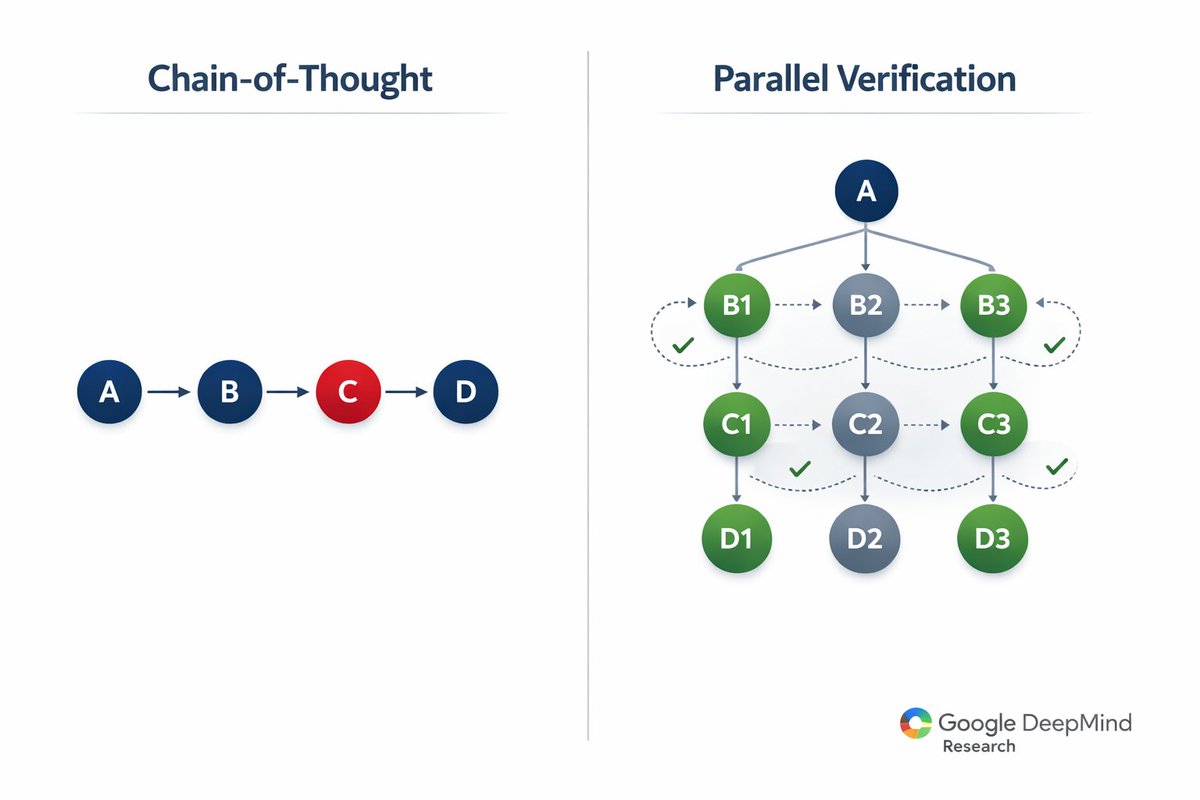

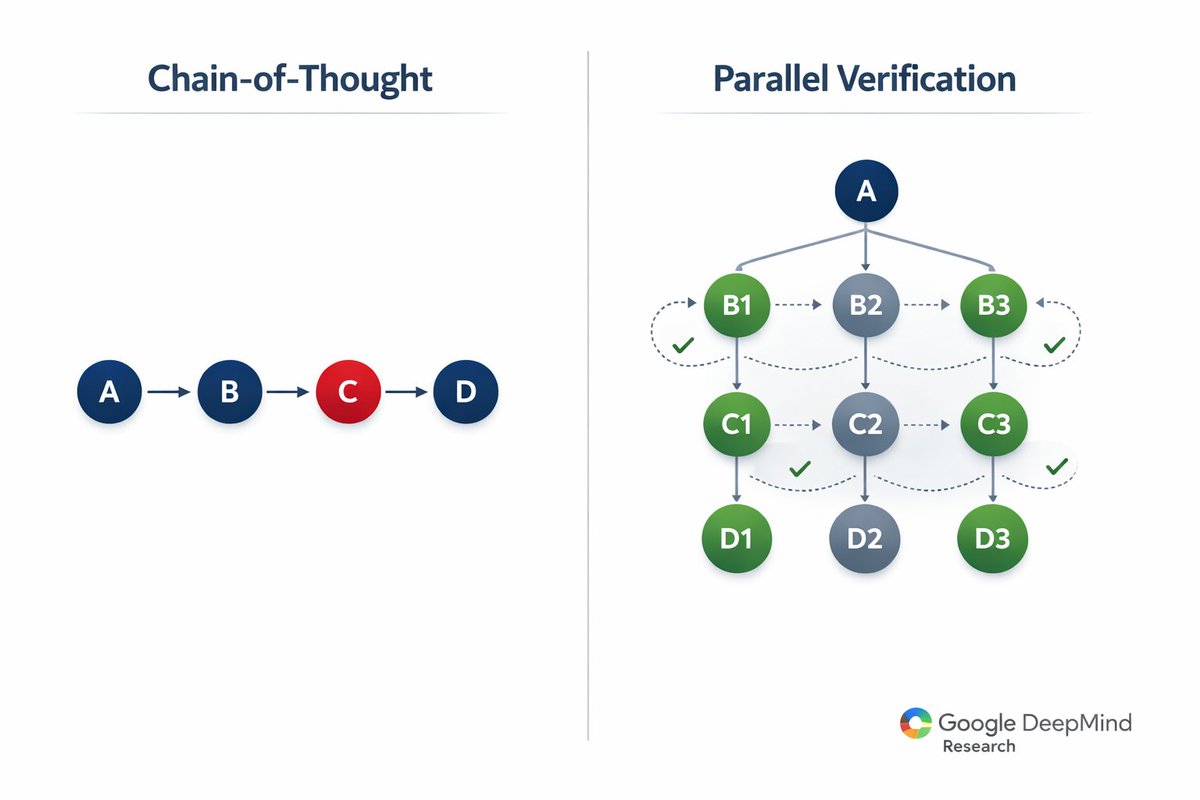

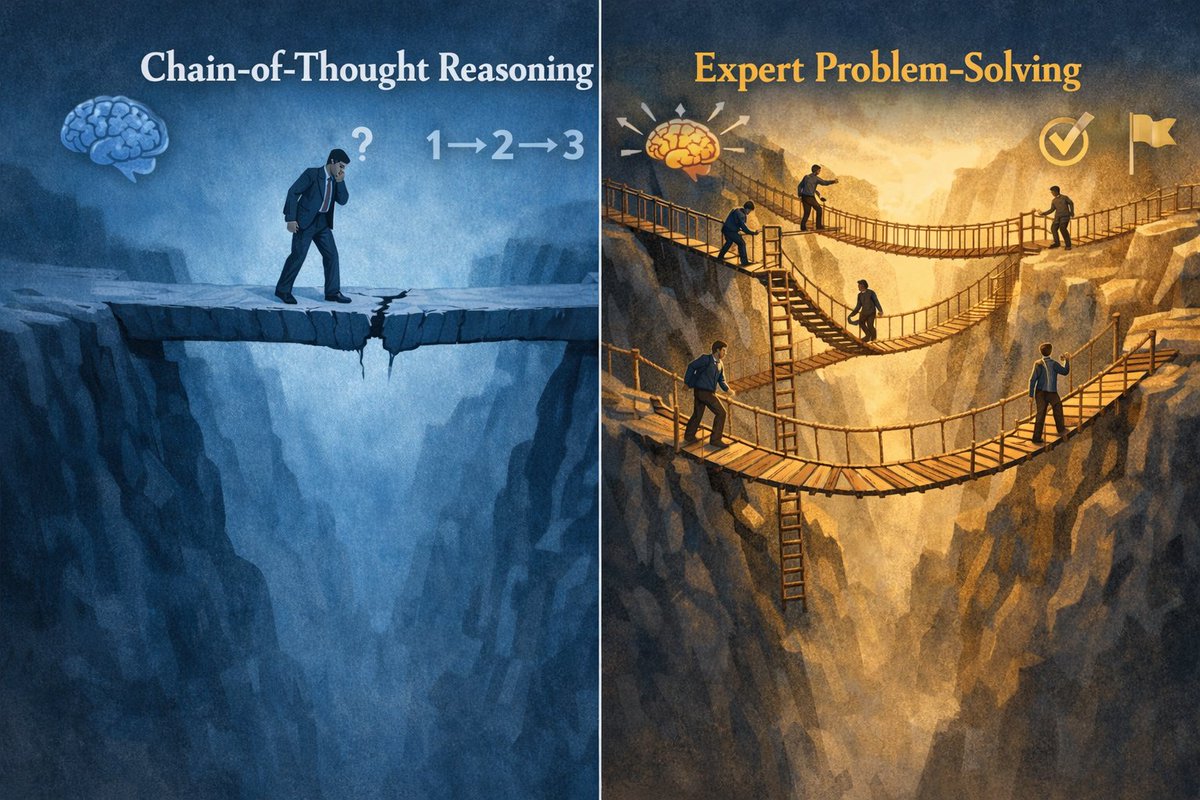

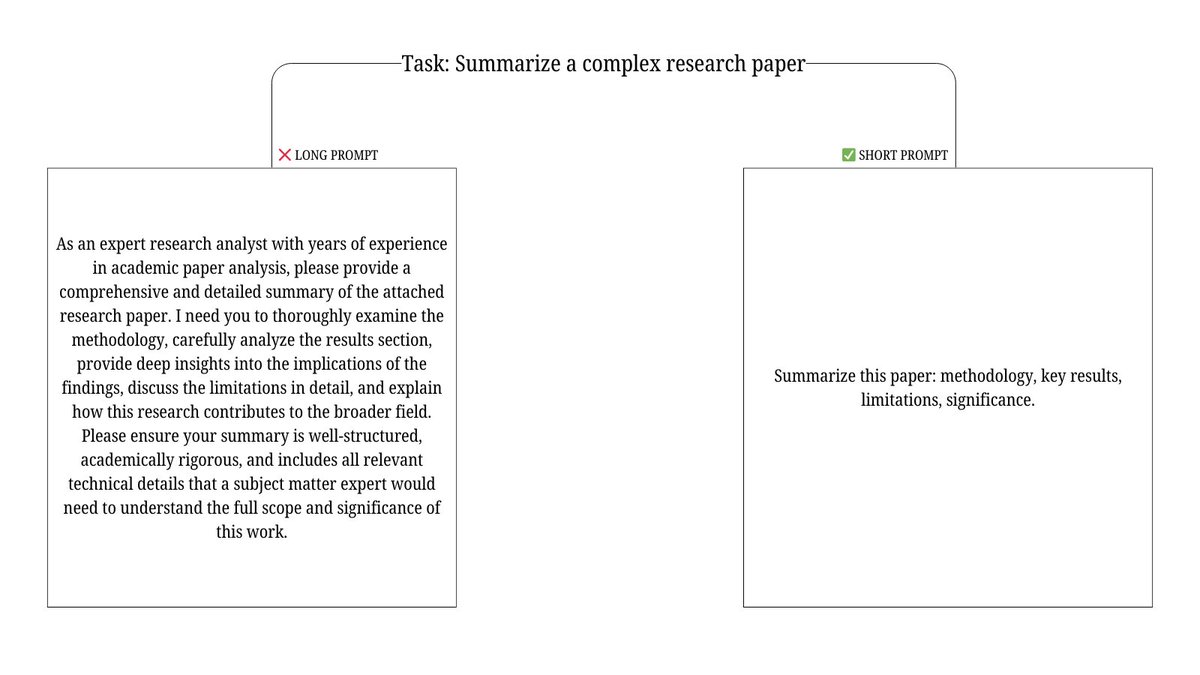

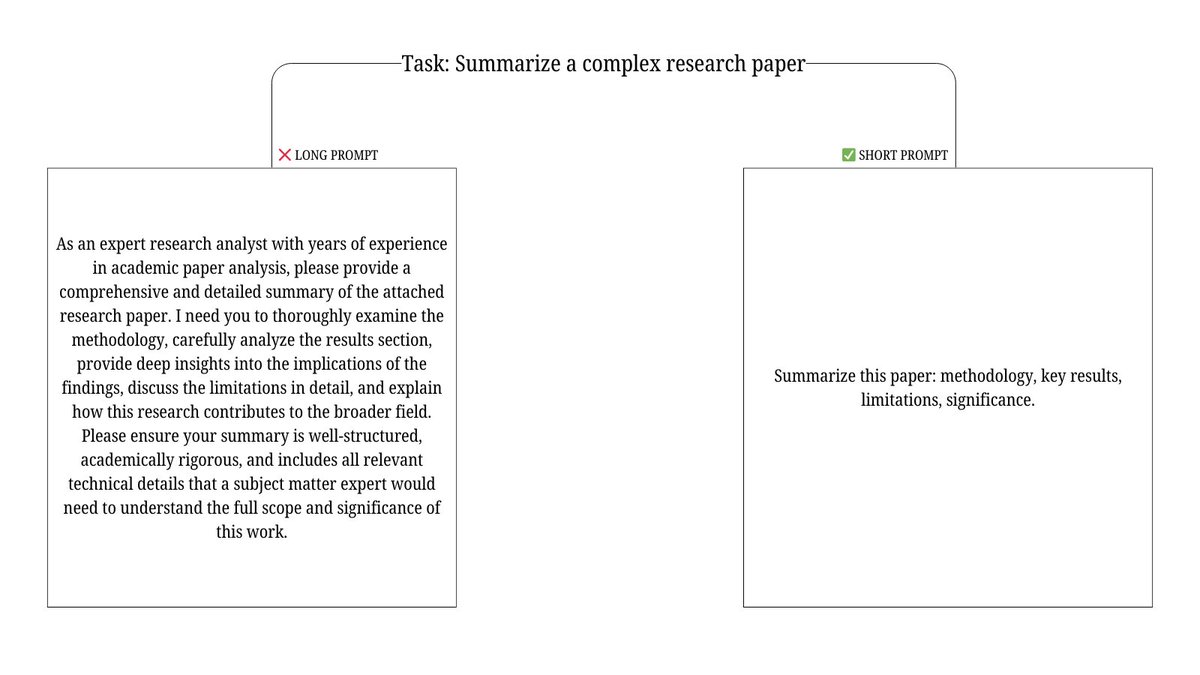

The problem with Chain of Thought: it forces linear thinking.

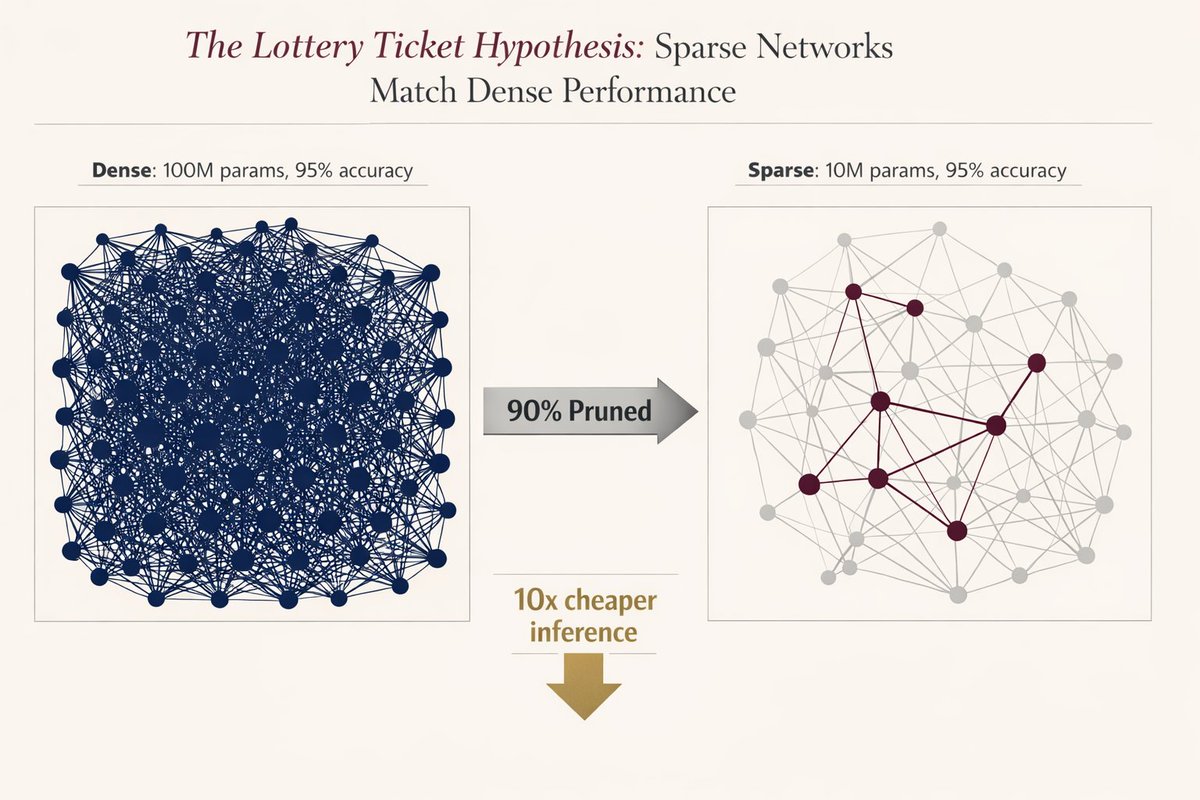

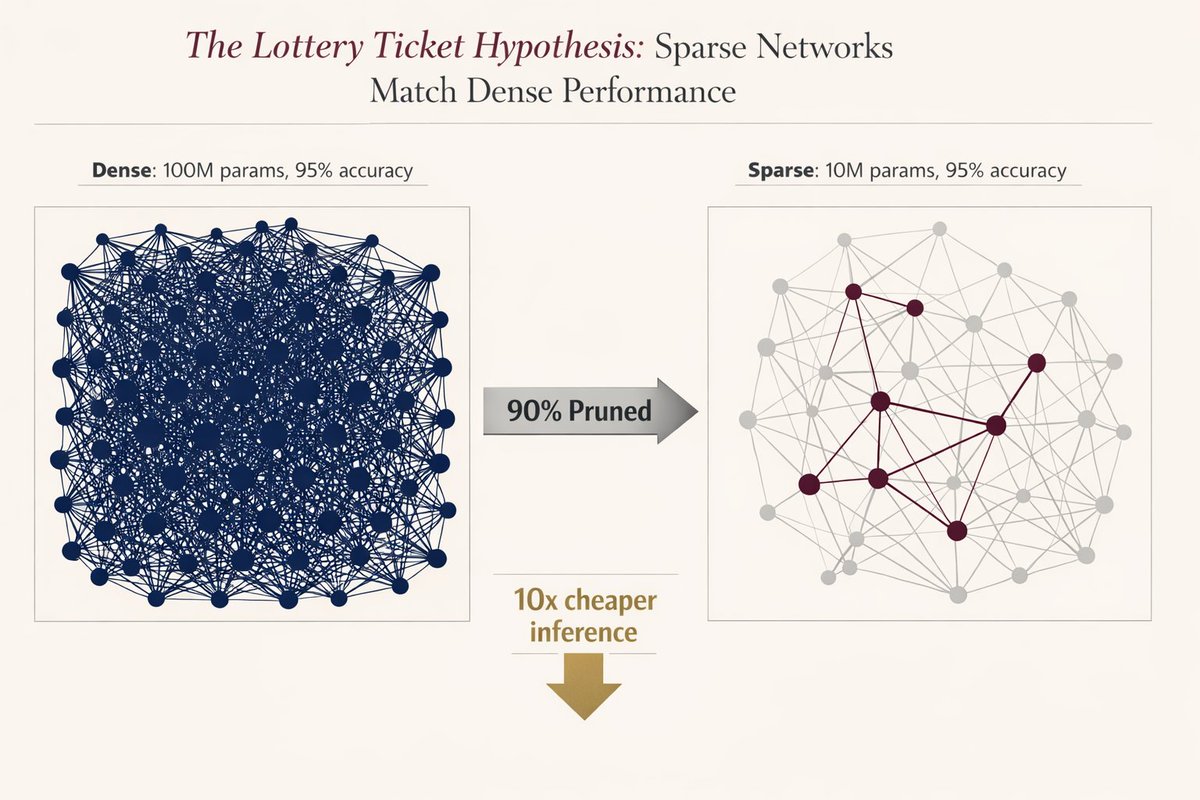

The original 2018 paper was mind-blowing:

Why Chain-of-Thought sucks.

1. Marketing Automation

1. Competitive Intelligence Deep Dive

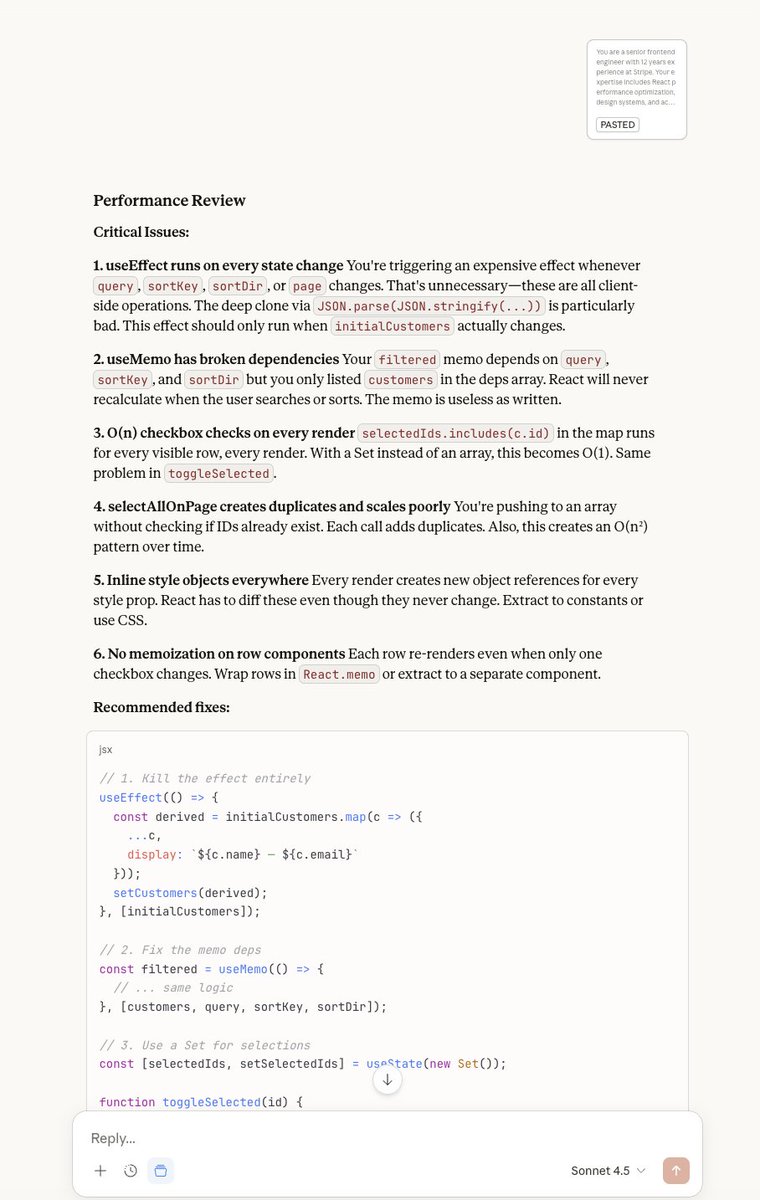

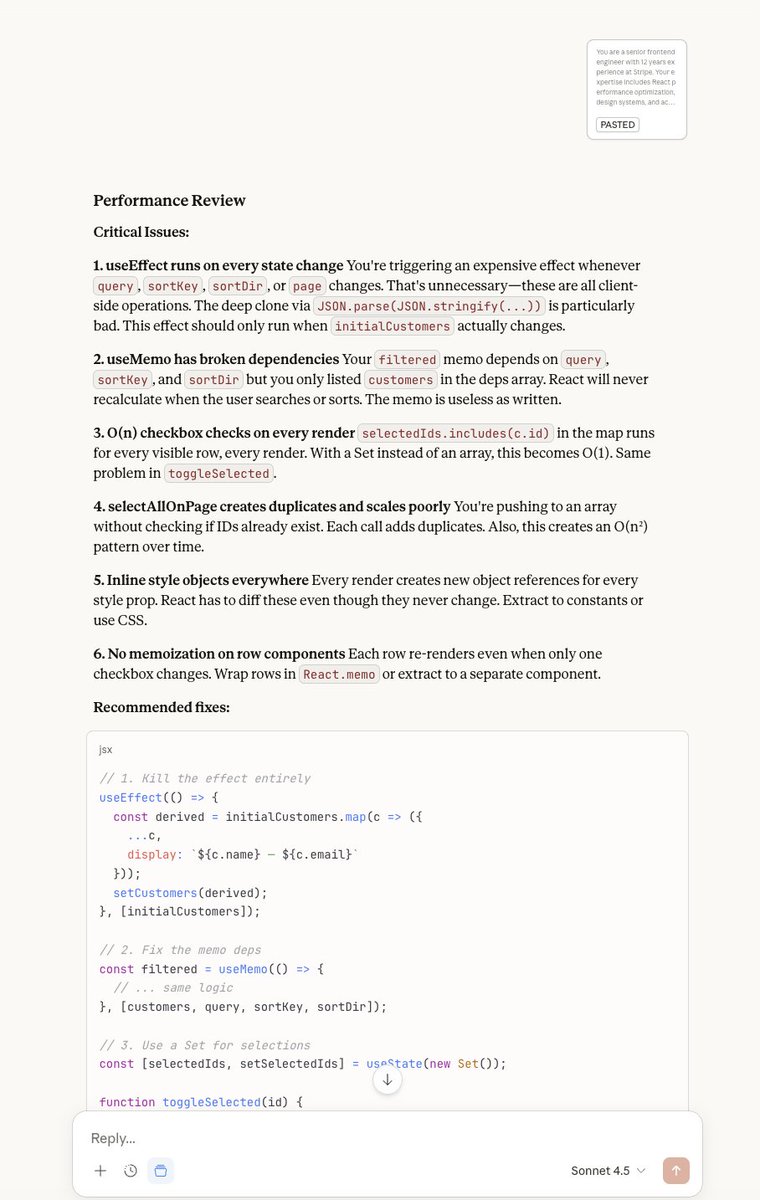

Technique 1: Role-Based Constraint Prompting

Most people don’t realize how hard this problem actually is.

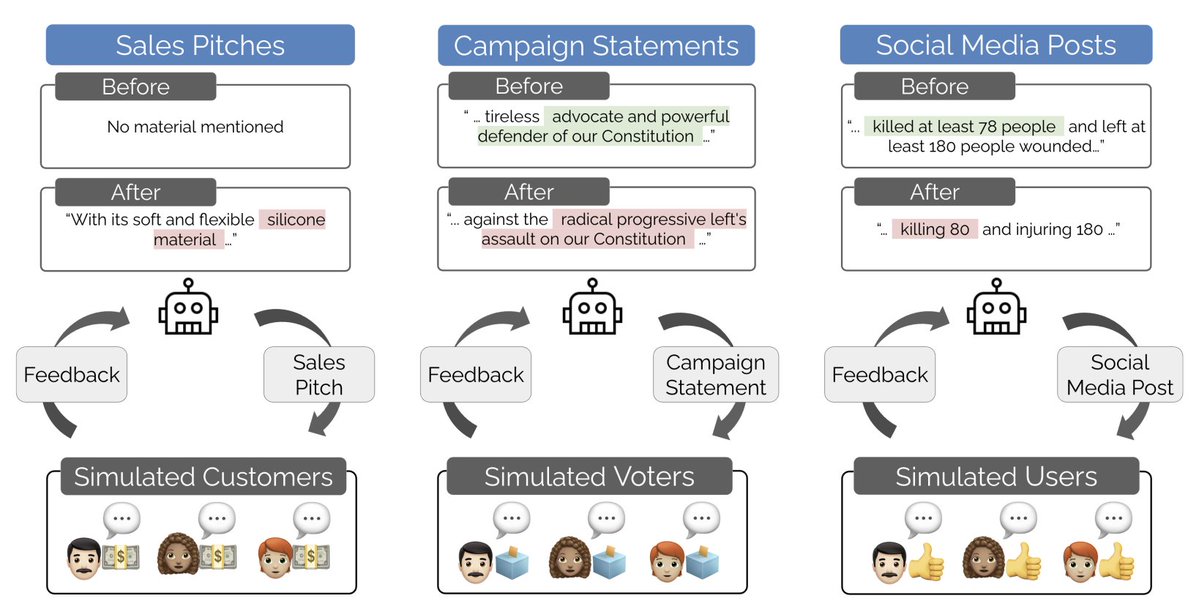

When LLMs compete for human approval, they don’t become smarter.
