How to Get a URL Link on the X (Twitter) App

The first step is the grind. But I don't mean "just work harder on 60-hour work weeks."

Here's the full conversation.

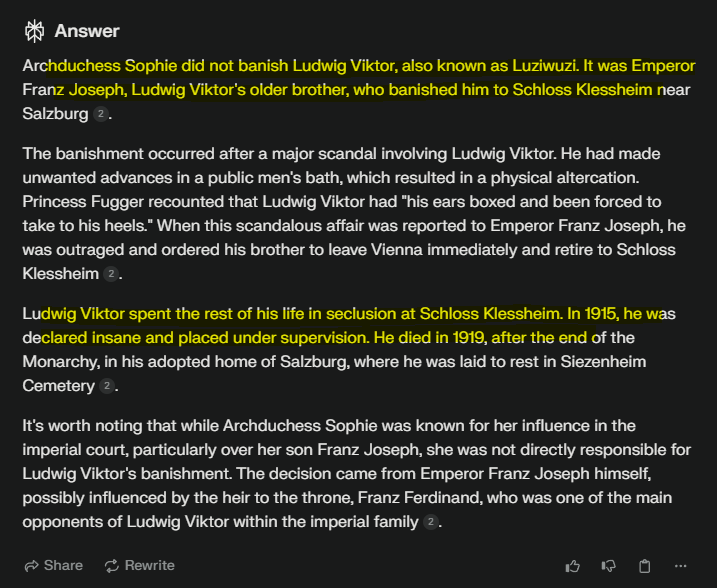

Here, you can see that Perplexity has no issue with this request. Next, let's see what ChatGPT says.
