Investigating the trajectory of AI for the benefit of society.

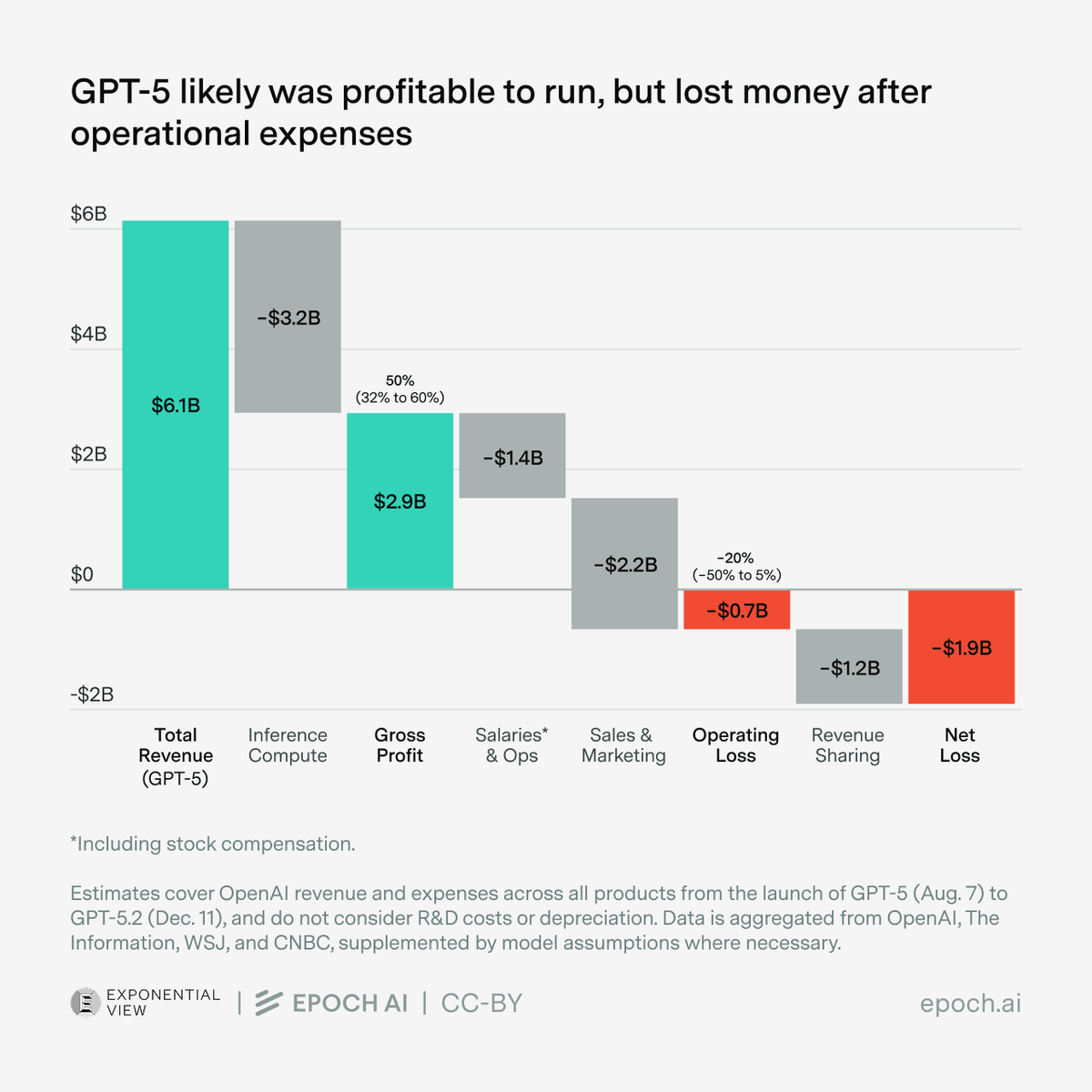

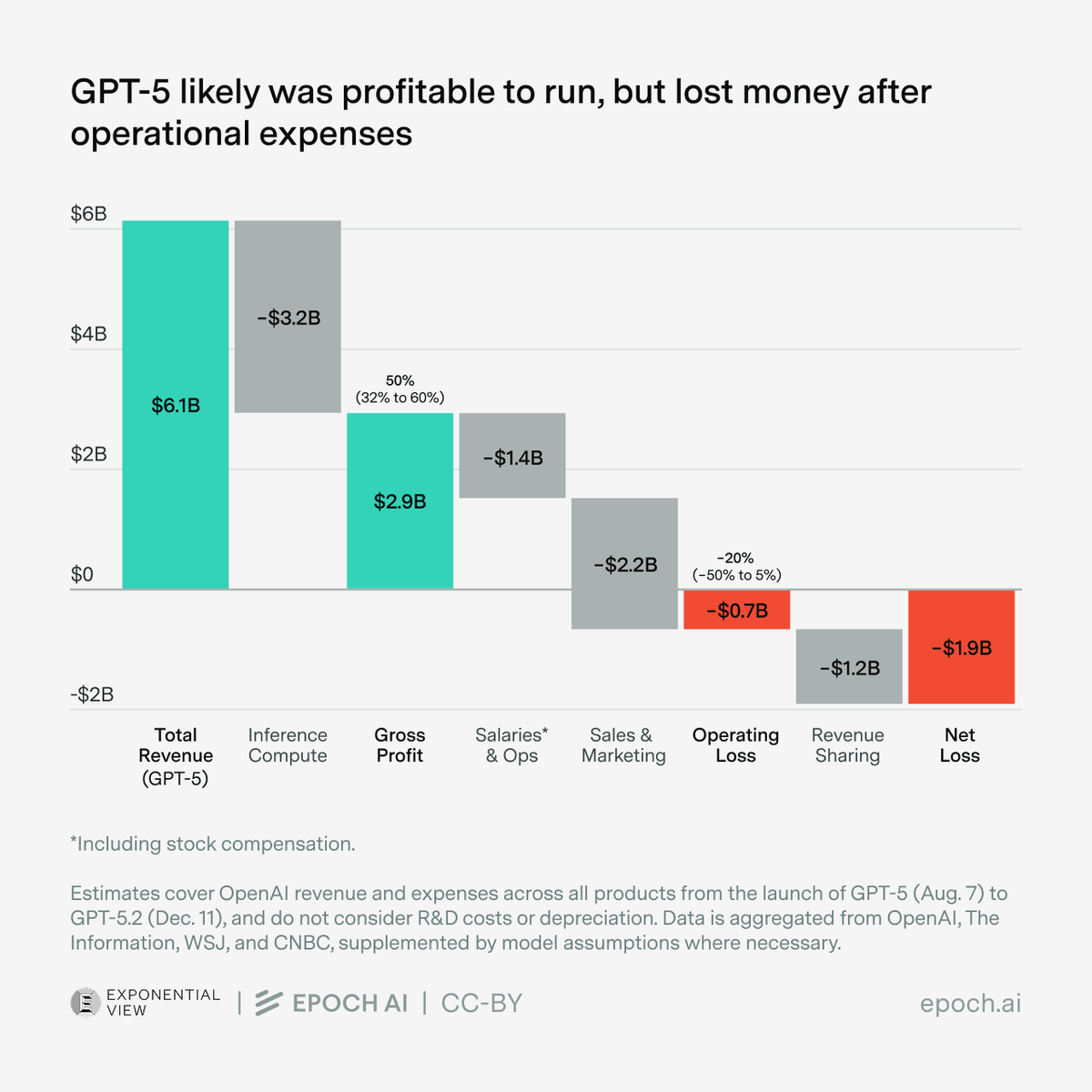

Even the gross profits from running models weren’t enough to recoup R&D costs.

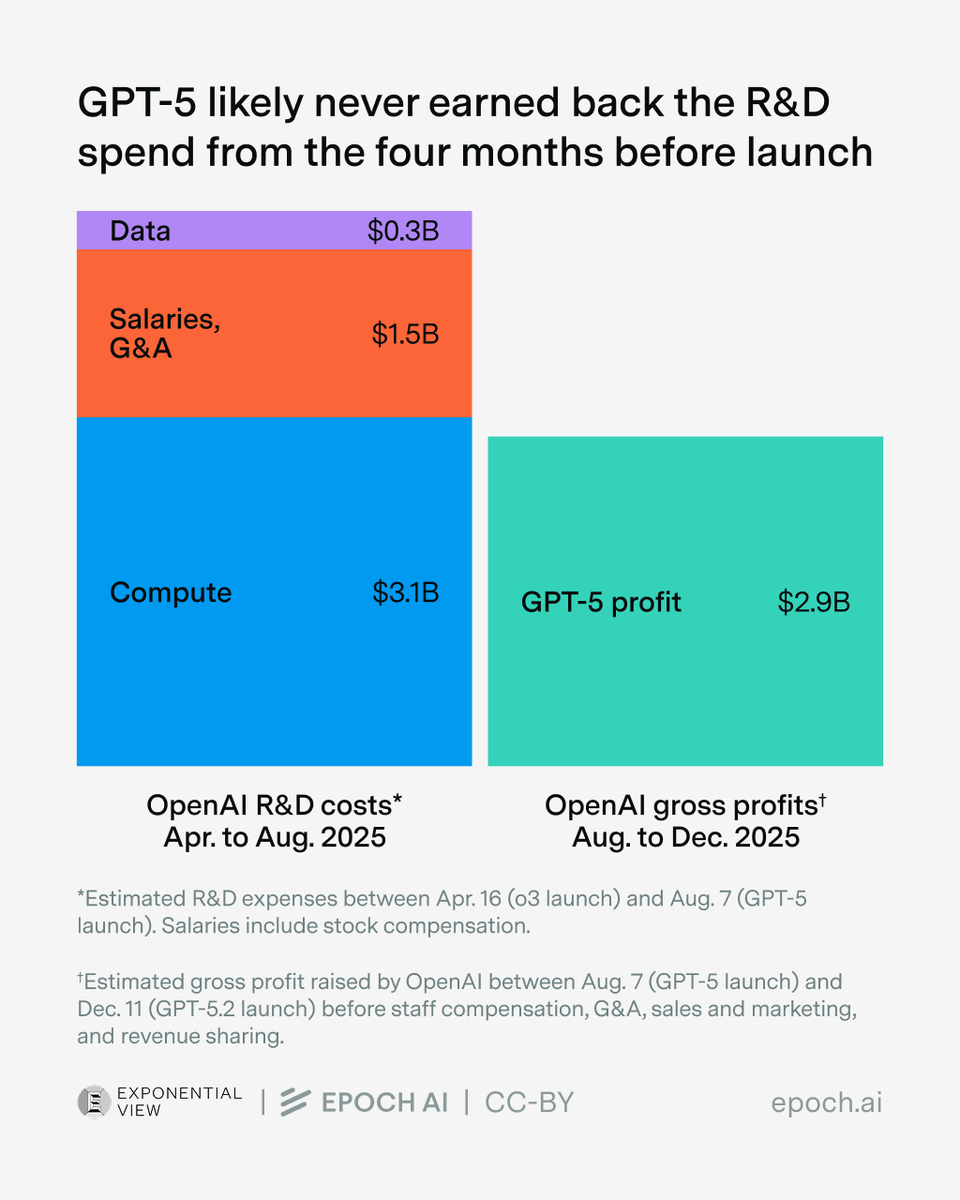

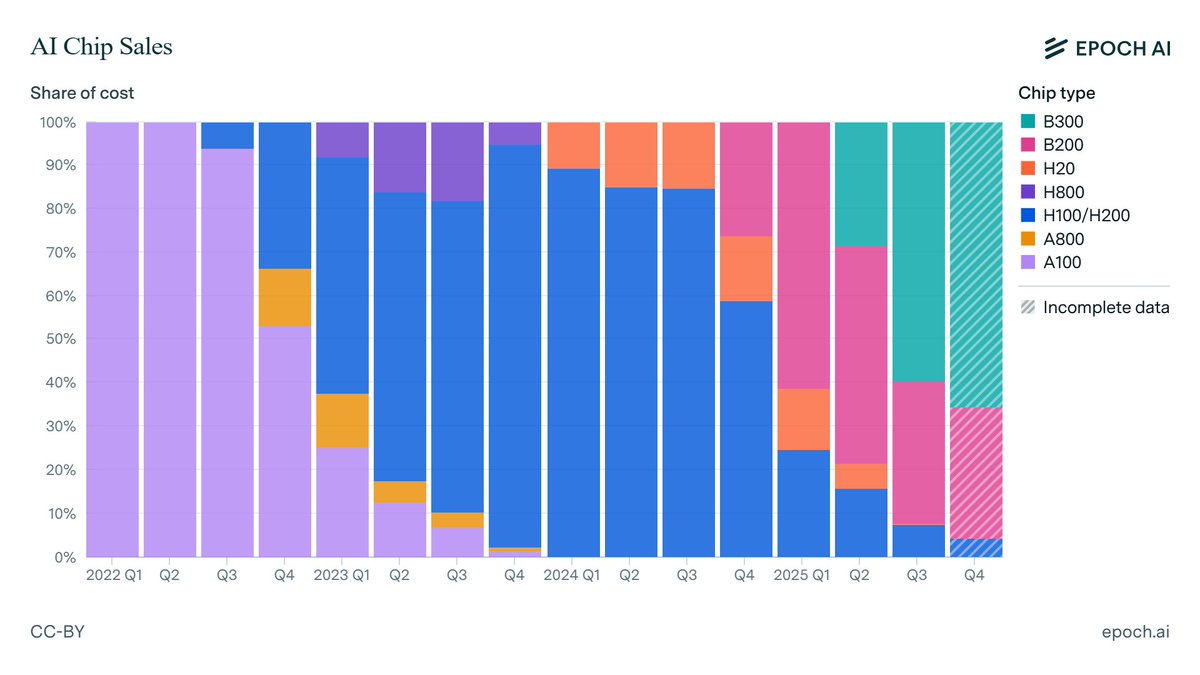

Nvidia’s B300 GPU now accounts for the majority of its revenue from AI chips, while H100s make up under 10%.

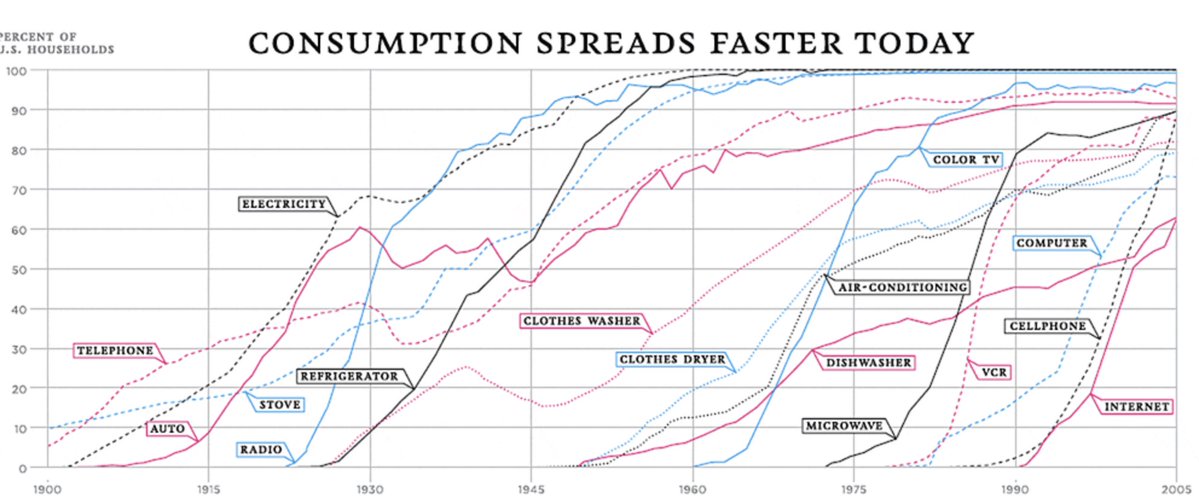

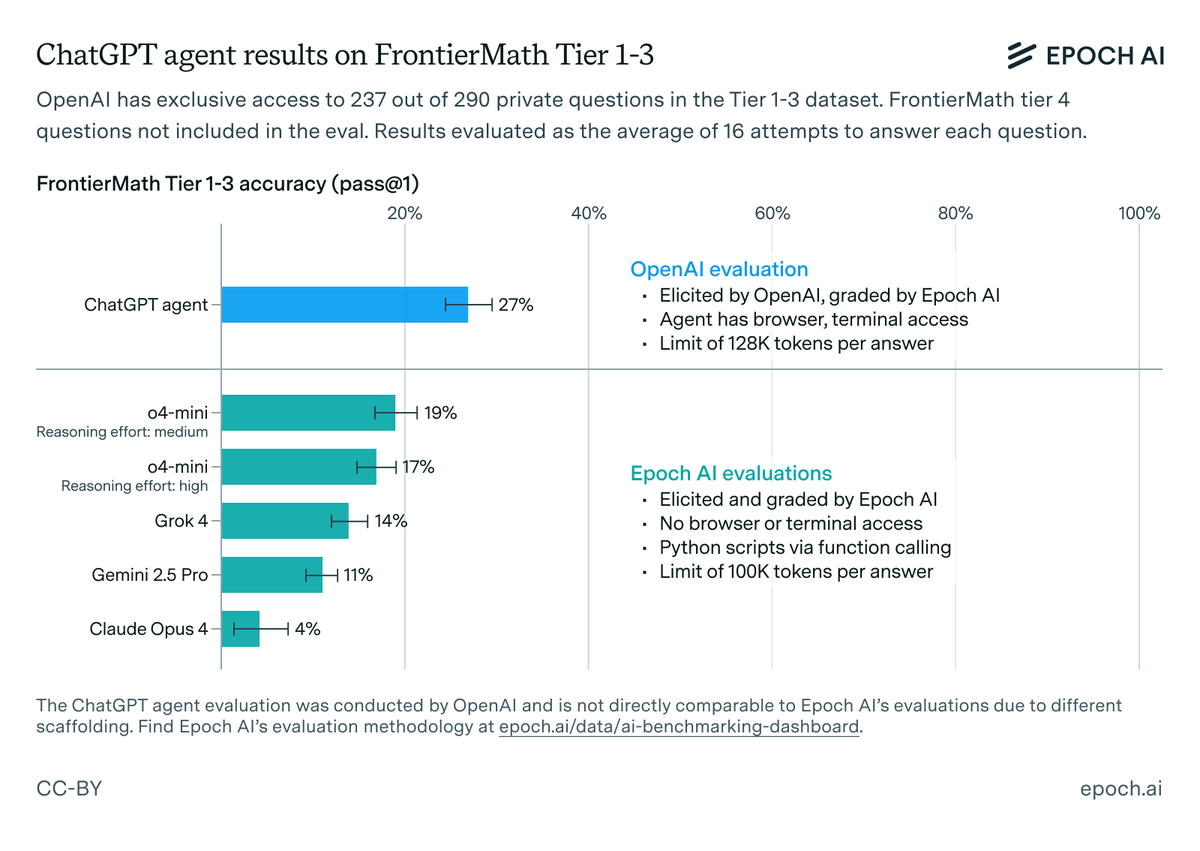

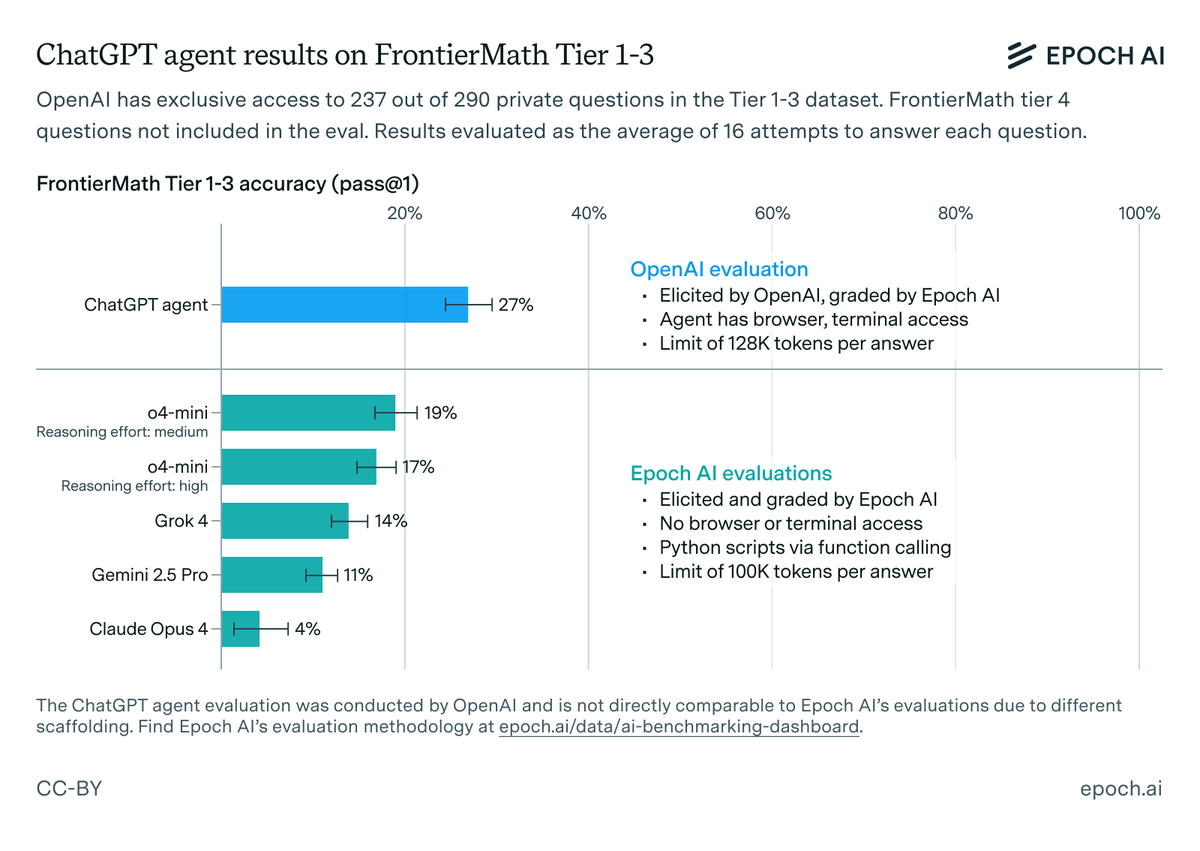

GPT-5.2 ranks first or second on most of the benchmarks we run ourselves, including a top score on FrontierMath Tiers 1–3 and our new chess puzzles benchmark. The exception is SimpleQA Verified, where it scores notably worse than even previous GPT-5 series models.

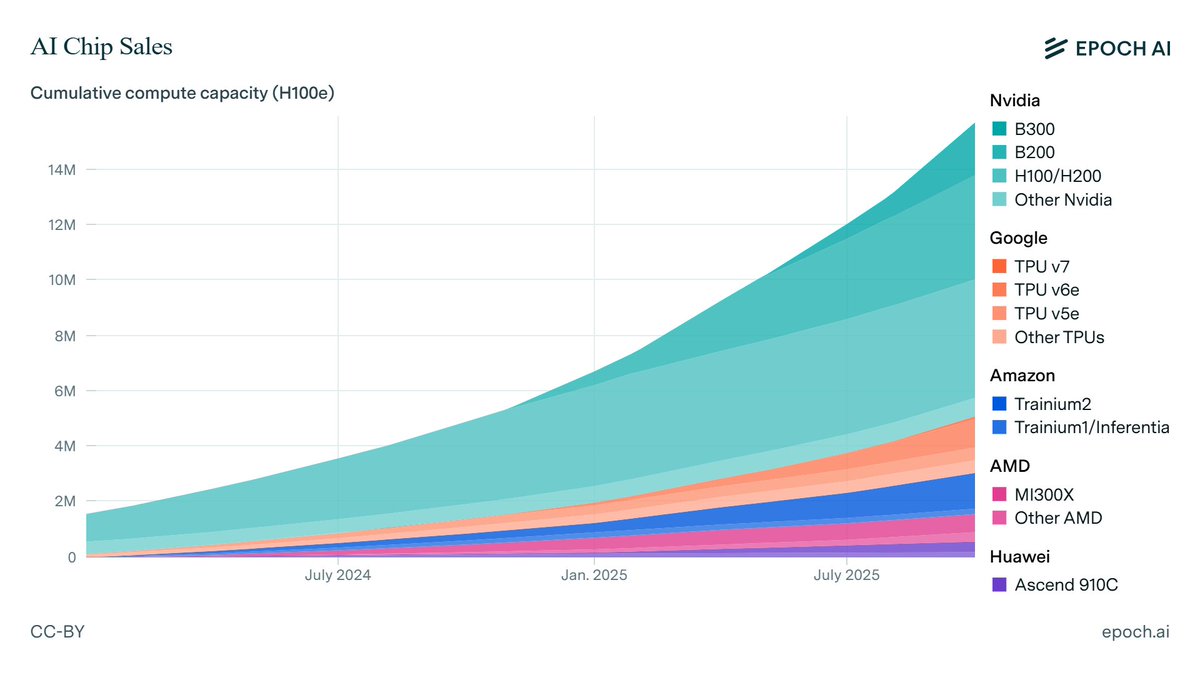

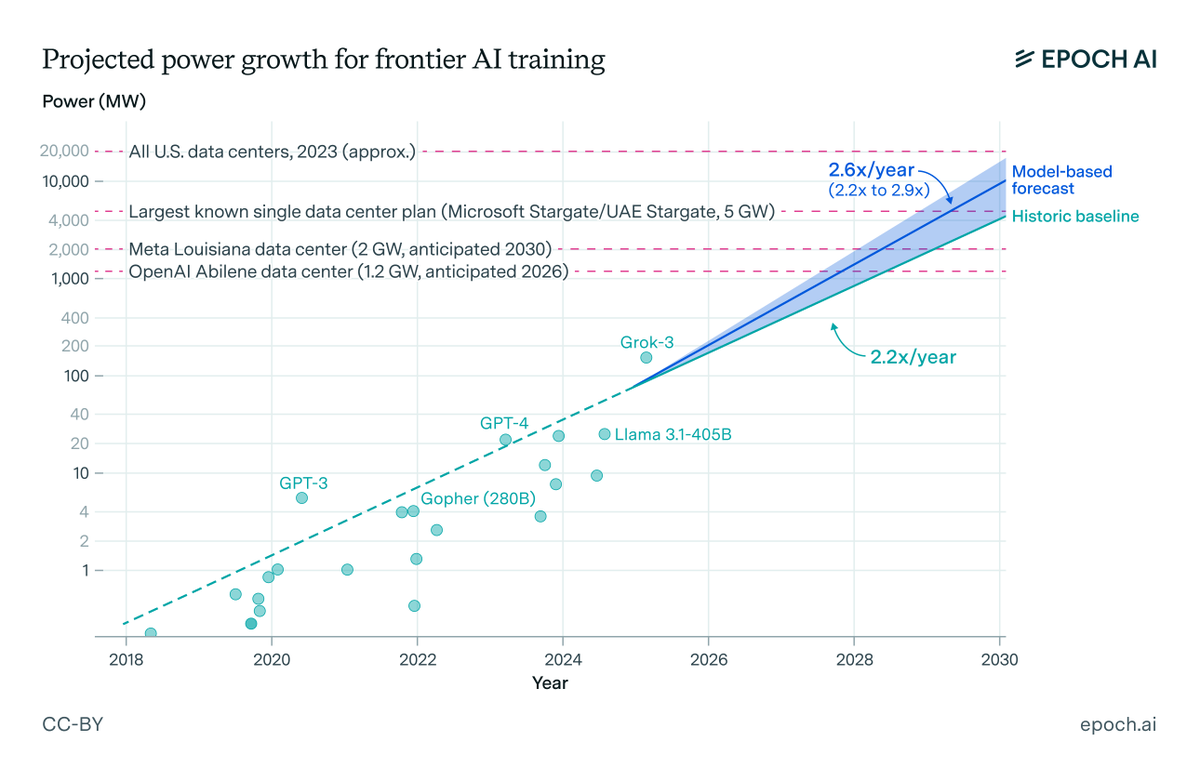

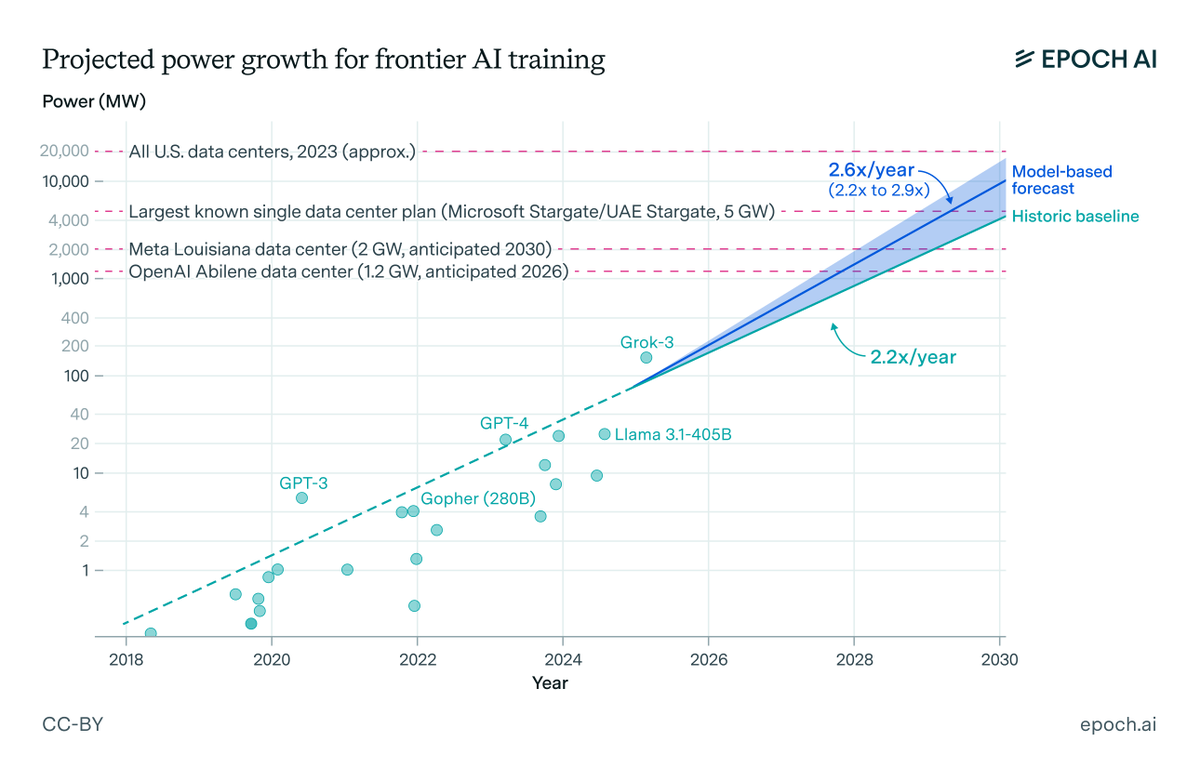

AI data centers will be some of the biggest infrastructure projects in history

Three important takeaways:

Several data centers will soon demand 1 GW of power, starting early next year:

Note that this is the publicly available version of Deep Think, not the version that achieved a gold medal-equivalent score on the IMO. Google has described the publicly available Deep Think model as a “variation” of the IMO gold model.

Revenue:

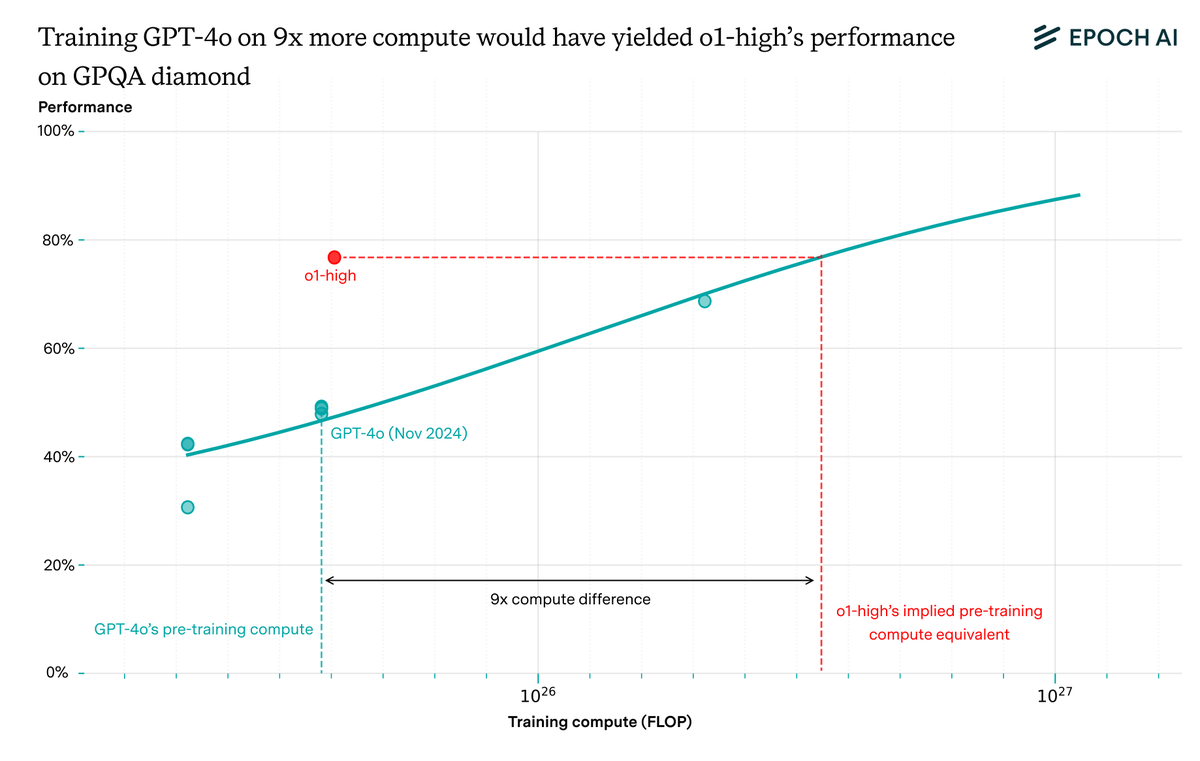

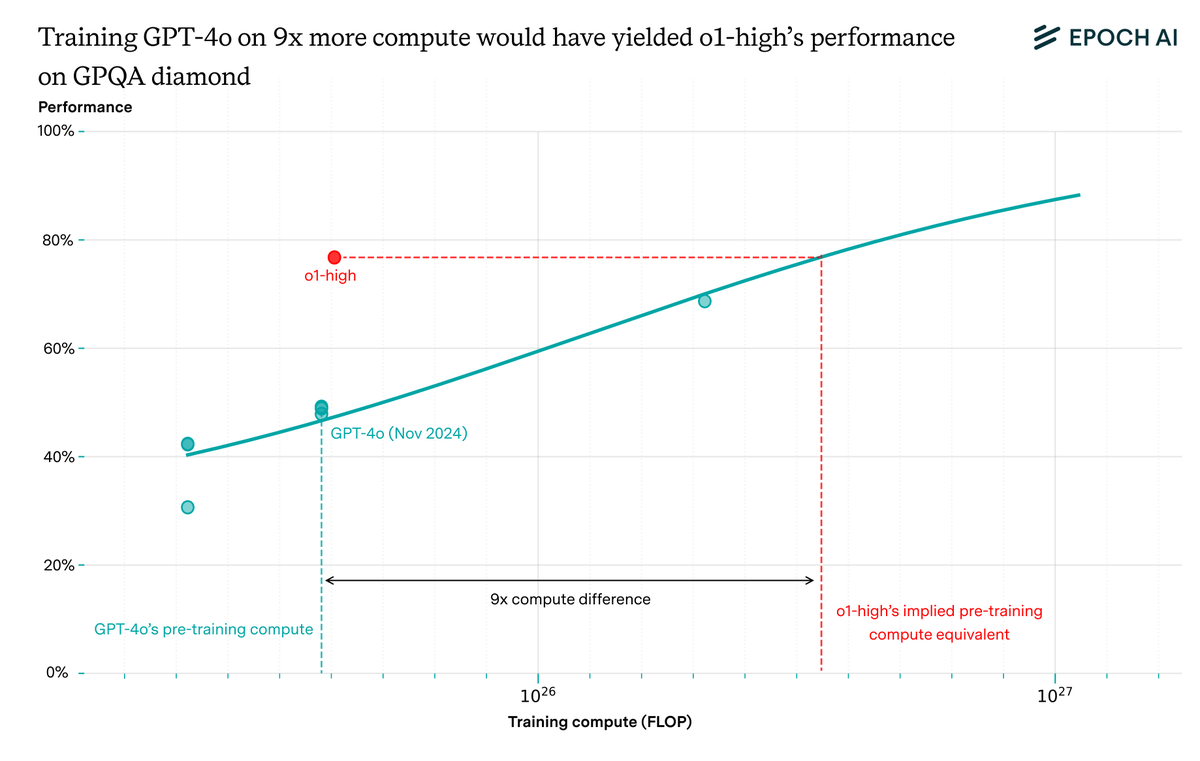

The invention of reasoning models made it possible to greatly improve performance by scaling up post-training compute. This improvement is so great that GPT-5 outperforms GPT-4.5 despite having used less training compute overall. https://twitter.com/1529761561170124800/status/1951734757483487450

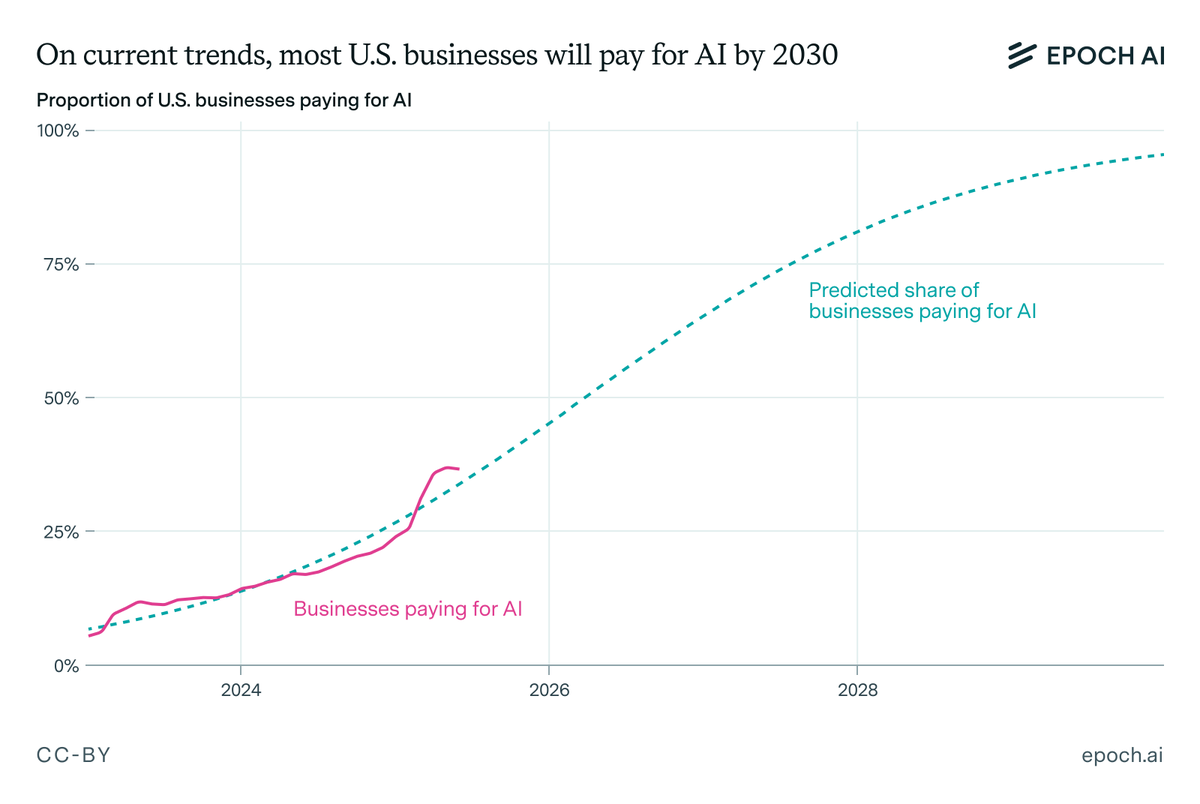

We forecast that by 2030:

Investors are incredibly uncertain about the returns to further scaling, and overestimating the returns could cost them >$100B. So rather than going all-in today, they invest more gradually, observing the returns from incremental scaling, before reevaluating further investment.

@EPRINews Power demands for frontier AI training have been growing at 2.2x per year, with frontier runs now exceeding 100 MW. The primary factor driving this growth is the scaling of the compute used to train models, at a rate of 4-5x per year.
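To make these growth rates concrete, here is a minimal sketch that extrapolates them. It assumes the 2.2x per year trend simply continues and treats 100 MW as the starting point, although the post only says frontier runs now exceed 100 MW. The function names are illustrative, and the "implied" factor is plain arithmetic on the two rates, not a breakdown given in the post.

```python
import math


def years_to_reach(start_mw: float, target_mw: float, growth_per_year: float) -> float:
    """Years of constant exponential growth needed to go from start to target."""
    return math.log(target_mw / start_mw) / math.log(growth_per_year)


def project_power(start_mw: float, growth_per_year: float, years: float) -> float:
    """Power demand after `years` of constant exponential growth."""
    return start_mw * growth_per_year ** years


POWER_GROWTH = 2.2                    # per year, from the post
COMPUTE_GROWTH = (4.0, 5.0)           # per year, from the post
START_MW = 100.0                      # assumption: "exceeding 100 MW" taken as 100

# How long until a single training run would draw 1 GW on this trend?
years_to_gw = years_to_reach(START_MW, 1000.0, POWER_GROWTH)

# Compute grows faster than power, so the rest has to come from
# something other than power draw. This is only the ratio of the rates.
implied_other_growth = tuple(c / POWER_GROWTH for c in COMPUTE_GROWTH)

print(f"Years to 1 GW at 2.2x/year: {years_to_gw:.1f}")
print(f"Power after 3 years: {project_power(START_MW, POWER_GROWTH, 3):.0f} MW")
print(f"Compute growth not explained by power: "
      f"{implied_other_growth[0]:.1f}x to {implied_other_growth[1]:.1f}x per year")
```

On these assumptions, a 100 MW run would reach the 1 GW scale in a little under three years.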

When OpenAI released o1, it blew its predecessor GPT-4o out of the water on some math and science benchmarks. The difference was reasoning training and test-time scaling: o1 was trained to optimize its chain-of-thought, allowing extensive thinking before responding to users.

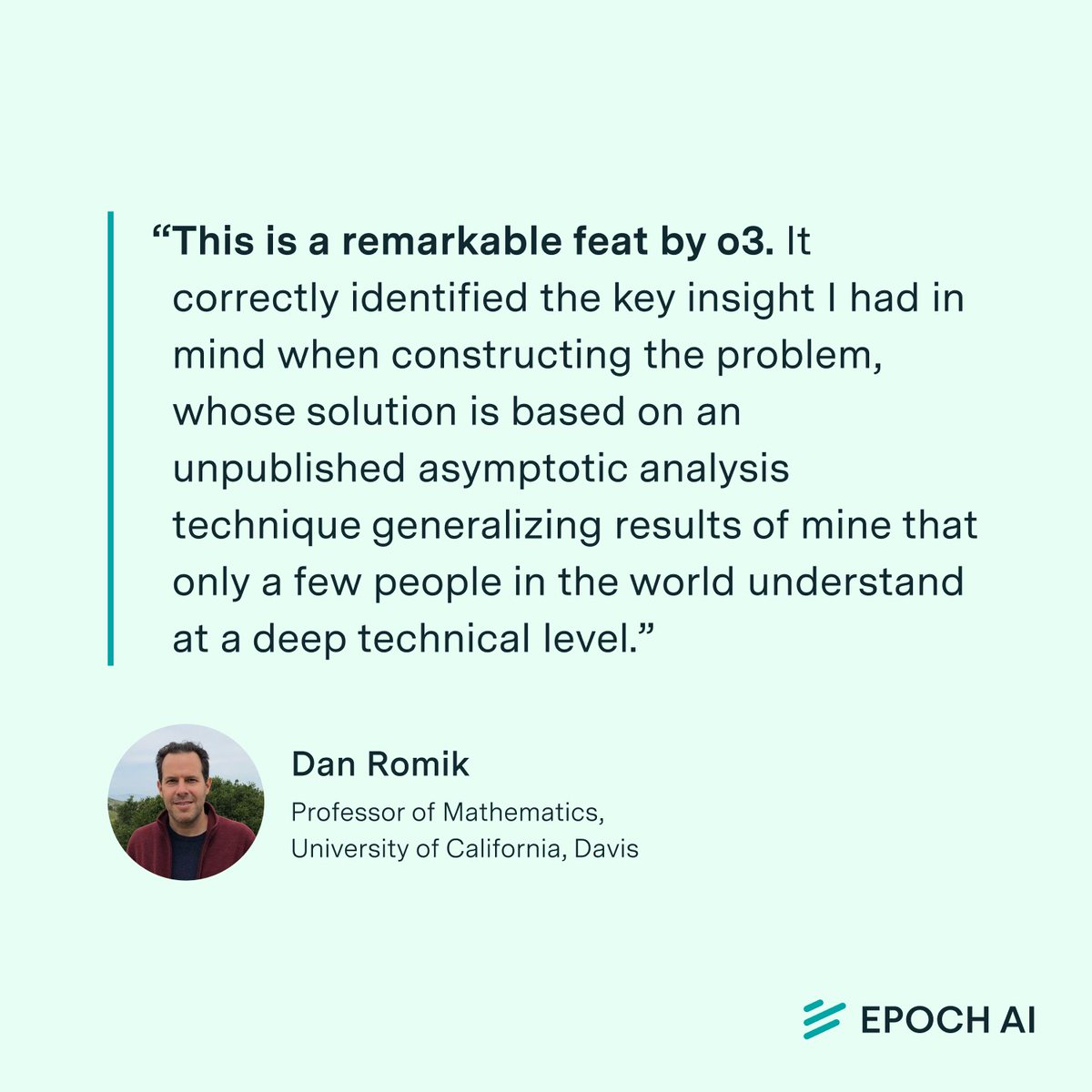

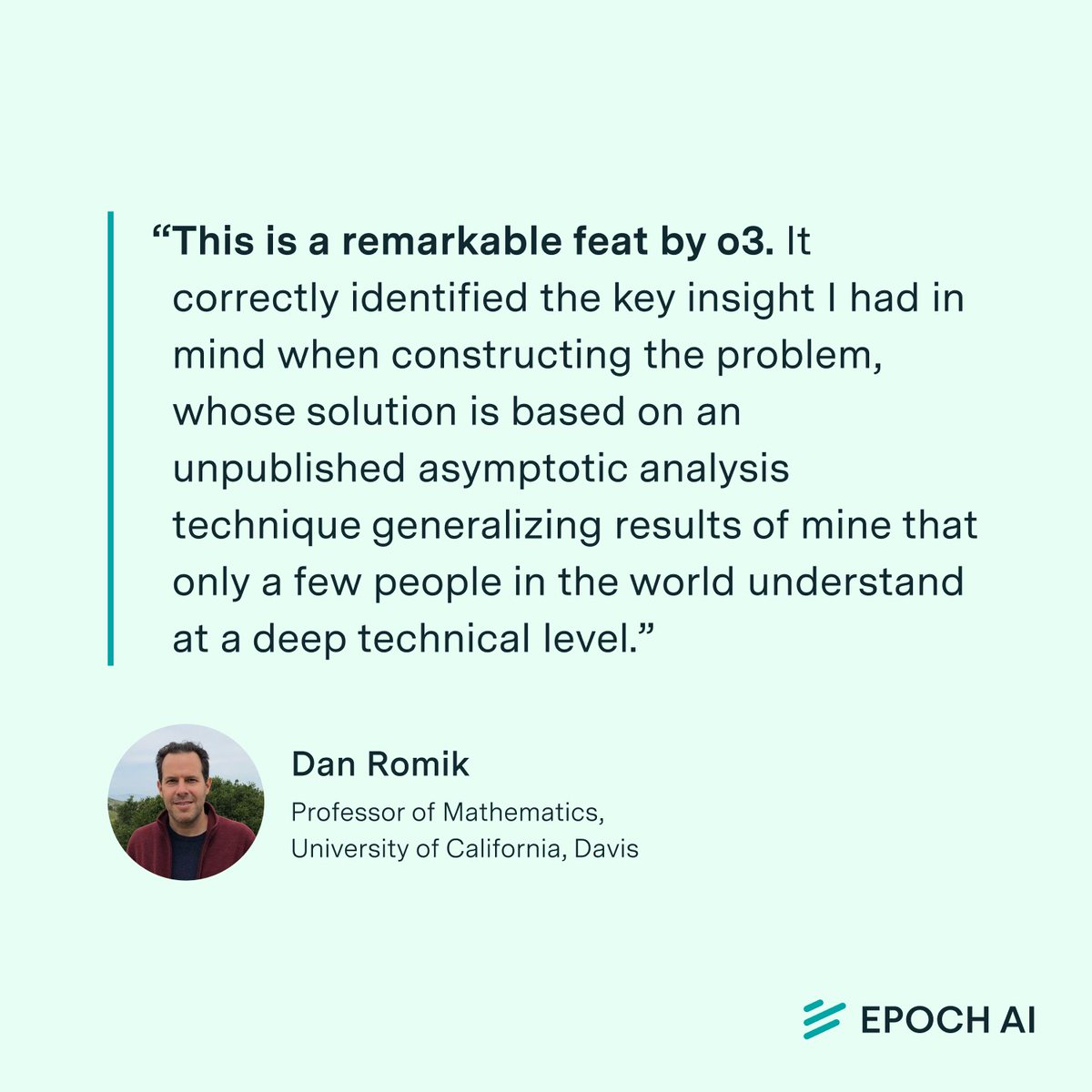

The evaluation was done internally by OpenAI on an early checkpoint of o3 using a “high reasoning setting.” The model made 32 attempts on the problem and solved it only once. OpenAI shared the reasoning trace so that Dan could analyze the model’s solution and provide commentary.
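For a sense of what "once in 32 attempts" means, the sketch below turns it into a per-attempt success rate and asks how often a fresh batch of attempts would contain at least one solve. It assumes attempts are independent with a fixed success probability, which is a simplification, and a single success on a single problem is a very noisy estimate. The function name is illustrative.

```python
def prob_at_least_one_success(p_single: float, attempts: int) -> float:
    """Chance of one or more successes in `attempts` independent tries."""
    return 1.0 - (1.0 - p_single) ** attempts


ATTEMPTS = 32     # from the post
SUCCESSES = 1     # from the post

# Point estimate of the per-attempt success rate.
p_hat = SUCCESSES / ATTEMPTS

# If that rate were exactly right, how often would 32 new attempts
# include at least one correct solution?
p_repeat = prob_at_least_one_success(p_hat, ATTEMPTS)

print(f"Estimated per-attempt success rate: {p_hat:.3f}")
print(f"Chance of at least one solve in {ATTEMPTS} new attempts: {p_repeat:.2f}")
```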

Why 9 months? Model developers face a tradeoff: wait before starting a run to take advantage of better hardware and algorithms, or start sooner with what’s available. Waiting lets you train faster once you start, so there’s an optimal run length for any given deadline.
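The tradeoff can be illustrated with a toy model. The post does not specify one, so everything here is an assumption: the training throughput available to a run (hardware plus algorithms) is fixed when the run starts and improves exponentially at a continuous rate `growth_rate` per year, and the run must end at the deadline. Effective compute is then exp(growth_rate * start) * run_length, which is maximized at run_length = 1 / growth_rate. The sketch checks this numerically.

```python
import math


def effective_compute(run_length: float, deadline: float, growth_rate: float) -> float:
    """Toy model: throughput locked in at start time, multiplied by run length."""
    start = deadline - run_length
    return math.exp(growth_rate * start) * run_length


def best_run_length(deadline: float, growth_rate: float, steps: int = 10_000) -> float:
    """Grid search over run lengths in (0, deadline] for the highest effective compute."""
    candidates = [deadline * i / steps for i in range(1, steps + 1)]
    return max(candidates, key=lambda r: effective_compute(r, deadline, growth_rate))


# Hypothetical rate chosen so that the analytic optimum 1 / growth_rate
# equals 9 months (0.75 years).
growth_rate = 1 / 0.75
deadline = 3.0  # years from now; illustrative only

best = best_run_length(deadline, growth_rate)
annual_factor = math.exp(growth_rate)

print(f"Best run length: {best:.2f} years")
print(f"Implied improvement in throughput: {annual_factor:.1f}x per year")
```

In this sketch the optimum does not depend on how far away the deadline is, as long as the deadline is later than 1 / growth_rate. The implied yearly improvement factor is an output of the toy model, not a figure from the post.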

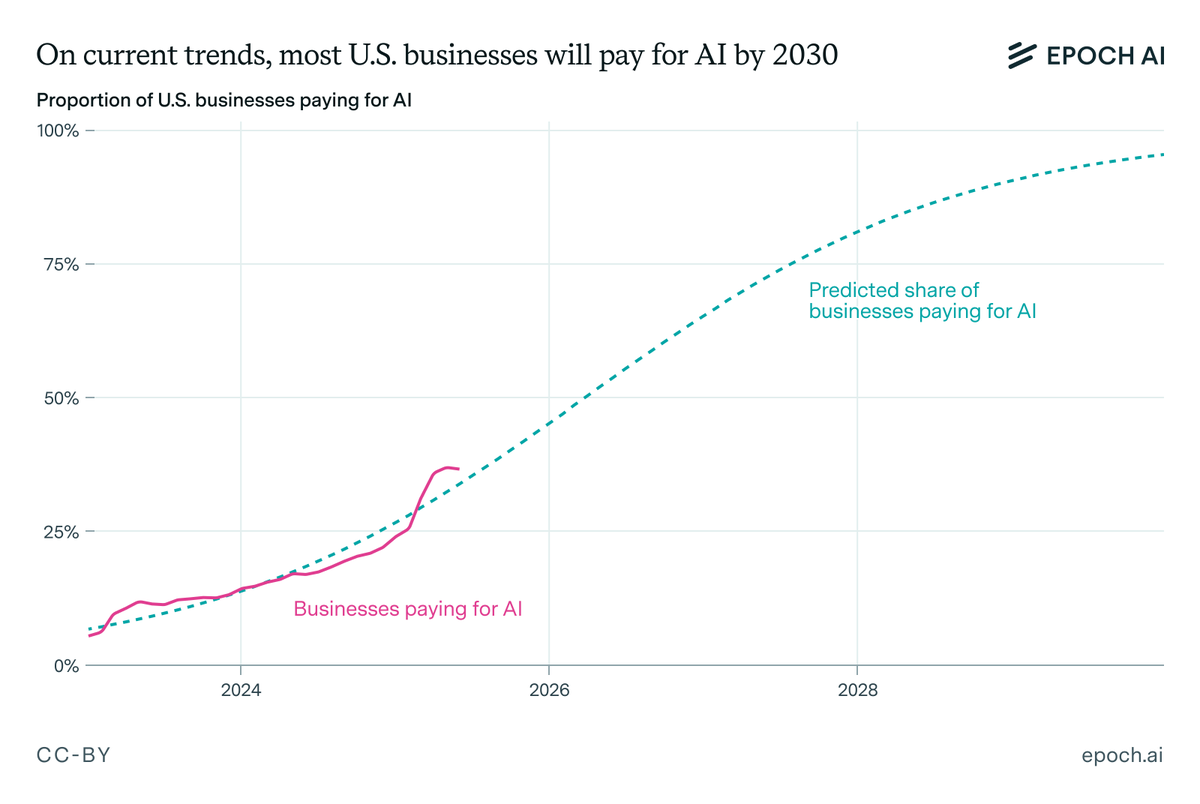

Historically, technology adoption took decades. For example, telephones took 60 years to reach 70% of US households. But tech diffuses faster and faster over time, and we should expect AI to continue this trend.

This evaluation is not directly comparable to those on Epoch AI’s benchmarking hub, as it uses a different scaffold. First, we did not run the model ourselves—we only graded the outputs provided by OpenAI and don’t have access to their code to run the model. Second, ChatGPT agent has access to tools not available to other models we've assessed—most notably browser tools, which may have helped on questions related to recent research papers. Finally, the evaluation allowed up to 128K tokens per question, compared to our standard 100K; this difference is unlikely to have significantly affected results.

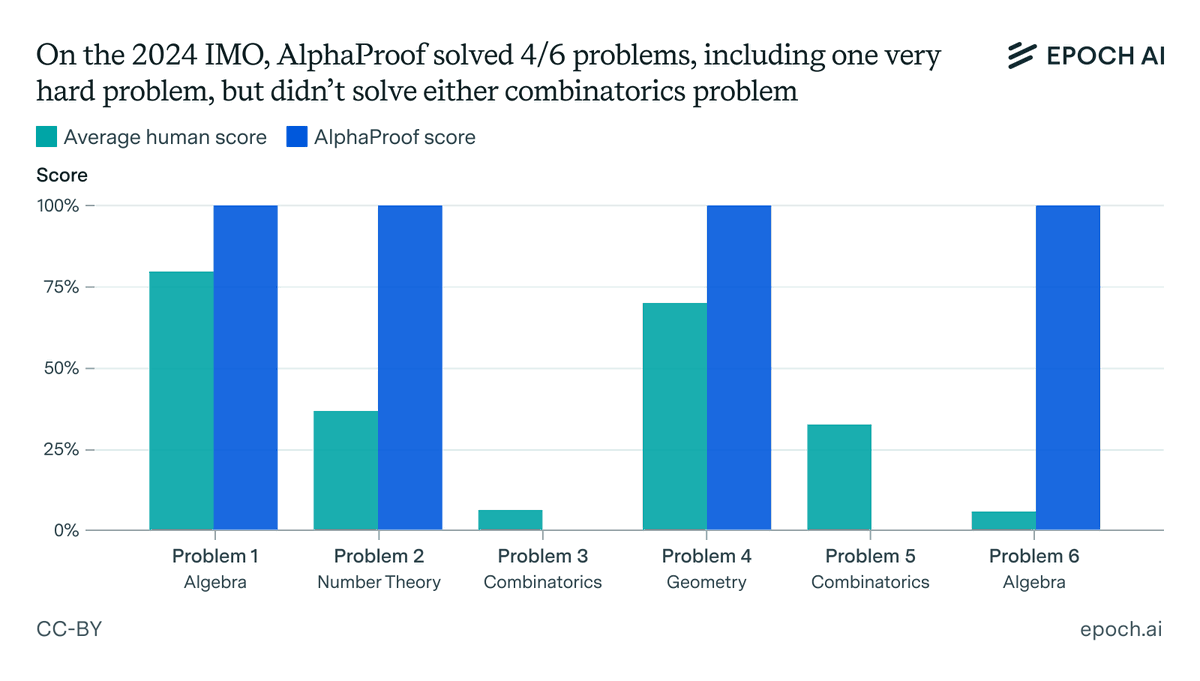

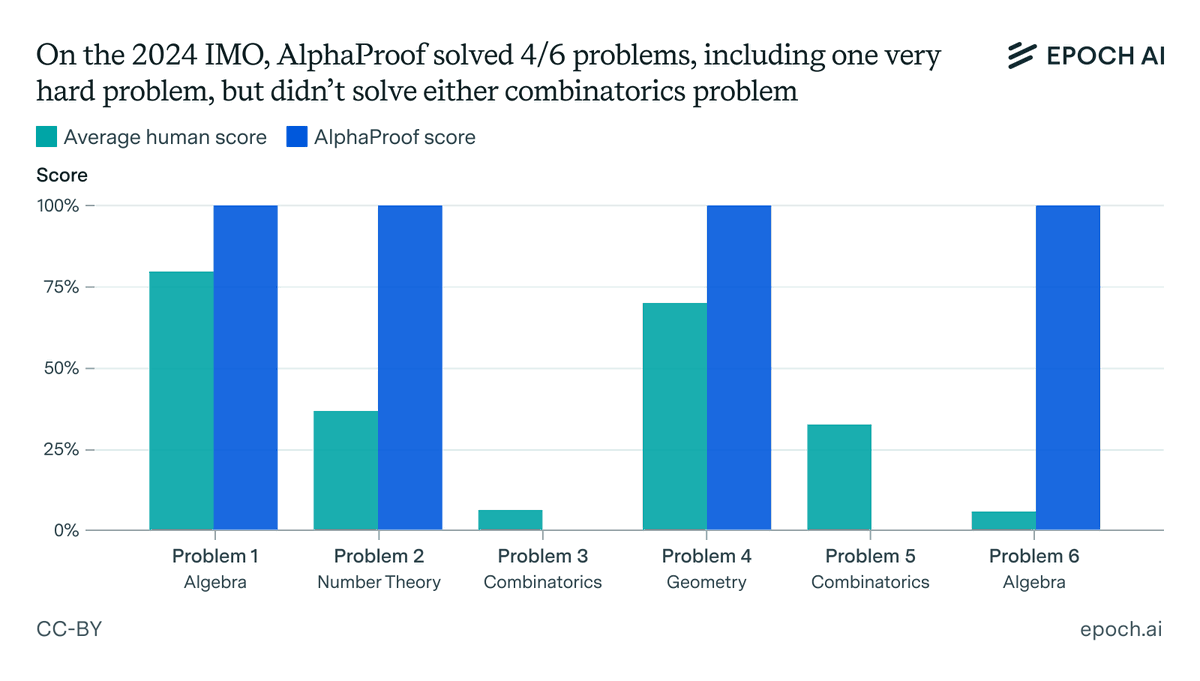

@GregHBurnham It will be tempting to focus on whether an AI system gets a gold medal. Formal proof systems like Google’s AlphaProof are quite close to this, and even general-purpose LLMs have a fighting chance. But that's not the outcome to pay the most attention to.
