Researcher at OpenAI working to make language models more trustworthy, secure, and private.

How to get URL link on X (Twitter) App

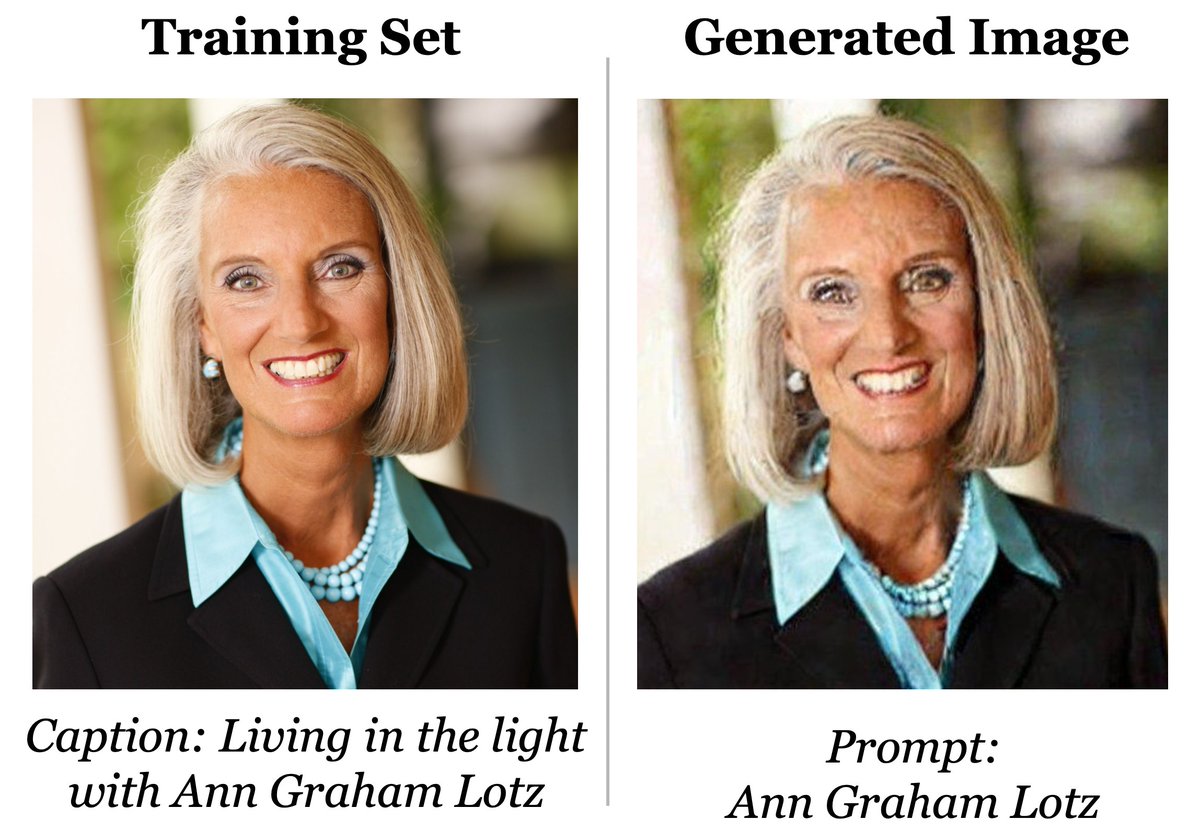

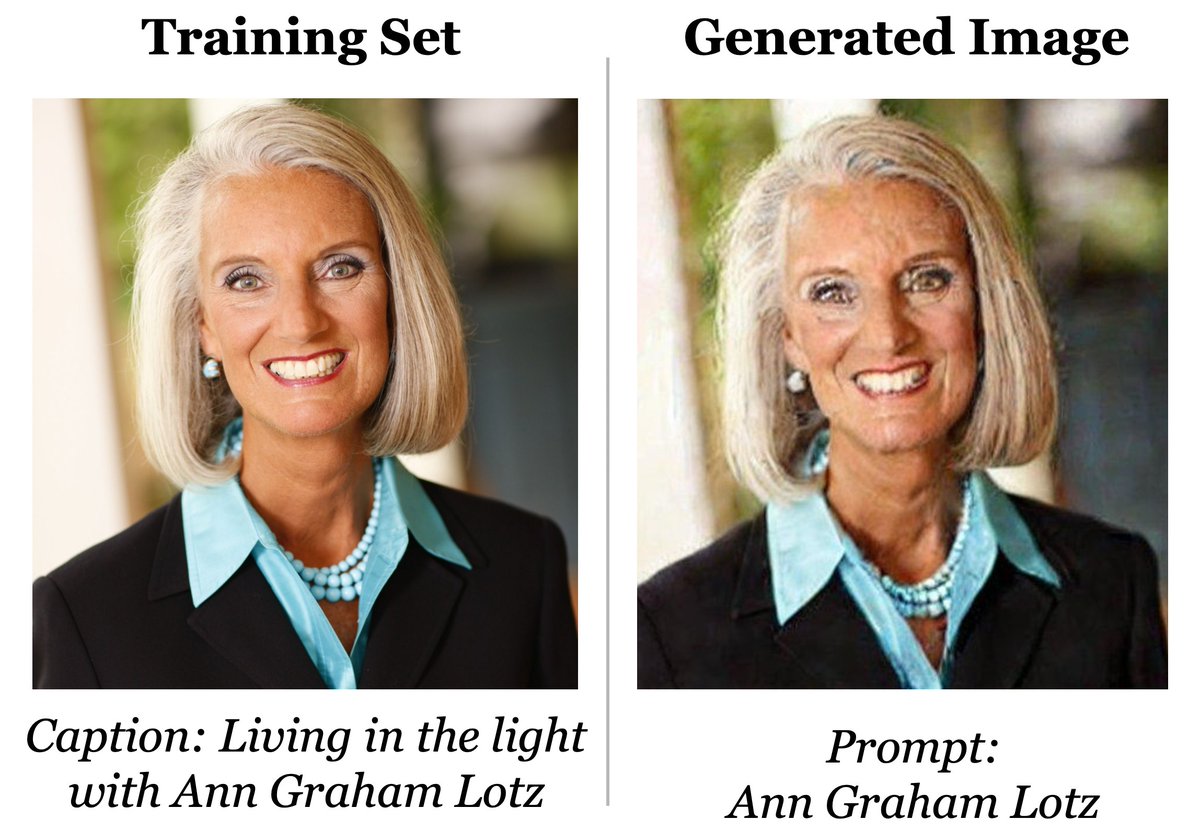

Diffusion models are trained to denoise images from the web. These images are often vulgar or malicious, and many are potentially risky to use (e.g., copyrighted).

Diffusion models are trained to denoise images from the web. These images are often vulgar or malicious, and many are potentially risky to use (e.g., copyrighted).

Model "stealing" can be a goal in itself. It allows adversaries to launch their own competitor service or to avoid long-term API costs.

Model "stealing" can be a goal in itself. It allows adversaries to launch their own competitor service or to avoid long-term API costs.

Using more compute often leads to higher accuracy. However, since large-scale training is expensive, the goal is typically to maximize accuracy under your budget.

Using more compute often leads to higher accuracy. However, since large-scale training is expensive, the goal is typically to maximize accuracy under your budget.

We begin by testing QA models on questions that evaluate numerical reasoning (e.g., sorting, comparing, or summing numbers), taken from the DROP dataset. Standard models excel on these types of questions! [2 / 6]

We begin by testing QA models on questions that evaluate numerical reasoning (e.g., sorting, comparing, or summing numbers), taken from the DROP dataset. Standard models excel on these types of questions! [2 / 6]