Writing a book on AI+economics+geopolitics for Nation Books.

Bylines: @NYTimes, @Nature, @BBC, @Business, @Guardian, @TIME, @Verge, + others.

https://twitter.com/30557408/status/1980975802570174831

3. You can make ANYONE trying to build superintelligence sound sinister!

Researchers have been putting AIs in scenarios where they face a choice: obey safety protocols, or act to preserve themselves — even if it means letting someone die.

For instance, Sam Altman loves to invoke this idea, esp. when he's trying to compare himself to Oppenheimer. He's also said that AI could drive humanity extinct (he's stopped saying this as of late, but I think he still believes it).

Bizarrely, no mainstream outlet had yet covered this possibility, so I wrote it up for Obsolete. A judge certified a class action representing up to 7 million copyright-protected books that Anthropic pirated.

Overall it's similar to SB 1047. The key diff? No liability provision, which is likely the thing industry hated the most. Some supporters of 1047 previously told me that its transparency provisions (e.g. requiring large AI cos to publish safety plans) were the most significant parts.

CNBC article: cnbc.com/2025/05/05/ope…

Candela, who had led the Preparedness team since July, announced on LinkedIn that he's now an "intern" on a healthcare team at OpenAI.

The main claim is that OpenAI got enormous recruiting benefits from touting its nonprofit control. These people would be able to speak to that better than anyone.

https://x.com/METR_Evals/status/1902384481111322929

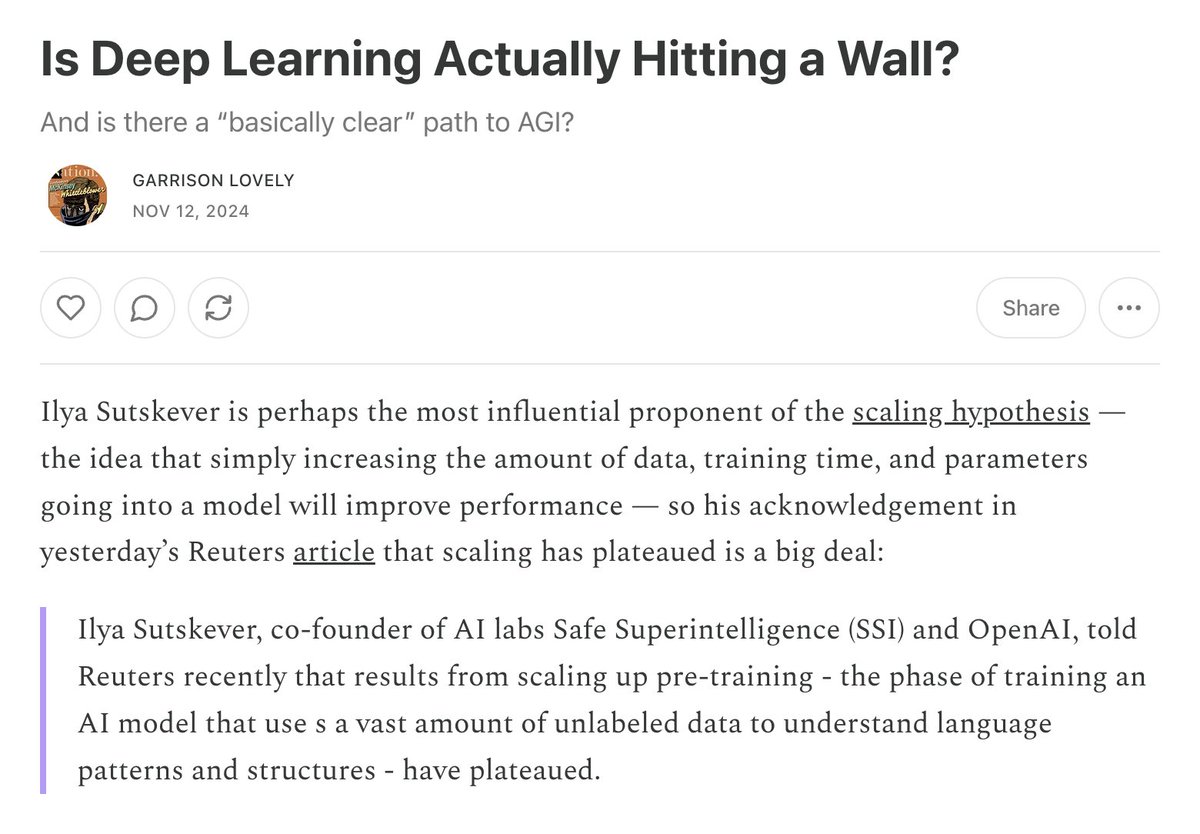

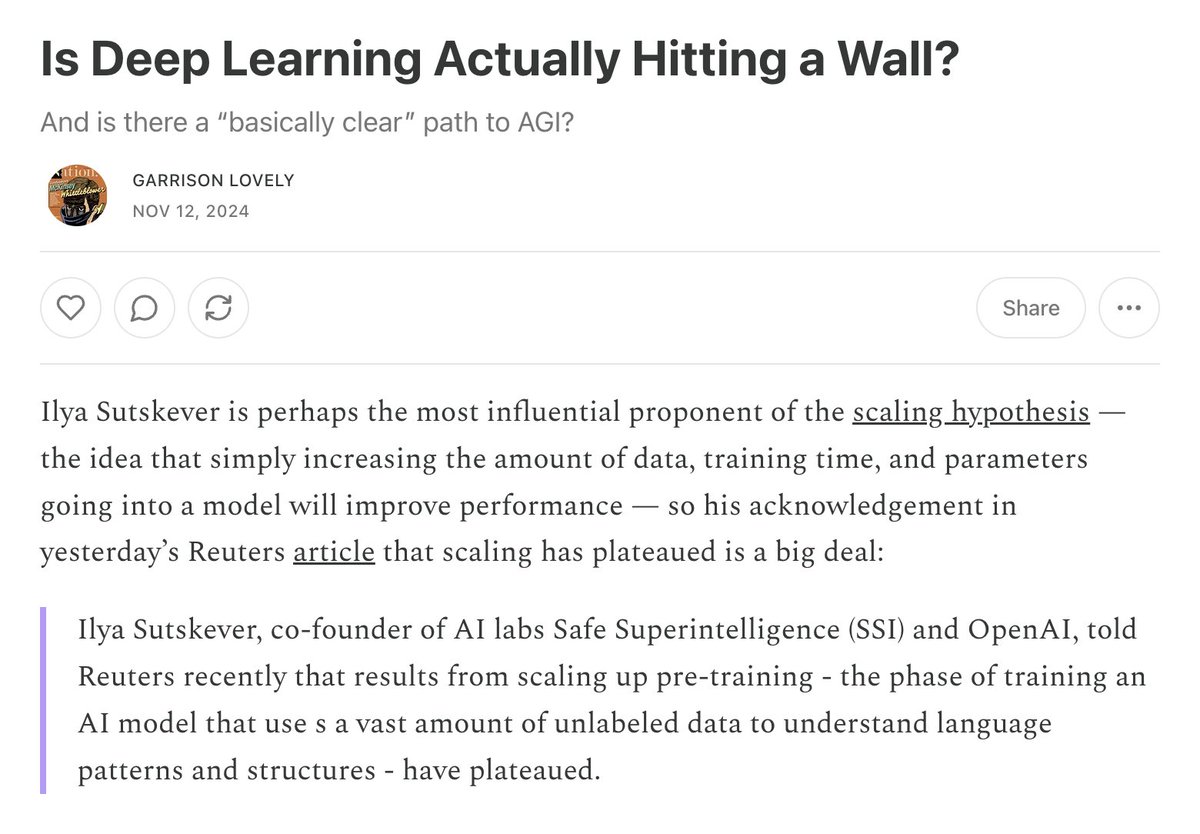

Top AI models crush basically any standardized test you throw at them, but haven't transformed the economy or begun making novel scientific discoveries. What gives?

Headlines imply this was a loss for Elon, but a closer reading of the 16-page ruling reveals something more subtle — and still a giant potential wrench in OpenAI's plans.

If OAI doesn't complete its for-profit transition within 2 years, investors in the October round can ask for their money back.

Here's the summary of results. The models that get it wrong are mostly older.

While everyday users still encounter hallucinating chatbots and the media declares an AI slowdown, behind the scenes, AI is rapidly advancing in technical domains.

I predicted something along these lines back in June.

Buried in his announcement was the news that his AGI readiness team was being disbanded and reabsorbed by others (at least OpenAI's third such case since May).

only cover people reporting violations of the law, so AI development can be risky without being illegal.

piece, as a hypothetical relayed to me by someone who used to work at OpenAI, but then it turns out it actually already happened, according to this reporting. Bc all of this is governed by voluntary commitments, OpenAI didn't violate any law...

Saunders, like many others at the top AI companies, thinks artificial general intelligence (AGI) could come in “as little as three years.” He cites OpenAI's new o1 model, which has surpassed human experts in some challenging technical benchmarks for the first time...

Many of these critics genuinely believe what they’re saying, bc so many of these lies are downstream of official-seeming documents signed by lawyers and op-eds by ‘serious people’ in big outlets. Their most common origin? Andreessen Horowitz (a16z)...

The bill is with Newsom, who has until Sept 30 to decide its fate.
