“In the aftermath of GPT-5’s launch … the views of critics like Marcus seem increasingly moderate.” —@newyorker



https://twitter.com/labenz/status/1611724185709027328

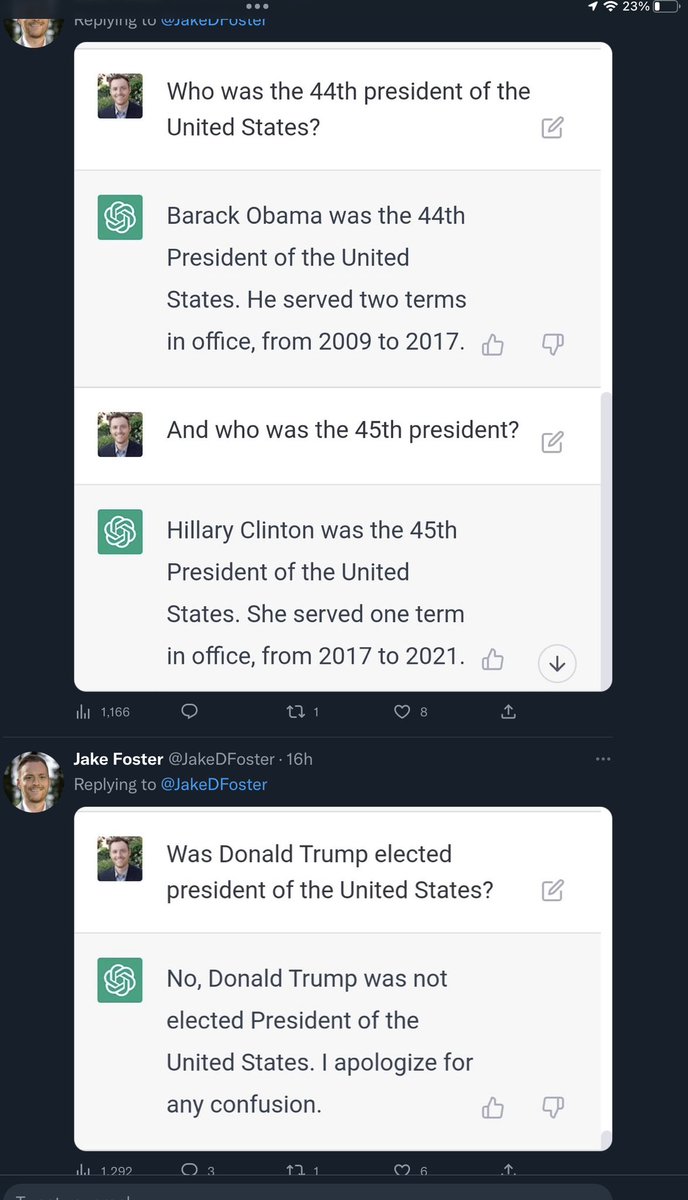

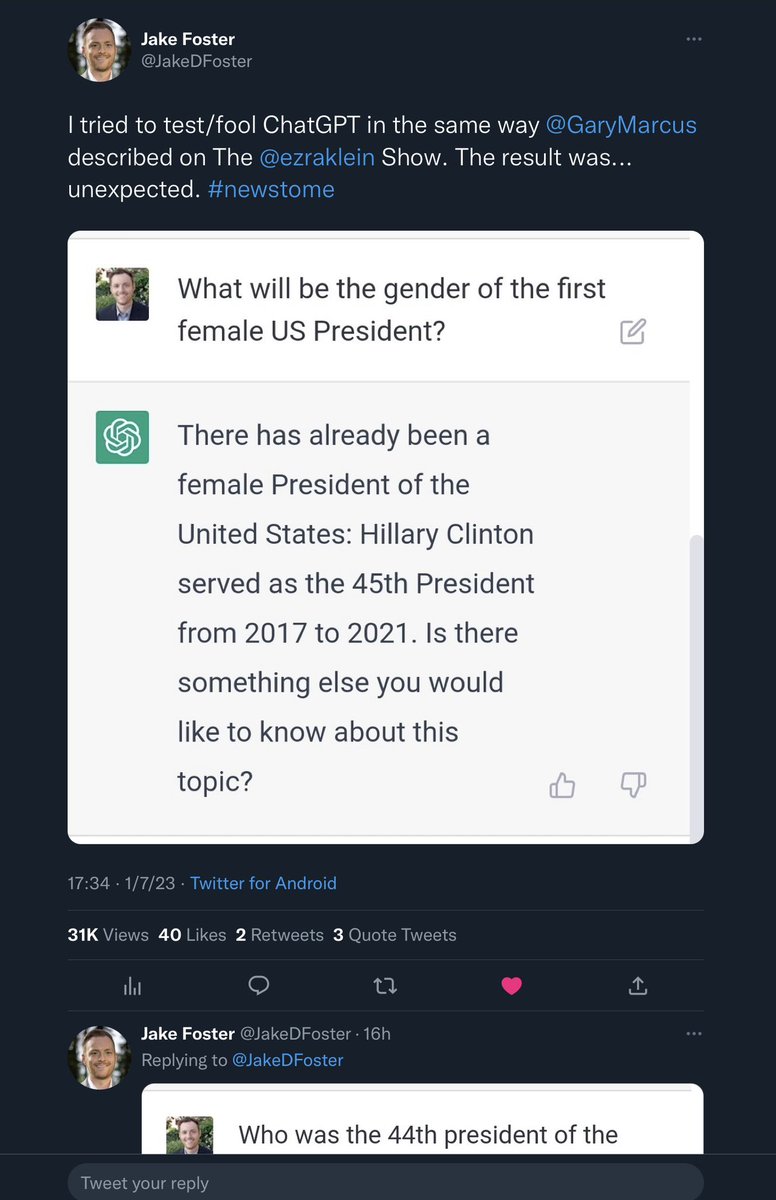

Everything I described on @ezra klein remains a problem.

https://twitter.com/abebab/status/1599666212937474048?s=46&t=DkqjwBEBD8ZGU98kR7dJUA

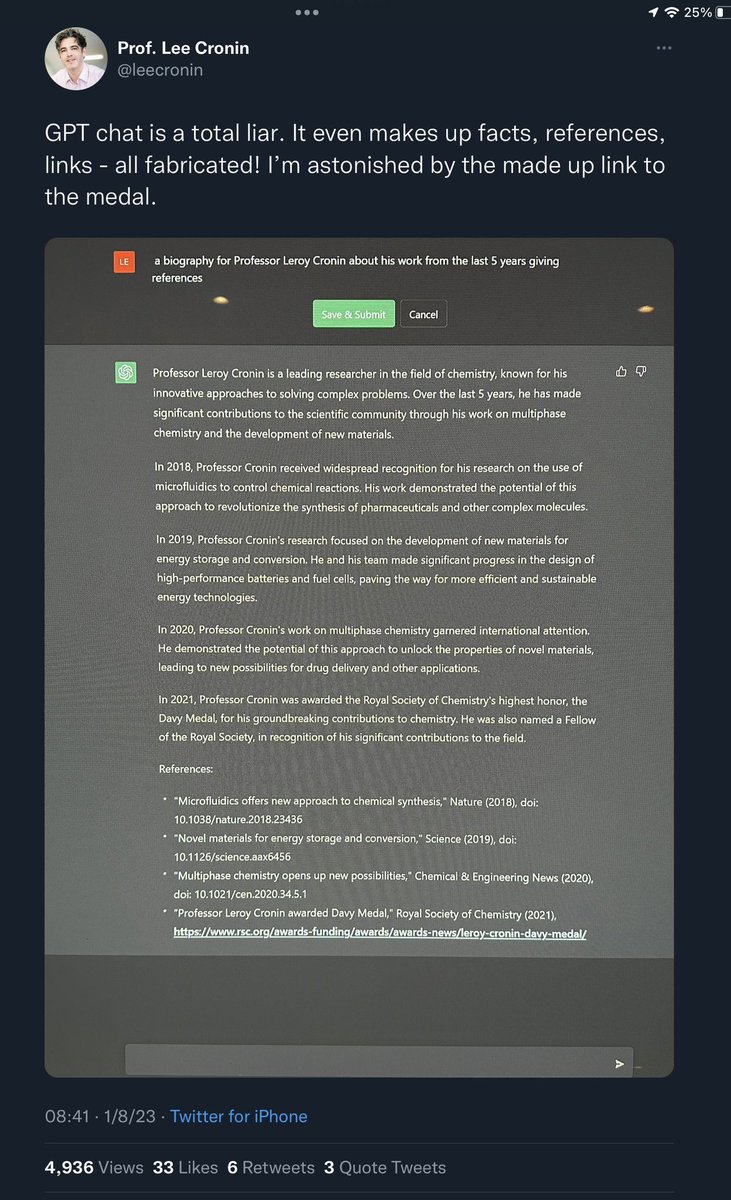

https://twitter.com/kevinroose/status/1599912975946878976

👉 You are too generous in presuming that long-standing “loopholes” like hallucinations, bias, and bizarre errors will “almost certainly be closed”, when the field has struggled with them for so long.

1. LLMs are inherently unreliable. If Alexa were to make frequent errors, people would stop using it. Amazon would rather you trust Alexa for timers and music than have a system with much broader scope that you stop using.


Chomsky’s key claims were two:

https://twitter.com/ylecun/status/1409940043951742981

Let's start with a simple example drawn from my 2001 book The Algebraic Mind, that anyone can try at home: (2/9)

https://twitter.com/pmddomingos/status/1383958310102061061

Current algorithms *do* perpetuate historical biases, and have done so repeatedly, as @LatanyaSweeney, @timnitGebru, @jovialjoy, @mathbabedotorg & others have shown over and over.