How to get URL link on X (Twitter) App

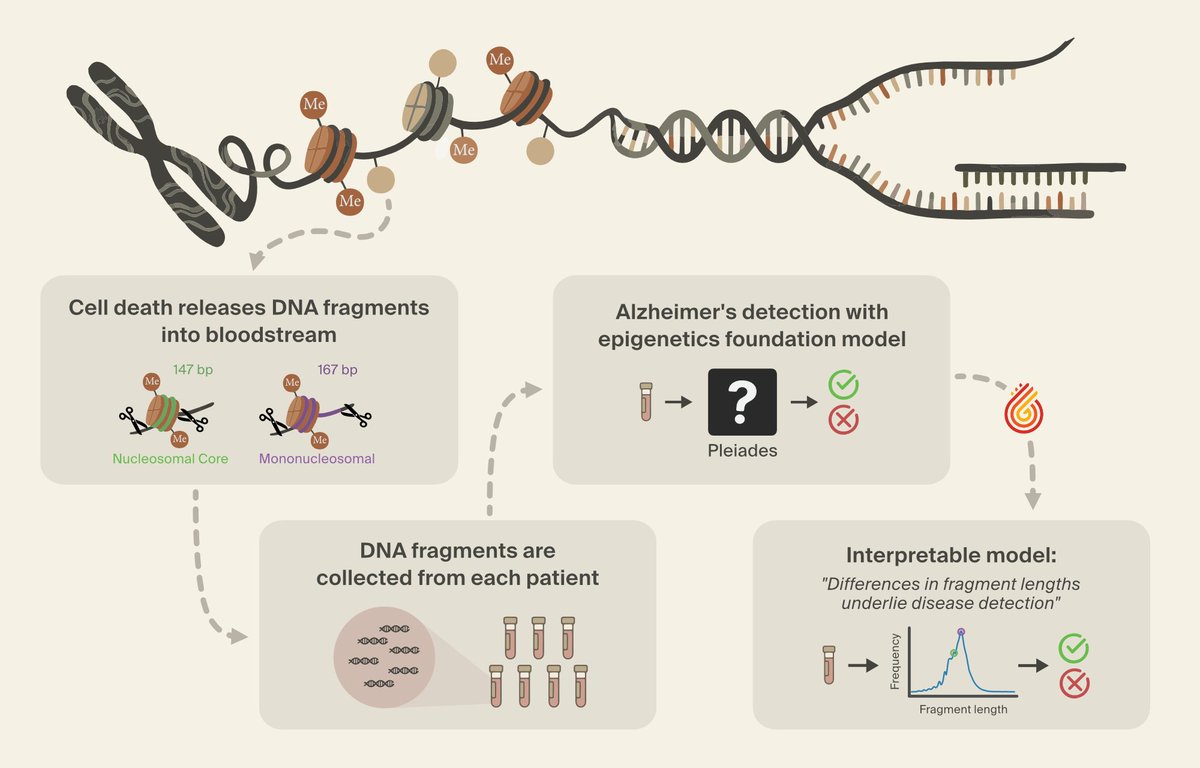

Bio foundation models (e.g. AlphaFold) can achieve superhuman performance, so they must contain novel scientific knowledge. @PrimaMente's Pleiades epigenetics model is one such case - it's SOTA on early Alzheimer's detection.

The paper formalizes a Bayesian framework for model control: altering a model's "beliefs" over which persona or data source it's emulating. https://twitter.com/67502027/status/1988314933248024801

The method is like PCA, but for loss curvature instead of variance: it decomposes weight matrices into components ordered by curvature, and removes the long tail of low-curvature ones. https://twitter.com/1547342006128586752/status/1986493472988356920
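
To make the PCA analogy concrete, here is a toy sketch, not the linked work's actual procedure. It assumes a flattened weight vector `w` and a symmetric curvature matrix `H` (a stand-in for a Hessian or Fisher approximation); the function name `prune_low_curvature` and the cutoff `k` are hypothetical. Where PCA would use eigenvectors of a data covariance matrix, this uses eigenvectors of `H`, ordered by curvature, and drops the low-curvature tail.

```python
import numpy as np

def prune_low_curvature(w, H, k):
    """Keep only the k highest-curvature components of w (toy illustration).

    w: flattened weights, shape (d,)
    H: symmetric curvature matrix, shape (d, d) (assumed given)
    k: number of high-curvature directions to keep (hypothetical knob)
    """
    # eigh returns eigenvalues in ascending order for symmetric matrices
    eigvals, eigvecs = np.linalg.eigh(H)
    order = np.argsort(eigvals)[::-1]      # re-sort: highest curvature first
    eigvecs = eigvecs[:, order]
    coeffs = eigvecs.T @ w                 # coordinates of w in the curvature basis
    coeffs[k:] = 0.0                       # drop the long tail of low-curvature directions
    return eigvecs @ coeffs                # map back to weight space

# Usage: curvature is high along the first two axes and low along the third
H = np.diag([5.0, 1.0, 0.1])
w = np.array([1.0, 2.0, 3.0])
w_pruned = prune_low_curvature(w, H, k=2)
```

In this toy case the curvature basis coincides with the coordinate axes, so pruning simply zeroes the third weight; with a non-diagonal `H` the removed directions would be mixtures of weights.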

PII detection in production AI systems requires methods which are very lightweight, have high recall, and perform well after training on only synthetic data (can't train on customer PII!)

(2/7) Most interp methods study activations. But we want causal, mechanistic stories: "Activations tell you a lot about the dataset, but not as much about the computations themselves. … they’re shadows cast by the computations."

(2/) Reasoning models like DeepSeek R1, OpenAI’s o3, and Anthropic’s Claude 3.7 are changing how we use AI, providing more reliable and coherent responses for complex problems. But understanding their internal mechanisms remains challenging.

(2/) Today, Arc announced Evo 2, a next-generation biological model that processes million-base-pair sequences at nucleotide resolution. Trained across all life forms, it predicts and generates complex biological sequences. You can preview our interpretability work in the Evo 2 preprint and our blog post.
