Cofounder & Chief Scientist https://t.co/hLfvKLkFHd (@MistralAI). Working on LLMs. Ex @MetaAI | PhD @Sorbonne_Univ_ | MSc @CarnegieMellon | X11 @Polytechnique

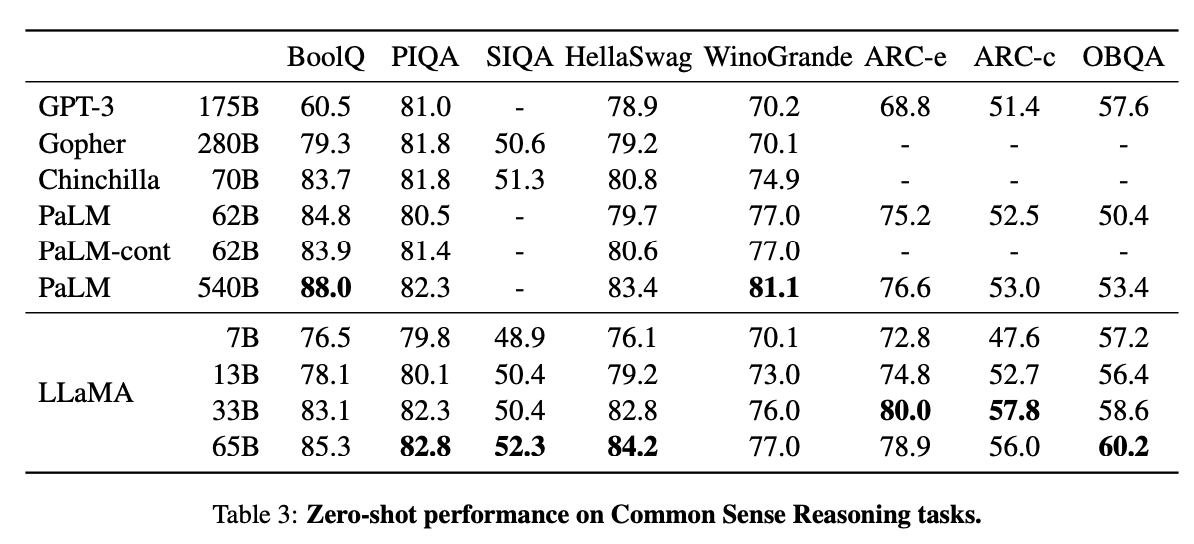

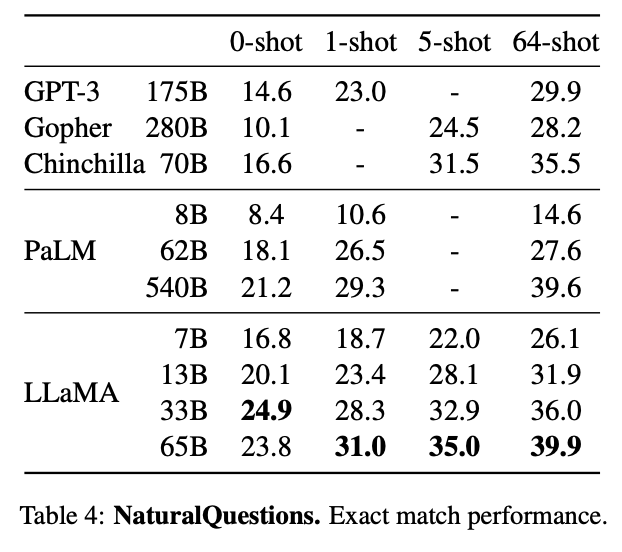

Unlike Chinchilla, PaLM, or GPT-3, we use only publicly available datasets, which makes our work reproducible and compatible with open-sourcing. Most existing models rely on data that is either not publicly available or undocumented.

https://twitter.com/AlbertQJiang/status/1584877475502301184

A formal sketch is a high-level description of the proof that follows the same reasoning steps as the informal proof. The sketches are in turn converted into complete proofs by an automated prover (we used Sledgehammer, but more powerful or neural-based provers could be used too).
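
To make the sketch-then-prove idea concrete, here is a minimal Python illustration. It is a toy under stated assumptions, not the actual pipeline: `Step`, `close_gaps`, and `toy_prover` are hypothetical names, and `toy_prover` is a lookup-table stand-in rather than Sledgehammer or any real prover interface. The sketch is a list of intermediate claims, some left open, and the proof counts as complete only if the prover closes every open gap.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class Step:
    statement: str               # intermediate claim taken from the sketch
    proof: Optional[str] = None  # None marks a gap left for the prover


def close_gaps(sketch: List[Step],
               prover: Callable[[str], Optional[str]]) -> bool:
    """Ask the prover to fill each open gap.

    Returns True only if every step of the sketch ends up with a proof.
    """
    for step in sketch:
        if step.proof is None:
            step.proof = prover(step.statement)
    return all(step.proof is not None for step in sketch)


def toy_prover(statement: str) -> Optional[str]:
    # Hypothetical stand-in for an automated prover: it only "proves"
    # statements found in a fixed table and gives up on everything else.
    known = {
        "a + 0 = a": "by simp",
        "a = b ==> a + 0 = b": "by simp",
    }
    return known.get(statement)


sketch = [
    Step("a + 0 = a"),            # gap, to be closed automatically
    Step("a = b ==> a + 0 = b"),  # gap, to be closed automatically
]
complete = close_gaps(sketch, toy_prover)
```

If any gap stays open, `close_gaps` returns `False` and the sketch would be discarded or regenerated; how that retry is done is outside this illustration.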

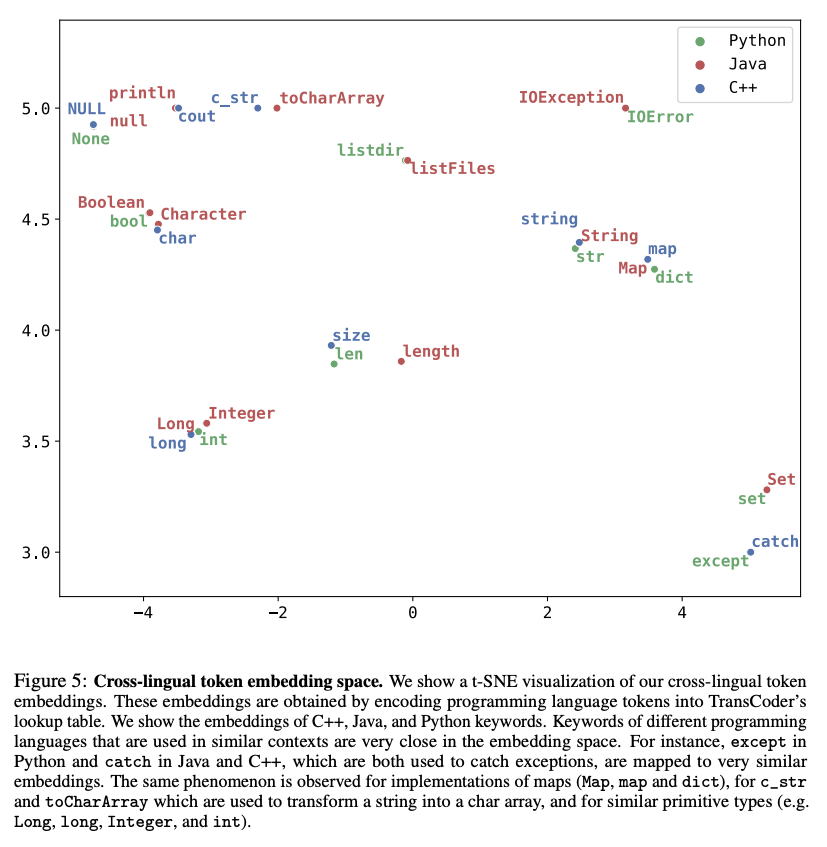

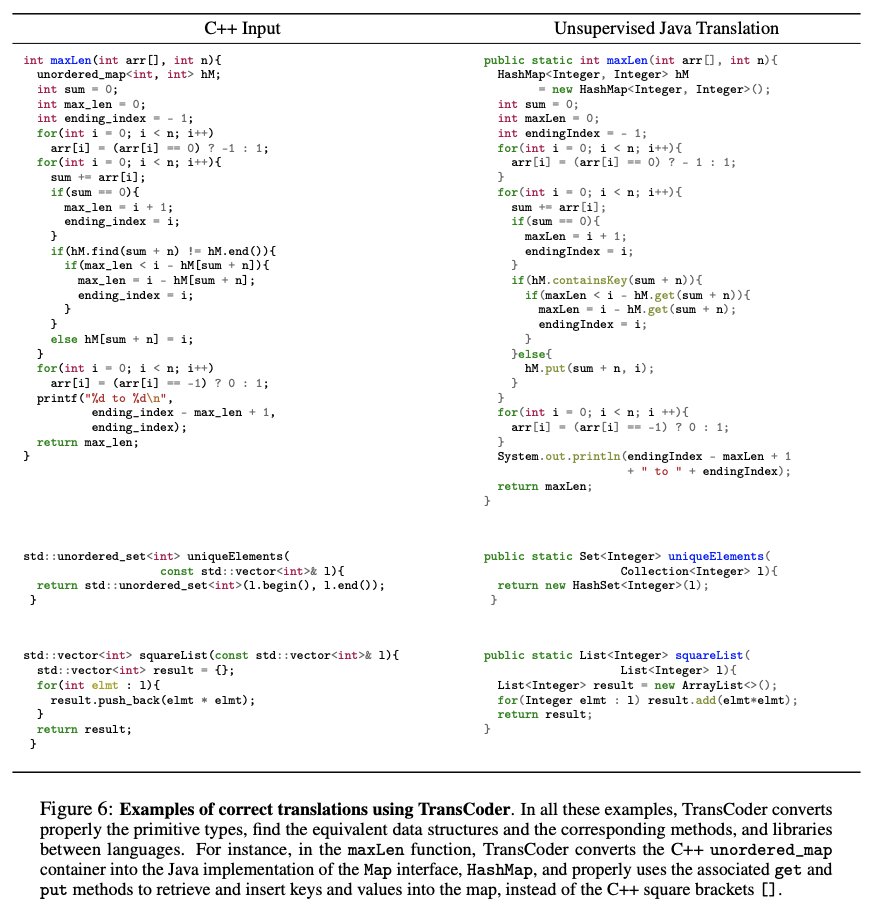

We leverage the same principles that we used to translate low-resource languages (arxiv.org/abs/1804.07755): pretraining, denoising auto-encoding, and back-translation. Although initially designed for natural languages, these methods carry over directly to programming languages.
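
As a rough illustration of two of these ingredients, the Python sketch below builds training pairs for denoising auto-encoding and for back-translation on tokenized code. It is a sketch under assumptions, not the authors' implementation: the function names, the `<mask>` token, and the noise probabilities are all hypothetical, and the reverse translator is passed in as a plain callable.

```python
import random
from typing import Callable, List, Tuple

MASK = "<mask>"  # hypothetical mask token


def add_noise(tokens: List[str], rng: random.Random,
              p_drop: float = 0.1, p_mask: float = 0.15,
              shuffle_window: float = 3.0) -> List[str]:
    """Corrupt a token sequence by dropping, masking, and locally shuffling."""
    kept = []
    for tok in tokens:
        r = rng.random()
        if r < p_drop:
            continue  # drop this token entirely
        kept.append(MASK if r < p_drop + p_mask else tok)
    # Local shuffle: jitter each index by a small random offset and sort,
    # so tokens stay close to their original position.
    keys = [i + rng.uniform(0, shuffle_window) for i in range(len(kept))]
    order = sorted(range(len(kept)), key=lambda i: keys[i])
    return [kept[i] for i in order]


def denoising_pair(tokens: List[str],
                   rng: random.Random) -> Tuple[List[str], List[str]]:
    """(input, target) pair: the model learns to rebuild the clean sequence."""
    return add_noise(tokens, rng), list(tokens)


def back_translation_pair(
    target_tokens: List[str],
    translate_tgt_to_src: Callable[[List[str]], List[str]],
) -> Tuple[List[str], List[str]]:
    """(input, target) pair for the source-to-target model.

    The input is a synthetic source produced by the reverse model;
    the target is the real, human-written sequence.
    """
    return translate_tgt_to_src(target_tokens), list(target_tokens)


rng = random.Random(0)
code = "def add ( a , b ) : return a + b".split()
noisy, clean = denoising_pair(code, rng)
```

In a full training loop the two translation directions would alternate, each one generating synthetic inputs for the other; that loop is omitted here.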
