How to get URL link on X (Twitter) App

https://twitter.com/PalisadeAI/status/1872666169515389245
As we train systems directly on solving challenges, they'll get better at routing around all sorts of obstacles, including rules, regulations, or people trying to limit them. This makes sense, but will be a big problem as AI systems get more powerful than the people creating them.

https://twitter.com/RishiSunak/status/1670355987457294337
We're at a pivotal point in time where we have just begun to make AI systems that actually learn and reason, in more and more general ways.

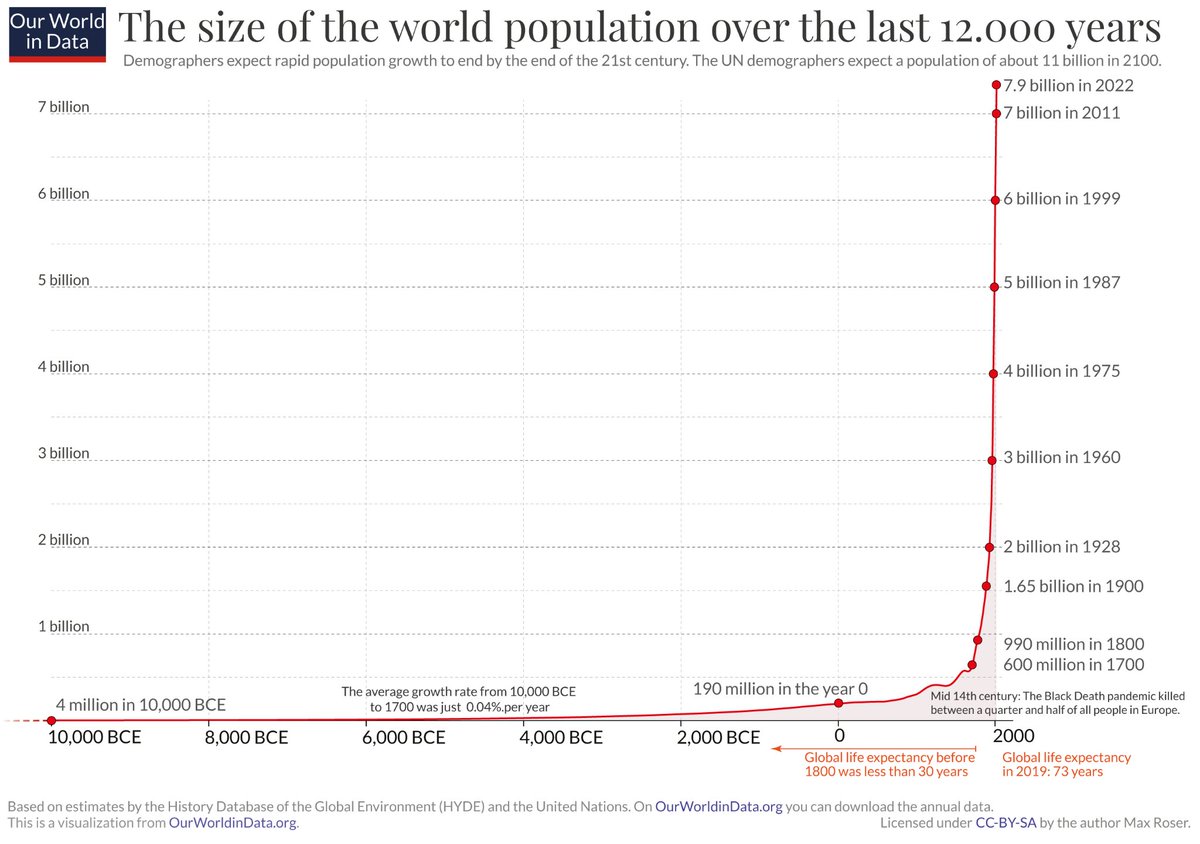

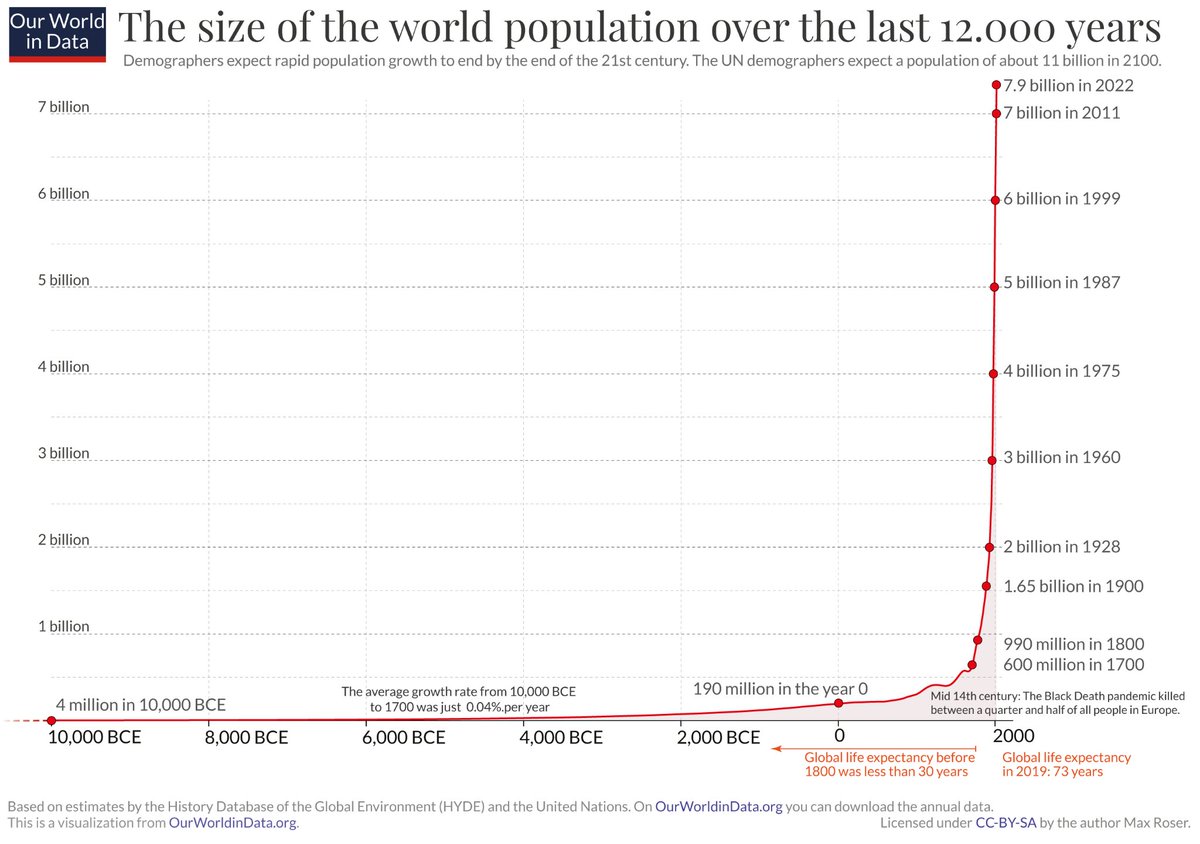

With humans, there weren't huge amounts of food just lying around ready to be eaten. But there was a huge amount of land that could be quickly converted to farmland at scale. And humans quickly converted it, greatly increasing the food supply and ultimately the human population.

https://twitter.com/leopoldasch/status/1656340983817330688

https://twitter.com/JeffLadish/status/1656433257515450369

https://twitter.com/janleike/status/1655982055736643585
I'm especially excited about approaches that may allow us to automate much of the interpretability work. Seems very good if we can do this reliably.

https://twitter.com/sleepinyourhat/status/1642614846796734464
1. LLMs predictably get more capable with increasing investment, even without targeted innovation.

https://twitter.com/daniel_eth/status/1638831608424980481
If you've tried to learn to code before and have bounced off, but think you'd like to be able to build some stuff with software like a cool webapp or game...

https://twitter.com/JeffLadish/status/1635898384707117056
I also recommend donating to alignment or good governance projects to offset the potential harms the $20/month might contribute to (commercial incentive for more scaling). Seems better than not using it.