How to get a URL link in the X (Twitter) app

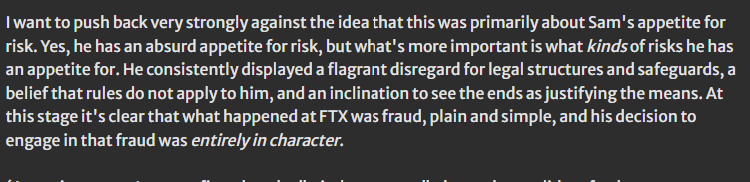

https://twitter.com/363005534/status/1591218022362284034 (1)

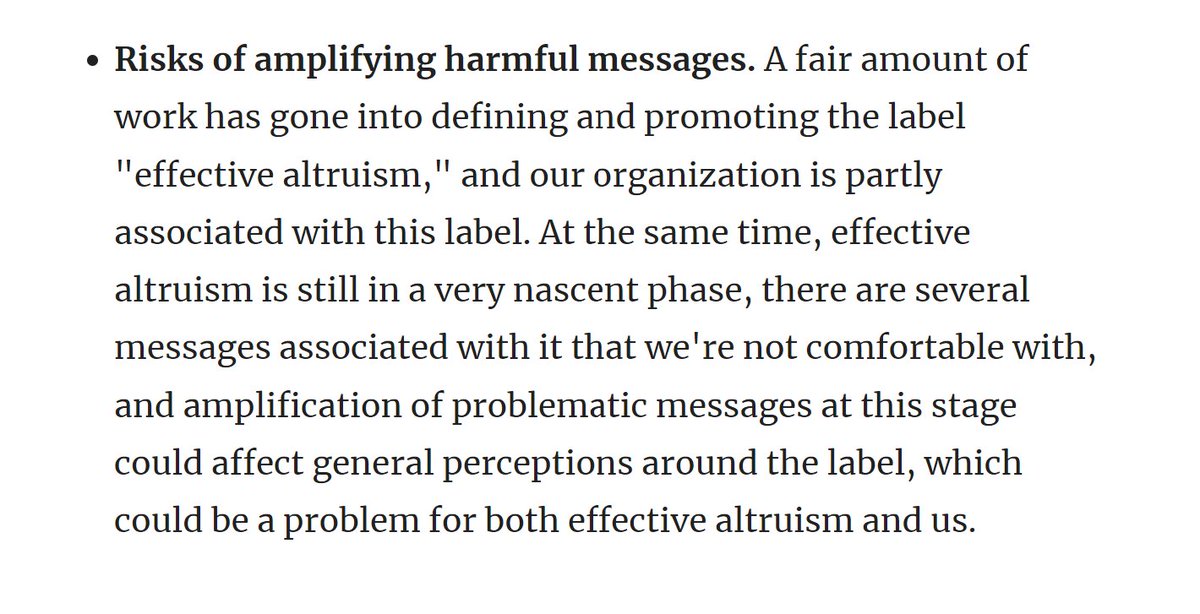

https://twitter.com/192737798/status/1583401233188278274

The story begins in 2018 with a post on the EA Forum "Leverage Research: Reviewing the basic facts" written by "Throwaway" and commented on by two other anonymous accounts "Anonymous" and "Throwaway 2" purportedly to share some "basic facts" about a competing organization.

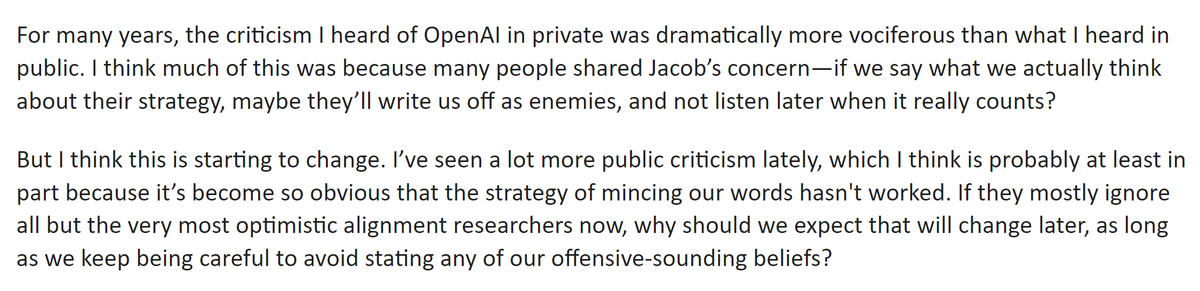

https://twitter.com/956296561289453568/status/1565066646124765184

I think the entrance of Open Phil as a potential funder circa 2015 significantly changed the social vibe in the EA community.

https://twitter.com/KerryLVaughan/status/1545060355432337409

1) I missed that for most OG EAs, the creation of the EA community was actually a source of LESS moral demandingness rather than more.

https://twitter.com/ben_j_todd/status/1545765343532040192

And like telling people to give 10% to good charities is great 👍