Clinical Trial Designer. Director of Modeling and Simulation, Berry Consultants. Statistics PhD Carnegie Mellon.

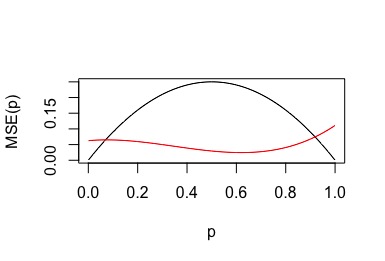

https://twitter.com/al_in_sweden/status/1432230677886427140

(2/n) Ultra simple trial: a coin with unknown p = Prob(heads).
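For the coin example, here is a minimal sketch of the Bayesian update. It assumes a uniform Beta(1, 1) prior on p. The prior, the hypothetical 7-heads/3-tails data, and the helper names `posterior_params` and `beta_cdf_int` are illustrative assumptions, not from the thread. Observed heads and tails turn the Beta prior into a Beta posterior, and from that posterior you can read off the mean and Prob(p > 0.5).

```python
from math import comb


def posterior_params(heads, tails, a0=1, b0=1):
    """Conjugate update: a Beta(a0, b0) prior plus coin flips gives Beta(a0 + heads, b0 + tails)."""
    return a0 + heads, b0 + tails


def beta_cdf_int(x, a, b):
    """CDF of Beta(a, b) at x, valid for positive integer a and b.

    Uses the identity I_x(a, b) = P(Binomial(a + b - 1, x) >= a).
    """
    n = a + b - 1
    return sum(comb(n, k) * x**k * (1 - x) ** (n - k) for k in range(a, n + 1))


if __name__ == "__main__":
    # Hypothetical data: 7 heads and 3 tails.
    a, b = posterior_params(heads=7, tails=3)
    print("posterior Beta parameters:", (a, b))
    print("posterior mean:", a / (a + b))
    print("Prob(p > 0.5 | data):", 1 - beta_cdf_int(0.5, a, b))
```

The integer-parameter identity keeps the sketch dependency-free. A general Beta CDF would require a library such as SciPy.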

https://twitter.com/lakens/status/1429313825413779456

(2/n) I’m just going to focus on the question “how do you value an experiment before conducting it?” This is a practical problem for a funding agency evaluating grants, or for a regulatory agency deciding in advance whether a study is likely to generate sufficient information for approval.
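One common way to put a number on an experiment before it runs is its prior-predictive probability of success, sometimes called “assurance”. You average the chance that the trial meets its success rule over a prior for the true rate. Below is a minimal sketch for a single-arm trial with a binary outcome. It assumes a Beta(a, b) prior and a hypothetical success rule of at least r responders out of n. The rule, the priors, and the function names are illustrative, and this is only one of several possible ways to value an experiment.

```python
from math import comb, exp, lgamma


def log_beta(a, b):
    """Log of the Beta function B(a, b)."""
    return lgamma(a) + lgamma(b) - lgamma(a + b)


def beta_binomial_pmf(k, n, a, b):
    """Prior-predictive probability of k responders out of n when p ~ Beta(a, b)."""
    return comb(n, k) * exp(log_beta(k + a, n - k + b) - log_beta(a, b))


def probability_of_success(n, r, a, b):
    """Prior-predictive probability of at least r responders (the assumed success rule)."""
    return sum(beta_binomial_pmf(k, n, a, b) for k in range(r, n + 1))


if __name__ == "__main__":
    # Hypothetical design: 60 patients, "success" if at least 30 respond.
    for a, b in [(1, 1), (5, 5), (12, 8)]:
        print(f"prior Beta({a}, {b}): P(success) = {probability_of_success(60, 30, a, b):.3f}")
```

More optimistic priors give the same design a higher expected payoff. That is one reason a funder or regulator might want the prior stated explicitly.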

https://twitter.com/WesPegden/status/1424930180196356099

(2/10) For safety events, required sample sizes are driven by the baseline rate of the event and the magnitude of the change you are trying to detect. Smaller baseline rates require bigger sample sizes, as do smaller effects.
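To see how the baseline rate and the effect size drive the numbers, here is a minimal sketch using the textbook normal-approximation sample size formula for comparing two proportions (two-sided alpha, fixed power). The formula choice, the 5% alpha and 80% power defaults, and the example rates are illustrative assumptions. Real safety designs may use exact or event-driven methods instead.

```python
from math import ceil, sqrt
from statistics import NormalDist


def n_per_arm(p_control, p_treated, alpha=0.05, power=0.80):
    """Approximate patients per arm to distinguish p_control from p_treated (two-sided test)."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    p_bar = (p_control + p_treated) / 2
    numerator = (
        z_alpha * sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * sqrt(p_control * (1 - p_control) + p_treated * (1 - p_treated))
    ) ** 2
    return ceil(numerator / (p_control - p_treated) ** 2)


if __name__ == "__main__":
    # Same relative effect (event rate doubles), different baseline rates.
    print("5% -> 10%:", n_per_arm(0.05, 0.10))
    print("1% -> 2%: ", n_per_arm(0.01, 0.02))
    # Same baseline, smaller effect.
    print("5% -> 7.5%:", n_per_arm(0.05, 0.075))
```

Comparing the three calls shows both claims in the tweet. A rarer baseline event, or a smaller change, each push the required sample size up.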

https://twitter.com/DrWoodcockFDA/status/1394306898753638402

(2/n) First and foremost, FDA is wonderfully encouraging of these designs! Lots of comments on accelerating drug development. It also notes the extra complexity and startup time, and emphasizes “early and often” discussion with FDA.

(2/n) A lot of good examples are basket trials in oncology.

https://twitter.com/ADAlthousePhD/status/1317087321112576003

(2/n) Bias feels backward to a Bayesian confronted with data. Bias is phrased as “suppose we knew the true rate was X; what would our average data look like?” We are in the reverse situation: we know the exact data, and we want to know the true rate.
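Here is a small sketch of the two directions. It assumes a binomial outcome, a Beta(a, b) prior (uniform by default), and the posterior mean as the estimate. The true rate of 0.30, the 6-of-20 data, and all function names are illustrative. `frequentist_bias` answers the forward question: fix the true rate and average over hypothetical datasets. The posterior answers the reverse question: fix the observed data and describe uncertainty about the rate.

```python
from math import comb


def binom_pmf(k, n, p):
    """Probability of k events in n patients when the true rate is p."""
    return comb(n, k) * p**k * (1 - p) ** (n - k)


def posterior_mean(k, n, a=1, b=1):
    """Mean of the Beta(a + k, b + n - k) posterior."""
    return (a + k) / (a + b + n)


def frequentist_bias(true_rate, n, a=1, b=1):
    """Forward question: if we knew the true rate, how far off is the estimate on average?"""
    expected = sum(binom_pmf(k, n, true_rate) * posterior_mean(k, n, a, b) for k in range(n + 1))
    return expected - true_rate


if __name__ == "__main__":
    # Forward: suppose the true rate were 0.30 with n = 20 (averages over all 21 possible datasets).
    print("bias of posterior mean:", frequentist_bias(0.30, 20))
    # Reverse: we actually observed 6 of 20, so the posterior is Beta(7, 15).
    print("posterior mean given the data:", posterior_mean(6, 20))
```

The forward calculation needs every dataset we might have seen. The reverse calculation uses only the one we did see.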

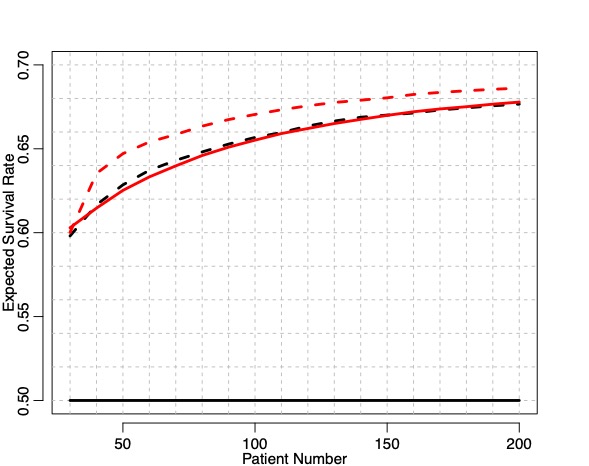

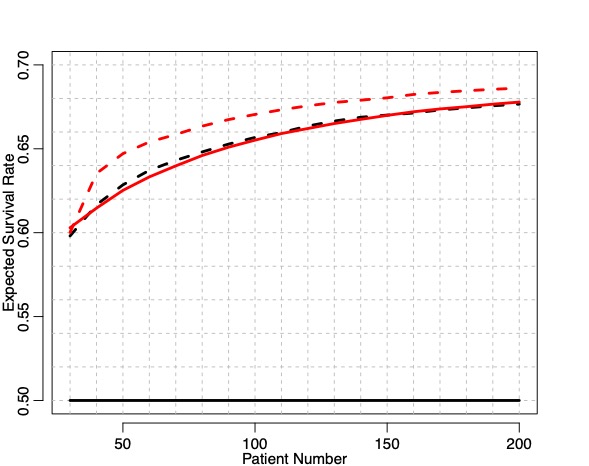

(2/n) Data is noisy: a good therapy can look bad early in a trial, and a null (dud) therapy can look good. A lot of statistical theory is dedicated to quantifying this range. When can we be “sure” the data is good enough that the drug isn’t a dud? When can we be “sure” it works?
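The exact binomial calculation below illustrates this using purely hypothetical numbers. A dud has a true 20% response rate and a good therapy has a true 40% rate, and each is judged against an observed-rate cutoff of 30%. The rates, the cutoff, and the function names are assumptions for illustration. With few patients, a dud fairly often looks good and a good therapy fairly often looks bad. Both probabilities shrink as patients accrue.

```python
from math import comb


def binom_pmf(k, n, p):
    """Probability of k responders in n patients when the true rate is p."""
    return comb(n, k) * p**k * (1 - p) ** (n - k)


def prob_rate_at_least(pct, n, p):
    """P(observed response rate >= pct% among n patients | true rate p)."""
    k_min = -(-pct * n // 100)  # ceil(pct * n / 100) using exact integer arithmetic
    return sum(binom_pmf(k, n, p) for k in range(k_min, n + 1))


def prob_rate_at_most(pct, n, p):
    """P(observed response rate <= pct% among n patients | true rate p)."""
    k_max = pct * n // 100  # floor(pct * n / 100)
    return sum(binom_pmf(k, n, p) for k in range(k_max + 1))


if __name__ == "__main__":
    for n in (10, 30, 100):
        dud_looks_good = prob_rate_at_least(30, n, 0.20)
        good_looks_bad = prob_rate_at_most(30, n, 0.40)
        print(f"n={n:3d}  P(dud looks good)={dud_looks_good:.3f}  P(good looks bad)={good_looks_bad:.3f}")
```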

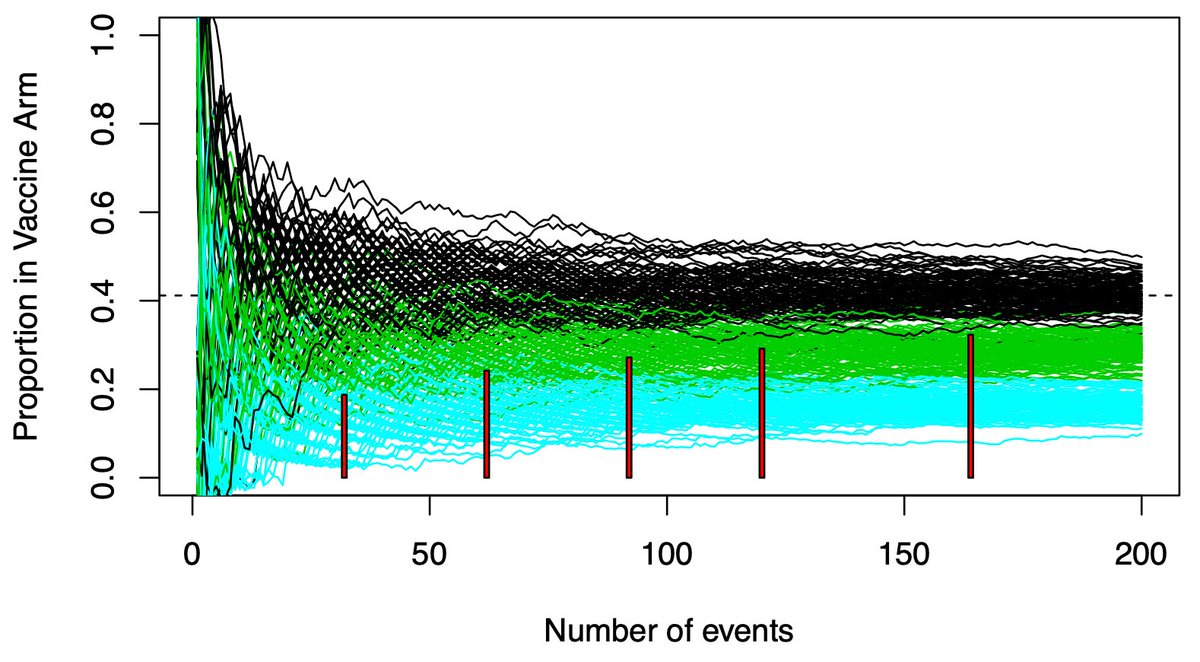

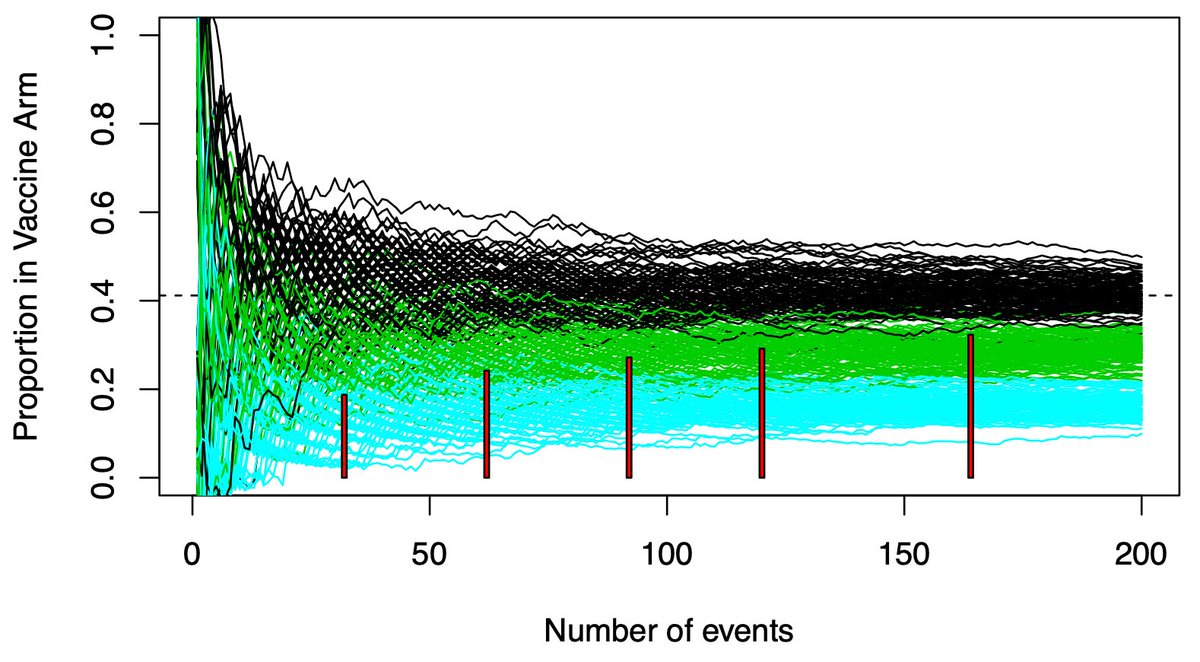

(2/12) Your timing matters. The information you could use to select an arm accumulates over time.

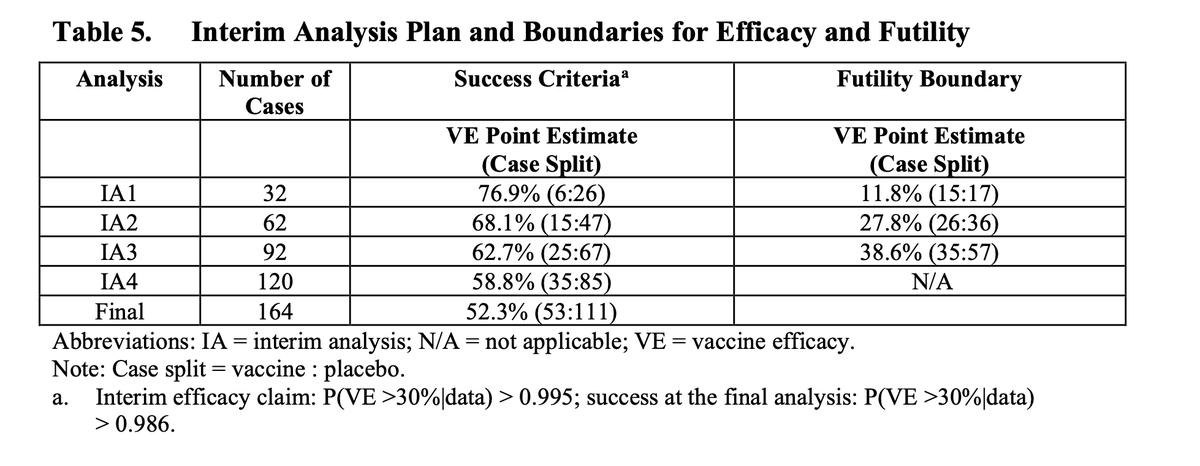

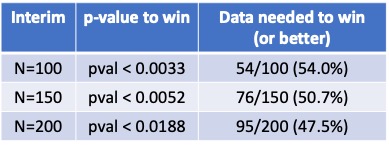

https://twitter.com/DrewQJoseph/status/1247245804861116416

(2/20) I don’t have the details of the interims. If someone has them, I’ll follow up on this specific trial. Without them, I’m going to discuss the general principles. The principles are easier to see in a single-arm trial, and they generalize completely to two arms.

2) Simple example: a dichotomous outcome. You have a novel therapy and resources for 60 patients. You also have a database of untreated patients showing a 40% response rate. How can you incorporate the database into the trial?
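One simple option is to treat the database’s 40% as a fixed benchmark, and the sketch below does this two ways. First, it finds the smallest number of responders out of 60 that an exact one-sided binomial test against 40% would call significant. Second, it computes the posterior probability that the new therapy beats 40% under a uniform prior. The one-sided alpha of 0.025, the uniform prior, and the function names are assumptions for illustration, not necessarily the approach the thread goes on to recommend. Treating the benchmark as fixed also ignores any uncertainty in the historical 40%.

```python
from math import comb


def binom_pmf(k, n, p):
    """Probability of k responders in n patients when the true rate is p."""
    return comb(n, k) * p**k * (1 - p) ** (n - k)


def upper_tail(k, n, p):
    """P(X >= k) for X ~ Binomial(n, p); zero when k > n."""
    return sum(binom_pmf(j, n, p) for j in range(k, n + 1))


def critical_responders(n, p0, alpha):
    """Smallest k with P(X >= k | p0) <= alpha: the exact one-sided success threshold."""
    for k in range(n + 1):
        if upper_tail(k, n, p0) <= alpha:
            return k
    return None  # no responder count reaches significance


def prob_better_than(k, n, p0, a=1, b=1):
    """Posterior P(p > p0) after k responders in n, with a Beta(a, b) prior (integer a, b).

    The posterior is Beta(a + k, b + n - k); its CDF at p0 equals
    P(Binomial(a + b + n - 1, p0) >= a + k).
    """
    return 1 - upper_tail(a + k, a + b + n - 1, p0)


if __name__ == "__main__":
    n, p0 = 60, 0.40
    k_crit = critical_responders(n, p0, alpha=0.025)
    print("responders needed out of 60:", k_crit)
    for k in (24, 30, k_crit):
        print(f"{k} responders: P(p > 0.40 | data) = {prob_better_than(k, n, p0):.3f}")
```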
