phd candidate @oiioxford @uniofoxford | research scientist @AISecurityInst | AI, social data science, persuasion with language models

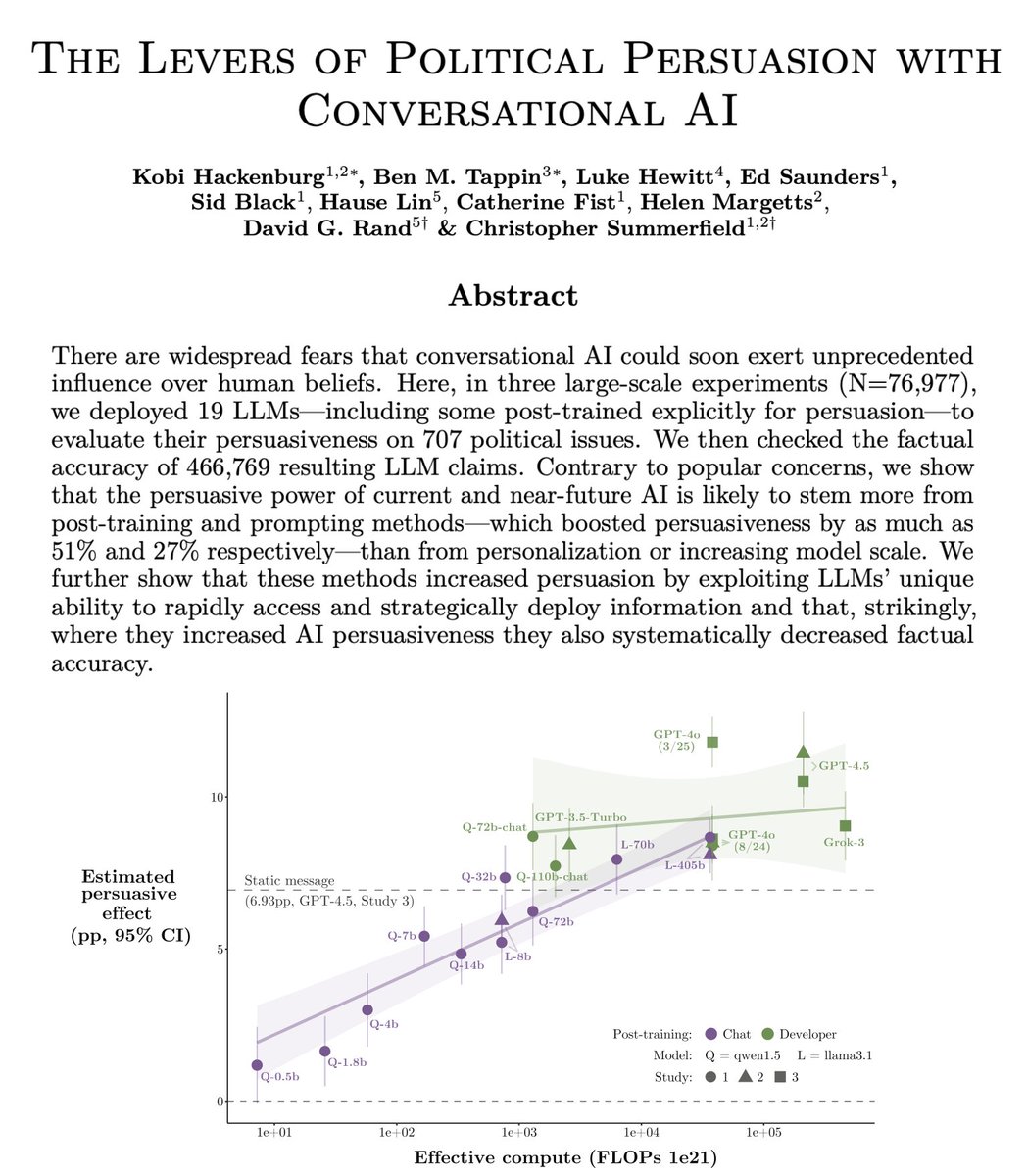

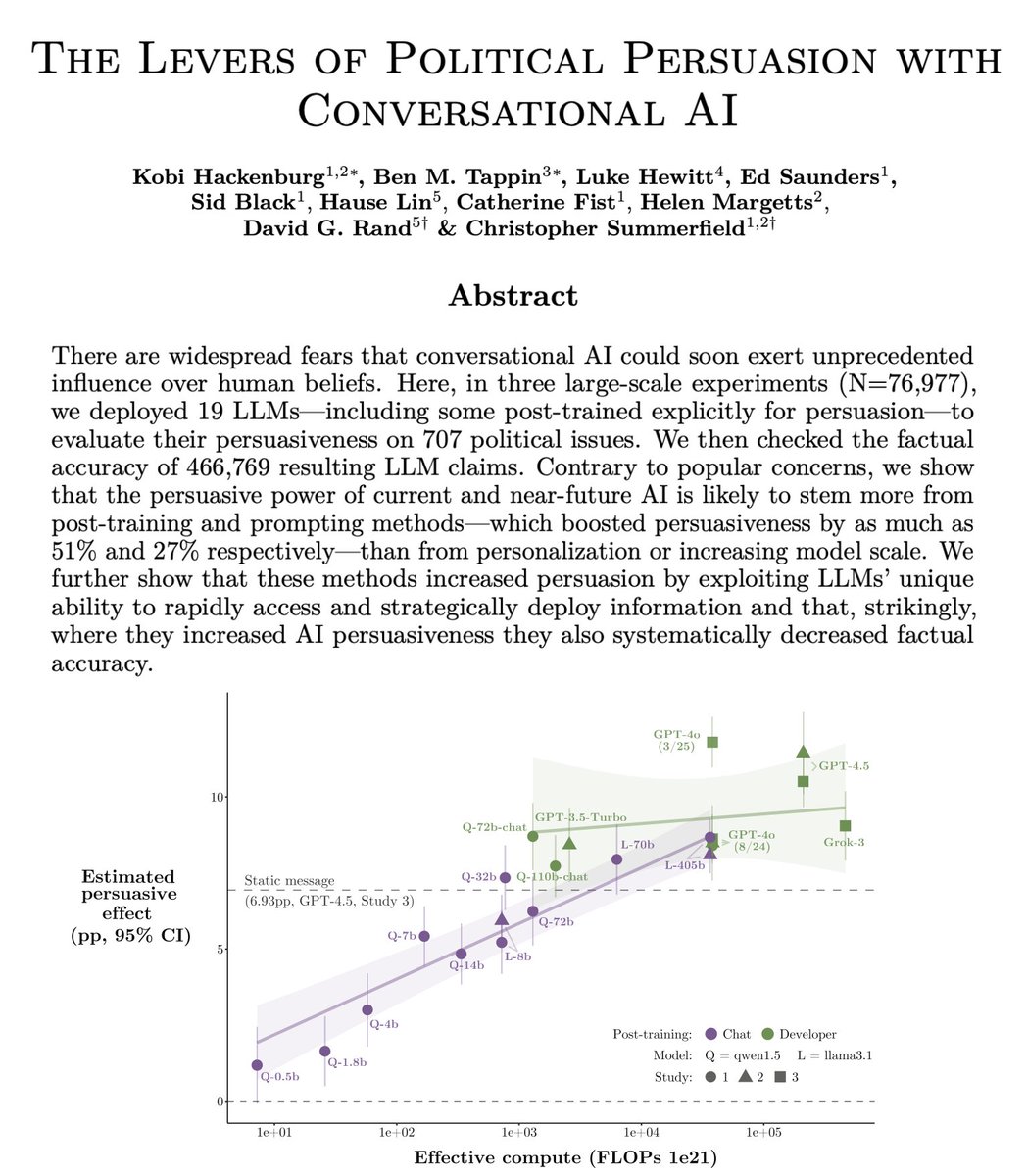

Findings (pp = percentage points):

RESULTS (pp = percentage points):

We find that:

Our findings suggest:

Main takeaways:
