Lead Rendering Engineer @WeArePlayground working on @Fable. DMs open for graphics questions or mentoring people who want to get into the industry. Views my own.



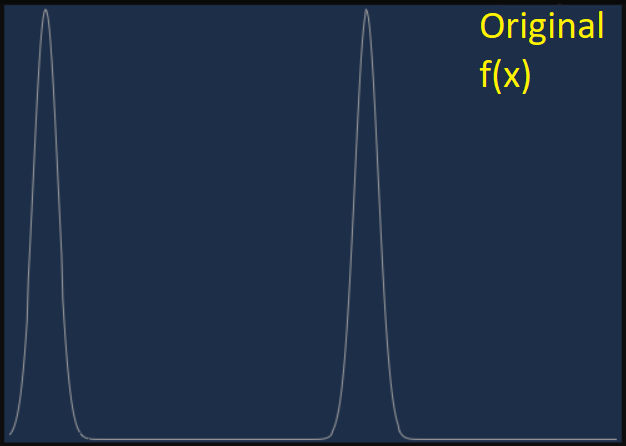

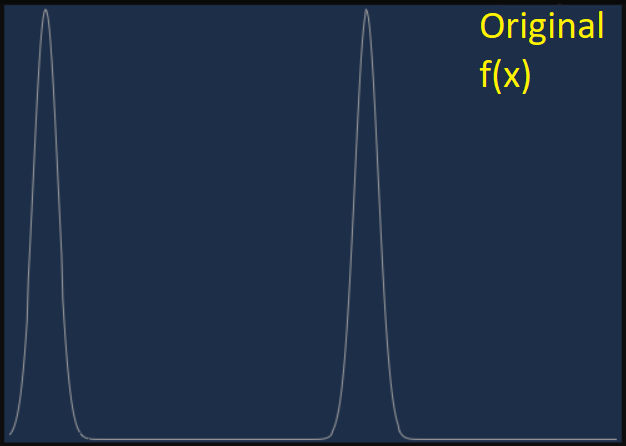

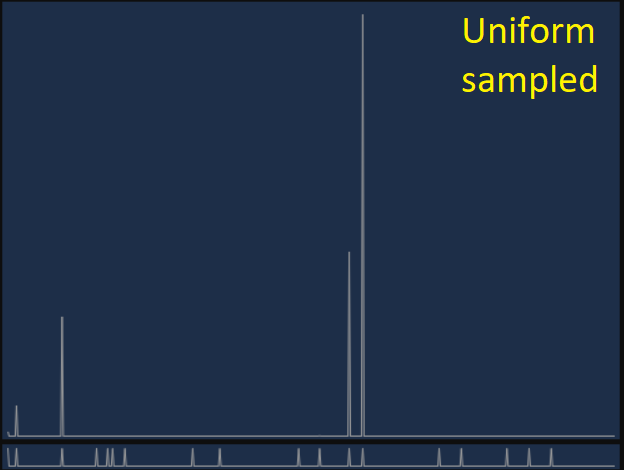

Capturing and processing many samples is expensive, so we often randomly select only a few and sum those. If we select samples uniformly (every sample with the same probability), though, we risk missing important features in the signal, e.g. areas with large radiance (2/6).
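A toy 1D example makes the risk of uniform selection concrete. The sketch below is a minimal Monte Carlo setup under made-up assumptions: a hypothetical `radiance` signal that is dim everywhere except a narrow, very bright band, estimated once with uniformly chosen samples and once with importance sampling from a mixture pdf that sends half the samples into the band. Importance sampling is used here as the standard remedy for illustration; the signal, the pdf, and all function names are assumptions, not taken from the thread.

```python
import random
import statistics

# Hypothetical 1D "radiance" signal on [0, 1): dim everywhere except a
# narrow, very bright band (think of a small emissive light source).
BRIGHT_LO, BRIGHT_HI = 0.70, 0.72
DIM, BRIGHT = 1.0, 100.0
WIDTH = BRIGHT_HI - BRIGHT_LO

def radiance(x: float) -> float:
    return BRIGHT if BRIGHT_LO <= x < BRIGHT_HI else DIM

# Exact integral over [0, 1): 0.98 * 1 + 0.02 * 100 = 2.98
EXACT = DIM * (1.0 - WIDTH) + BRIGHT * WIDTH

def estimate_uniform(rng: random.Random, n: int) -> float:
    """Uniform pdf p(x) = 1, so the estimate is the plain average of f(x_i)."""
    return sum(radiance(rng.random()) for _ in range(n)) / n

def mixture_pdf(x: float) -> float:
    """Proposal pdf: 50% uniform on [0, 1), 50% uniform on the bright band."""
    inside = BRIGHT_LO <= x < BRIGHT_HI
    return 0.5 + (0.5 / WIDTH if inside else 0.0)

def sample_mixture(rng: random.Random) -> float:
    """Draw x from mixture_pdf by first picking one of the two components."""
    if rng.random() < 0.5:
        return rng.random()
    return BRIGHT_LO + WIDTH * rng.random()

def estimate_importance(rng: random.Random, n: int) -> float:
    """Importance sampling: average of f(x_i) / p(x_i) with x_i drawn from p."""
    total = 0.0
    for _ in range(n):
        x = sample_mixture(rng)
        total += radiance(x) / mixture_pdf(x)
    return total / n

def compare(trials: int = 2000, n: int = 16, seed: int = 1):
    """Repeat both estimators many times to compare their spread."""
    rng = random.Random(seed)
    uni = [estimate_uniform(rng, n) for _ in range(trials)]
    imp = [estimate_importance(rng, n) for _ in range(trials)]
    # A uniform estimate equal to DIM never hit the bright band at all.
    missed = sum(1 for e in uni if e == DIM) / trials
    return uni, imp, missed

if __name__ == "__main__":
    uni, imp, missed = compare()
    print(f"exact       {EXACT:.3f}")
    print(f"uniform     mean {statistics.mean(uni):.3f}  "
          f"variance {statistics.variance(uni):.3f}  missed band {missed:.0%}")
    print(f"importance  mean {statistics.mean(imp):.3f}  "
          f"variance {statistics.variance(imp):.3f}")
```

With 16 samples, a uniform estimate misses the bright band entirely about 72% of the time (0.98^16 ≈ 0.72), while the importance-sampled estimates stay close to the exact value. Mixing the uniform pdf into the proposal keeps `p(x) > 0` wherever the signal is non-zero, which the `f(x) / p(x)` estimator needs in order to stay unbiased.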


You can control the number of light bounces to emulate a more "traditional" game environment without an advanced GI solution, which lets you focus on the shape of a shadow or on how a material responds to a dynamic light.
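To make the bounce cap concrete, here is a minimal sketch of the core loop of a path tracer with a `max_bounces` parameter, evaluated in a hypothetical "white furnace" scene: a closed enclosure whose walls all emit `emitted` and reflect diffusely with `albedo`. The scene is chosen only because its answer has a closed form; the function name, parameters, and bounce-count convention are illustrative assumptions, not the renderer described in the thread.

```python
def trace_furnace(emitted: float, albedo: float, max_bounces: int) -> float:
    """Radiance along one camera path in a hypothetical white-furnace scene.

    Every wall emits `emitted` and reflects diffusely with `albedo`, so each
    path vertex sees the same emission and the path throughput is deterministic.
    """
    if max_bounces < 0:
        raise ValueError("max_bounces must be >= 0")
    radiance = 0.0
    throughput = 1.0
    # Vertex 0 is the surface the camera ray hits; each extra iteration is one bounce.
    for _ in range(max_bounces + 1):
        radiance += throughput * emitted  # emission picked up at this vertex
        # Lambertian BRDF (albedo / pi) * cos(theta) divided by a cosine-weighted
        # pdf (cos(theta) / pi) reduces to the albedo.
        throughput *= albedo
    return radiance


if __name__ == "__main__":
    for bounces in (0, 1, 2, 8):
        print(bounces, trace_furnace(1.0, 0.5, bounces))
    # Infinite-bounce reference: emitted / (1 - albedo)
    print("limit", 1.0 / (1.0 - 0.5))
```

Capping `max_bounces` at 1 keeps only emission plus direct lighting, close to a game without a GI solution; each extra bounce adds indirect light, and the result approaches `emitted / (1 - albedo)`. Renderers differ on whether the camera hit counts as a bounce, so the off-by-one convention here is an assumption.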
