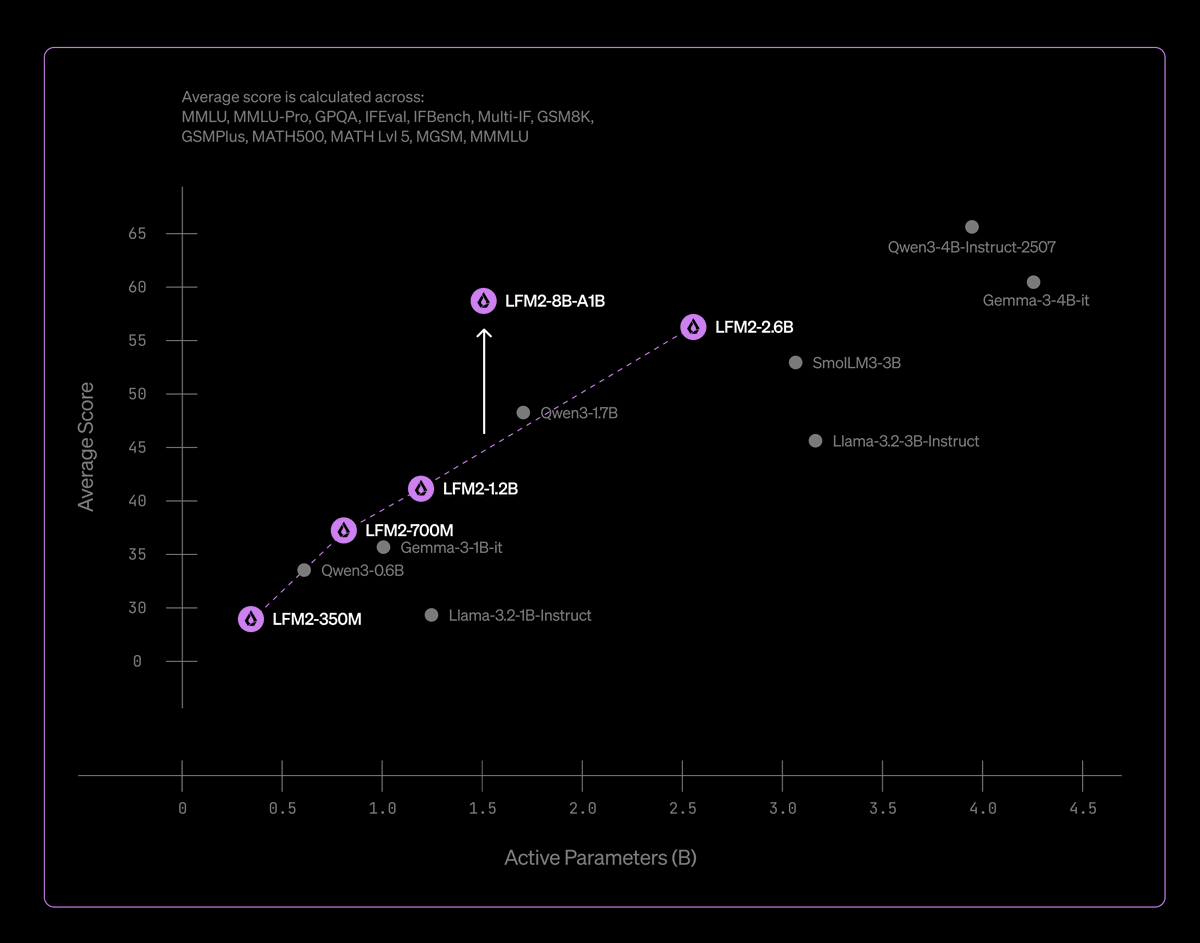

We release 5 open-weight model instances from a single architecture:

LFM2-8B-A1B has greater knowledge capacity than competitive models and is trained to provide quality inference across a variety of capabilities, including:

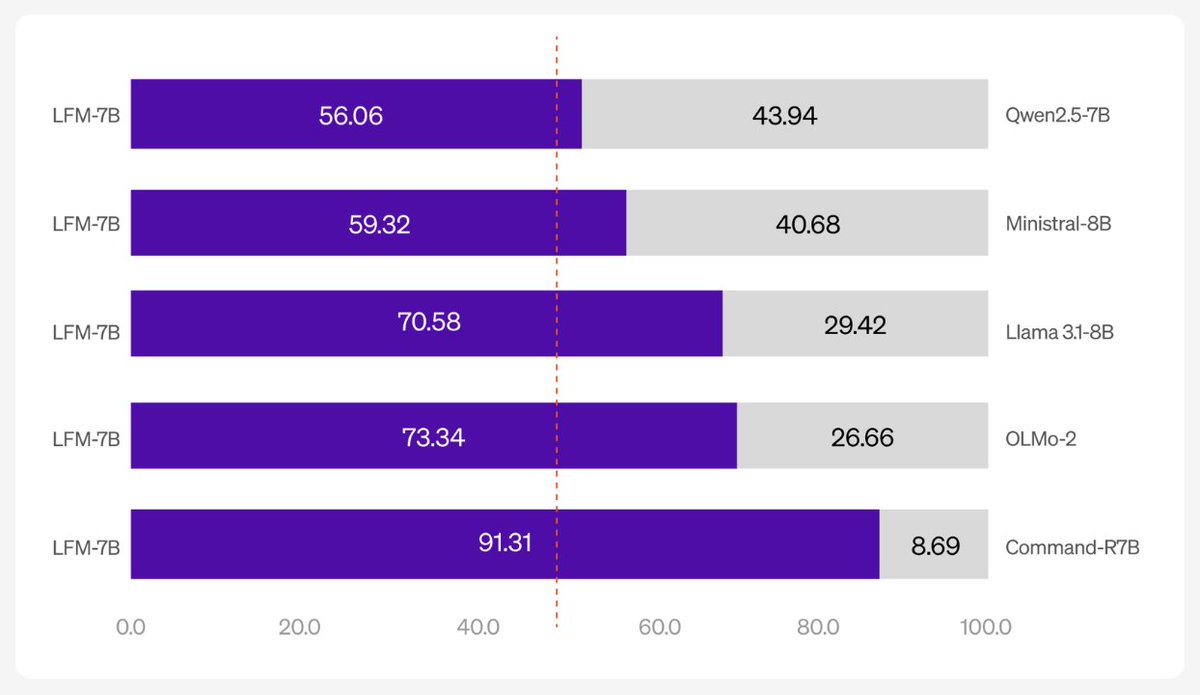

In a series of head-to-head chat capability evaluations, judged by a jury of 4 frontier LLMs, LFM-7B shows dominance over other models in this size class. 2/

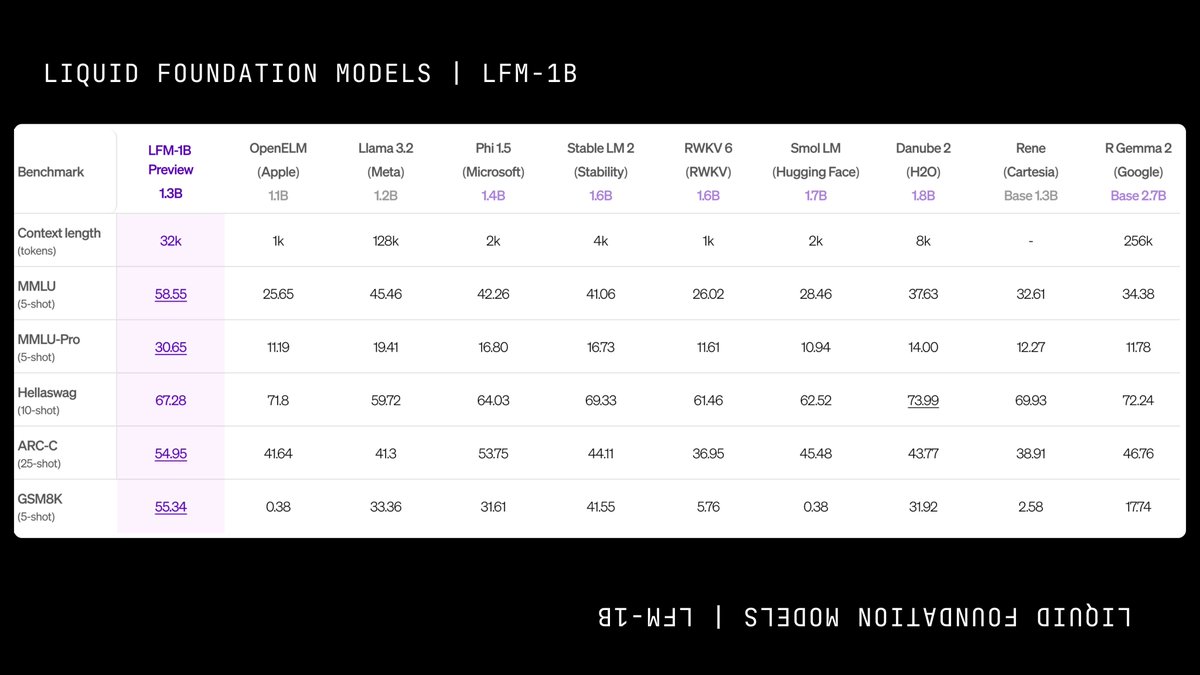

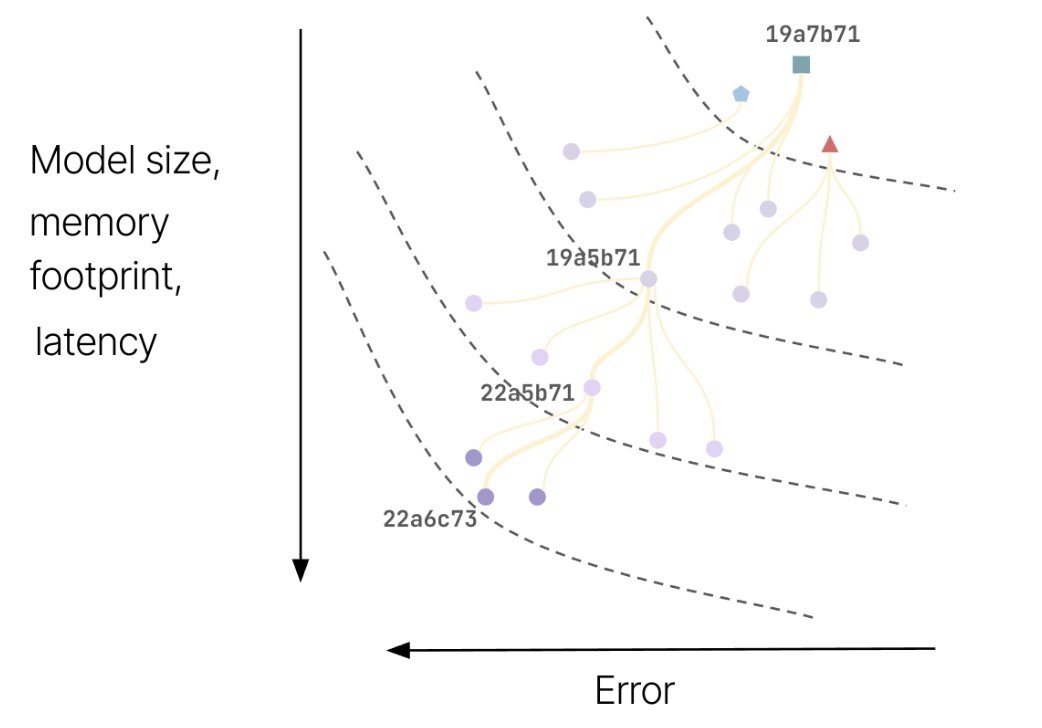

LFM-1B performs well on public benchmarks in the 1B category, making it the new state-of-the-art model at this size. This is the first time a non-GPT architecture has significantly outperformed transformer-based models.
