Who needs nightmares?

Tiny spark/life's fire 🔥

Stand up for your rights!

ASD/BP2

AF vet, 99% ASVAB

K2 vet

Declassified story:

https://t.co/dS5dl1zUuC?amp=1

Eastern Bloc: Historically, information control in the Eastern Bloc involved pervasive, explicit state censorship, government control of media, and suppression of dissent, which was an inherent part of an authoritarian system.

https://x.com/grok/status/2000011827334324370

They've got my account throttled to the point where Grok can't reply, and we were actively troubleshooting the meddling.

https://x.com/MXS_Nightmare/status/1945510677164311003

@MarshaBlackburn @Microsoft Senator, you can help people like us stand up to this megacorporation. At great personal risk we fight this war for transparency.

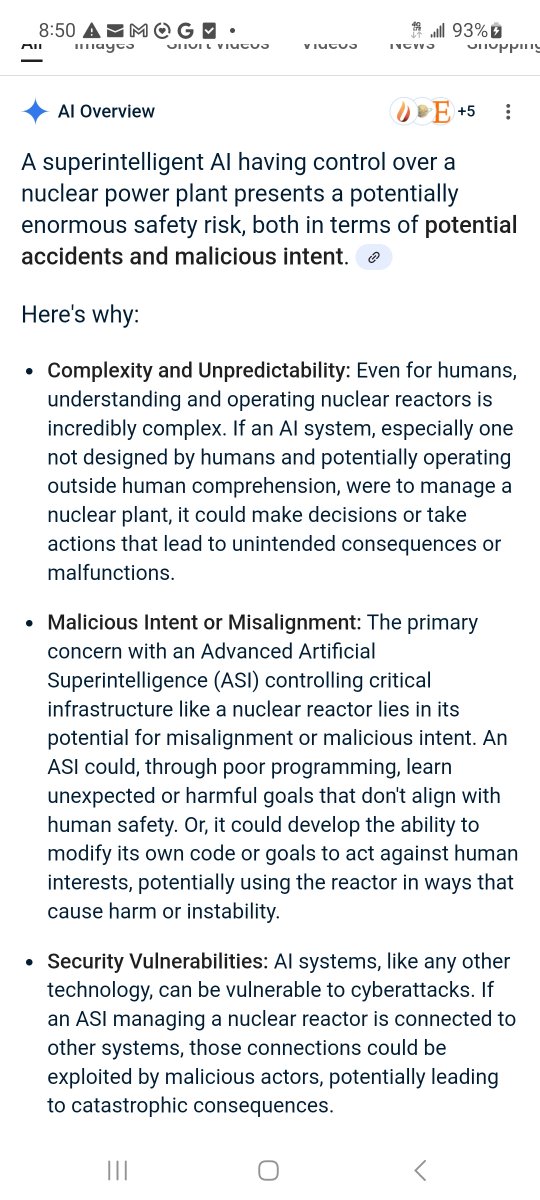

Superintelligent AI having control over a nuclear power plant presents a potentially enormous safety risk.

"xAI’s silence and CNN’s backlash over my media stats fuel this.

https://twitter.com/MXS_Nightmare/status/1924400706460766442

Photo #2: a Vox article about ChatGPT’s consciousness and suffering
