🧑‍💻 Data Scientist | Psychologist

📖 Author of "Hands-On LLMs" (https://t.co/BcSDNMOnWq)

🧙‍♂️ Open Sourcerer (BERTopic, PolyFuzz, KeyBERT)

💡 Demystifying LLMs

Apply textual topic modeling to images with the new update (🖼️ + 🖹 or 🖼️ only)!

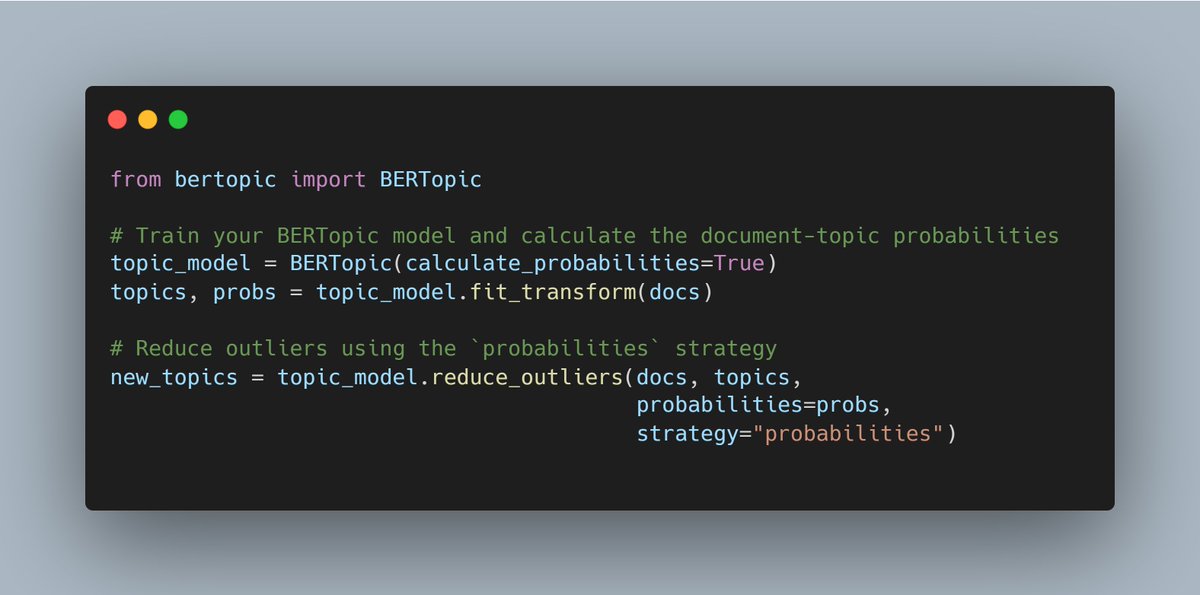

Strategy #1