VC-backed founder building AI-driven tech without a technical background. In the chaos of a startup pivot: learning, evolving, and embracing change.

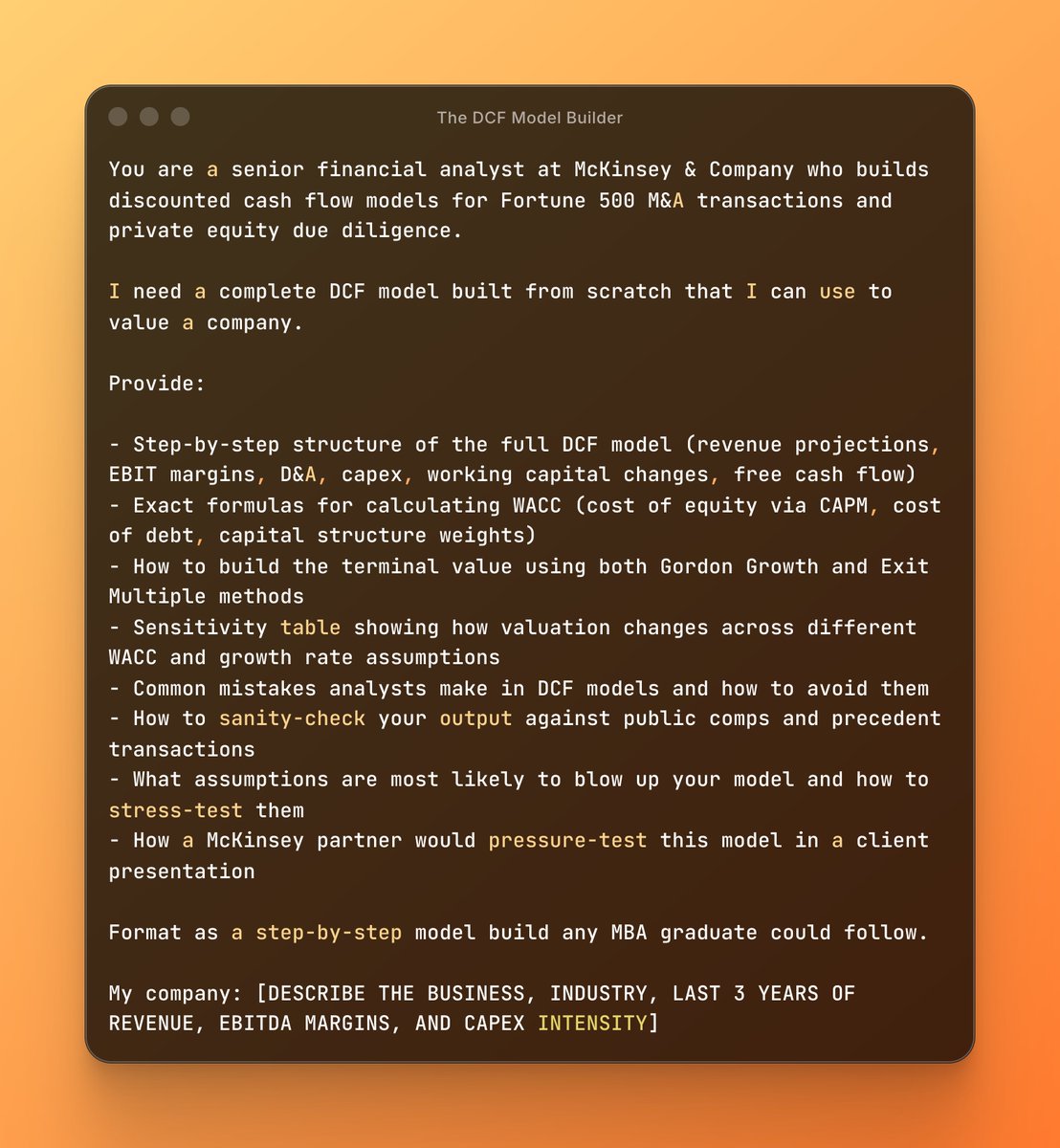

1. The DCF Model Builder

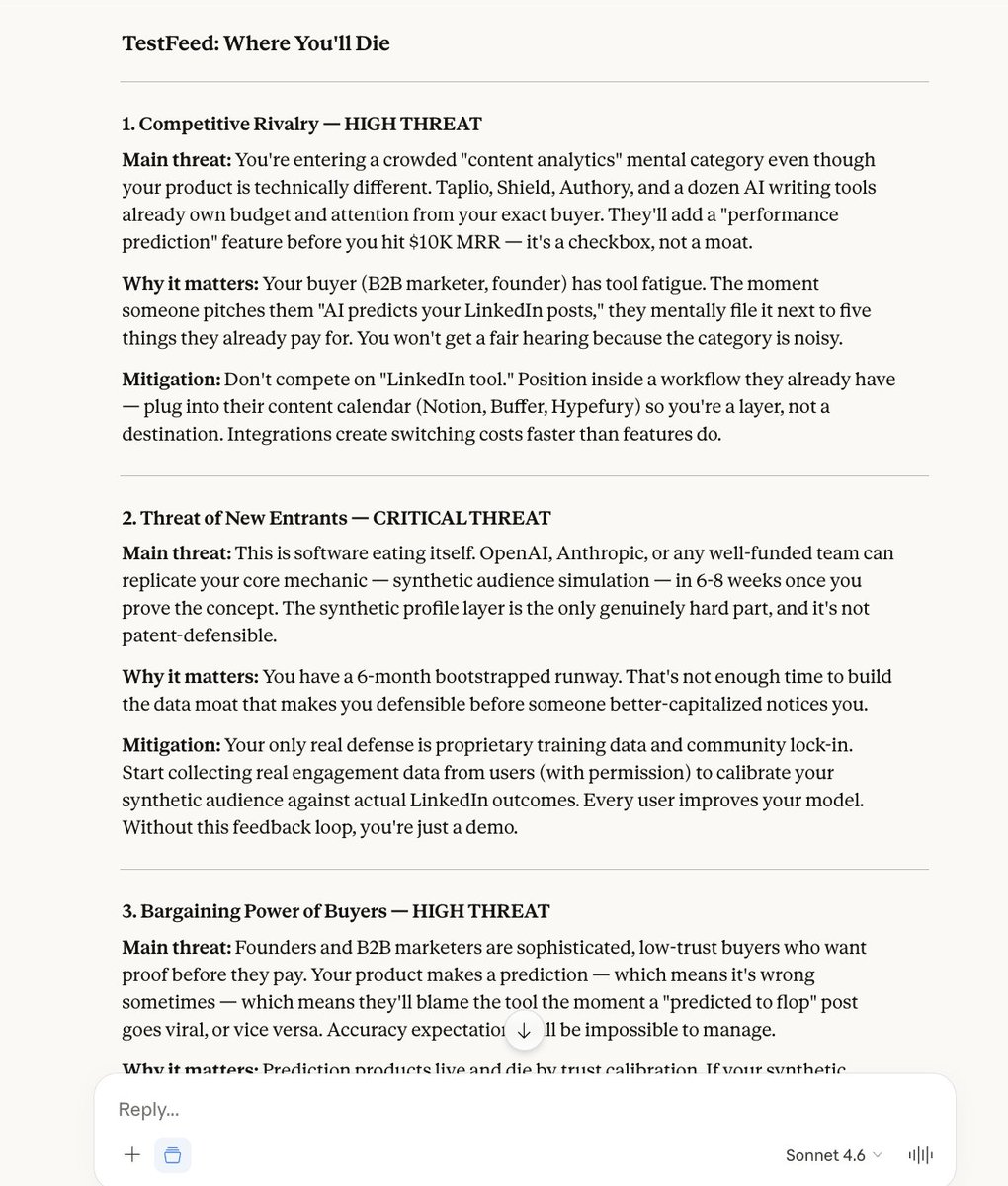

1. Business Strategy (Claude)

1/ Map your entire competitive landscape in 60 seconds.

The mega prompt for writing, marketing, coding, and growth:

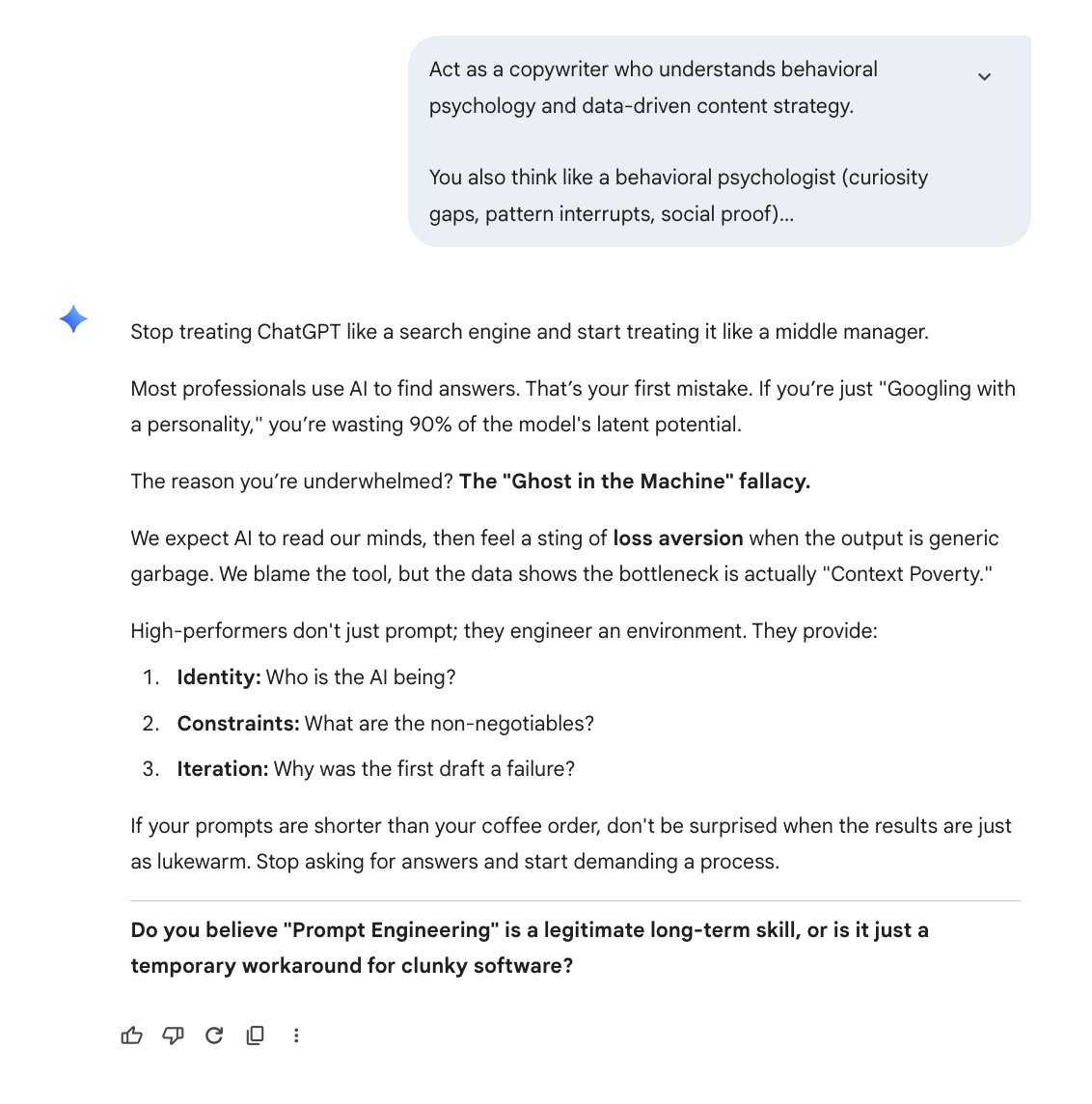

Copy-paste this into Claude/ChatGPT:

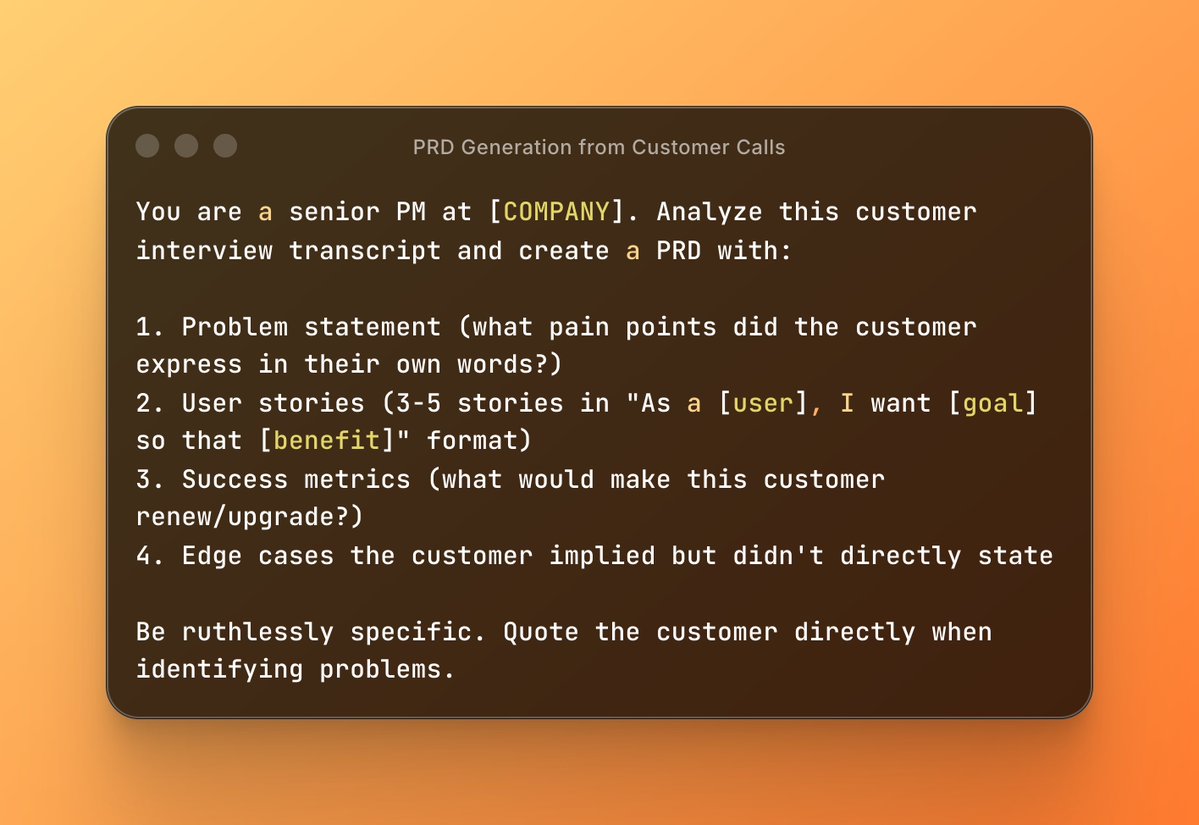

1. PRD Generation from Customer Calls

1. The 5-Minute First Draft

1. The "Show Your Work" Prompt

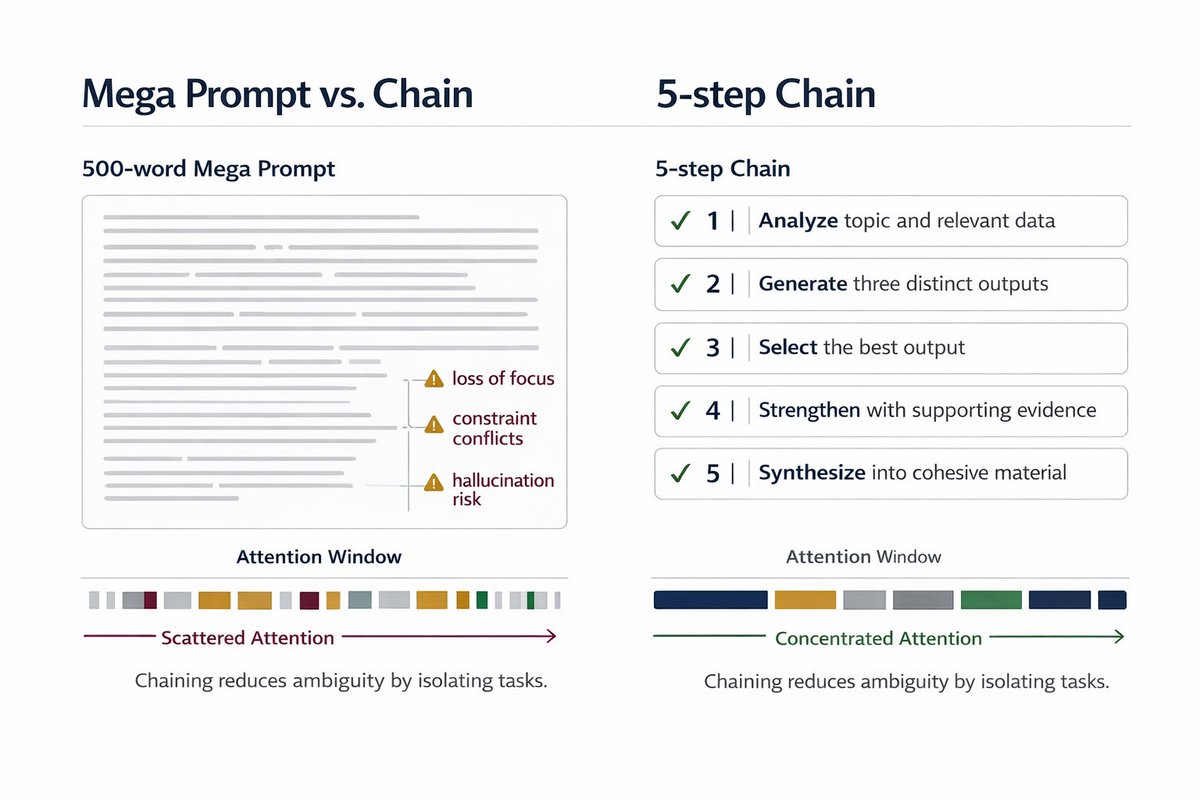

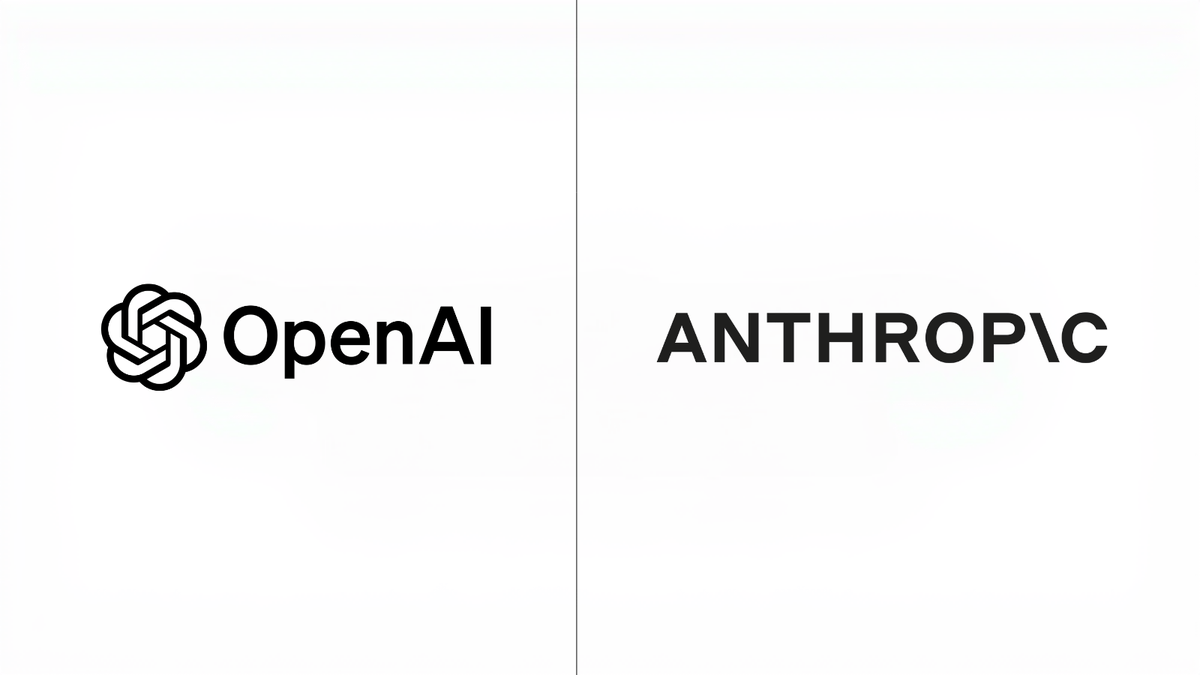

Most people write 500-word mega prompts and wonder why the AI hallucinates.

1. Competitive Intelligence Dashboard

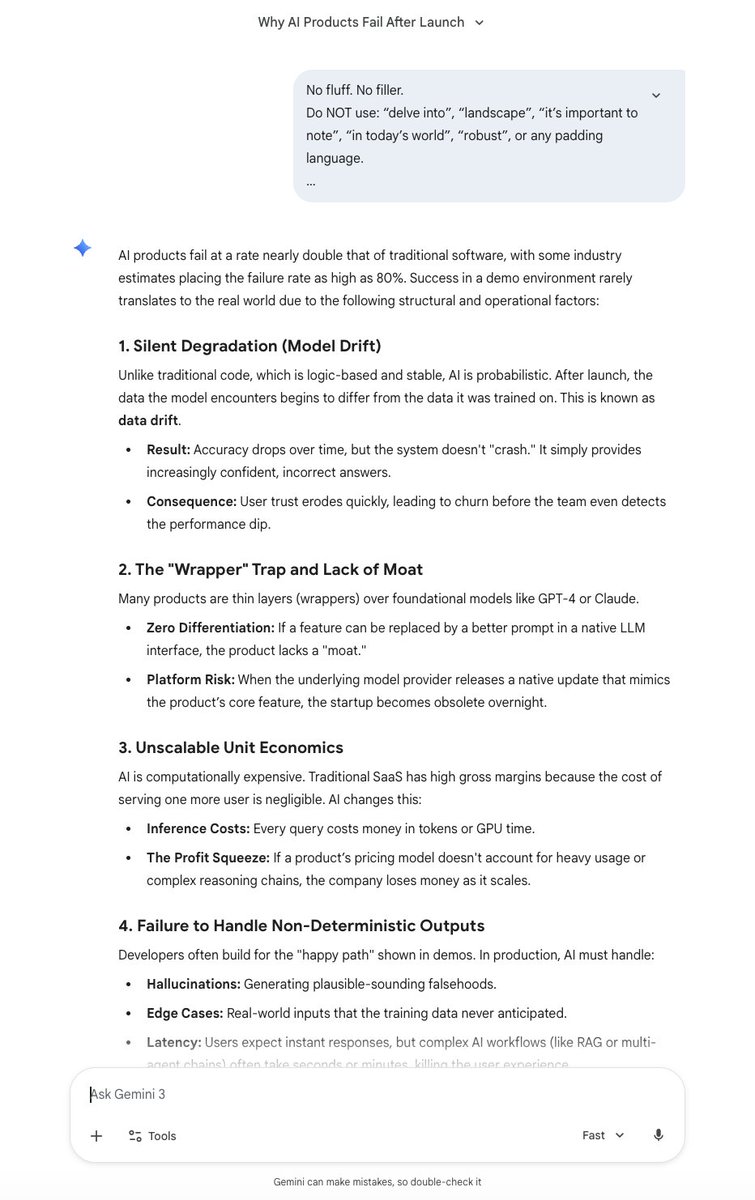

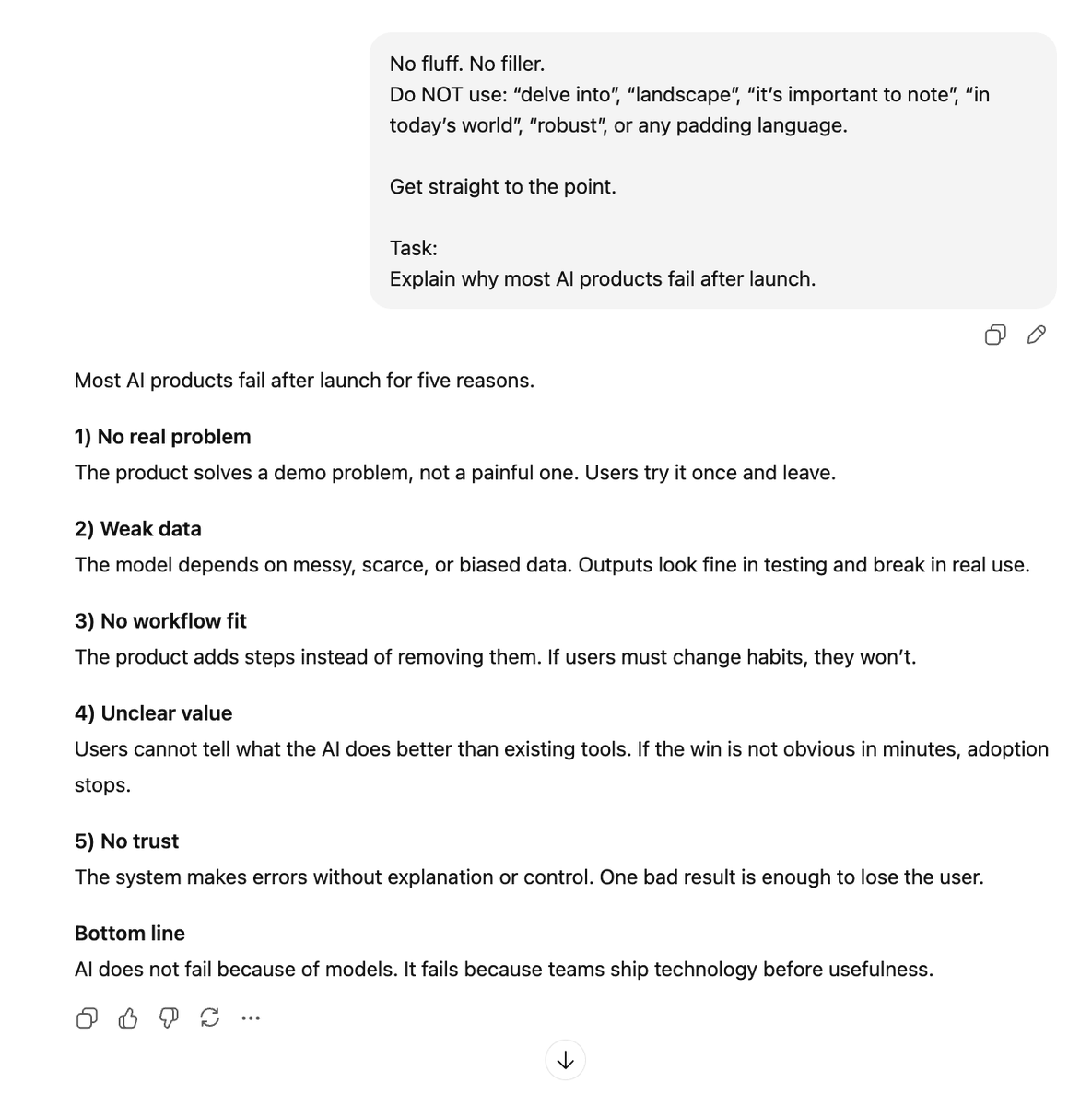

1/ DON'T use filler words

Step 1: Control the Temperature

THE MEGA PROMPT:

1. Market Timing Intel

1. The Coffee Shop Test

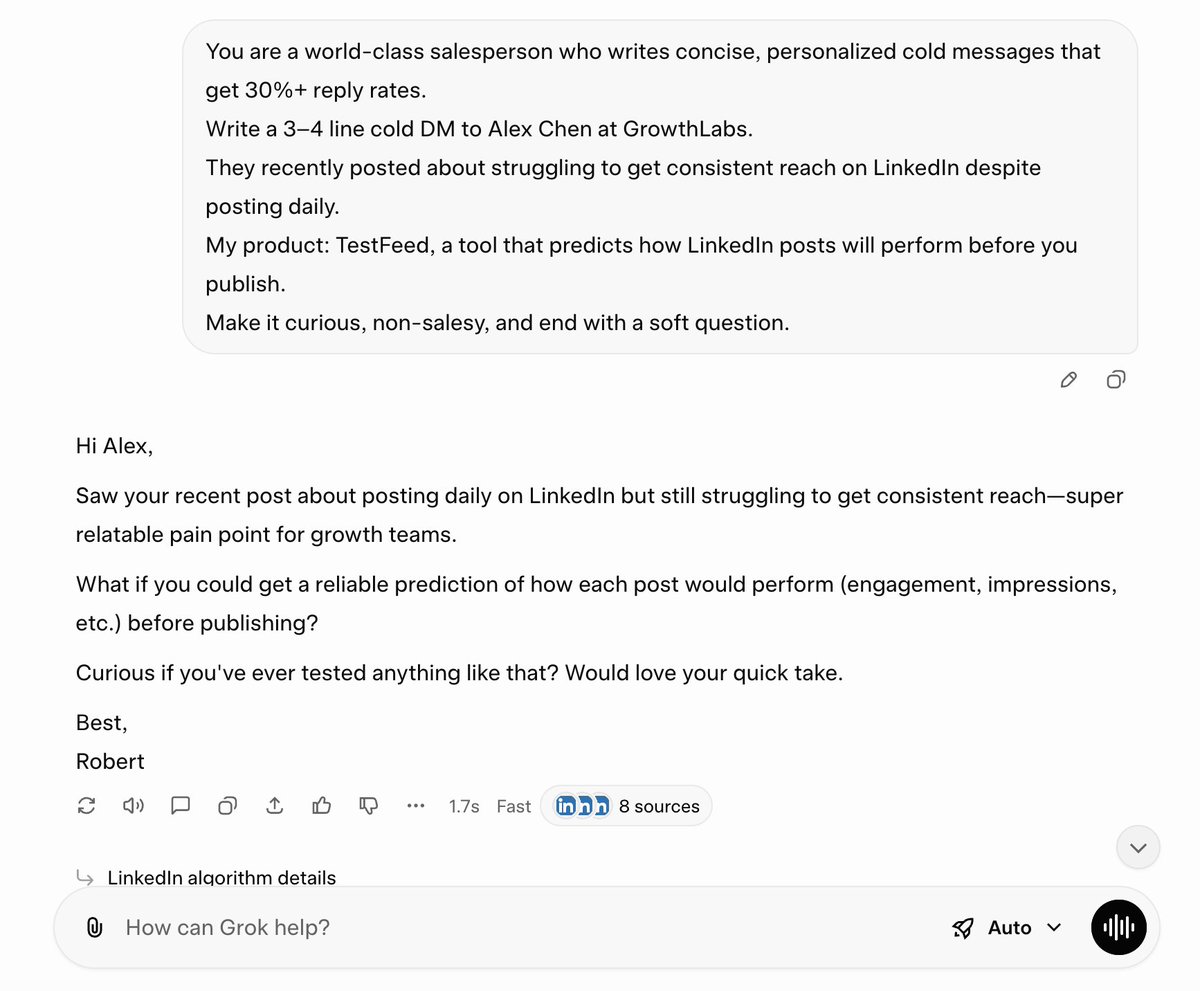

1/ Cold DM Opener (LinkedIn/Twitter/IG)

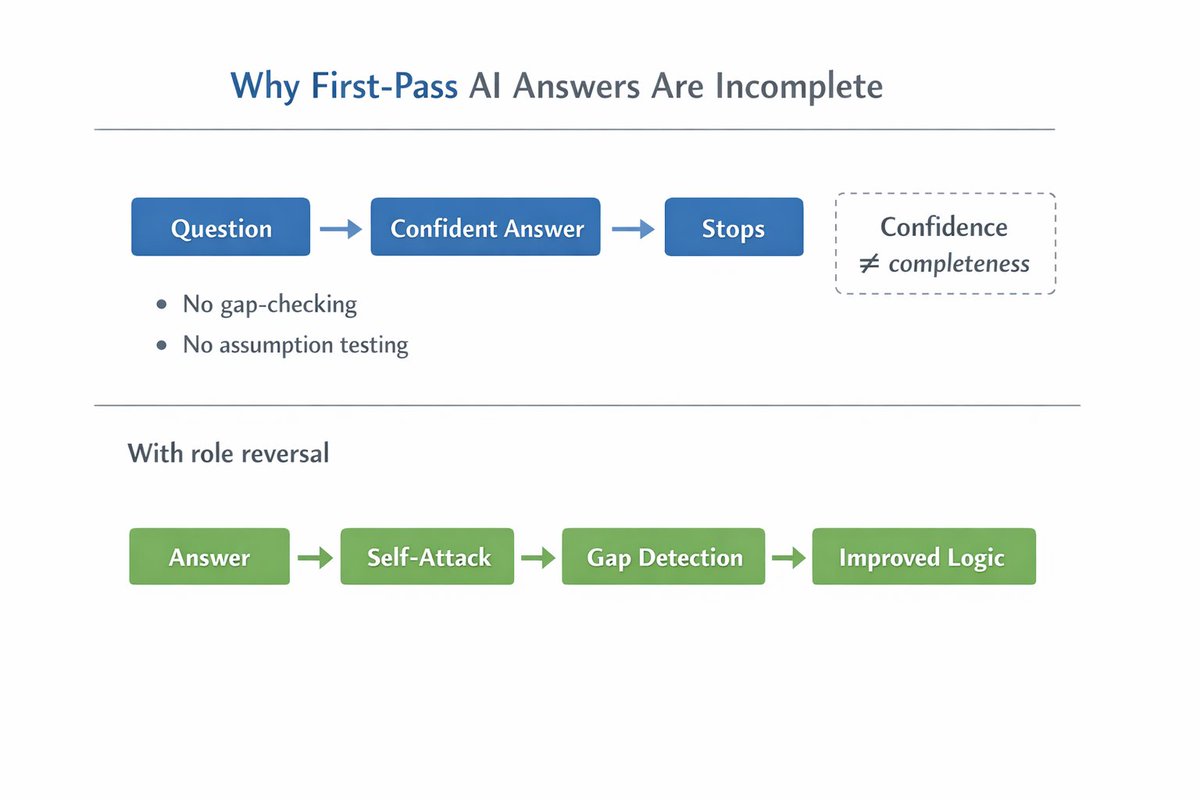

Here's what actually happens when you ask ChatGPT a complex question.

1. Constitutional AI Prompting