Studying Applied Mathematics and Statistics at @JohnsHopkins.

Studying In-Context Learning at The Intelligence Amplification Lab.


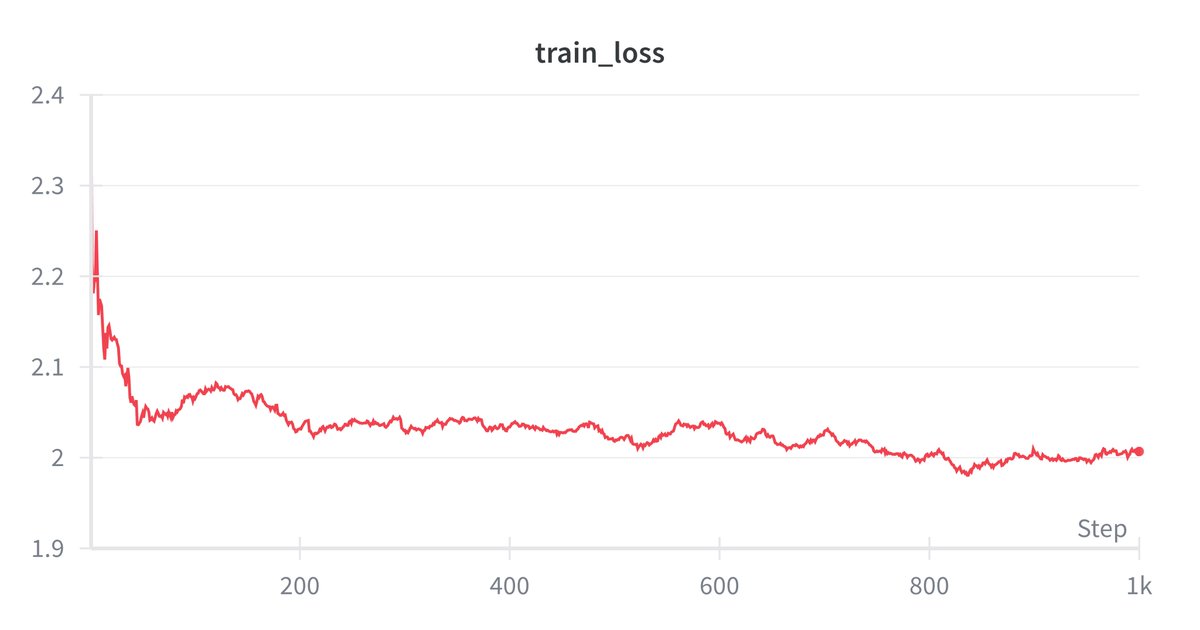

The training process is simple and only takes 12 hours on an M3 Max. A simple LoRA is applied to a quantized version of Qwen3-30B-A3B and trained to take in slopped (AI-sounding) stories and return humanlike outputs. I used 1000 training docs for this, ~2.5M tokens total.
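
For anyone unfamiliar with LoRA: the base weight matrix stays frozen and only a low-rank update `(alpha / r) * B @ A` is trained, so the trainable part is tiny compared to the full matrix. Below is a minimal pure-Python sketch of one LoRA linear layer. The class name, the init values, and the 4096 × 4096 / rank-16 sizes are hypothetical illustrations, not the code or config used for this run.

```python
# Minimal LoRA sketch (pure Python, no ML framework).
# Hypothetical names and sizes; not the actual training code for this run.

def matvec(M, v):
    """Multiply a matrix (list of rows) by a vector (list)."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]

class LoRALinear:
    """Frozen weight W plus a trainable low-rank update (alpha / r) * B @ A."""

    def __init__(self, W, r, alpha):
        self.W = W                      # frozen base weight, shape d_out x d_in
        d_out, d_in = len(W), len(W[0])
        self.r = r
        self.scale = alpha / r          # standard LoRA scaling factor
        # A: r x d_in. Deterministic small values here (instead of the usual
        # random init) just to keep the sketch reproducible.
        self.A = [[0.01 * ((i + j) % 3 - 1) for j in range(d_in)] for i in range(r)]
        # B: d_out x r, zero-initialized, so the adapter starts as an exact no-op.
        self.B = [[0.0] * r for _ in range(d_out)]

    def forward(self, x):
        base = matvec(self.W, x)                    # frozen path: W x
        delta = matvec(self.B, matvec(self.A, x))   # low-rank path: B (A x)
        return [b + self.scale * d for b, d in zip(base, delta)]

    def trainable_params(self):
        # Only A and B are trained: r * d_in + d_out * r
        return self.r * (len(self.W[0]) + len(self.W))

def lora_param_count(d_in, d_out, r):
    """Trainable LoRA params for one d_out x d_in weight matrix."""
    return r * (d_in + d_out)

W = [[1.0, 2.0, 0.0], [0.0, 1.0, -1.0]]
layer = LoRALinear(W, r=1, alpha=2)
print(layer.forward([1.0, 1.0, 1.0]))      # [3.0, 0.0], same as W x since B = 0
print(layer.trainable_params())            # 5
print(lora_param_count(4096, 4096, 16))    # 131072, vs 16777216 for the full matrix
```

With the hypothetical 4096 × 4096 layer at rank 16, the adapter trains under 1% of that matrix's parameters, while the frozen base can stay quantized.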

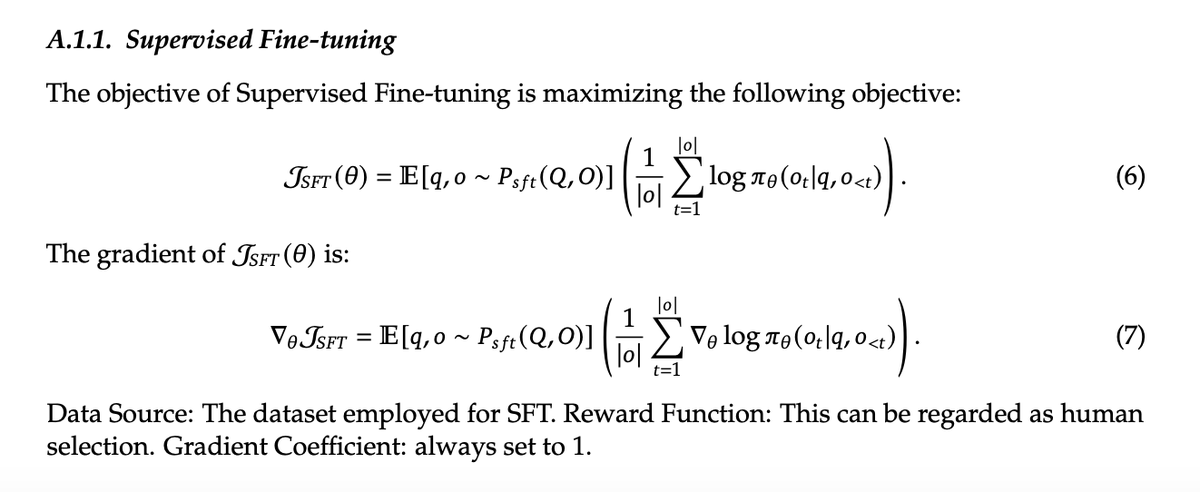

(i am a math noob, so i won't try to explain this in a ton of depth), but they make some really cool revelations, like showing how SFT is just really simple RL:
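
A sketch of one standard way to see the SFT/RL connection, assuming the usual setup: a model $\pi_\theta$, a prompt $x$, and a demonstration $y^*$ from the dataset $\mathcal{D}$. The notation is mine and not necessarily the paper's derivation. The SFT gradient is

$$
-\nabla_\theta \mathcal{L}_{\text{SFT}} = \mathbb{E}_{(x, y^*) \sim \mathcal{D}}\left[\nabla_\theta \log \pi_\theta(y^* \mid x)\right],
$$

and since $\pi_\theta(y^* \mid x) > 0$, each term can be rewritten as an expectation over the model's own samples:

$$
\nabla_\theta \log \pi_\theta(y^* \mid x)
= \sum_{y} \pi_\theta(y \mid x)\,\frac{\mathbb{1}[y = y^*]}{\pi_\theta(y \mid x)}\,\nabla_\theta \log \pi_\theta(y \mid x)
= \mathbb{E}_{y \sim \pi_\theta(\cdot \mid x)}\left[r(x, y)\,\nabla_\theta \log \pi_\theta(y \mid x)\right],
\qquad r(x, y) = \frac{\mathbb{1}[y = y^*]}{\pi_\theta(y \mid x)}.
$$

So the SFT update has the form of a REINFORCE-style policy gradient with a sparse reward (nonzero only on the demonstration), weighted by the inverse of the model's probability of that demonstration.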