apple.github.io/embedding-atla…

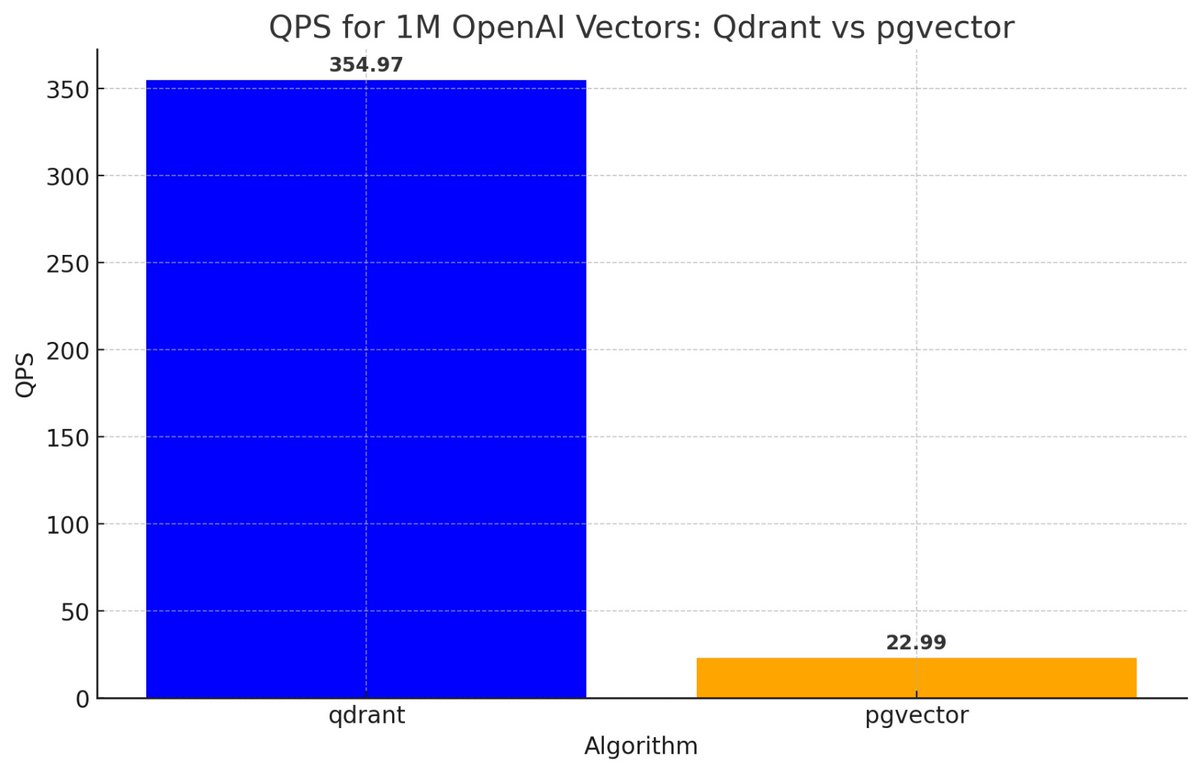

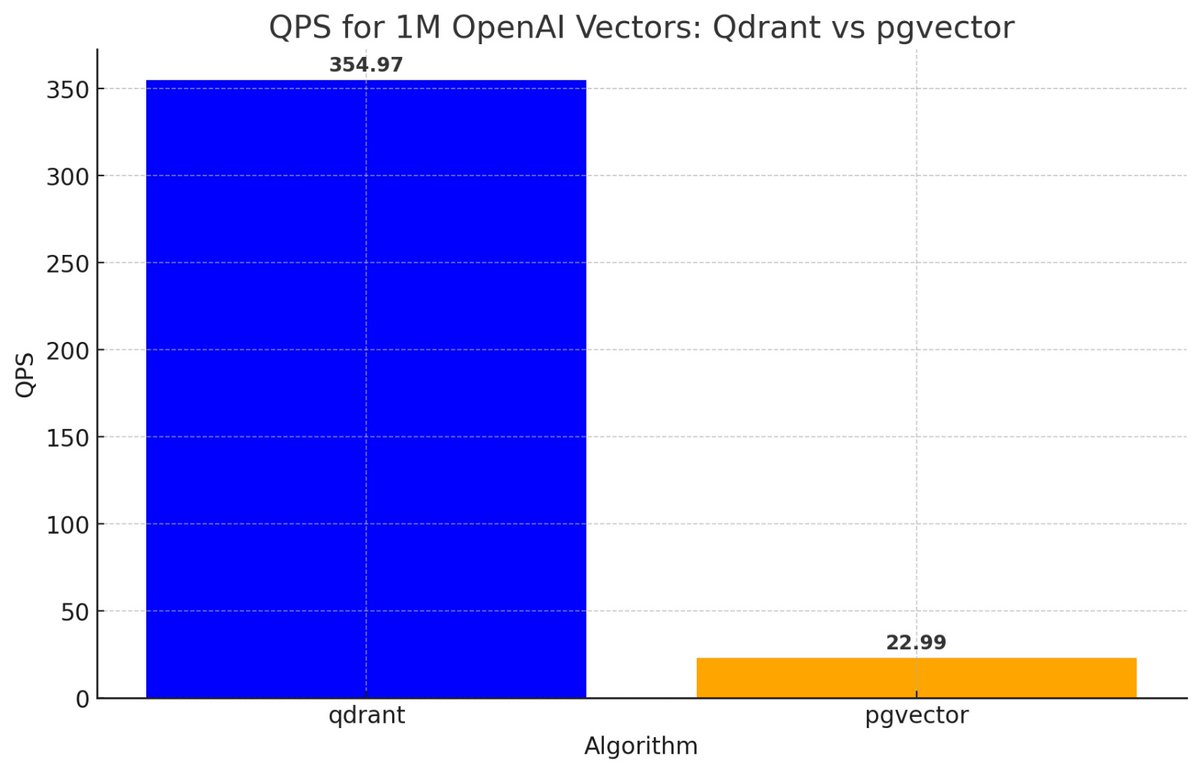

As a Postgres fan, I am sad to see that pgvector not only starts at less than half the QPS at just 100K vectors, but also drops off quickly beyond that.
