Senior Staff Research Scientist @GoogleDeepMind | Affiliated Lecturer @Cambridge_Uni | Assoc @clarehall_cam | GDL Scholar @ELLISforEurope. Monoids. 🇷🇸🇲🇪🇧🇦

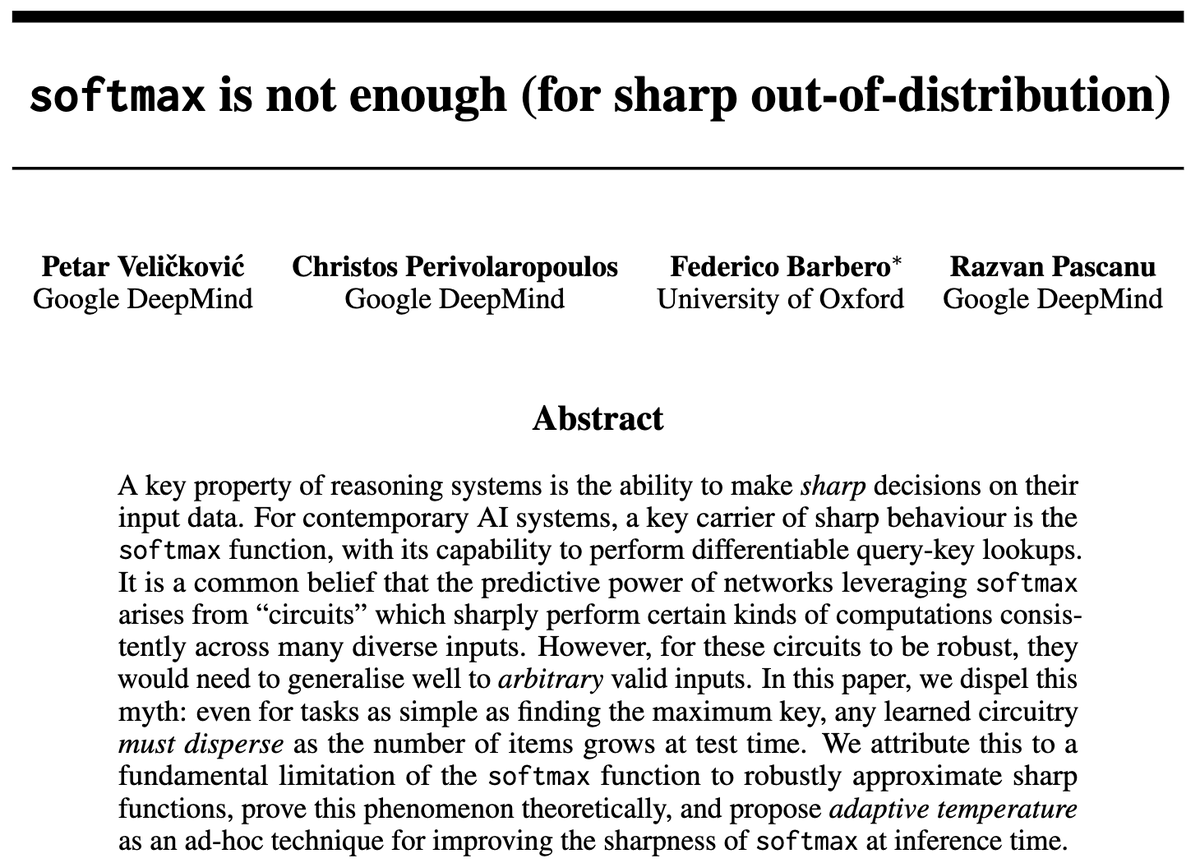

https://twitter.com/ytz2024/status/1874695567198265787

For starters, a quick primer:

There's been a rightful surge of AI-powered competitive programming systems, typically deployed on classical contests such as Codeforces.

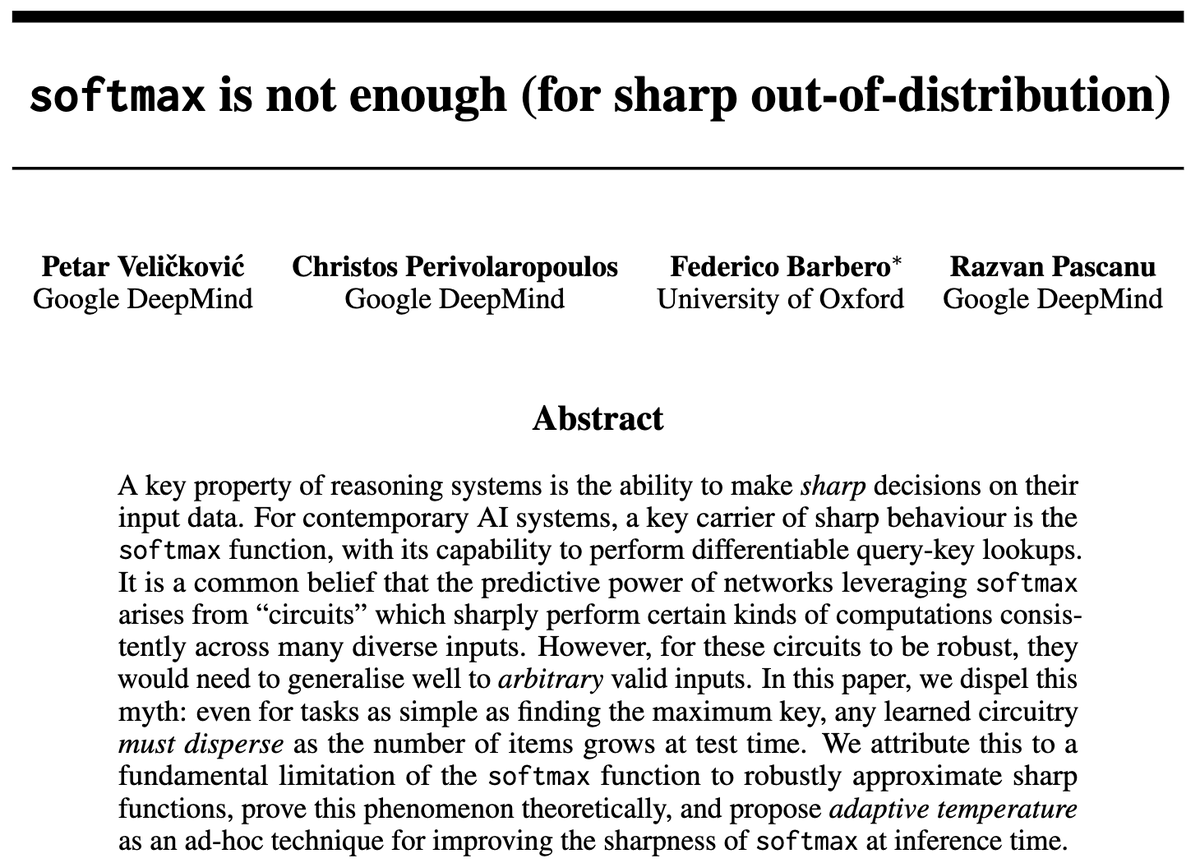

We start by asking a frontier LLM a simple query: copy the first & last token of bitstrings.

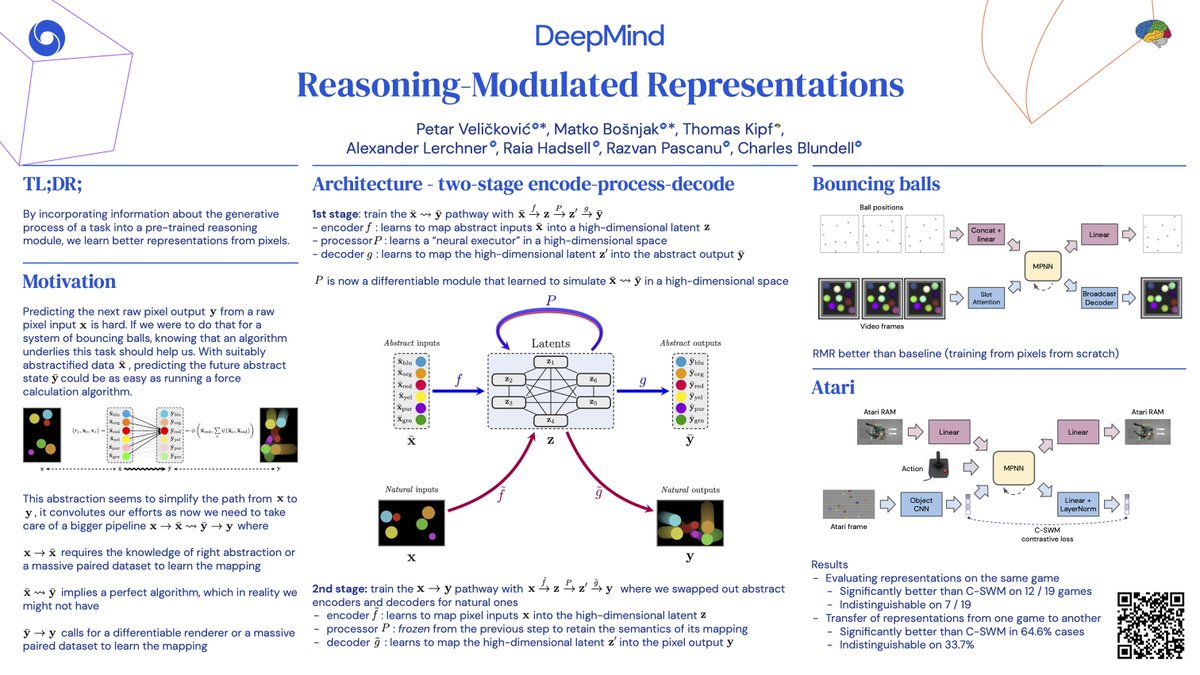

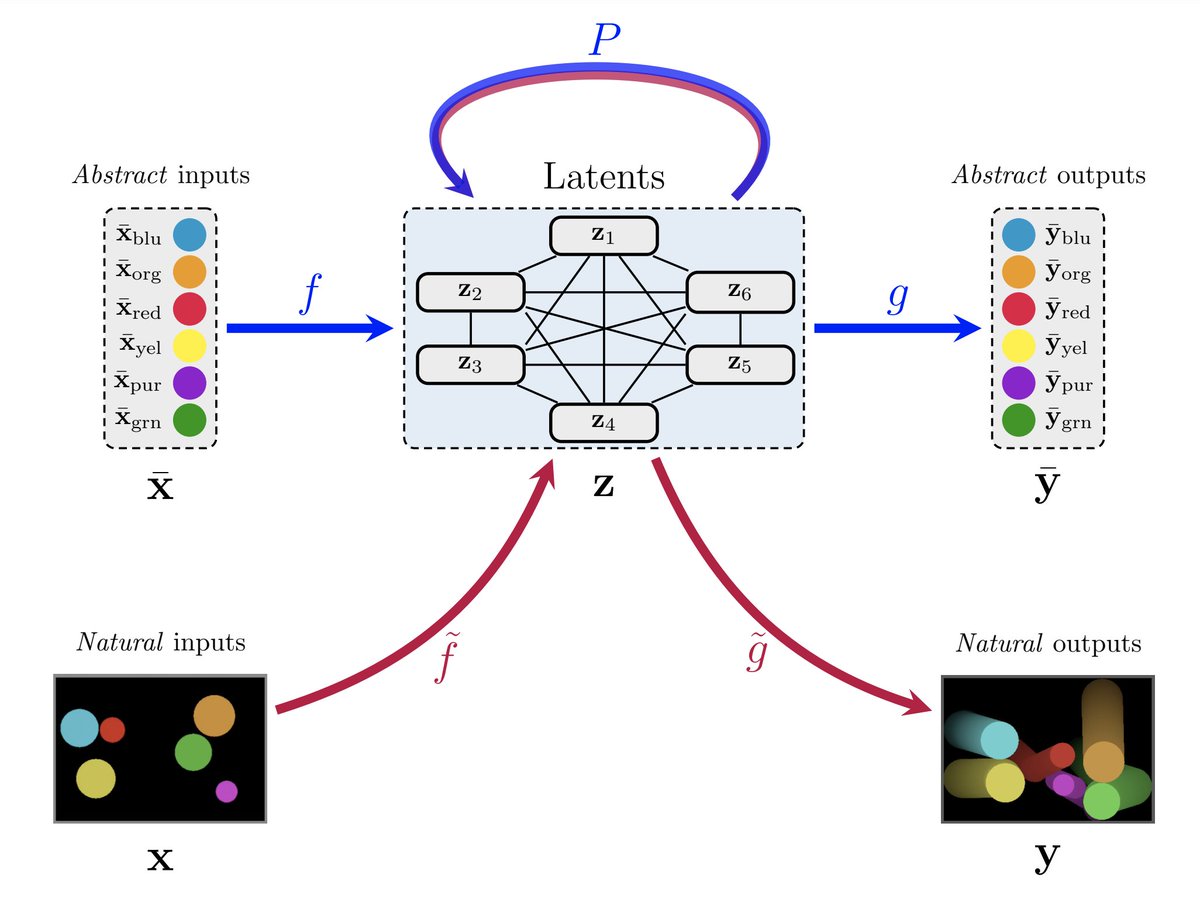

🌐 In "Reasoning-Modulated Representations", Matko Bošnjak, @thomaskipf, @AlexLerchner, @RaiaHadsell, Razvan Pascanu, @BlundellCharles and I demonstrate how to leverage arbitrary algorithmic priors for self-supervised learning. It even transfers _across_ different Atari games!

What to expect in the 2022 iteration?

Why an algorithmic benchmark?

https://twitter.com/inoryy/status/1523354236473466882

Why did I class the project as simple at first?

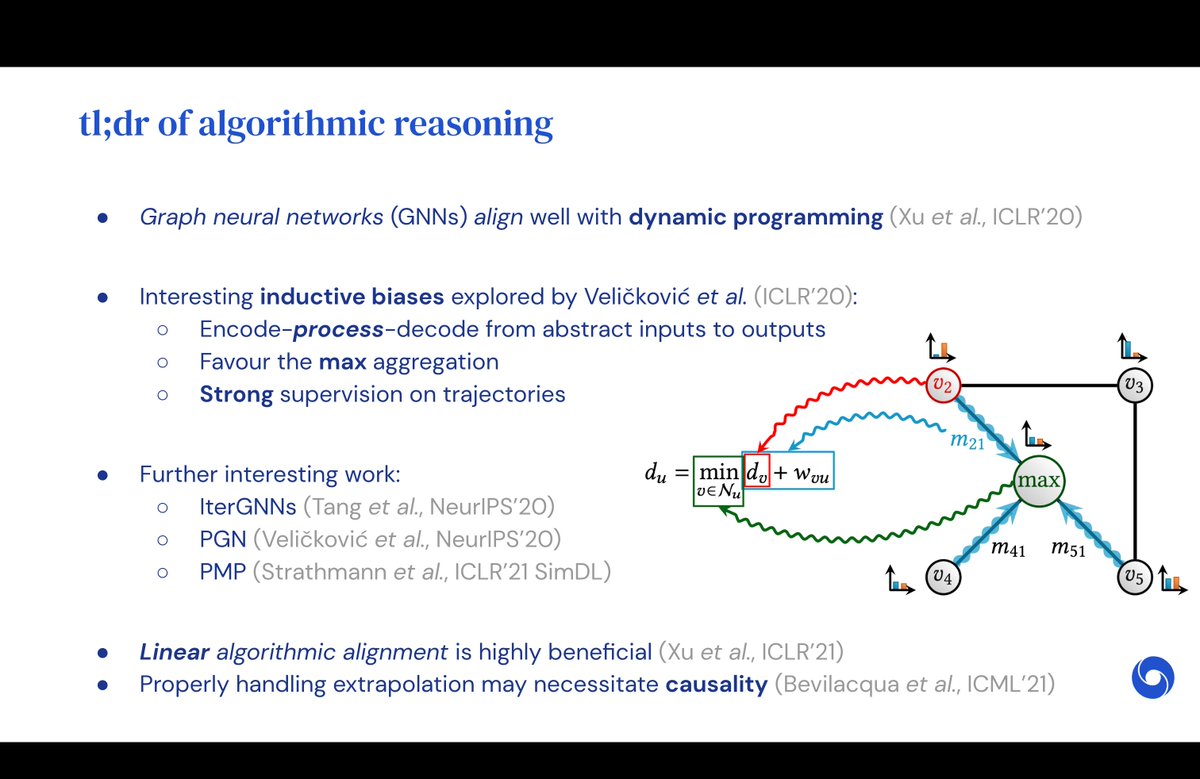

https://twitter.com/HamedSHassani/status/1501244879397003268

GNN _computations_ align with DP. If you initialise the node features _properly_ (e.g. identifying the source vertex):
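One way to picture this alignment is a hedged sketch, not the thread's own example: the Bellman-Ford shortest-path recurrence is a classic dynamic program, and each of its rounds can be read as one message-passing step with a `min` aggregator. The function name, the edge-list format, and the toy graph below are assumptions made for illustration. The only "feature initialisation" is marking the source vertex with distance 0 and every other vertex with infinity.

```python
import math


def bellman_ford_message_passing(num_nodes, edges, source):
    """Run Bellman-Ford as synchronous rounds of message passing.

    edges is a list of directed (sender, receiver, weight) triples.
    This is an illustrative sketch, not a learned GNN.
    """
    # Node feature initialisation: identify the source vertex.
    dist = [math.inf] * num_nodes
    dist[source] = 0.0

    for _ in range(num_nodes - 1):
        # Each node keeps its own value (a self-loop message) ...
        new_dist = list(dist)
        for u, v, w in edges:
            # ... and receives the message d_u + w_uv from each in-neighbour,
            # aggregated with min.
            message = dist[u] + w
            if message < new_dist[v]:
                new_dist[v] = message
        if new_dist == dist:
            break  # Converged early: no node feature changed.
        dist = new_dist
    return dist


# Hypothetical toy graph: 0 -> 1 (4), 0 -> 2 (1), 2 -> 1 (2), 1 -> 3 (5).
edges = [(0, 1, 4.0), (0, 2, 1.0), (2, 1, 2.0), (1, 3, 5.0)]
print(bellman_ford_message_passing(4, edges, source=0))
```

Here the update for each node is "min over incoming messages", which has the same shape as a GNN layer with a message function and a permutation-invariant aggregator; a learned model would replace the hand-written message and `min` with trainable components.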

Trend #1: Geometry becomes increasingly important in ML. Quotes from Melanie Weber (@UniofOxford), @pimdehaan (@UvA_Amsterdam), @Francesco_dgv (@Twitter) and Aasa Feragen (@uni_copenhagen).

(1) "Neural Algorithmic Reasoners are Implicit Planners" (Spotlight); with @andreeadeac22, Ognjen Milinković, @pierrelux, @tangjianpku & Mladen Nikolić.

https://twitter.com/DeepMind/status/1466080533050535940

It’s hard to overstate how happy I am to finally see this come together, after years of careful progress towards our aim -- demonstrating that AI can be the mathematician’s 'pocket calculator of the 21st century'.

https://twitter.com/andreeadeac22/status/1456636063271821314

You might have seen XLVIN before -- we'd advertised it a few times, and it also featured at great length in my recent talks.

https://twitter.com/PetarV_93/status/1321114783249272832

For large-scale transductive node classification (MAG240M), we found it beneficial to treat subsampled patches bidirectionally, and go deeper than their diameter. Further, self-supervised learning becomes important at this scale. BGRL allowed training 10x longer w/o overfitting.

We study a very common representation learning setting where we know *something* about our task's generative process. e.g. agents must obey some laws of physics, or a video game console manipulates certain RAM slots. However...

We have investigated the essence of popular deep learning architectures (CNNs, GNNs, Transformers, LSTMs) and realised that, assuming a proper set of symmetries we would like to stay resistant to, they can all be expressed using a common geometric blueprint.

https://twitter.com/PetarV_93/status/1385932158599114752

During the early stages of my PhD, one problem would often arise: I would come up with ideas that simply weren't the right kind of idea for the kind of hardware/software/expertise setup I had in my department. 2/15

https://twitter.com/cHHillee/status/1323323061370724352

Firstly, I'd like to note that, in my opinion, this is a very strong and important work for representation learning on graphs. It provides us with so many lightweight baselines that often perform amazingly well -- on that, I strongly congratulate the authors! 2/14

For blogs, I'd recommend:
