How to Get a URL Link on the X (Twitter) App

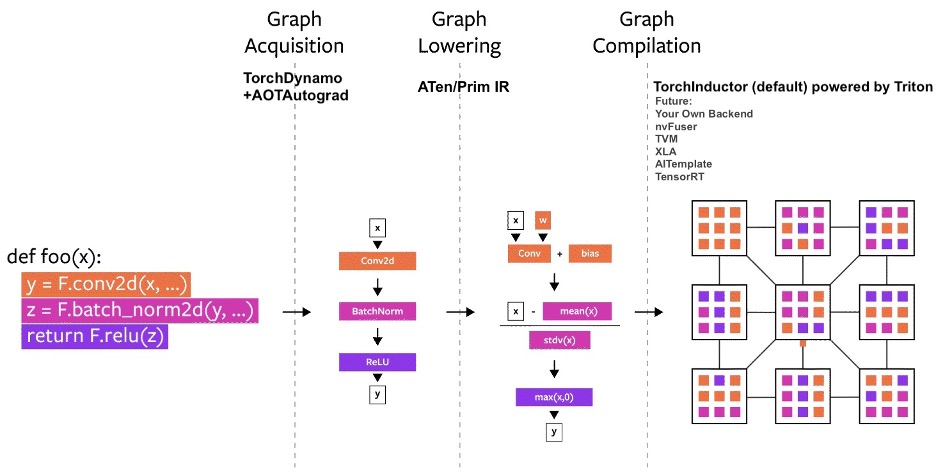

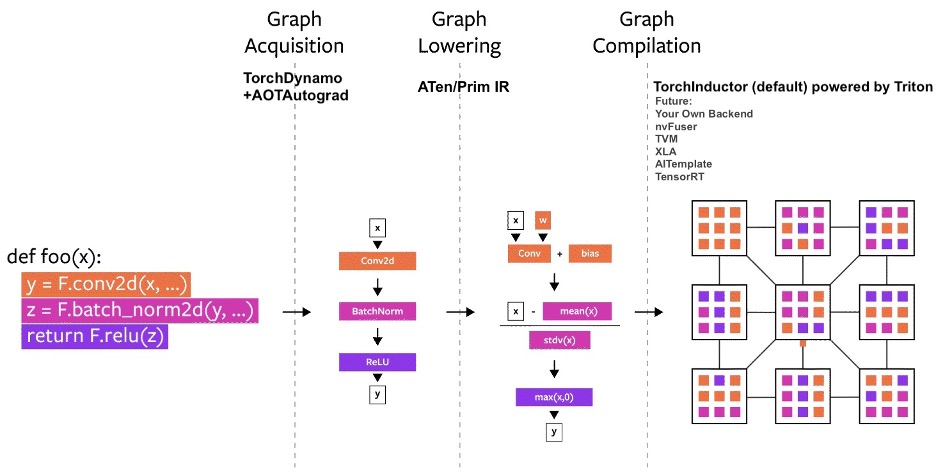

PyTorch 2.0 introduces torch.compile, a compiled mode that accelerates your model without needing to change your model code. On 163 open-source models ranging across vision, NLP, and others, we found that using 2.0 speeds up training by 38-76%.
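
As a rough illustration of what "without needing to change your model code" can look like, here is a minimal sketch. The `TinyNet` model, its layer sizes, and the `run_compiled` helper are hypothetical names made up for this example; the only PyTorch 2.0 feature used is `torch.compile`. No speedup is measured here, and results will depend on your hardware and model.

```python
import torch
import torch.nn as nn


class TinyNet(nn.Module):
    """Hypothetical toy model; the class body is ordinary PyTorch code."""

    def __init__(self):
        super().__init__()
        self.layers = nn.Sequential(
            nn.Linear(16, 32),
            nn.ReLU(),
            nn.Linear(32, 4),
        )

    def forward(self, x):
        return self.layers(x)


torch.manual_seed(0)
model = TinyNet()

# Wrapping the model is the only change; TinyNet itself is untouched.
# torch.compile returns a wrapper module that shares the original parameters.
compiled_model = torch.compile(model)


def run_compiled(x):
    """Hypothetical helper: the first call triggers compilation, so it is slower."""
    return compiled_model(x)


x = torch.randn(8, 16)
eager_out = model(x)  # regular (eager) forward pass, shape (8, 4)
```

Calling `run_compiled(x)` should return the same values as `model(x)` up to small numerical differences, assuming a working compiler backend is available on your machine.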

From the PyTorch Datasets & DataLoaders tutorial: code for processing data samples needs to be organized. Dataset code should be decoupled from model training code for better readability and modularity. Learn in 5 steps.
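
The decoupling described above can be sketched roughly as follows. This is not the tutorial's own code: `SquaresDataset` and its synthetic data are hypothetical, chosen only to show a `Dataset` subclass handling the samples while a `DataLoader` handles batching separately.

```python
import torch
from torch.utils.data import Dataset, DataLoader


class SquaresDataset(Dataset):
    """Hypothetical dataset: each sample is (x, x squared)."""

    def __init__(self, n):
        # Inputs 0..n-1 as a column of floats, targets are their squares.
        self.x = torch.arange(n, dtype=torch.float32).unsqueeze(1)
        self.y = self.x ** 2

    def __len__(self):
        return len(self.x)

    def __getitem__(self, idx):
        return self.x[idx], self.y[idx]


dataset = SquaresDataset(10)

# Batching lives in the DataLoader, not in the dataset or the training code.
loader = DataLoader(dataset, batch_size=4, shuffle=False)

# A training loop would iterate the loader like this; here we only record shapes.
batch_shapes = [tuple(xb.shape) for xb, yb in loader]
```

With 10 samples and a batch size of 4, the loader yields two full batches and one final batch of 2. Swapping in a different dataset class would leave the loop over `loader` unchanged.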
