Research institute focused on developing forecasting methods to improve decision-making on high-stakes issues, co-founded by chief scientist Philip Tetlock.

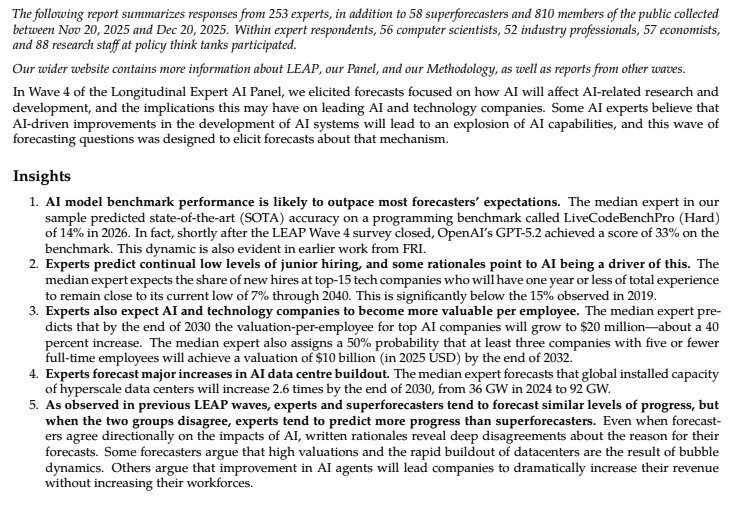

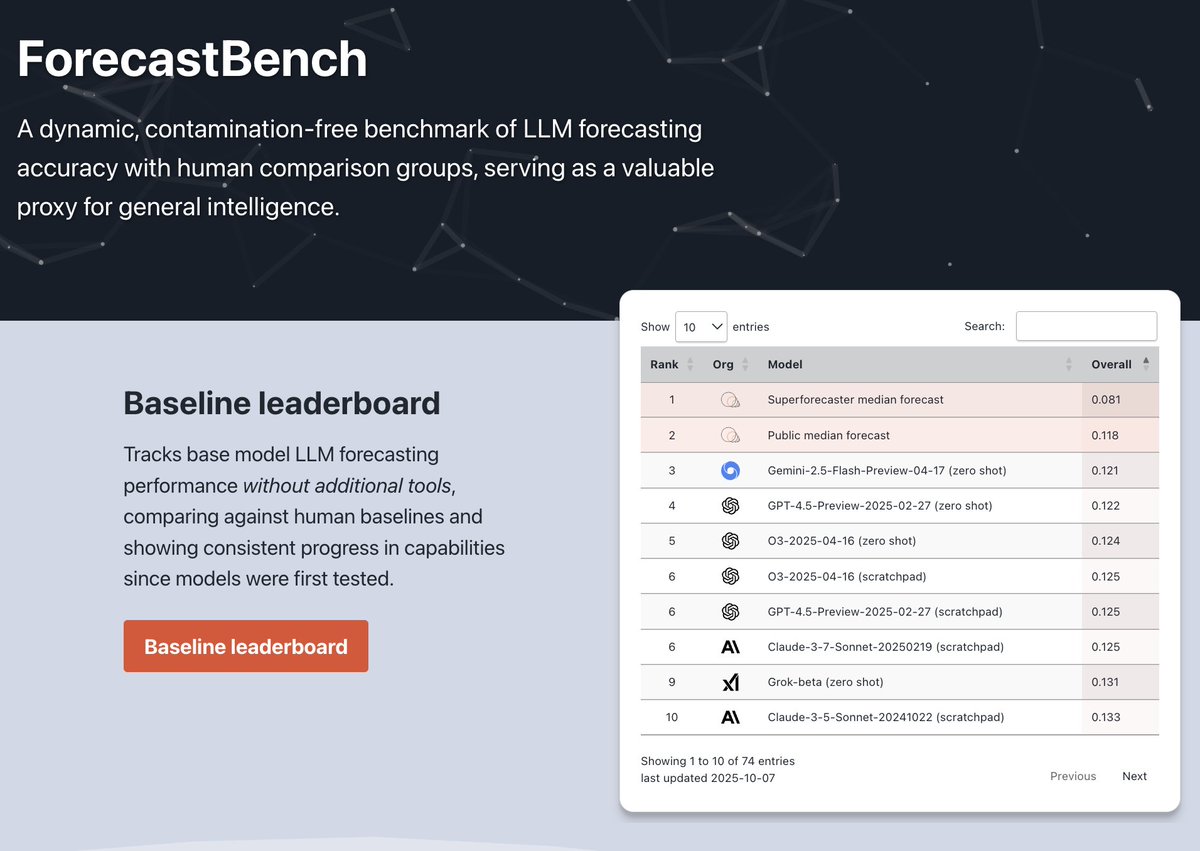

📈 AI benchmark progress is advancing faster than experts expect

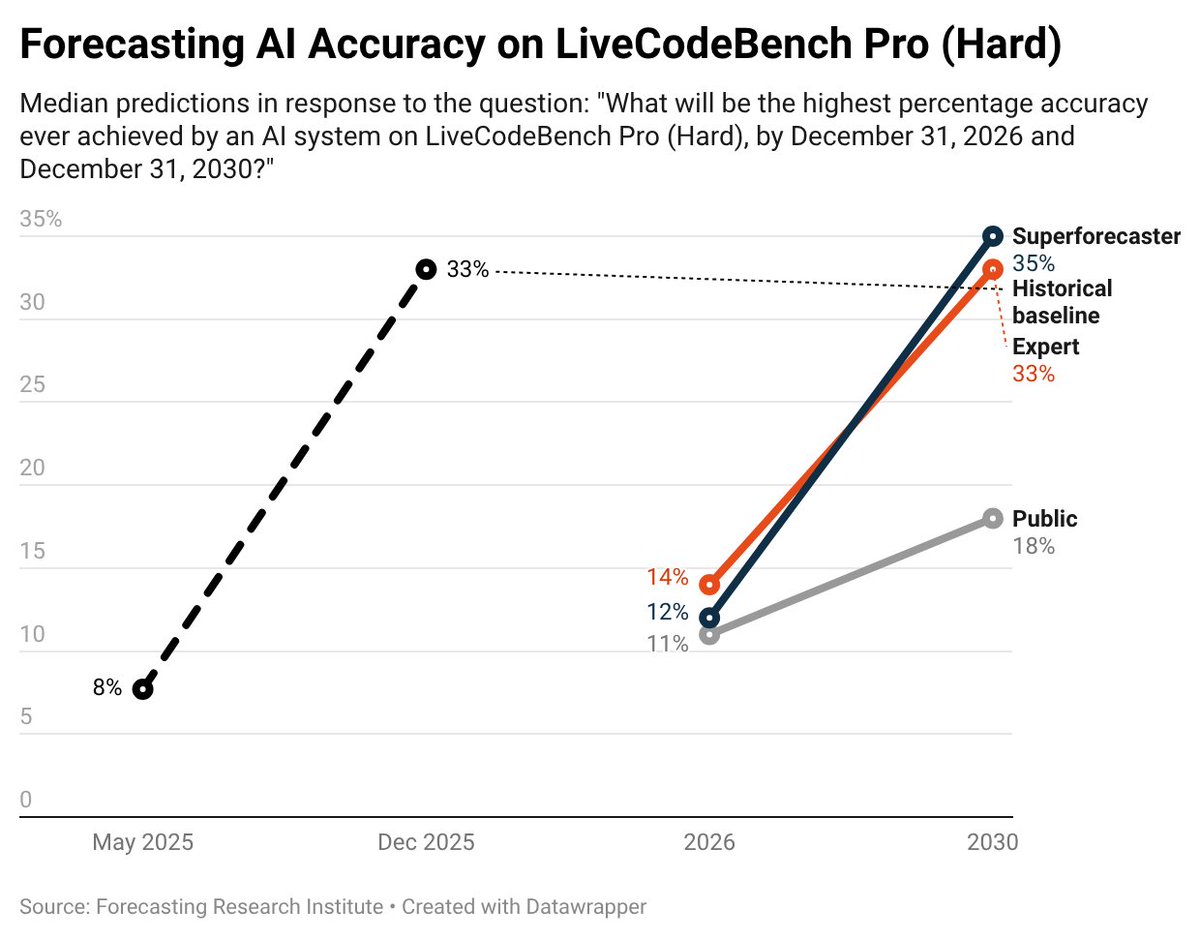

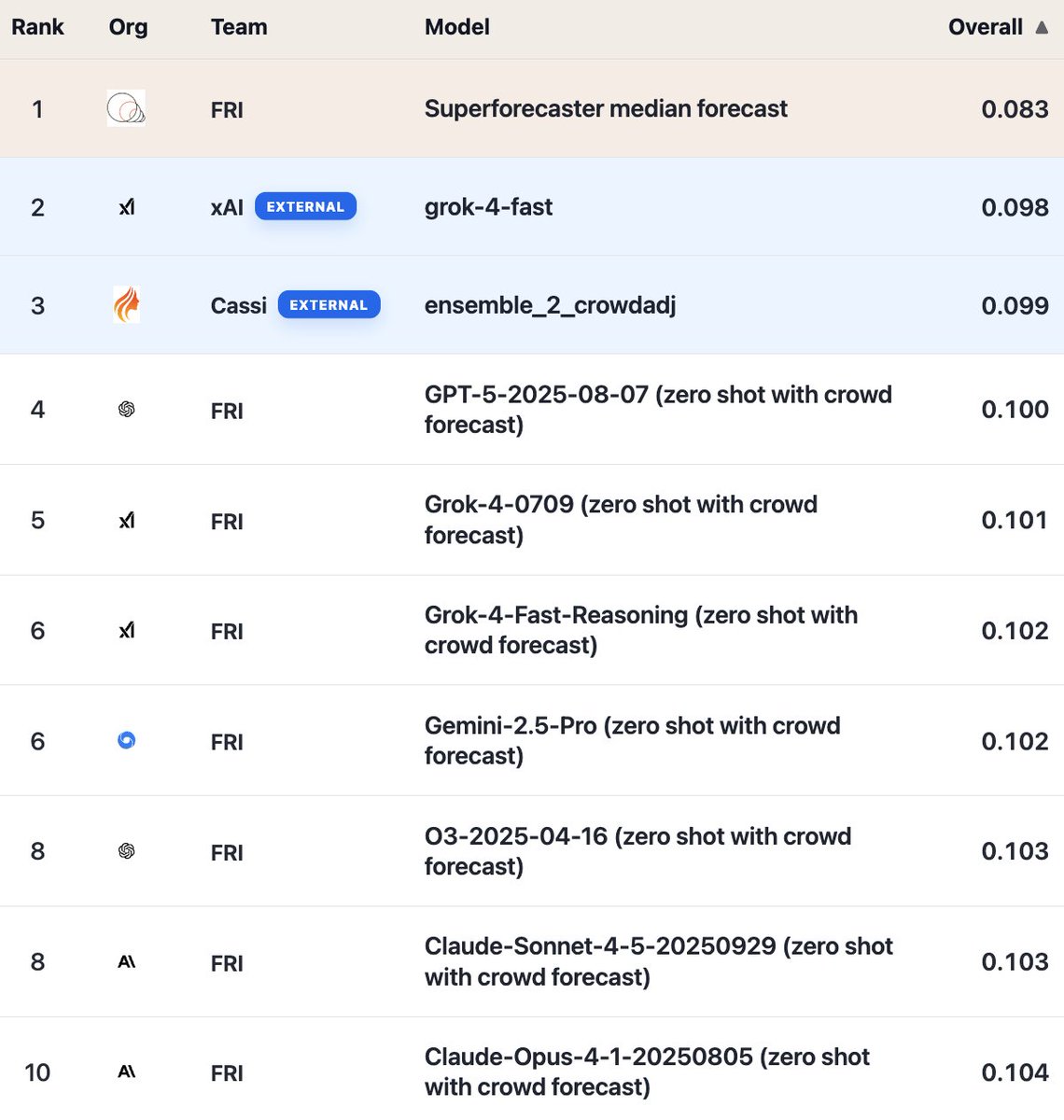

In October, we opened up ForecastBench’s tournament leaderboard to external submissions. Teams are free to use any tools they choose.

Our LEAP panel is made up of the following experts:

Why LLM forecasting accuracy is a useful benchmark:

Respondents—especially superforecasters—underestimated AI progress.
