How to get URL link on X (Twitter) App

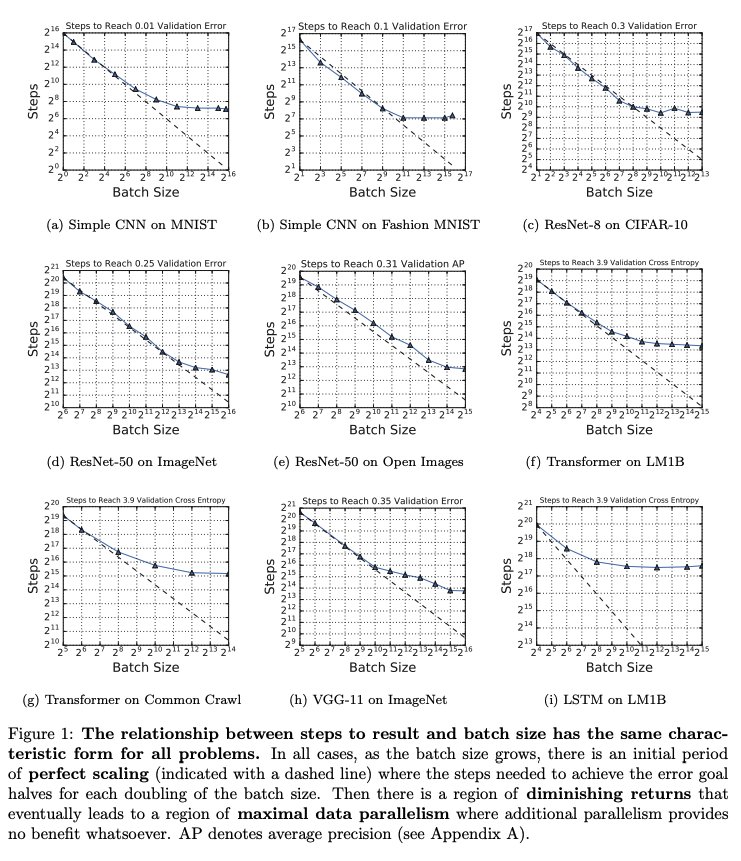

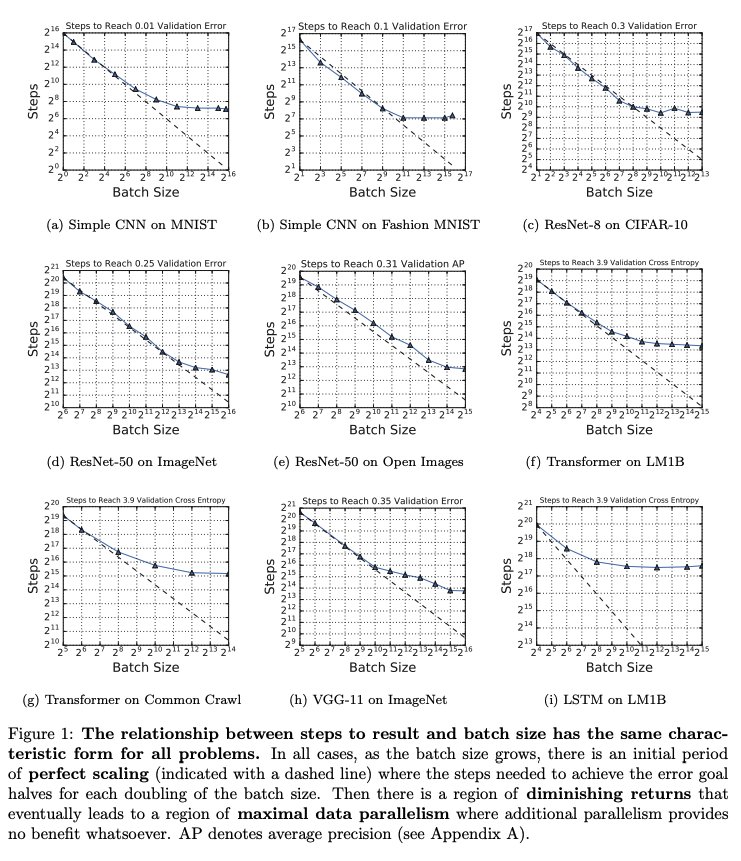

The paper is a good example of many elements of good experimental design. The authors validate their metric by showing that many variants of it give consistent results. They tune hyperparameters separately for each condition, check that the optimum isn't at the endpoints of the search range, and measure sensitivity.
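As a rough illustration of that tuning checklist (not the paper's actual code), here is a minimal Python sketch. The condition names, the toy loss functions, and the learning-rate grid are all hypothetical; the point is only the pattern: sweep each condition on its own, confirm the best value is interior to the grid, and record how much the loss degrades one grid step away from the optimum.

```python
import math

def sweep(loss_fn, grid):
    """Evaluate loss_fn at every grid value; return (index of best value, all losses)."""
    losses = [loss_fn(x) for x in grid]
    best = min(range(len(grid)), key=losses.__getitem__)
    return best, losses

def optimum_is_interior(best, grid):
    """True if the best value is not the first or last grid point."""
    return 0 < best < len(grid) - 1

def sensitivity(best, losses):
    """Largest loss increase when moving one grid step away from the optimum."""
    neighbors = [losses[i] for i in (best - 1, best + 1) if 0 <= i < len(losses)]
    return max(n - losses[best] for n in neighbors)

# Hypothetical conditions: toy quadratic losses in log10(learning rate),
# each with a different optimum, standing in for real training runs.
conditions = {
    "small_batch": lambda lr: (math.log10(lr) + 3) ** 2,
    "large_batch": lambda lr: (math.log10(lr) + 2) ** 2,
}
grid = [1e-5, 1e-4, 1e-3, 1e-2, 1e-1]  # assumed search range

results = {}
for name, loss_fn in conditions.items():
    # Tune separately per condition rather than reusing one shared setting.
    best, losses = sweep(loss_fn, grid)
    results[name] = {
        "best_lr": grid[best],
        "interior": optimum_is_interior(best, grid),
        "sensitivity": sensitivity(best, losses),
    }

for name, r in results.items():
    print(name, r)
```

If `interior` comes back false for any condition, the grid probably needs to be widened before the comparison between conditions can be trusted.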
